The document discusses various optimization algorithms, focusing on gradient descent and its stochastic variant, stochastic gradient descent (SGD), especially in the context of big data applications. It explains the differences between batch gradient descent, SGD, and mini-batch gradient descent, highlighting trade-offs in accuracy and speed, as well as convergence behaviors for optimizing objective functions. Additionally, it explores advanced techniques for improving training effectiveness, such as momentum, Nesterov accelerated gradient, Adagrad, and Adam, each addressing specific challenges associated with gradient-based optimization.

![other hand, using SGD will be faster because you use

only one training sample and it starts improving itself

right away from the first sample.

SGD often converges much faster compared to GD

but the error function is not as well minimized as in

the case of GD. Often in most cases, the close

approximation that you get in SGD for the parameter

values are enough because they reach the optimal

values and keep oscillating there.

There are several different flavors of SGD, which can

be all seen throughout literature. Let's take a look at

the three most common variants:

A)

• randomly shuffle samples in the training set

• for one or more epochs, or until approx. cost

minimum is reached

• for training sample i

• compute gradients and perform weight updates

B)

• for one or more epochs, or until approx. cost

minimum is reached

• randomly shuffle samples in the training set

• for training sample i

• compute gradients and perform weight updates

C)

• for iterations t, or until approx. cost minimum is

reached:

• draw random sample from the training set

• compute gradients and perform weight updates

In scenario A , we shuffle the training set only one

time in the beginning; whereas in scenario B, we

shuffle the training set after each epoch to prevent

repeating update cycles. In both scenario A and

scenario B, each training sample is only used once per

epoch to update the model weights.

In scenario C, we draw the training samples randomly

with replacement from the training set. If the number

of iterations t is equal to the number of training

samples, we learn the model based on a bootstrap

sample of the training set.

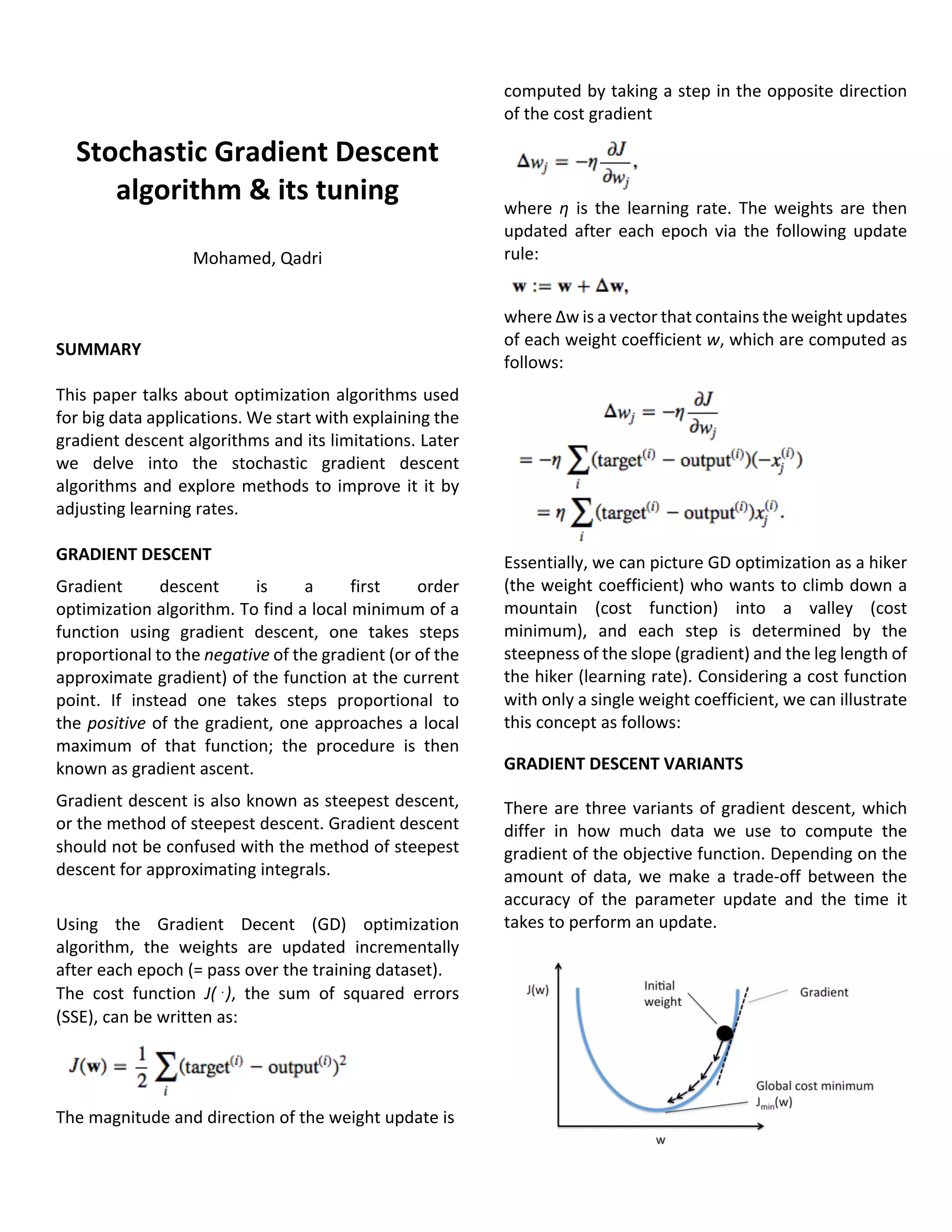

In the Gradient Descent method, one computes the

direction that decreases the objective function the

most in the case of minimization problems. But

sometimes this cant be quite costly. In most Machine

Learning for example, the objective function is often

the cumulative sum of the error over the training

examples. But the size of the training examples set

might be very large and hence computing the actual

gradient would be computationally expensive.

In Stochastic Gradient (Descent) method, we

compute an estimate or approximation to this

direction. The most simple way is to just look at one

training example (or subset of training examples) and

compute the direction to move only on this

approximation. It is called as Stochastic because the

approximate direction that is computed at every step

can be thought of a random variable of a stochastic

process. This is mainly used in showing the

convergence of this algorithm.

Recent theoretical results, however, show that the

runtime to get some desired optimization accuracy

does not increase as the training set size increases.

Stochastic Gradient Descent is sensitive to feature

scaling, so it is highly recommended to scale your

data. For example, scale each attribute on the input

vector X to [0,1] or [-1,+1], or standardize it to have

mean 0 and variance 1. Note that the same scaling

must be applied to the test vector to obtain

meaningful results.

Empirically, we found that SGD converges after

observing approx. 10^6 training samples. Thus, a

reasonable first guess for the number of iterations

is n_iter = np.ceil(10**6 / n), where n is the size of the

training set.

If you apply SGD to features extracted using PCA we

found that it is often wise to scale the feature values

by some constant c such that the average L2 norm of

the training data equals one.

We found that Averaged SGD works best with a larger

number of features and a higher eta0

Here, the term "stochastic" comes from the fact that

the gradient based on a single training sample is a

"stochastic approximation" of the "true" cost](https://image.slidesharecdn.com/termpaperpdf-160410015631/75/Stochastic-gradient-descent-and-its-tuning-3-2048.jpg)

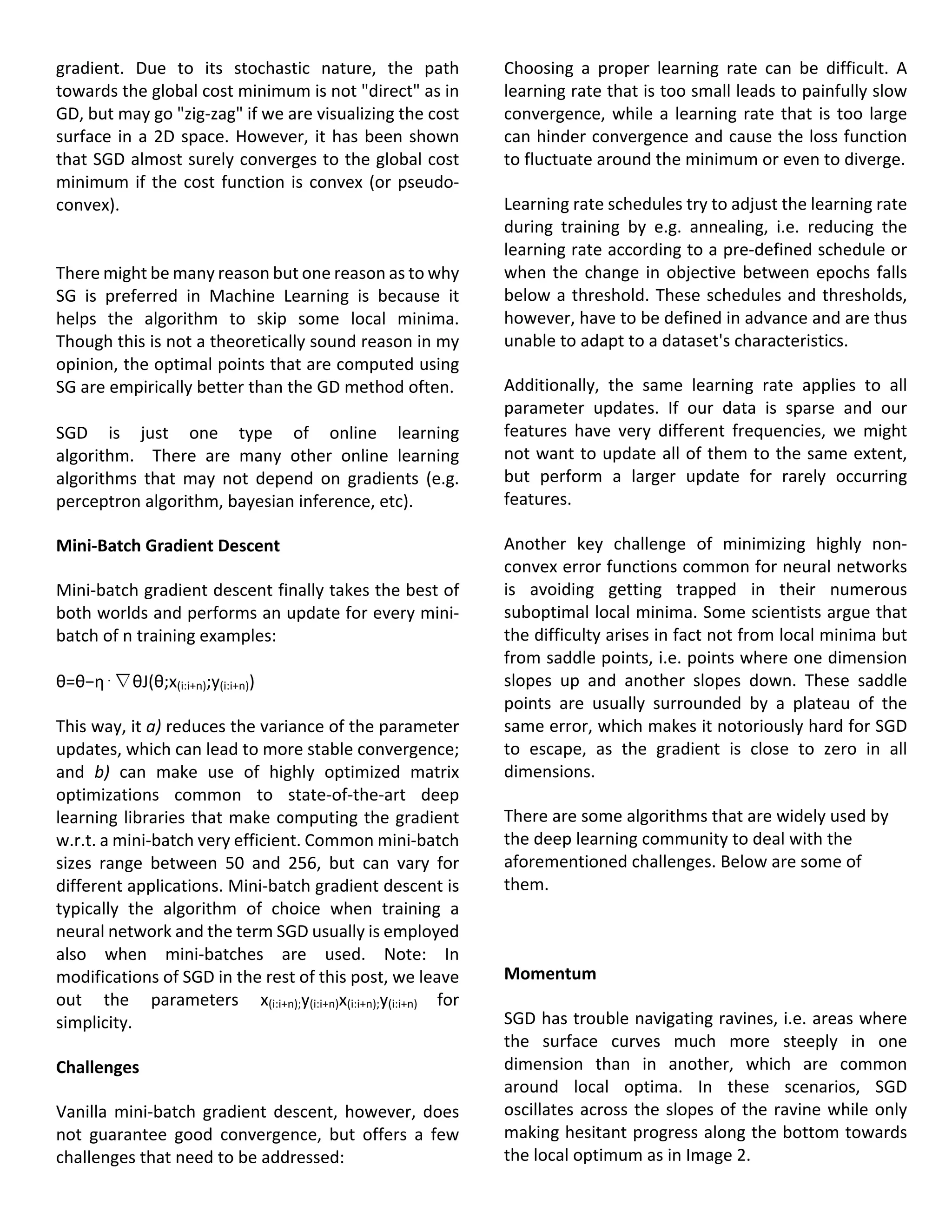

![learning rate to shrink and eventually become

infinitesimally small, at which point the algorithm is

no longer able to acquire additional knowledge. The

following algorithms aim to resolve this flaw.

Adadelta

Adadelta is an extension of Adagrad that seeks to

reduce its aggressive, monotonically decreasing

learning rate. Instead of accumulating all past

squared gradients, Adadelta restricts the window of

accumulated past gradients to some fixed size w.

Instead of inefficiently storing w previous squared

gradients, the sum of gradients is recursively defined

as a decaying average of all past squared gradients.

The running average E[g2

]t at time step t then

depends (as a fraction γ similarly to the Momentum

term) only on the previous average and the current

gradient.

RMSprop

RMSprop is an unpublished, adaptive learning rate

method proposed by Geoff Hinton

RMSprop and Adadelta have both been developed

independently around the same time stemming from

the need to resolve Adagrad's radically diminishing

learning rates. RMSprop in fact is identical to the first

update vector of Adadelta that we derived above:

RMSprop as well divides the learning rate by an

exponentially decaying average of squared gradients.

Hinton suggests γ to be set to 0.9, while a good

default value for the learning rate η is 0.001.

Adam

Adaptive Moment Estimation (Adam) is another

method that computes adaptive learning rates for

each parameter. In addition to storing an

exponentially decaying average of past squared

gradients vt like Adadelta and RMSprop, Adam also

keeps an exponentially decaying average of past

gradients mt, similar to momentum:

mt=β1mt−1+(1−β1)gt

vt=β2vt−1+(1−β2)g2t

mt and vt are estimates of the first moment (the

mean) and the second moment (the uncentered

variance) of the gradients respectively, hence the

name of the method. As mt and vt are initialized as

vectors of 0's, the authors of Adam observe that they

are biased towards zero, especially during the initial

time steps, and especially when the decay rates are

small (i.e. β1 and β2 are close to 1).

ADDITIONAL STRATEGIES FOR OPTIMIZING SGD

Finally, we introduce additional strategies that can be

used alongside any of the previously mentioned

algorithms to further improve the performance of

SGD.

Shuffling And Curriculum Learning

Generally, we want to avoid providing the training

examples in a meaningful order to our model as this

may bias the optimization algorithm. Consequently, it

is often a good idea to shuffle the training data after

every epoch.

On the other hand, for some cases where we aim to

solve progressively harder problems, supplying the

training examples in a meaningful order may actually

lead to improved performance and better

convergence. The method for establishing this

meaningful order is called Curriculum Learning.

Batch Normalization

To facilitate learning, we typically normalize the initial

values of our parameters by initializing them with

zero mean and unit variance. As training progresses

and we update parameters to different extents, we

lose this normalization, which slows down training

and amplifies changes as the network becomes

deeper.

Batch normalization reestablishes these

normalizations for every mini-batch and changes are

back-propagated through the operation as well. By

making normalization part of the model architecture,](https://image.slidesharecdn.com/termpaperpdf-160410015631/75/Stochastic-gradient-descent-and-its-tuning-6-2048.jpg)

![Conclusion

In conclusion we saw that to manage large scale

data we can use variants of gradient descent, batch

gradient descent, stochastic gradient descent (SGD),

and mini gradient descent in the order of

improvement over the previous one. Each of which

differs in how much data we use to compute the

gradient of the objective function. Given the

ubiquity of large-scale data solutions and the

availability of low-commodity clusters, distributing

SGD to speed it up further is an obvious choice. SGD

by itself is inherently sequential: Step-by-step, we

progress further towards the minimum. Running it

provides good convergence but can be slow

particularly on large datasets. In contrast, running

SGD asynchronously is faster, but suboptimal

communication between workers can lead to poor

convergence. Additionally, we can also parallelize

SGD on one machine without the need for a large

computing cluster.

Depending on the amount of data, we make a trade-

off between the accuracy of the parameter update

and the time it takes to perform an update. To

overcome this challenge of parameter update using

the learning rate we looked at various methods that

address this problem such as momentum, Nesterov

Accelerated gradient, Adagrad, Adadelta, RMSProp

and Adam. In summary, RMSprop is an extension of

Adagrad that deals with its radically diminishing

learning rates. It is identical to Adadelta, except that

Adadelta uses the RMS of parameter updates in the

numinator update rule. Adam, finally, adds bias-

correction and momentum to RMSprop. Insofar,

RMSprop, Adadelta, and Adam are very similar

algorithms that do well in similar circumstances. Its

bias-correction helps Adam slightly outperform

RMSprop towards the end of optimization as

gradients become sparser. Insofar, Adam might be

the best overall choice.

Interestingly, many recent papers use vanilla SGD

without momentum and a simple learning rate

annealing schedule. As has been shown, SGD usually

achieves to find a minimum, but it might take

significantly longer than with some of the

optimizers, is much more reliant on a robust

initialization and annealing schedule, and may get

stuck in saddle points rather than local minima.

Consequently, if you care about fast convergence

and train a deep or complex neural network, you

should choose one of the adaptive learning rate

methods.

References

[1]Bottou, Léon (1998). "Online Algorithms and

Stochastic Approximations".

[2]Bottou, Léon. "Large-scale machine learning

with SGD."

[3]Bottou, Léon. "SGD tricks." Neural Networks:

Tricks of the Trade.

[4]https://www.quora.com/Whats-the-difference-

between-gradient-descent-and-stochastic-gradient-

descent

[5]http://ufldl.stanford.edu/tutorial/supervised/Op

timizationStochasticGradientDescent/

[6]http://scikit-learn.org/stable/modules/sgd.html

[7]http://sebastianruder.com/optimizing-gradient-

descent/](https://image.slidesharecdn.com/termpaperpdf-160410015631/75/Stochastic-gradient-descent-and-its-tuning-8-2048.jpg)