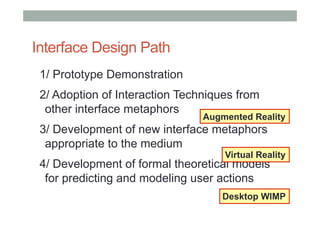

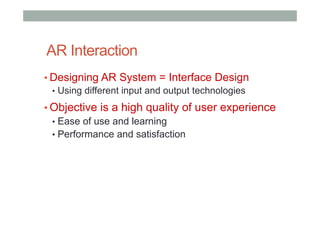

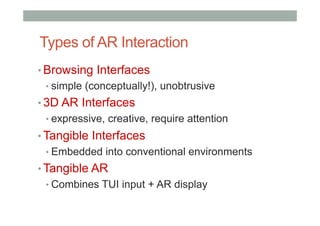

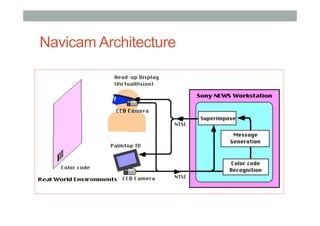

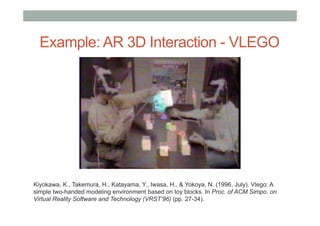

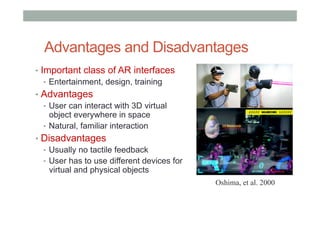

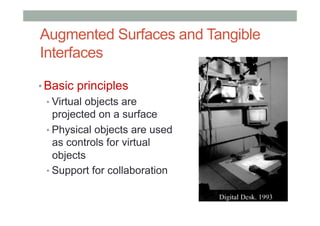

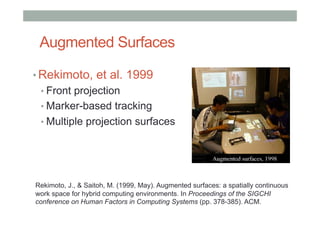

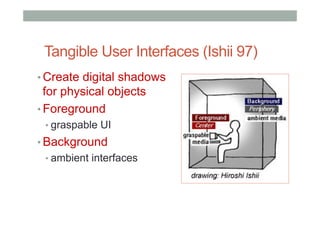

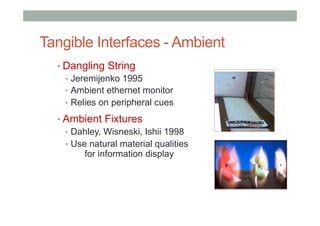

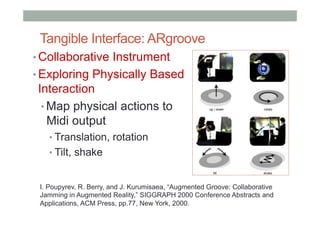

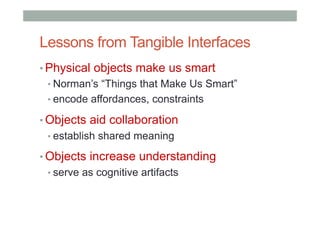

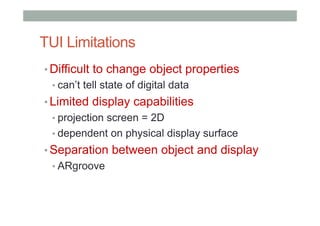

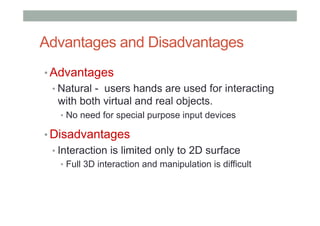

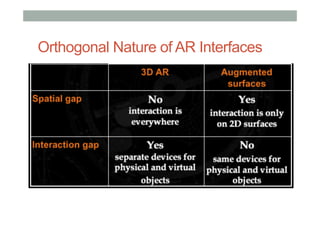

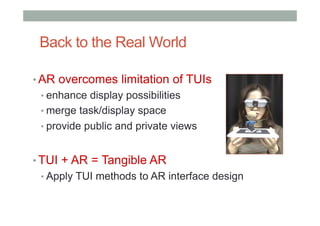

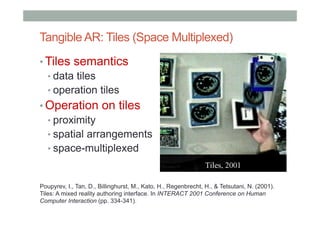

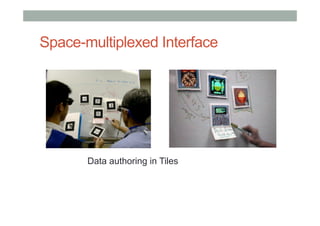

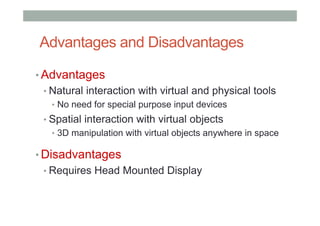

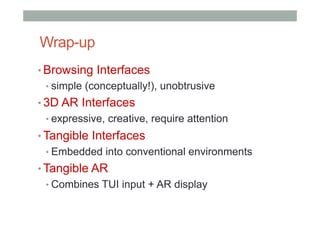

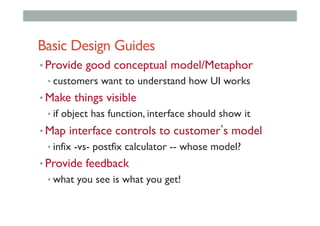

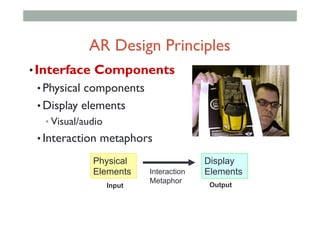

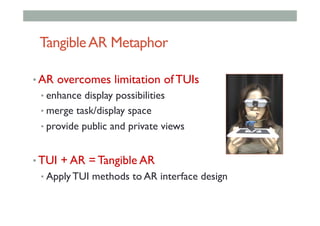

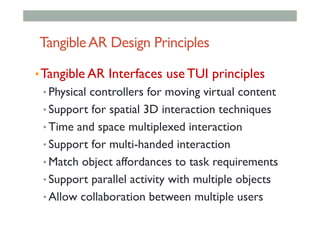

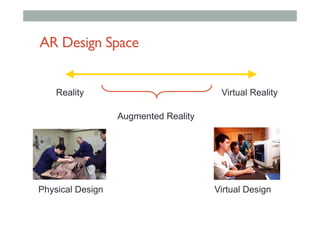

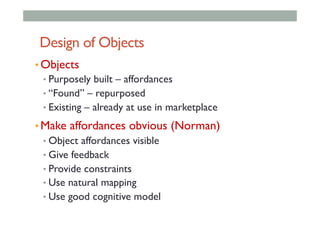

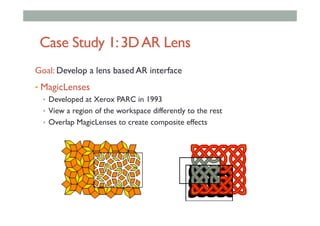

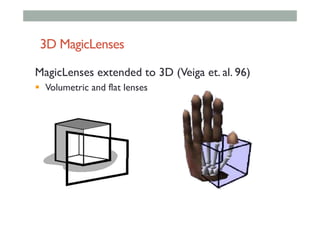

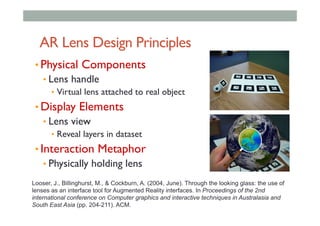

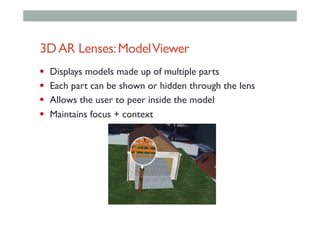

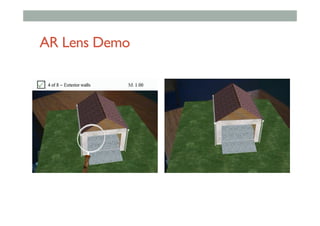

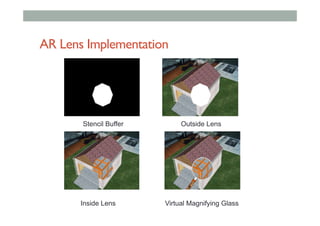

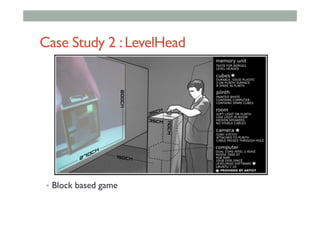

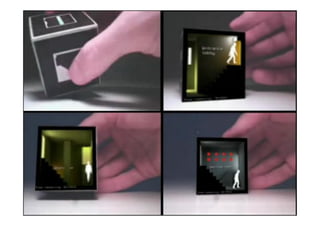

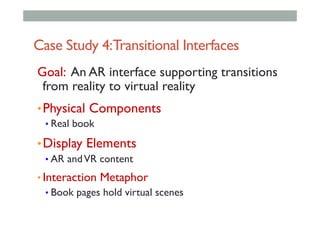

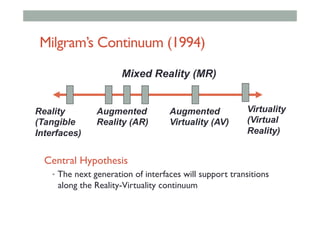

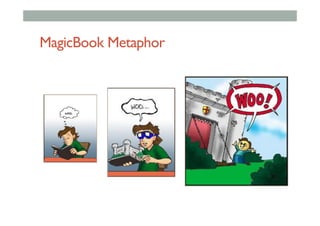

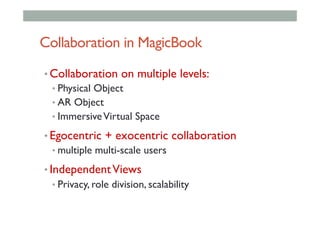

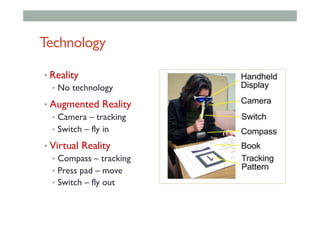

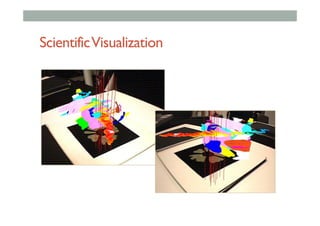

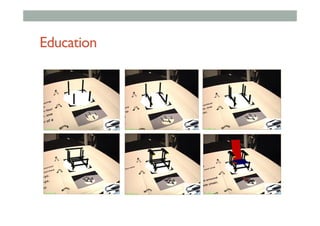

Lecture 11 focuses on augmented reality (AR), defining its characteristics and technologies, including real-time interaction and 3D registration. It discusses various types of AR interfaces, such as browsing interfaces, 3D interfaces, and tangible interfaces, and highlights their advantages and limitations. The lecture also emphasizes design principles for AR systems and explores case studies, illustrating practical applications and transitions between reality and virtuality.

![Augmented Reality Definition

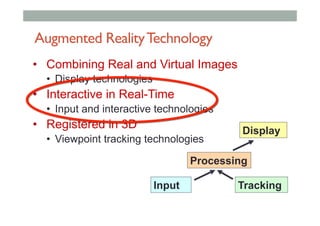

• Defining Characteristics [Azuma 97]

• Combines Real andVirtual Images

• Both can be seen at the same time

• Interactive in real-time

• The virtual content can be interacted with

• Registered in 3D

• Virtual objects appear fixed in space

Azuma, R. T. (1997). A survey of augmented reality. Presence, 6(4), 355-385.](https://image.slidesharecdn.com/lecture11-arinteractionnovideo2-161103080056/85/COMP-4010-Lecture11-AR-Interaction-2-320.jpg)
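The "registered in 3D" characteristic can be illustrated with a small sketch. This is not taken from the lecture: it assumes a simple pinhole camera that only translates and yaws, and the function name `project_point` and the intrinsics (`focal_px`, `cx`, `cy`) are hypothetical values chosen for illustration. The point is that the virtual object keeps the same world coordinates, and only its pixel position is recomputed as the camera pose changes.

```python
import math


def project_point(point_world, cam_pos, cam_yaw,
                  focal_px=800.0, cx=320.0, cy=240.0):
    """Project a world-fixed 3D point into pixel coordinates.

    Assumptions (illustrative only): pinhole camera, looking along +z in
    its own frame, rotated by cam_yaw radians about the world y axis,
    with a 640x480 image whose principal point is (cx, cy).
    Returns None when the point is behind the camera.
    """
    # Express the point relative to the camera position.
    dx = point_world[0] - cam_pos[0]
    dy = point_world[1] - cam_pos[1]
    dz = point_world[2] - cam_pos[2]

    # Rotate from world axes into camera axes (inverse of the yaw rotation).
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    x_cam = c * dx - s * dz
    y_cam = dy
    z_cam = s * dx + c * dz

    if z_cam <= 0:
        return None  # behind the camera, nothing to draw

    # Perspective divide; image v grows downward, world y grows upward.
    u = cx + focal_px * x_cam / z_cam
    v = cy - focal_px * y_cam / z_cam
    return (u, v)


# The virtual object never moves in world space.
virtual_object = (0.0, 0.0, 2.0)

# Only the camera pose changes from frame to frame.
for cam_pos in [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)]:
    print(cam_pos, project_point(virtual_object, cam_pos, cam_yaw=0.0))
```

With the camera at the origin the object projects to the image centre; after the camera moves 0.5 units to the right, the same world point is drawn further left in the image, which is what makes it appear fixed in space. A real AR system would obtain the camera pose from tracking rather than from hard-coded values.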