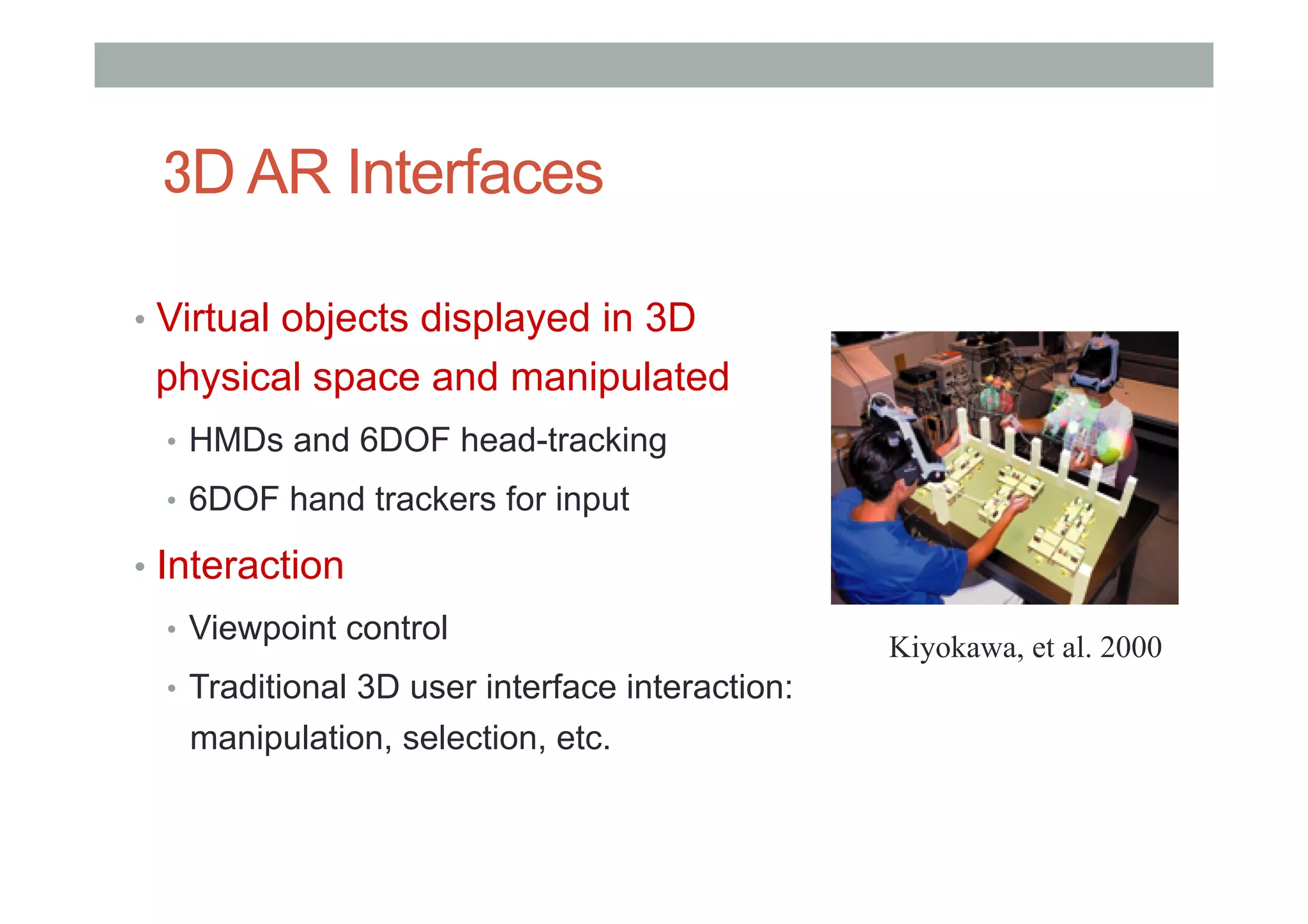

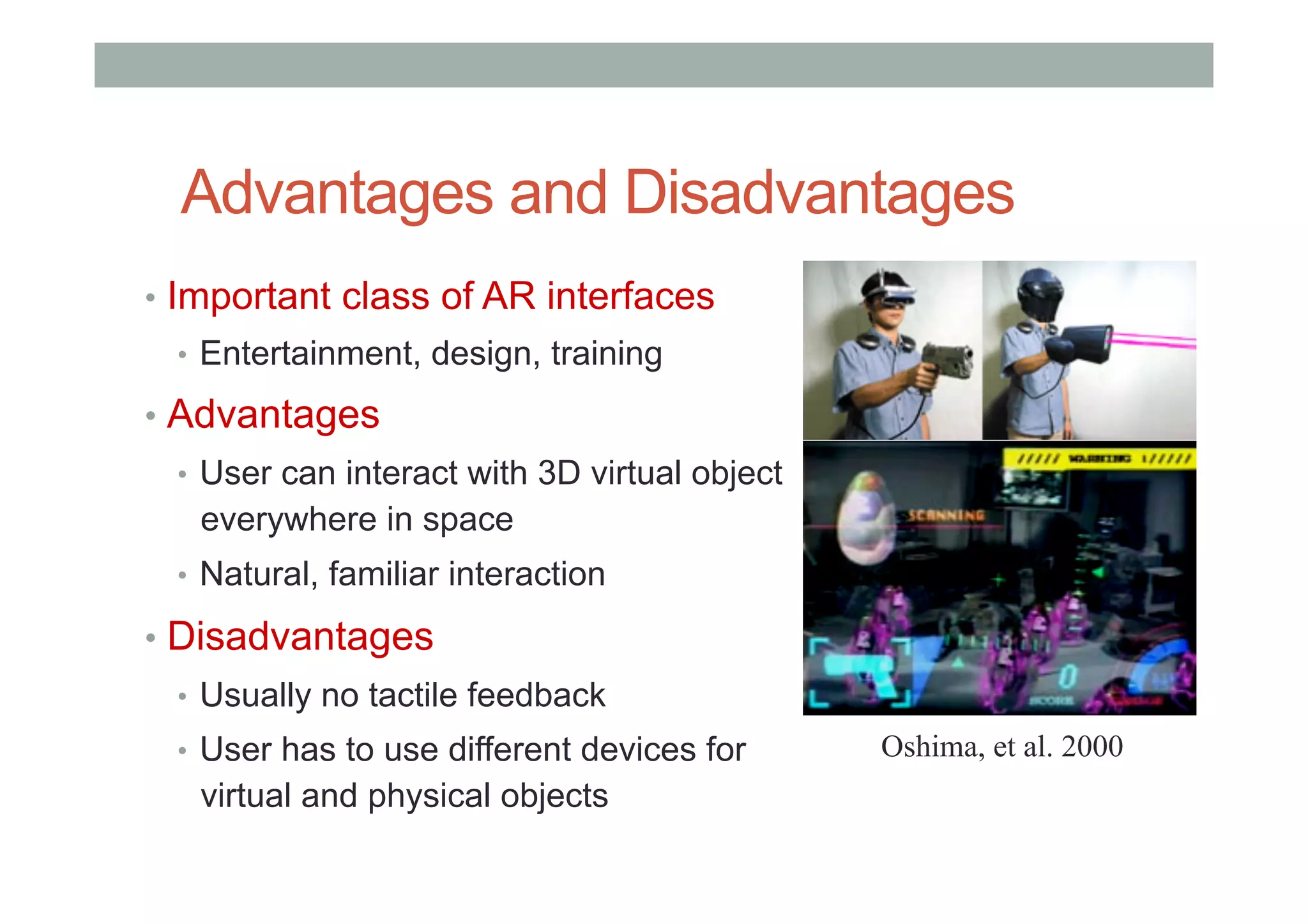

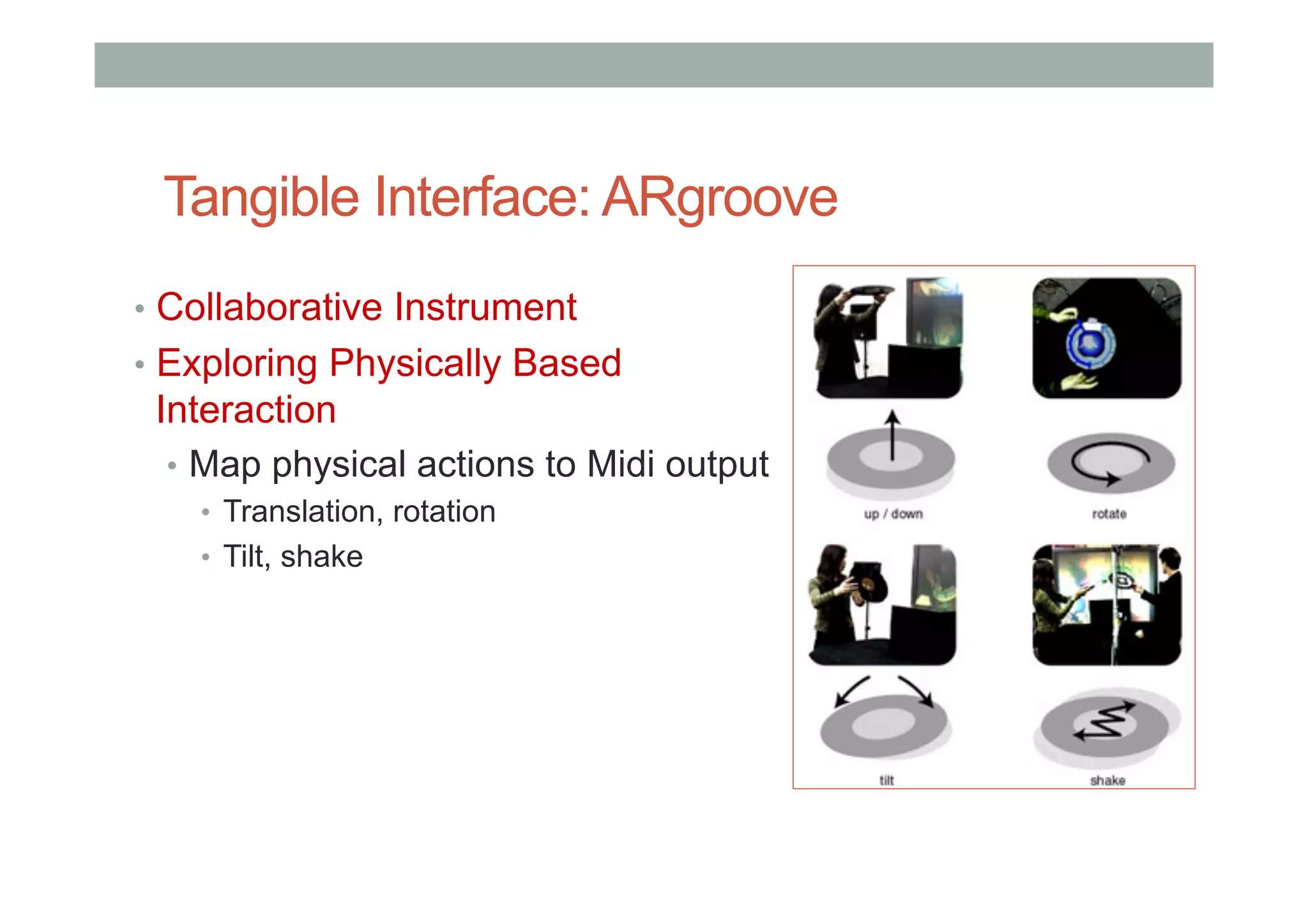

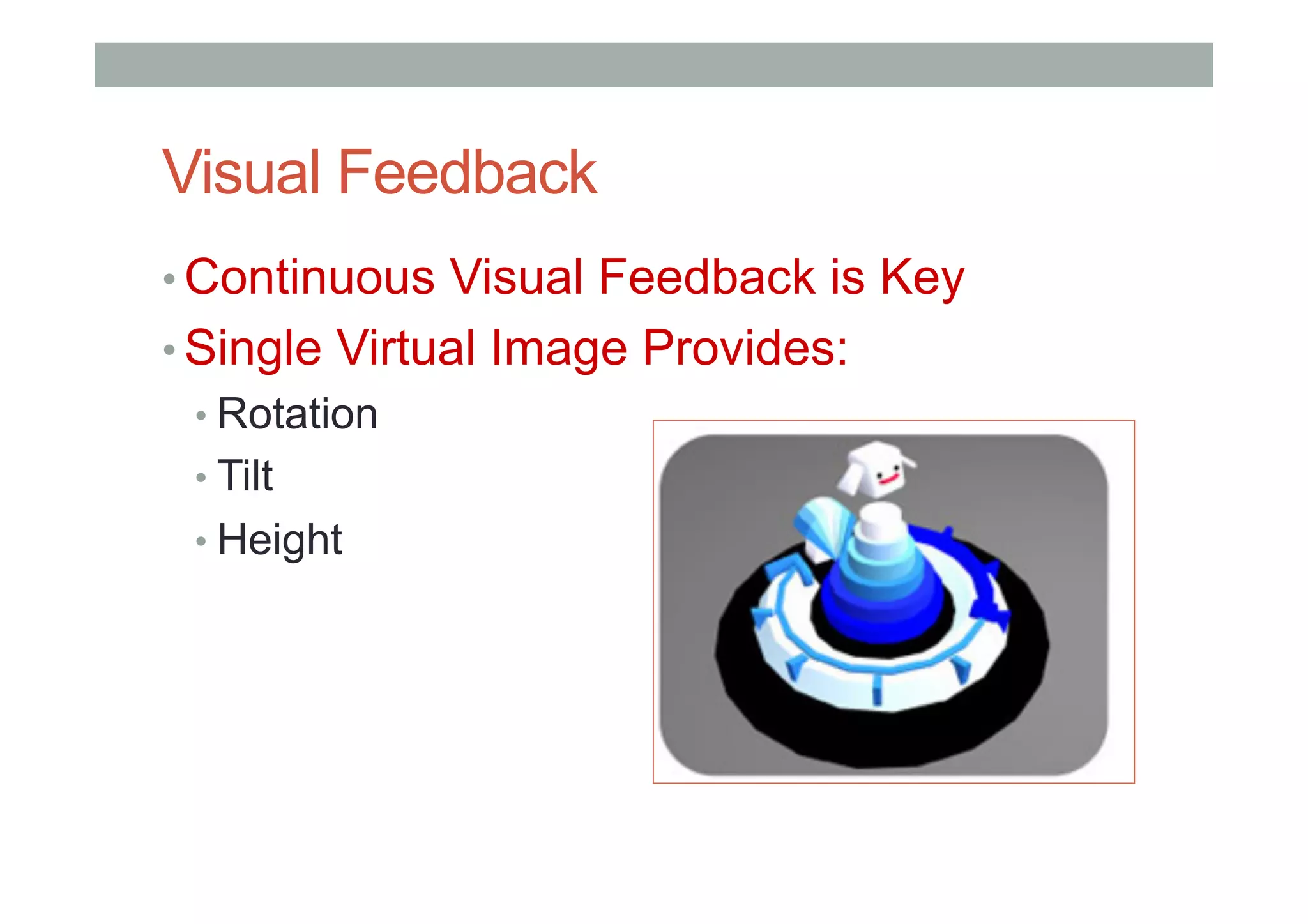

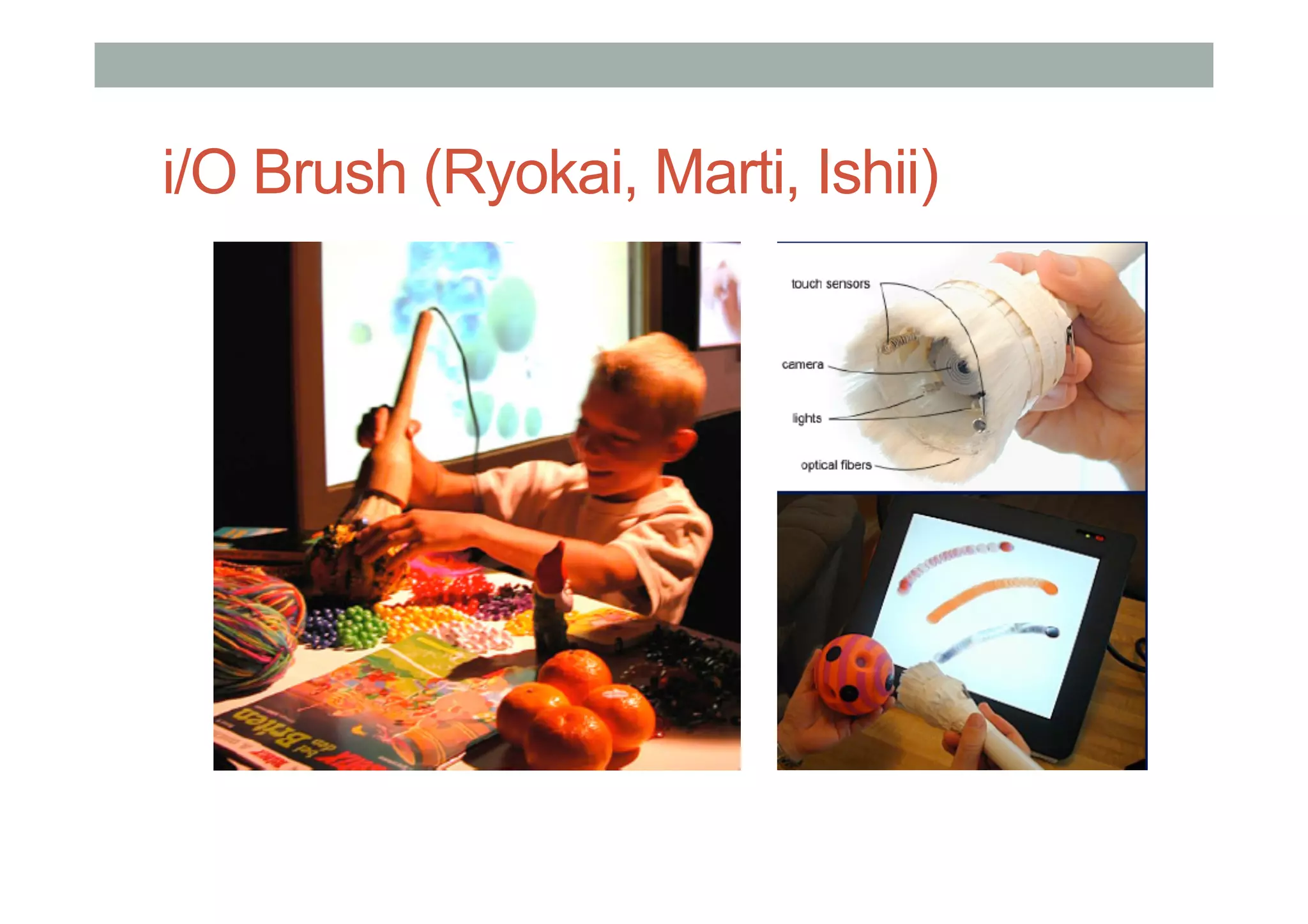

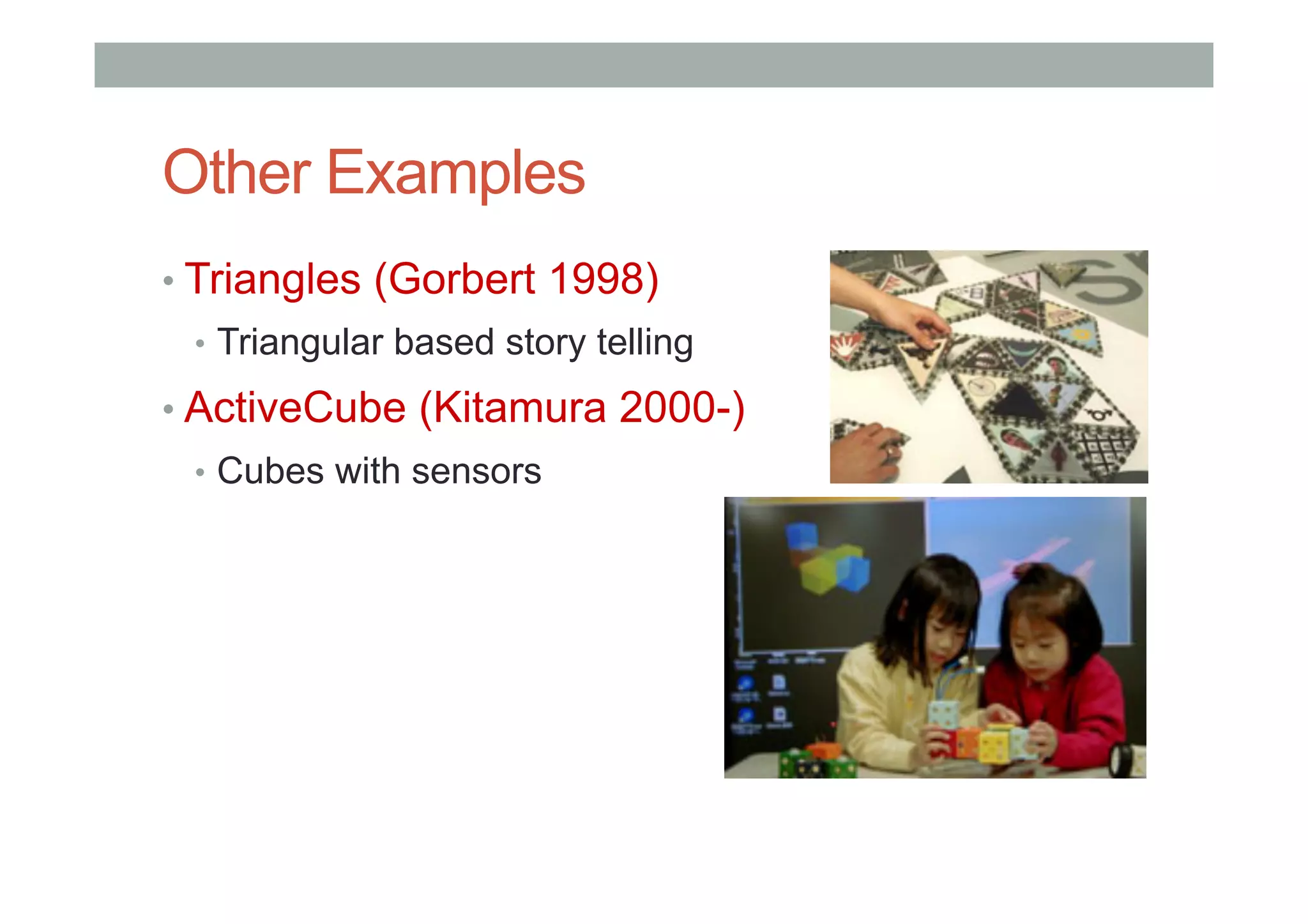

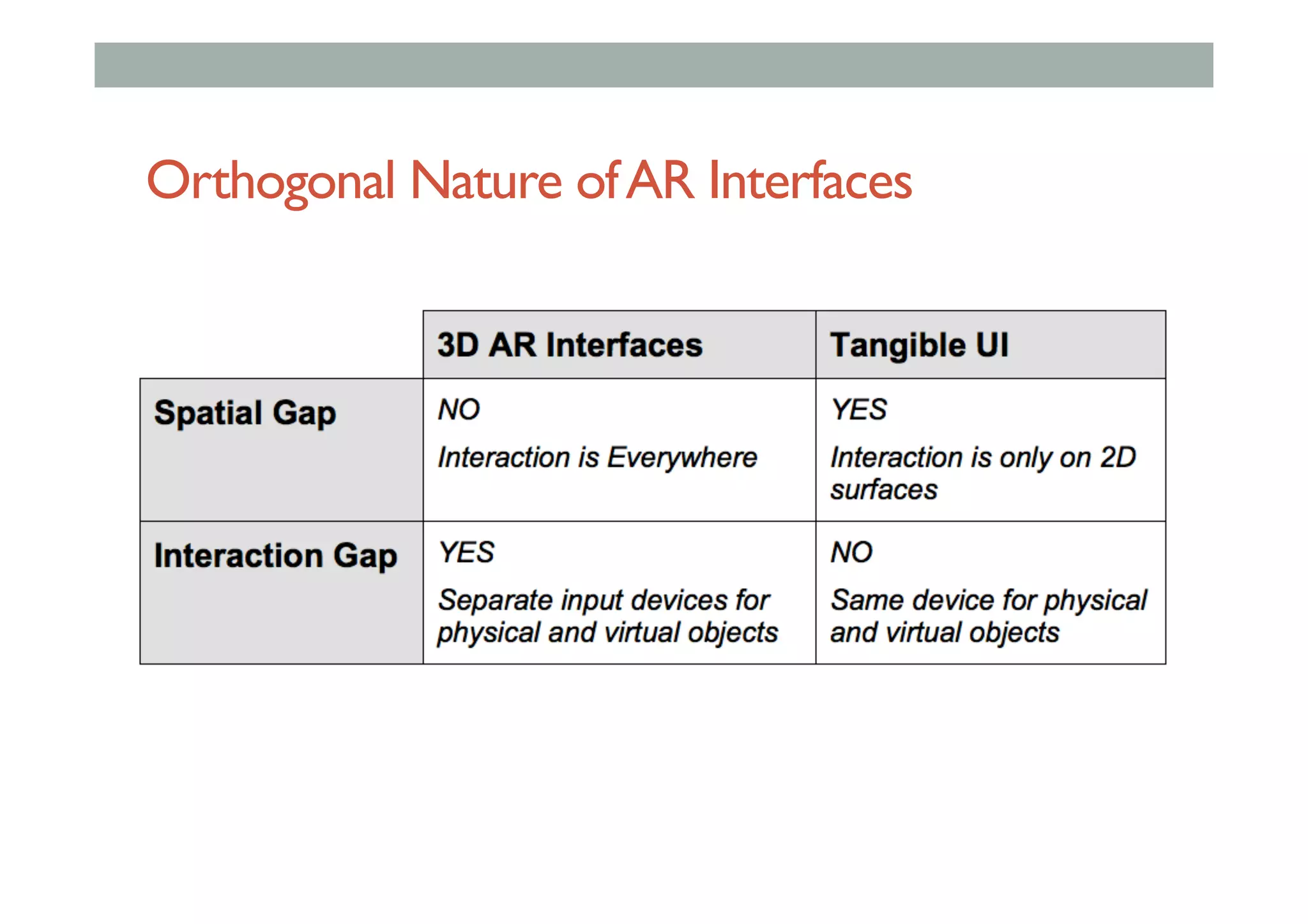

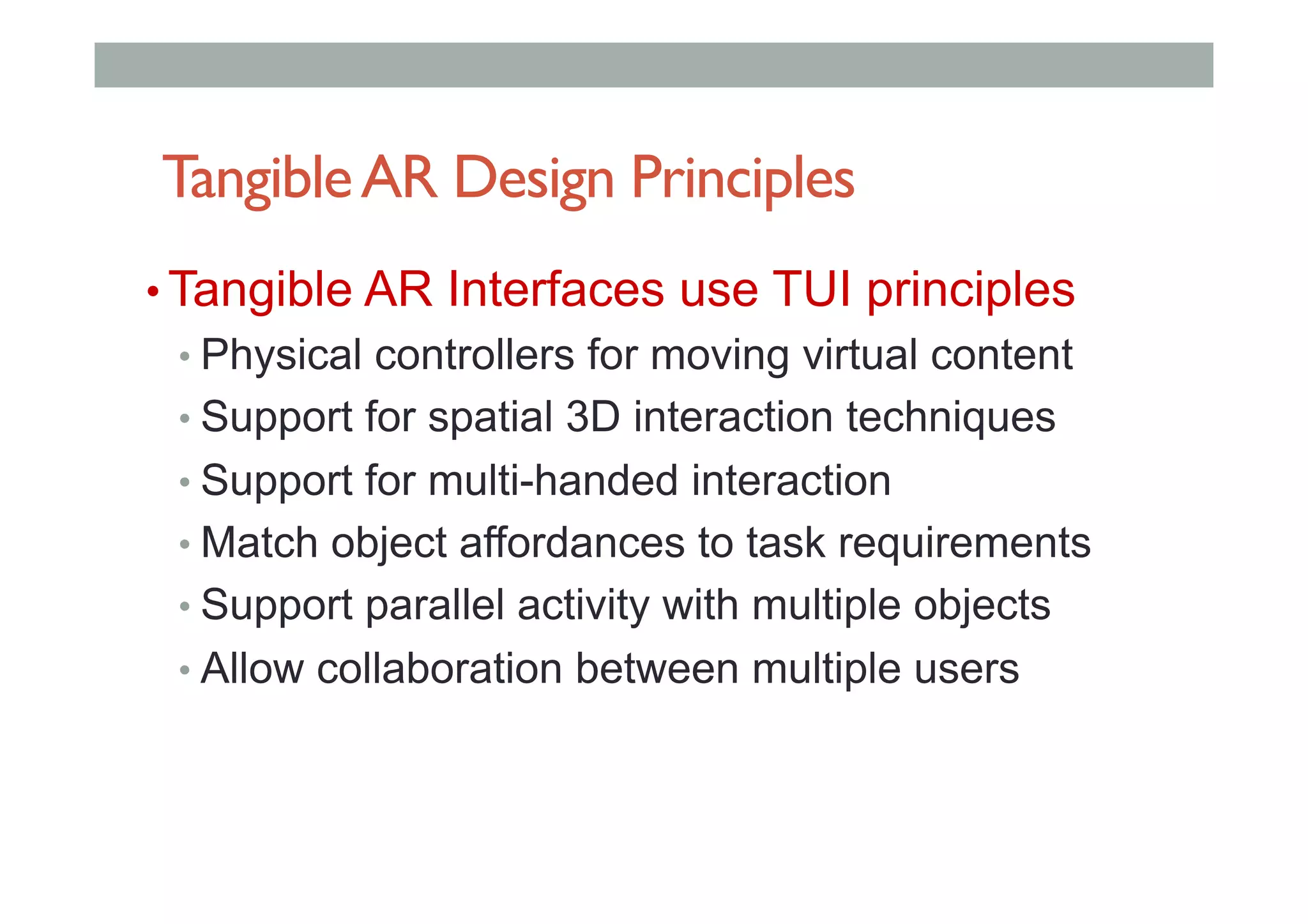

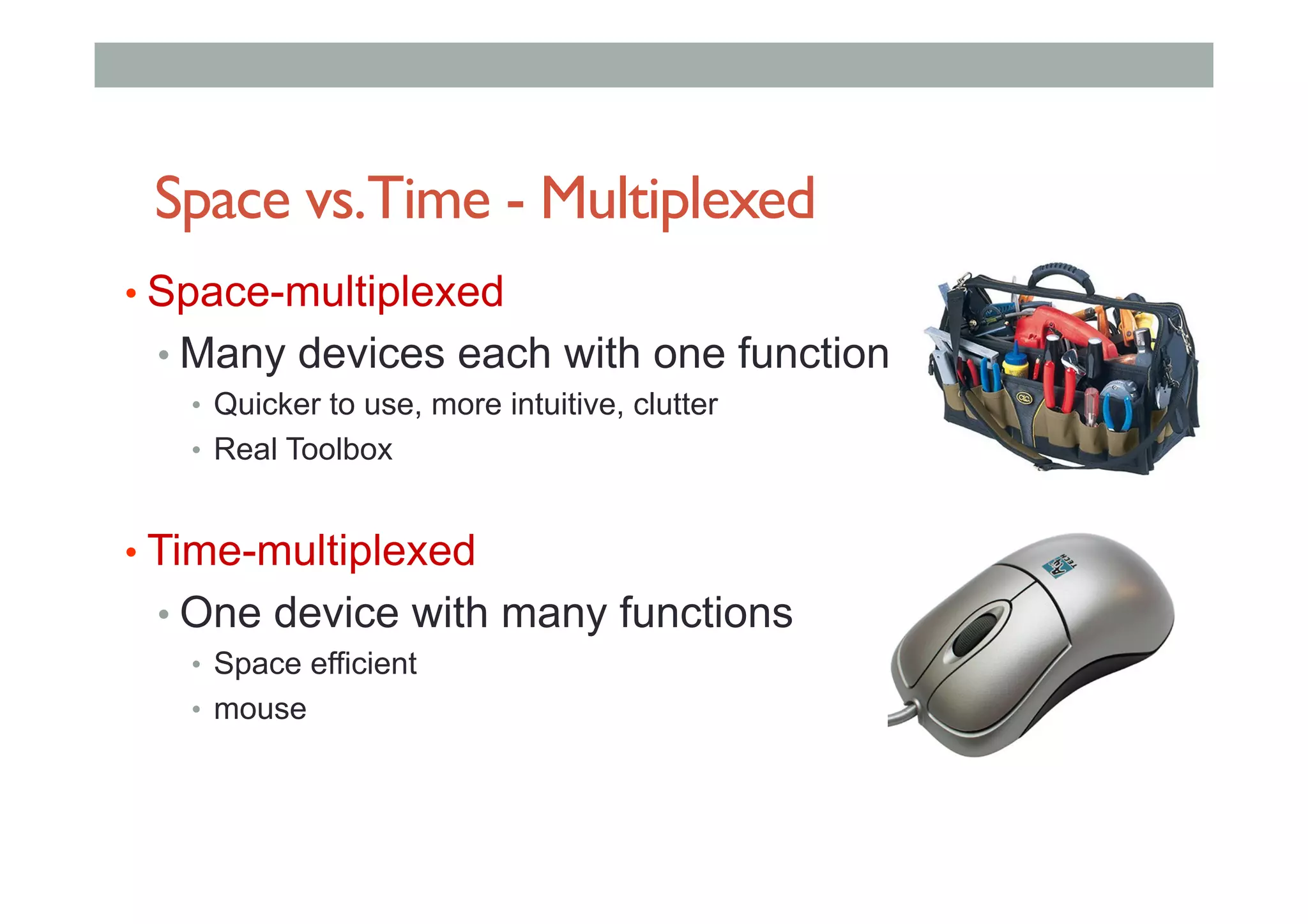

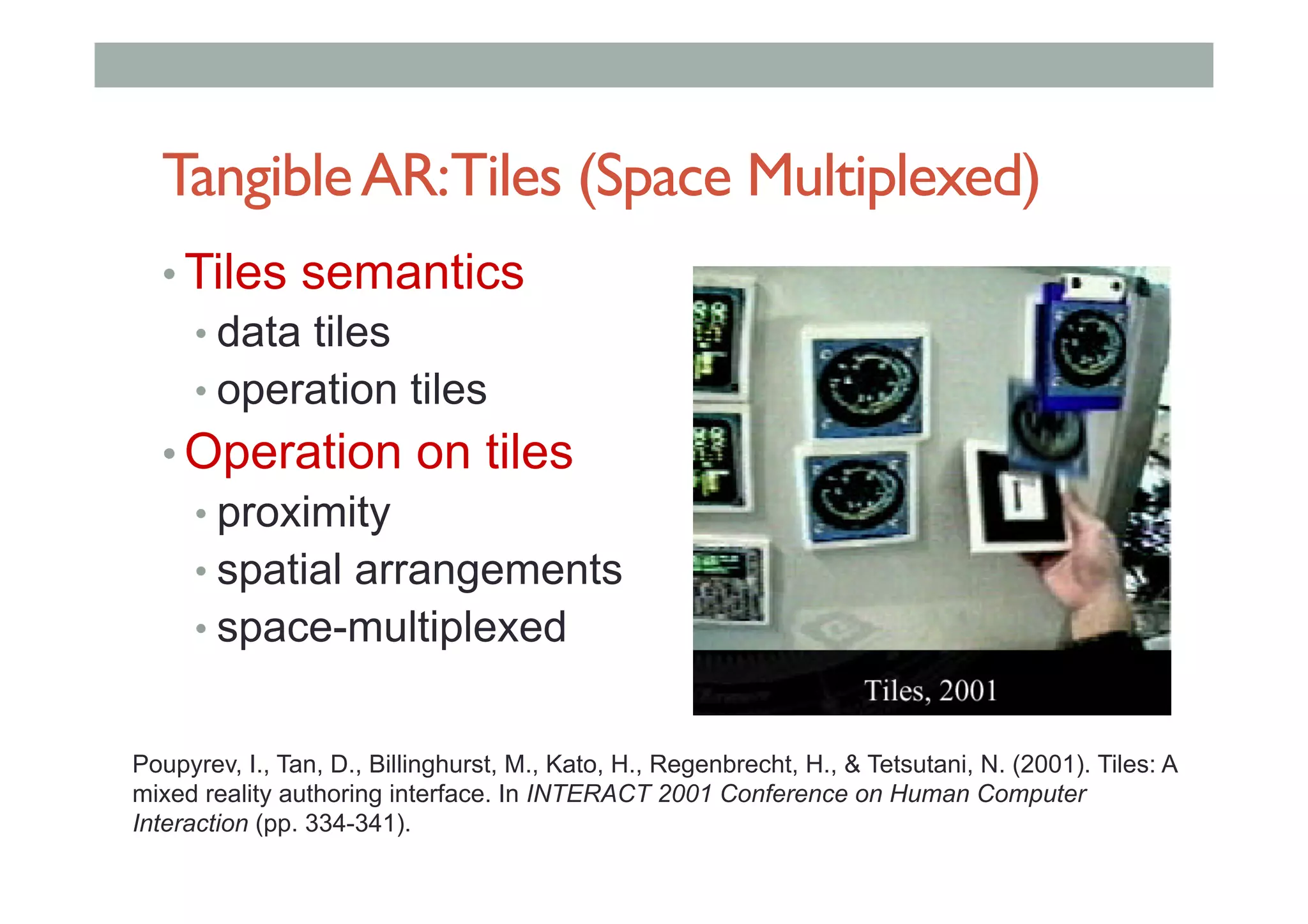

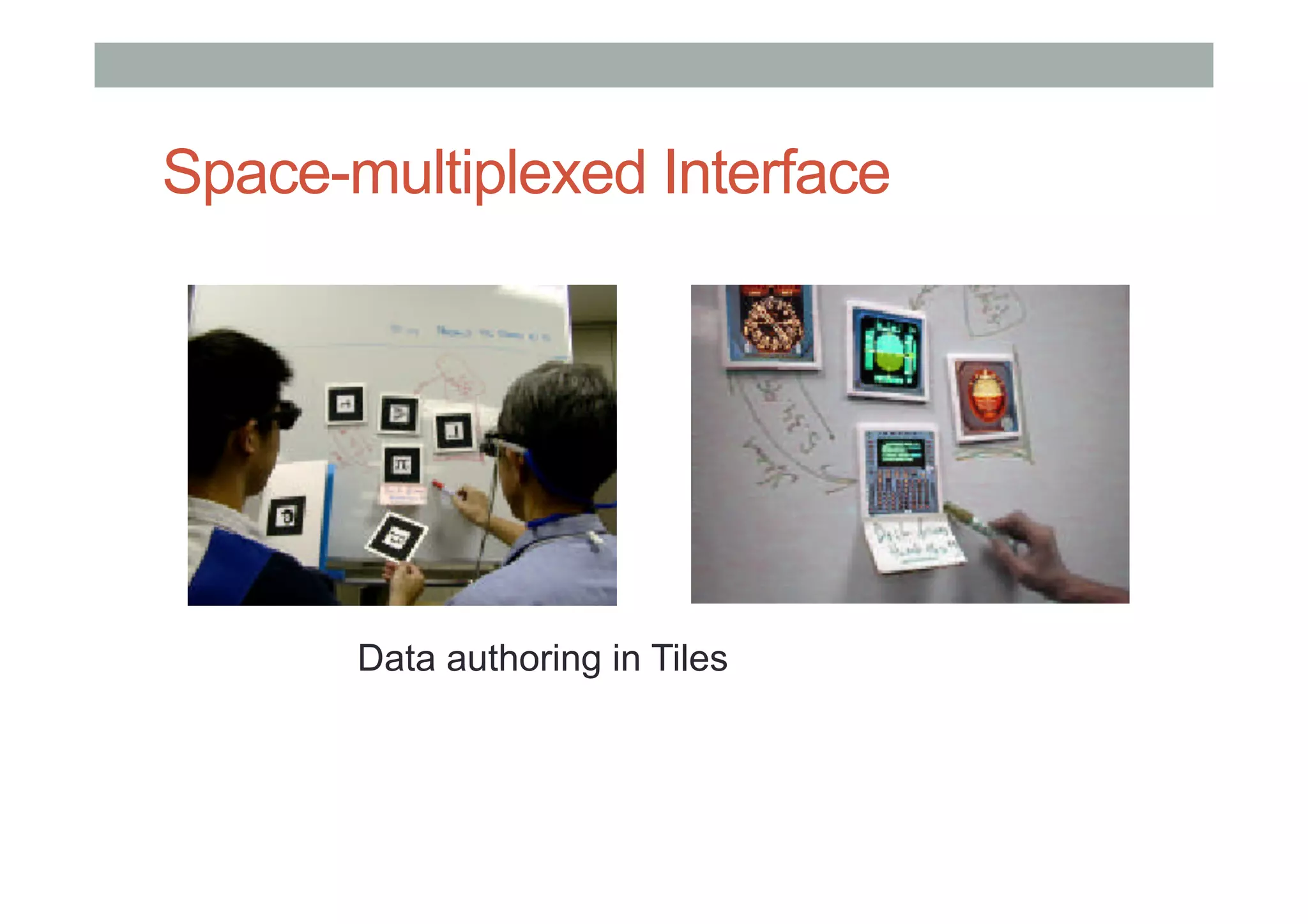

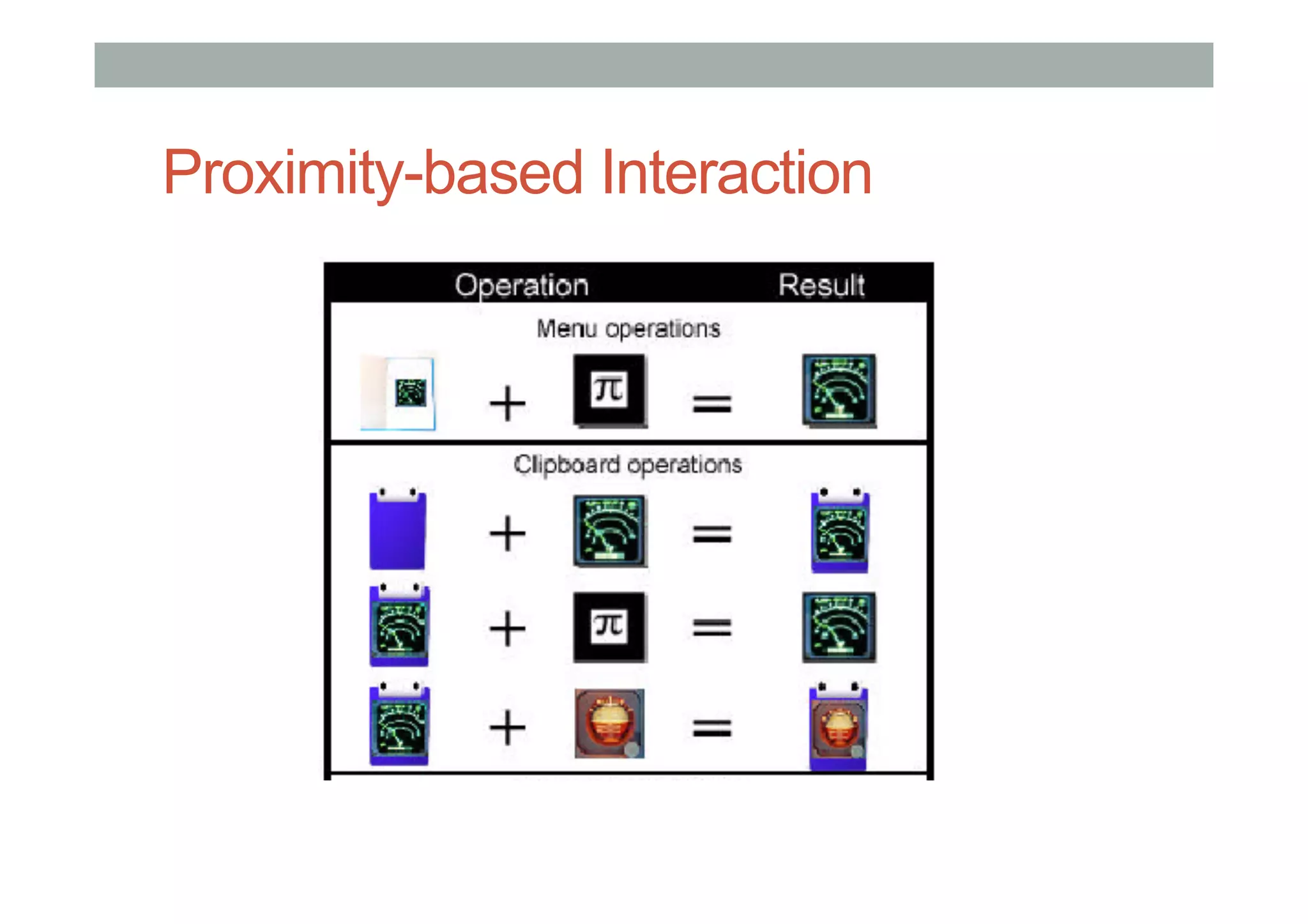

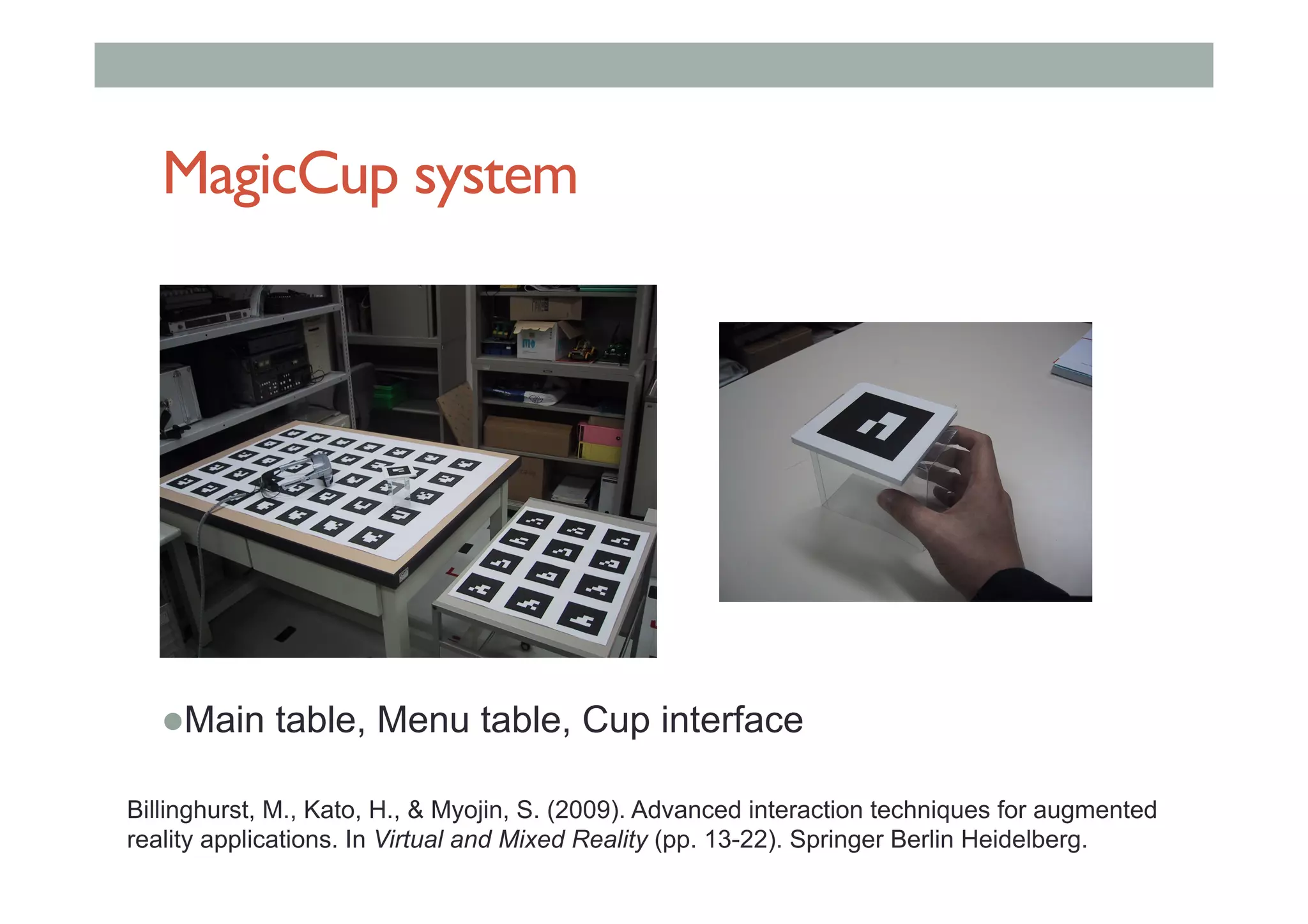

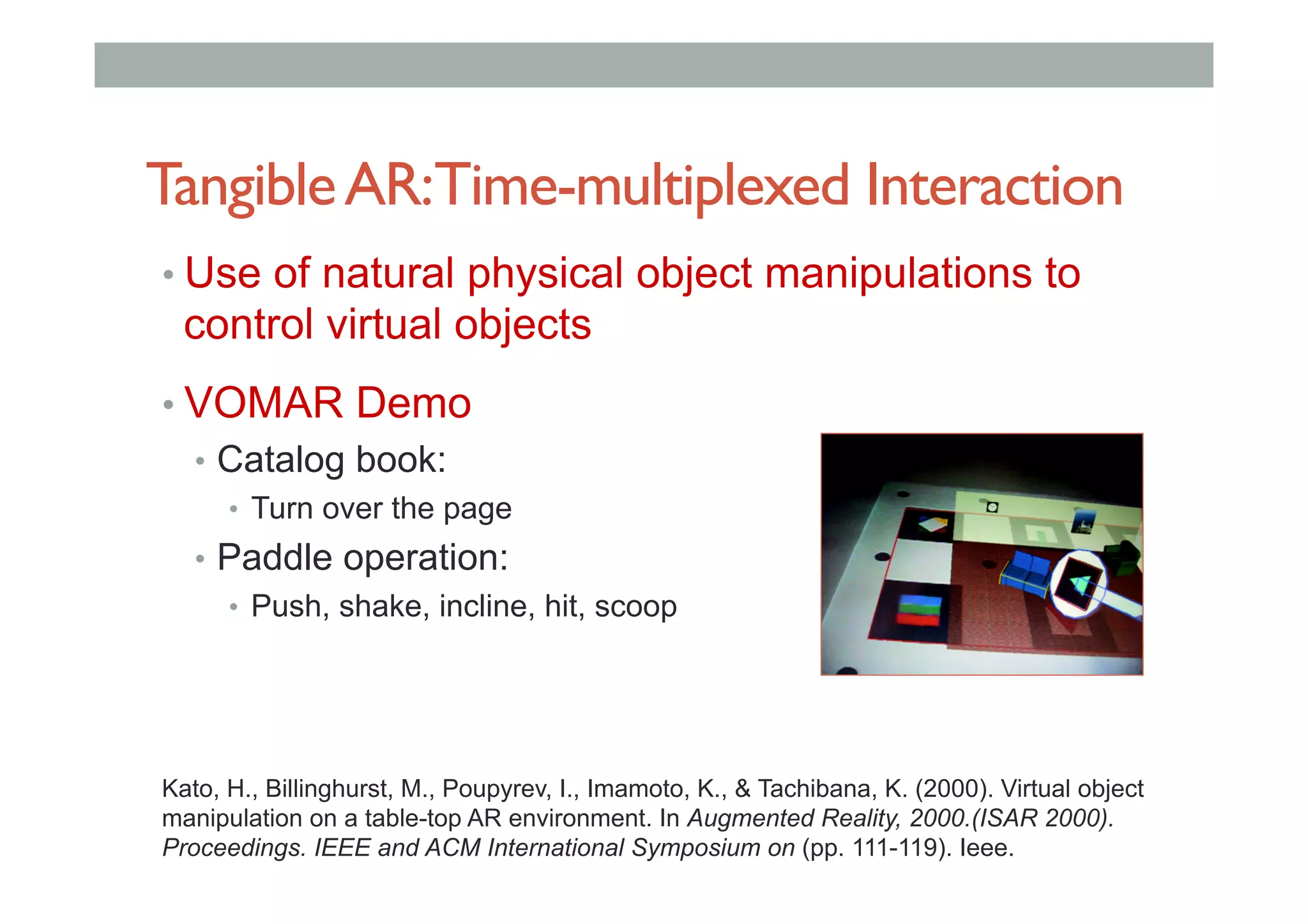

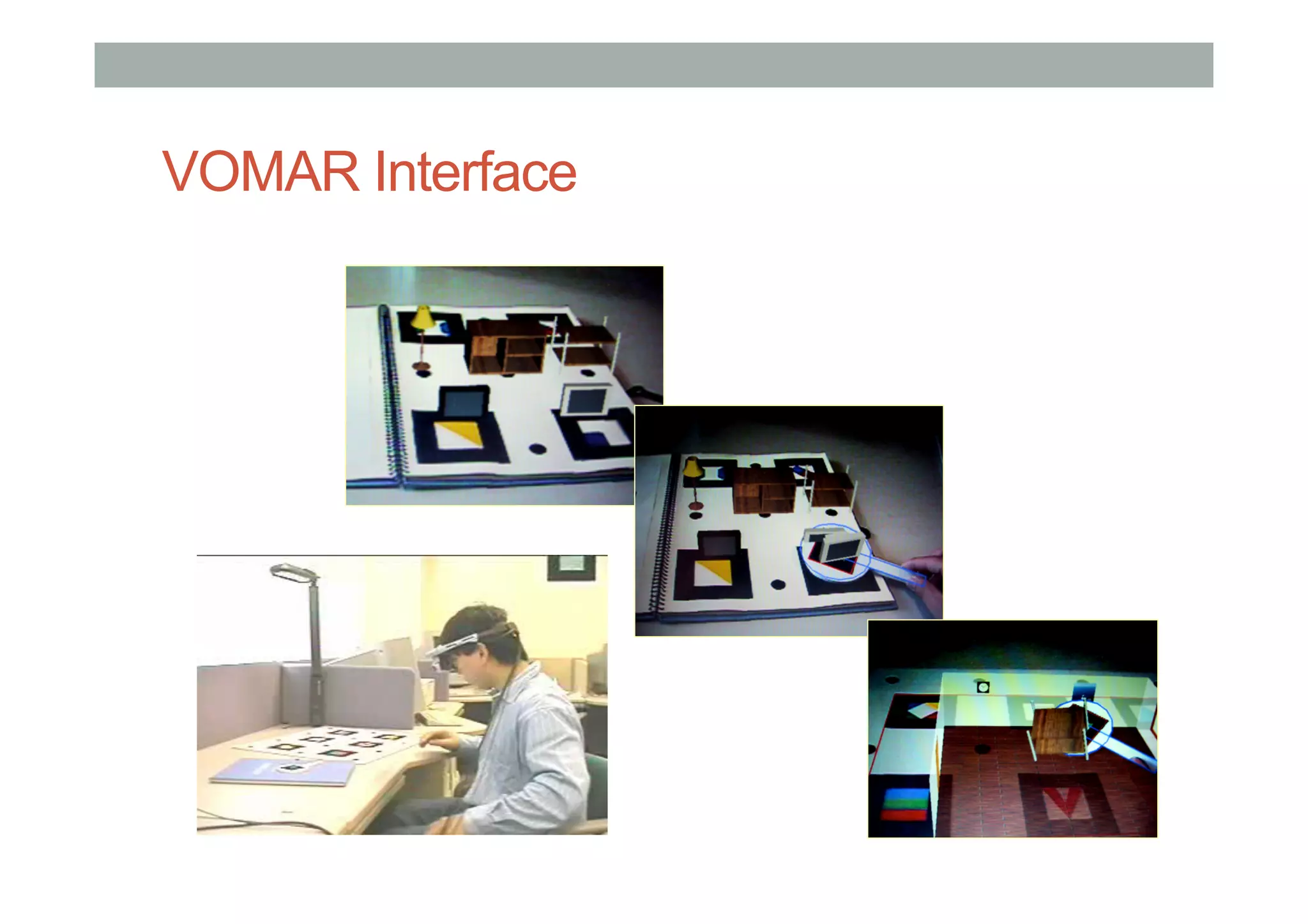

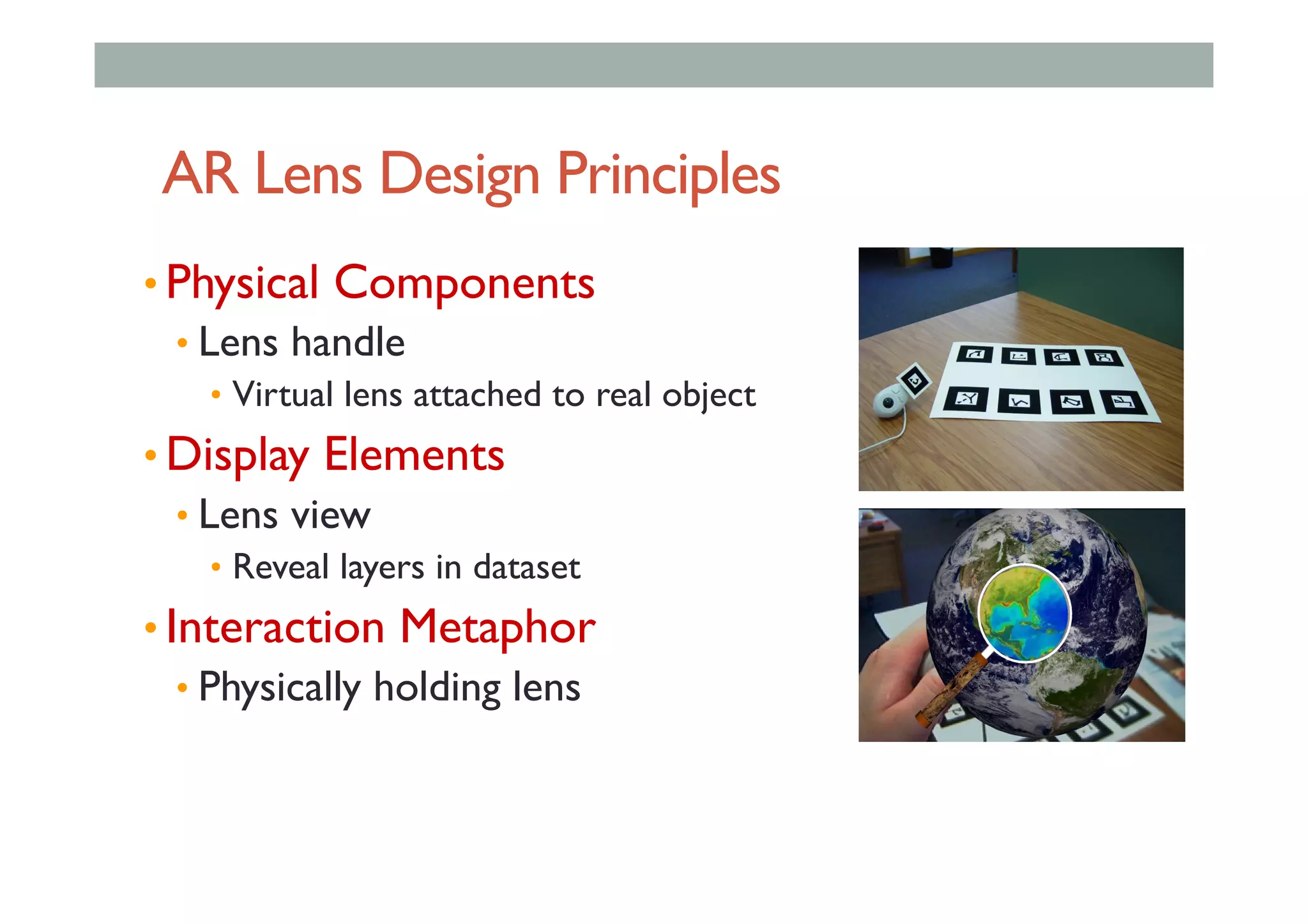

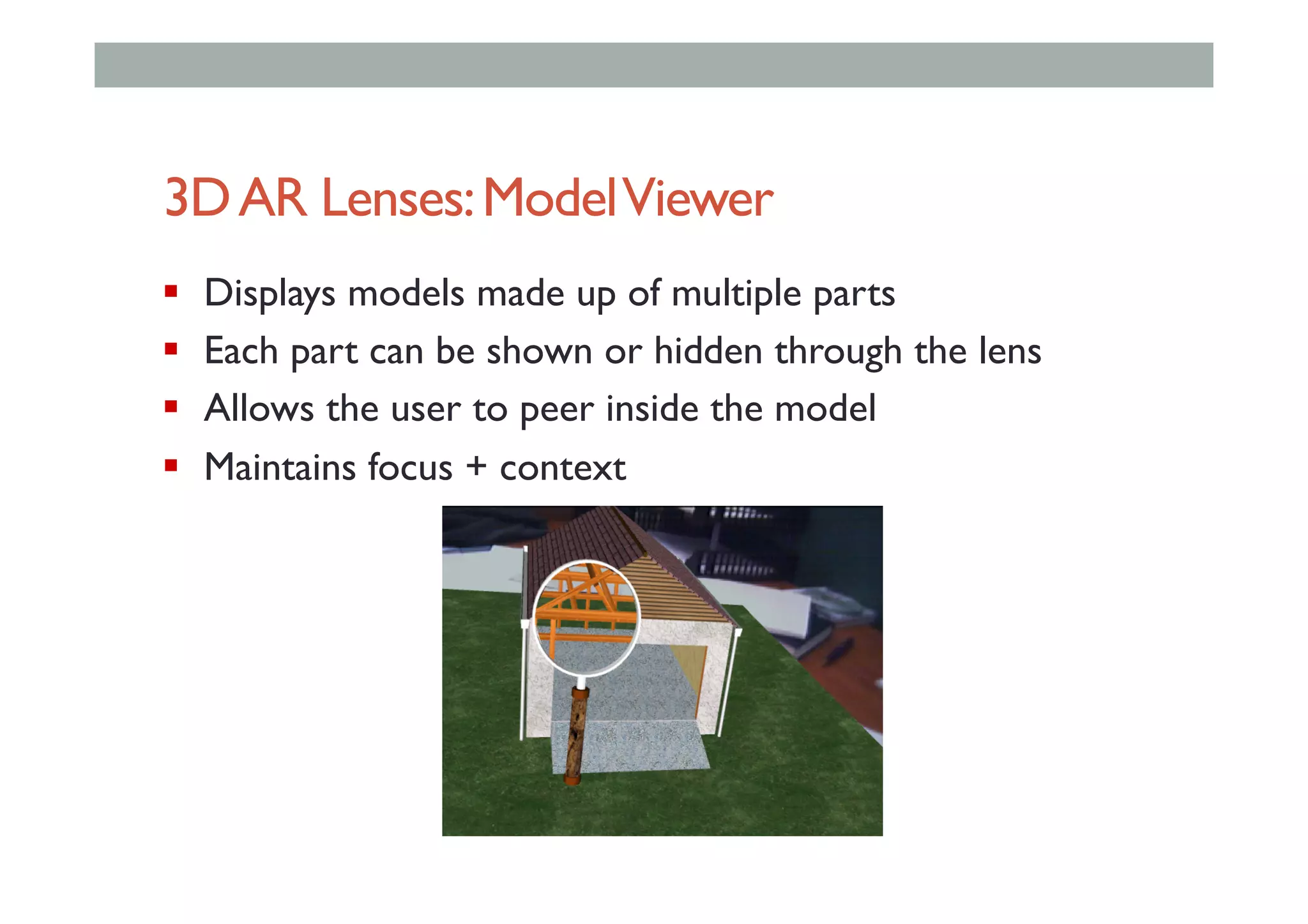

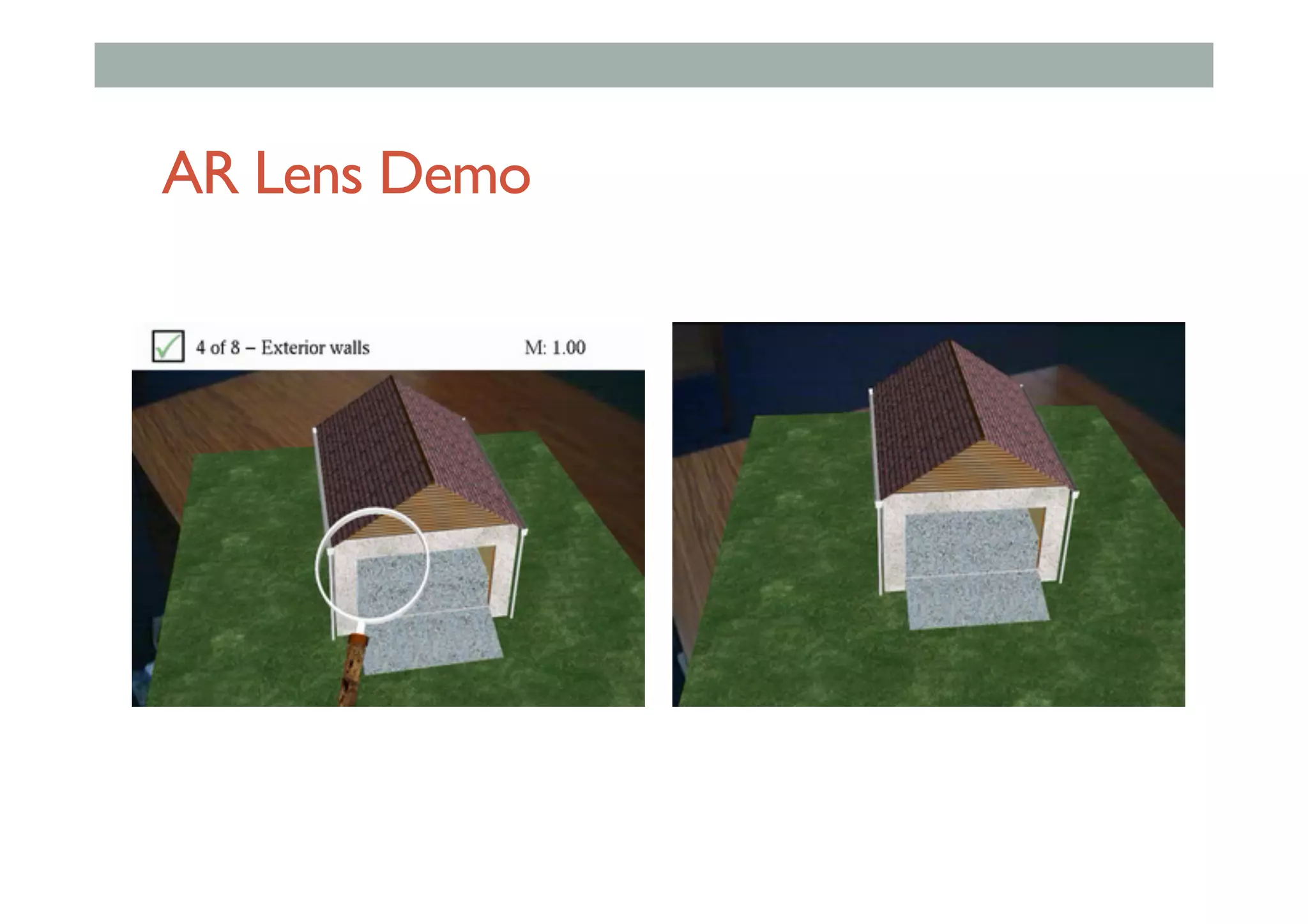

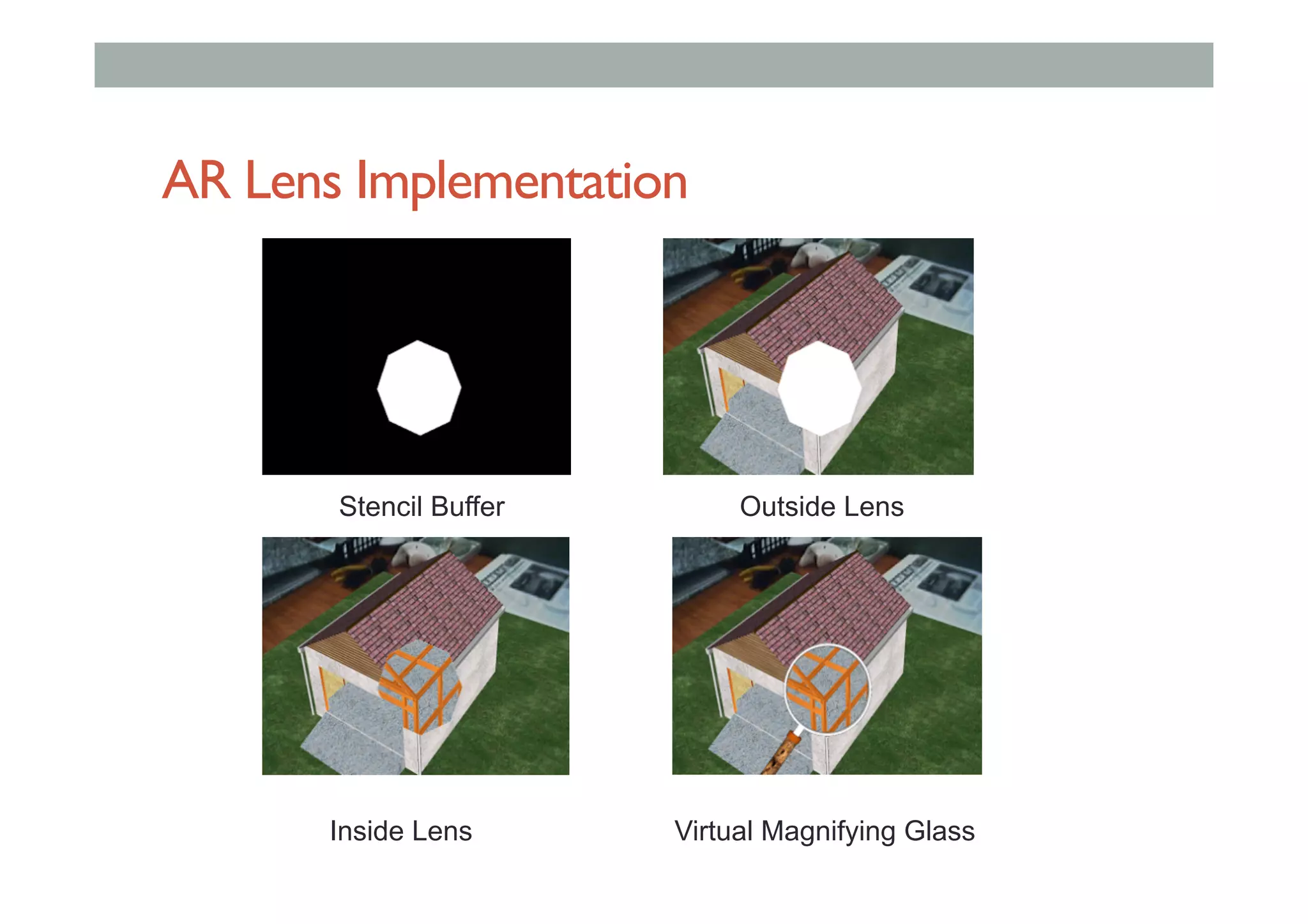

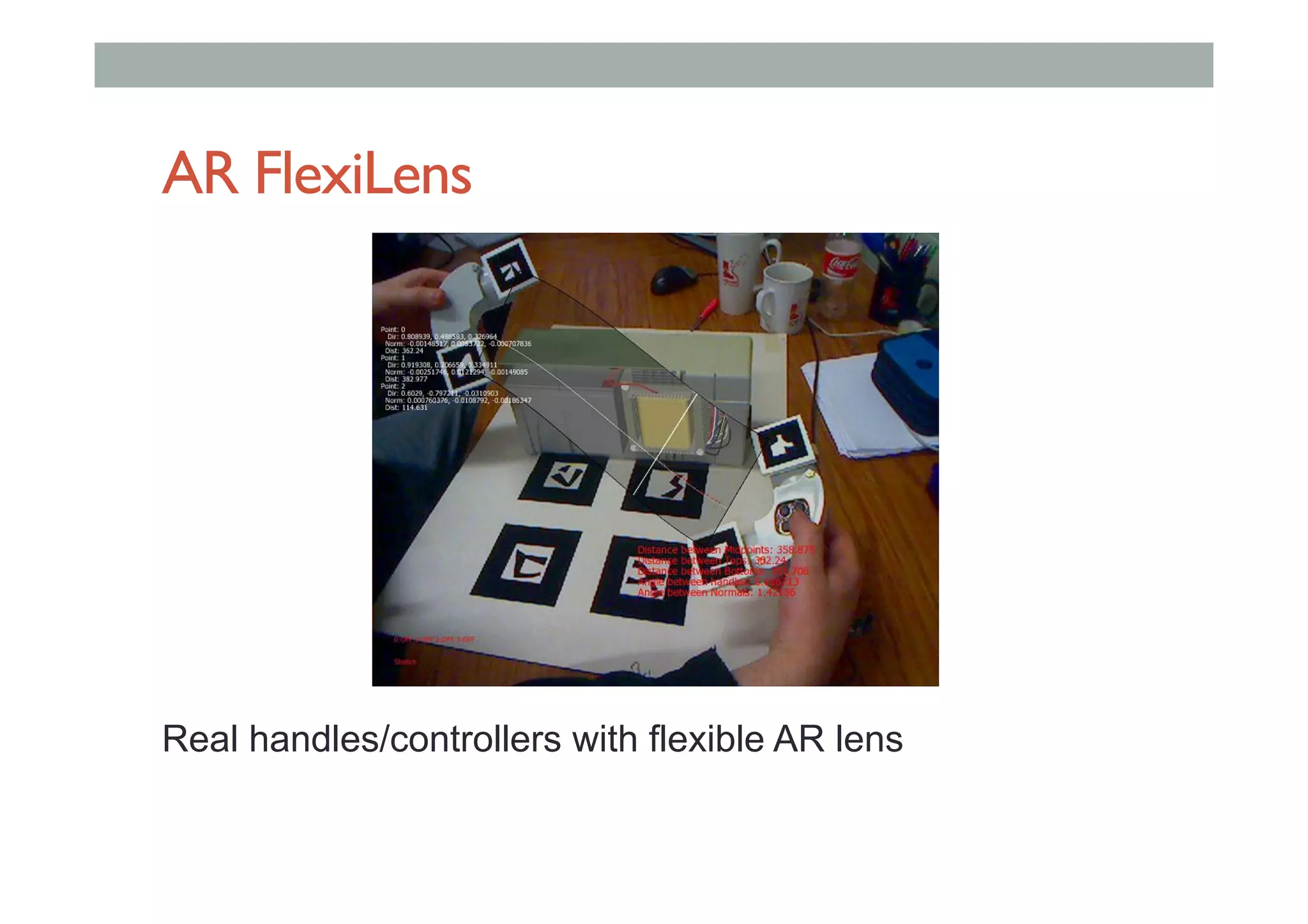

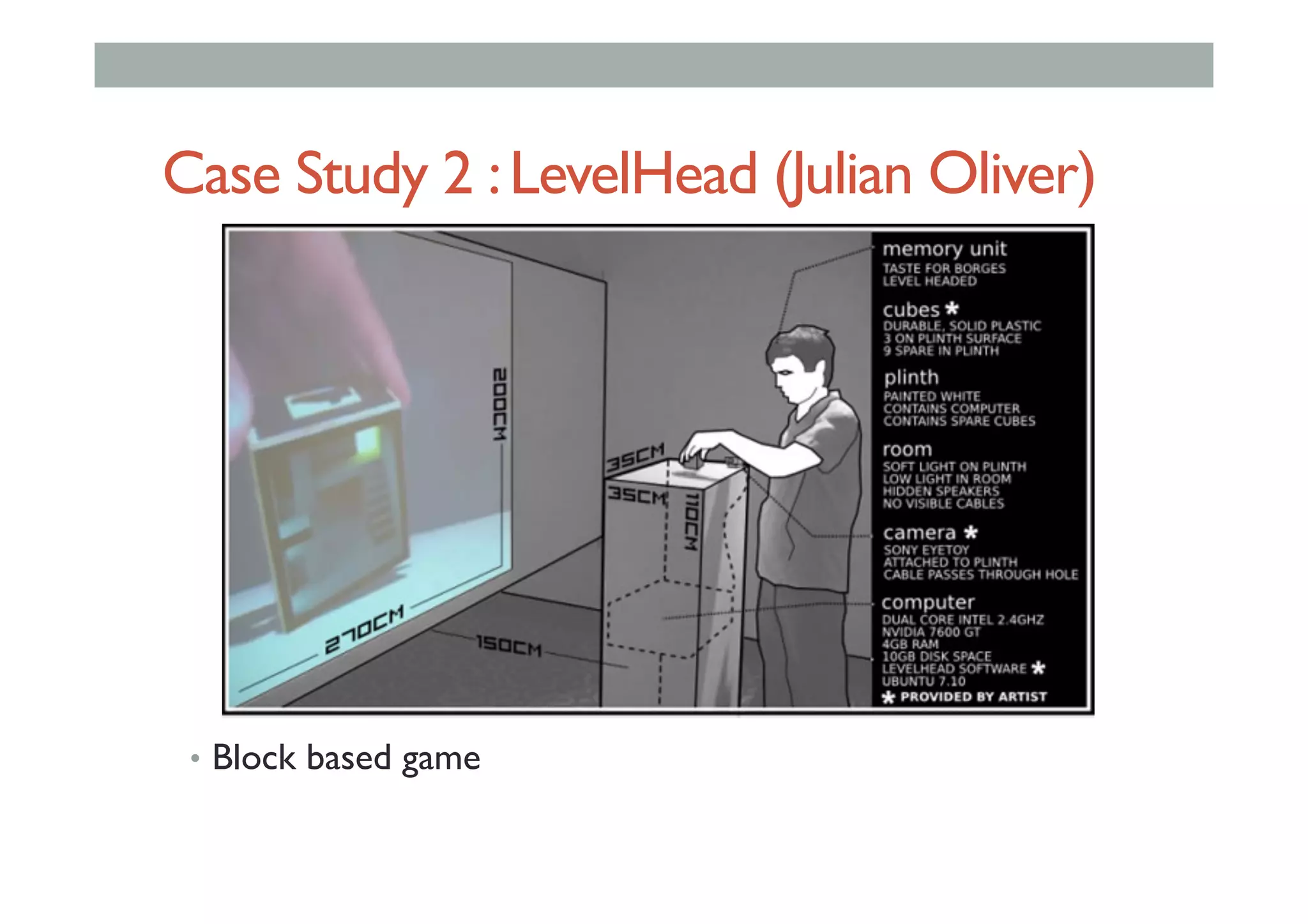

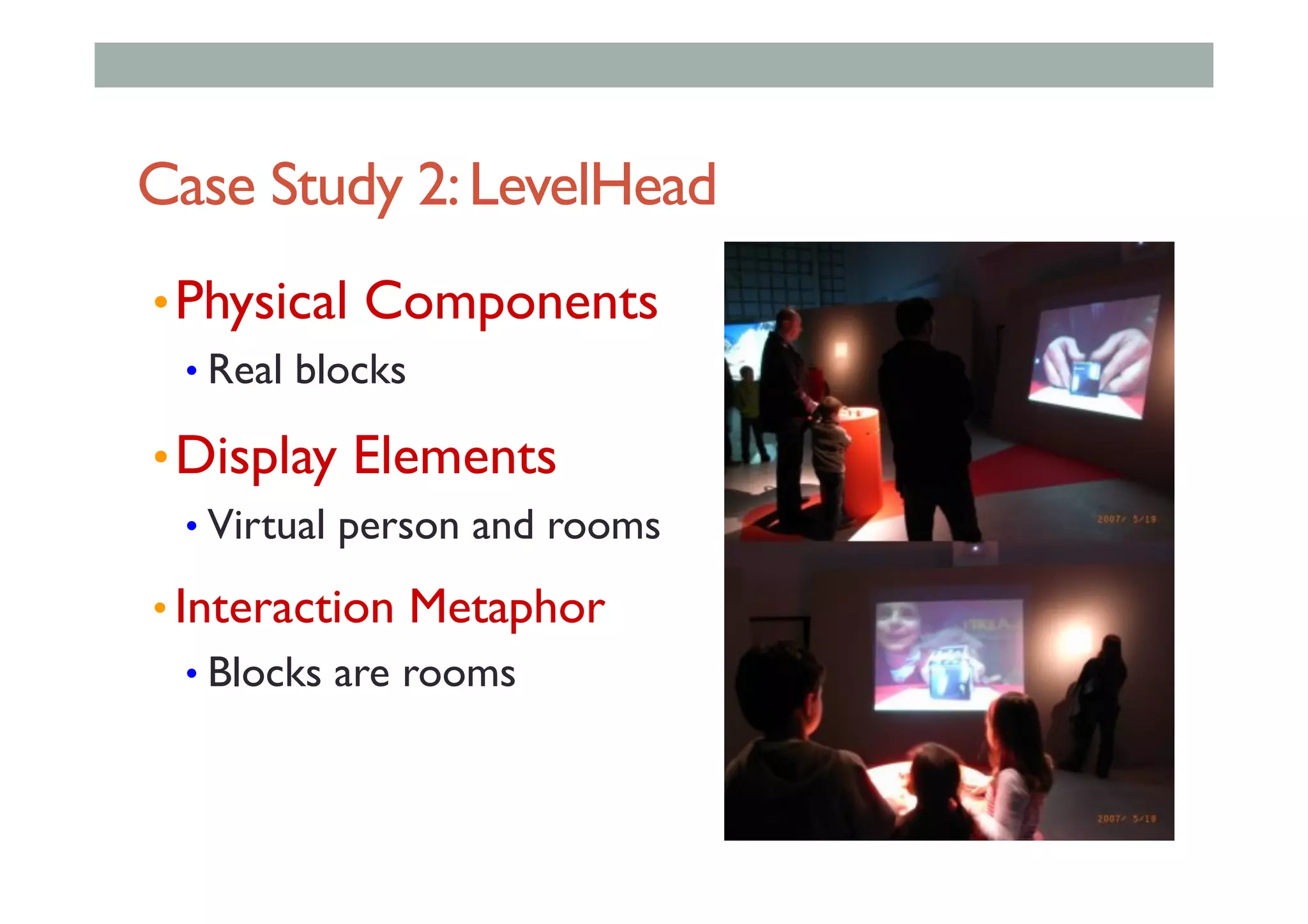

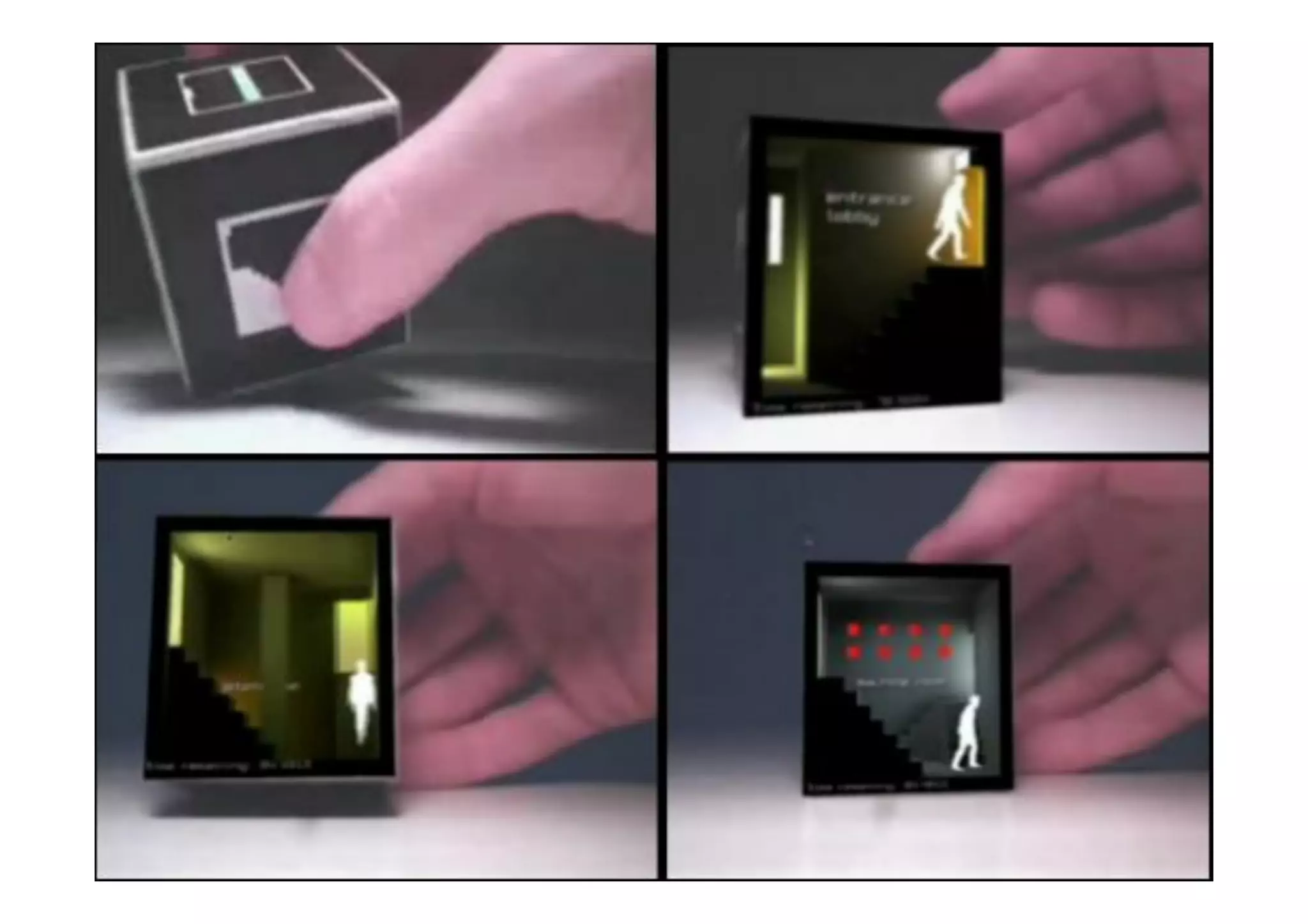

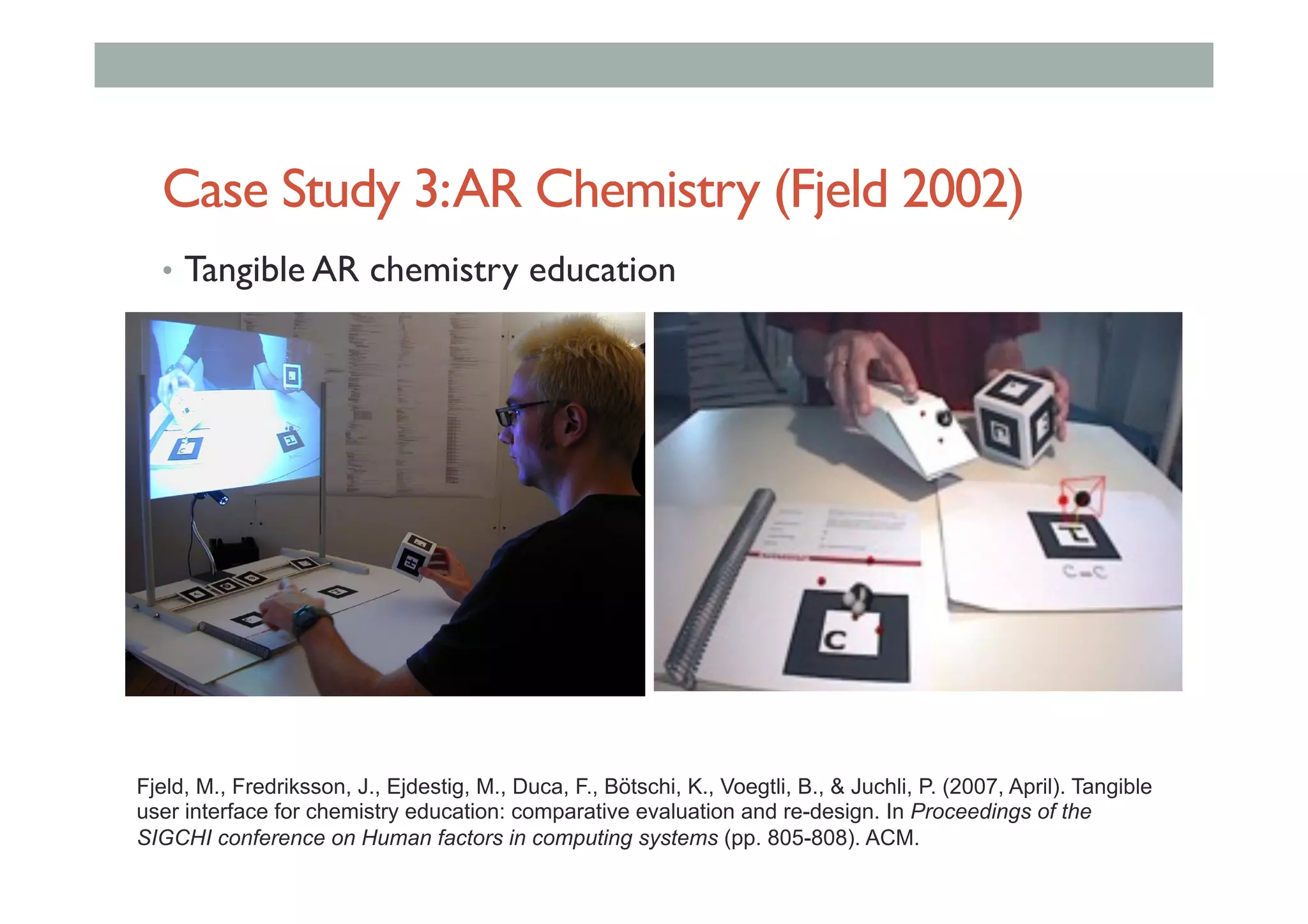

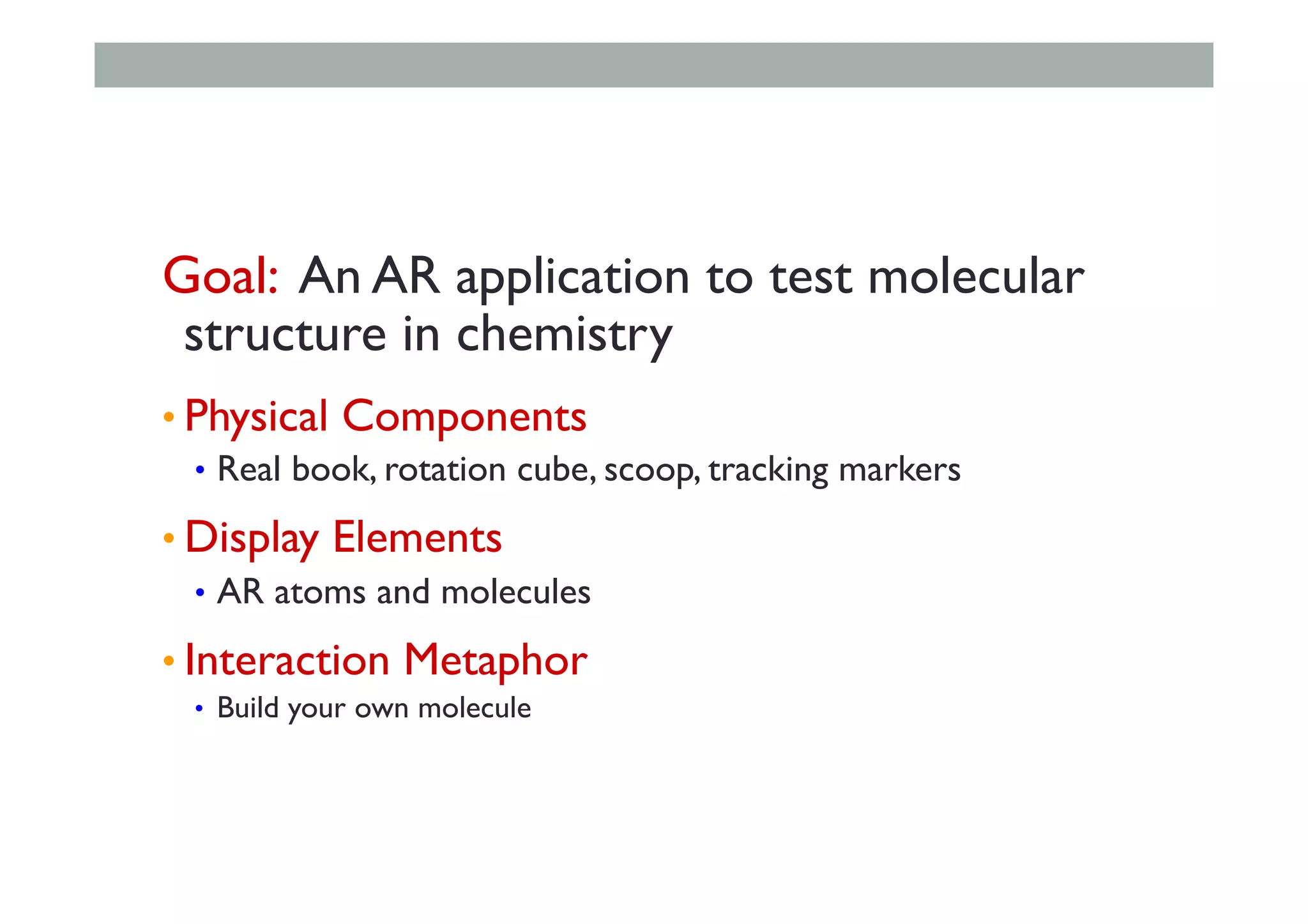

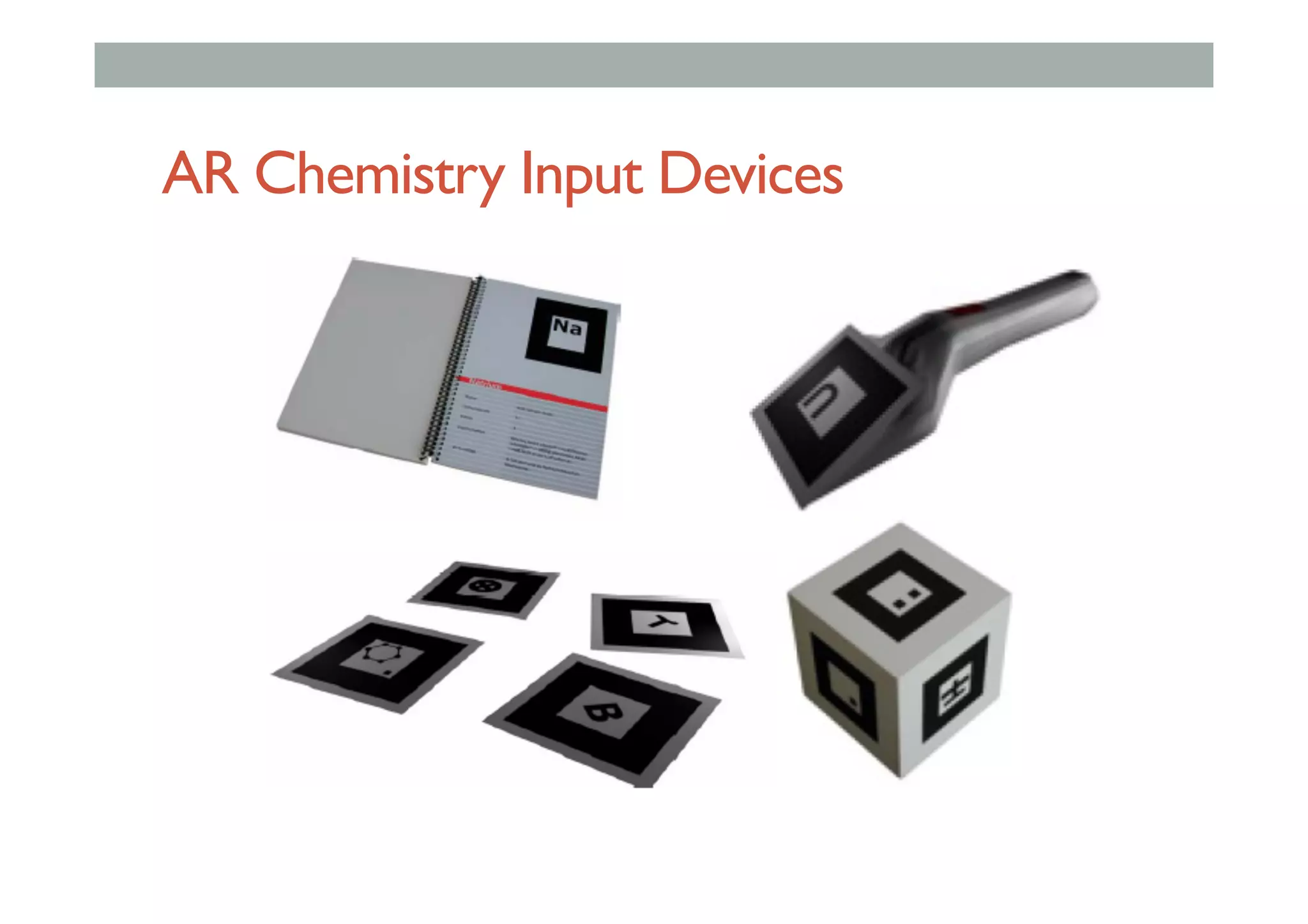

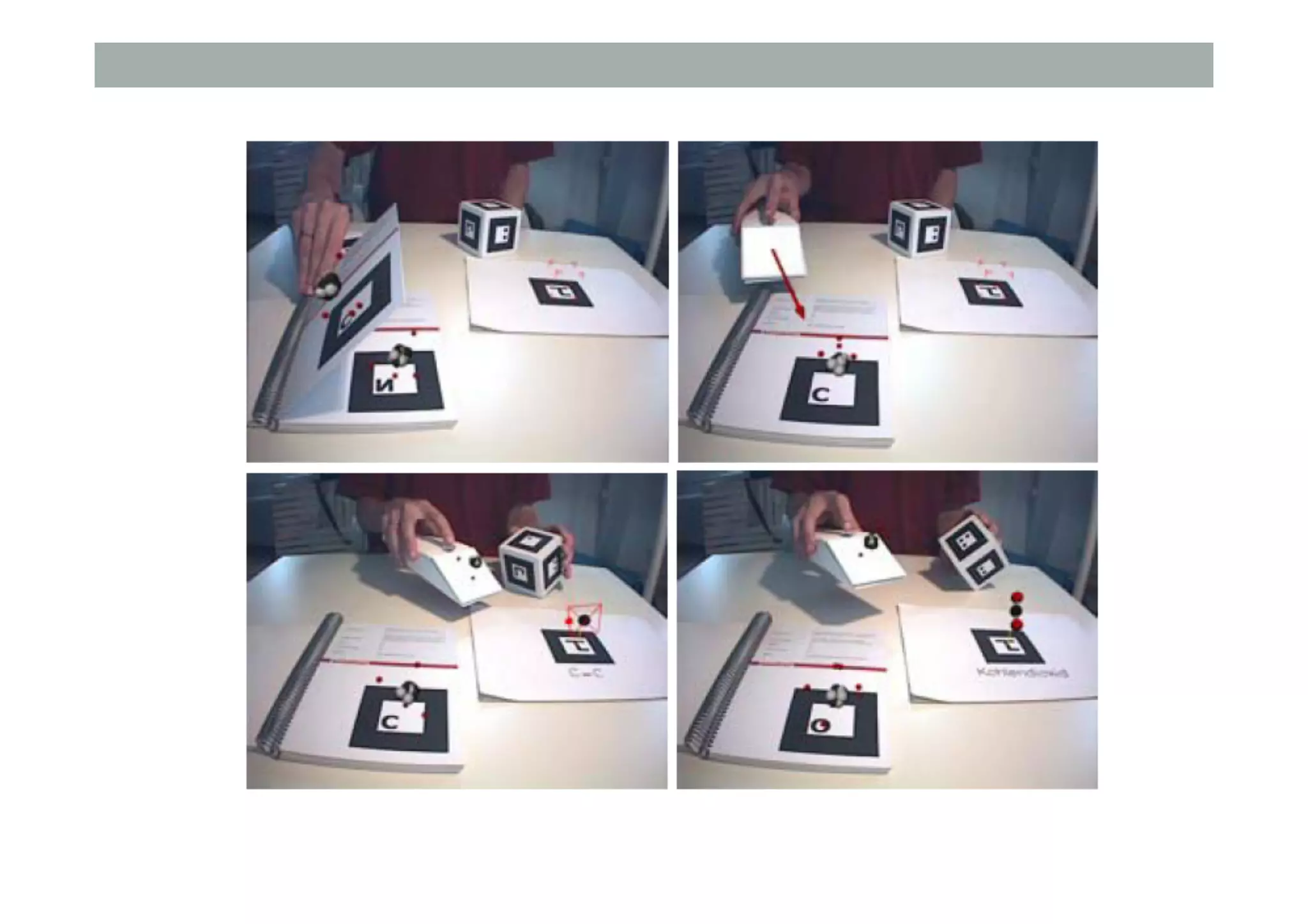

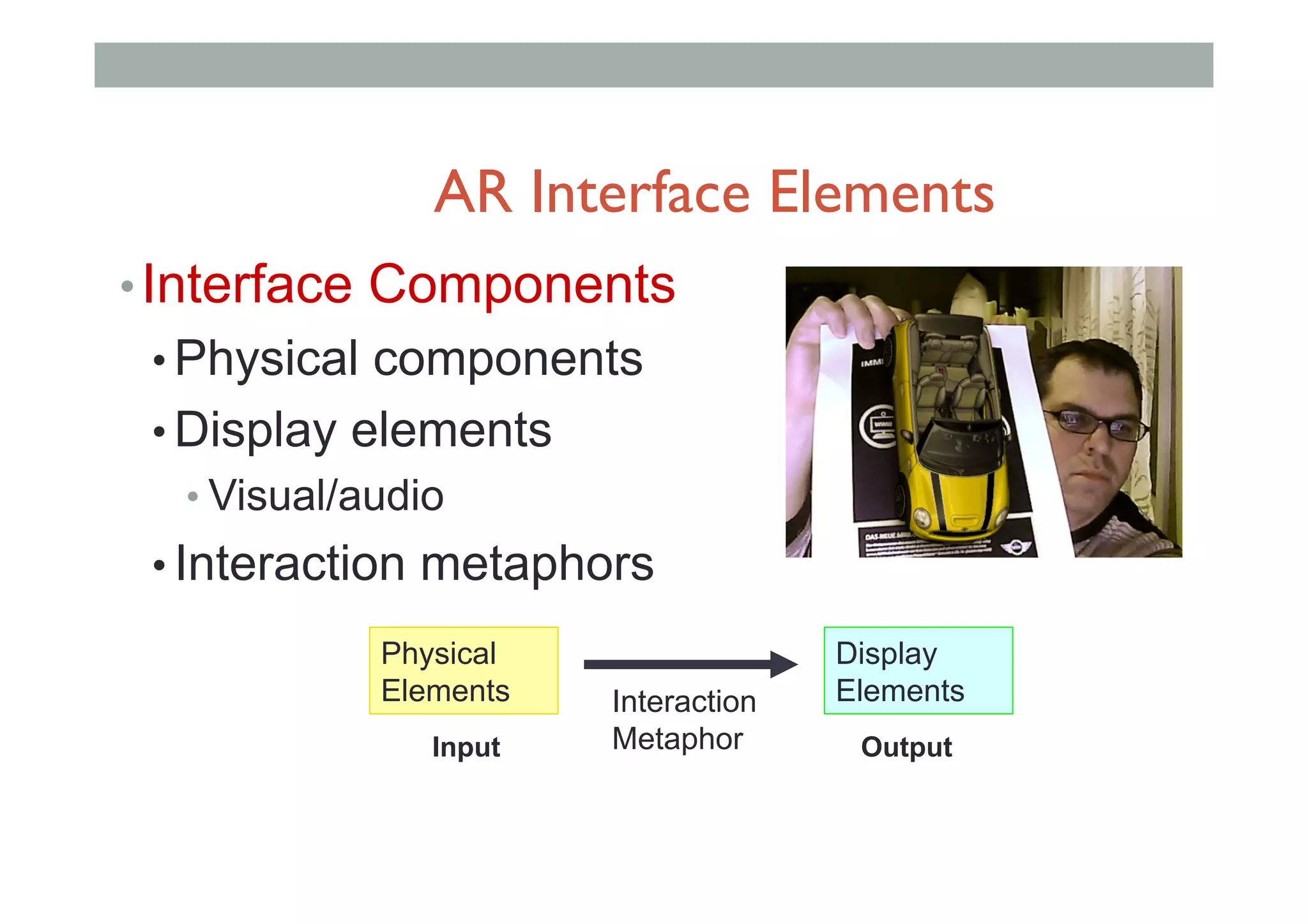

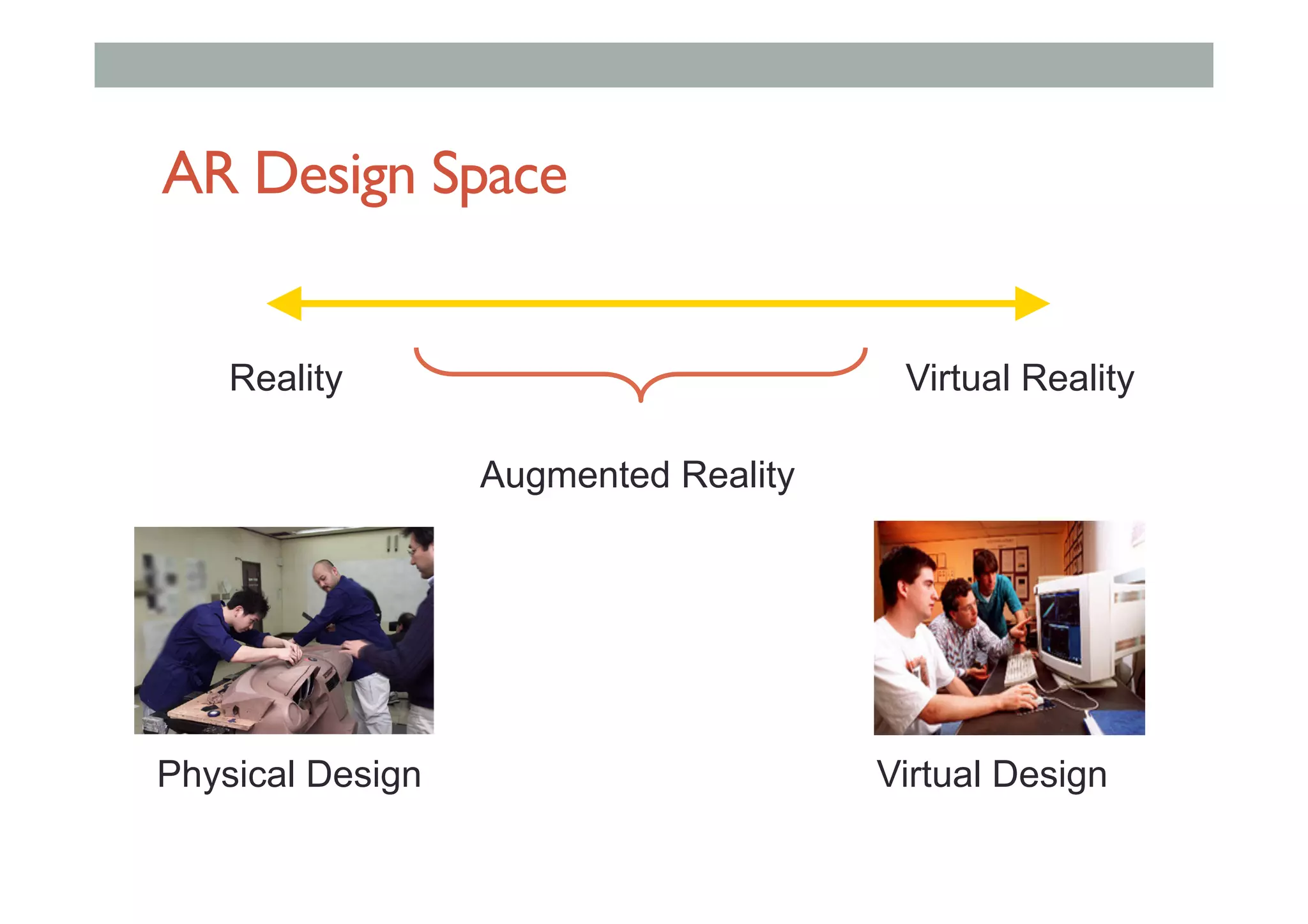

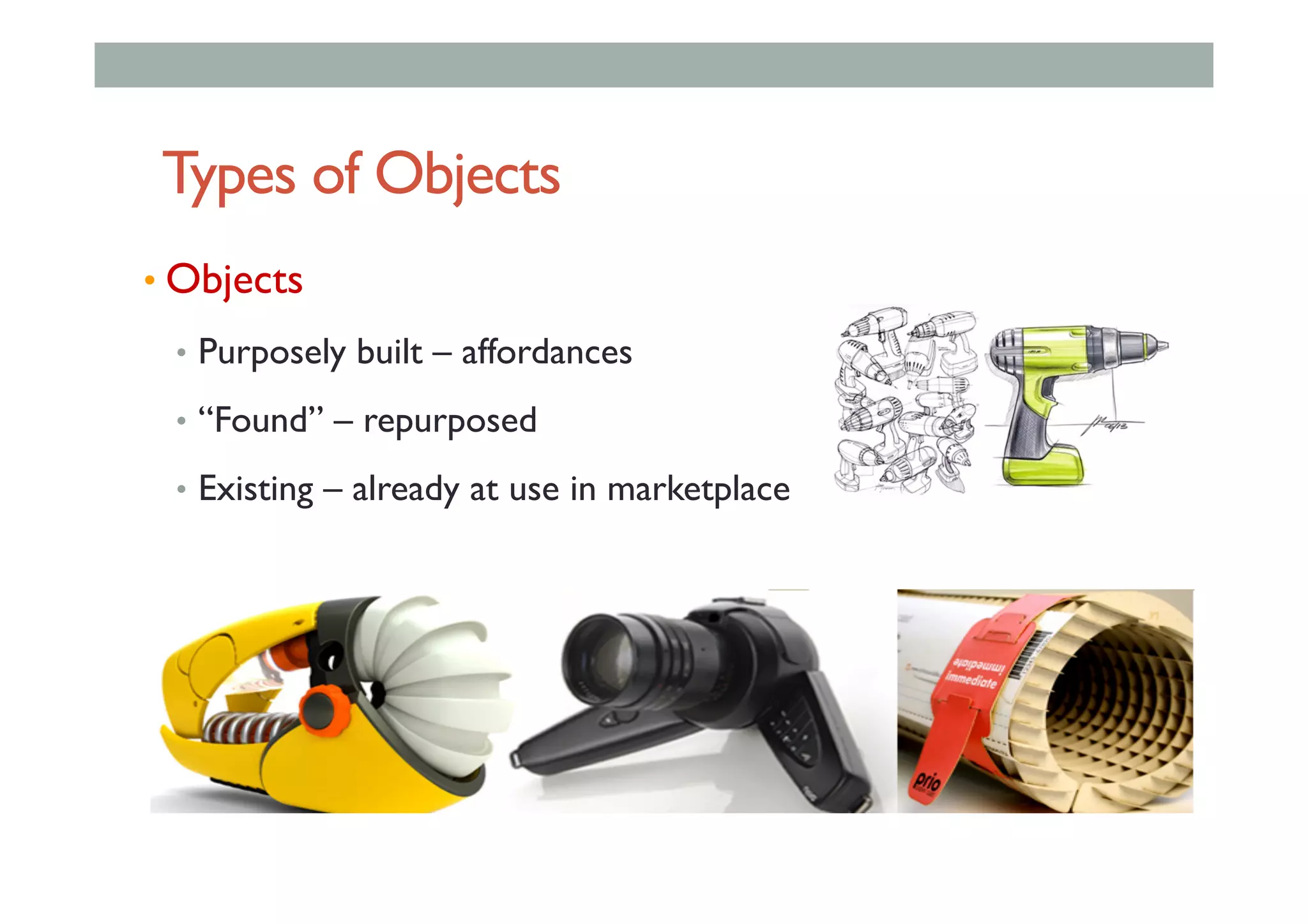

This document summarizes a lecture on interaction design for augmented reality (AR). It discusses several types of AR interfaces, including: (1) AR information browsers, which let users view and manipulate virtual content registered in the real world; (2) 3D AR interfaces, which let users interact with and manipulate 3D virtual objects; and (3) tangible interfaces, which use physical objects to interact with and control virtual objects. It also presents case studies of specific AR applications and discusses design principles for AR interaction, including physical affordances, feedback, and natural mappings.

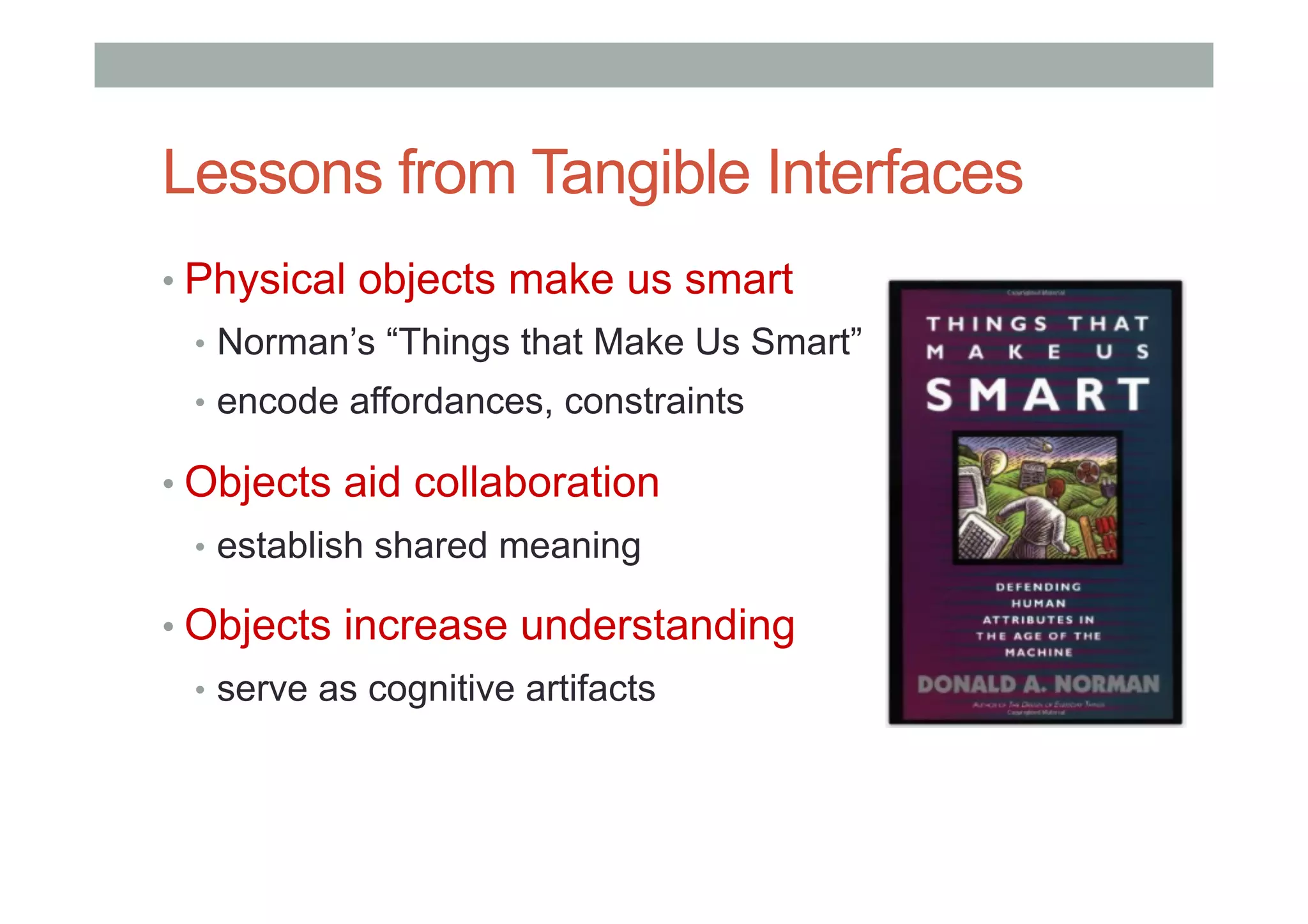

![Augmented Reality Definition

• Defining Characteristics [Azuma 97]

• Combines Real and Virtual Images

• Both can be seen at the same time

• Registered in 3D

• Virtual objects appear fixed in space

• Interactive in real-time

• The virtual content can be interacted with

Azuma, R. T. (1997). A survey of augmented reality. Presence, 6(4), 355-385.](https://image.slidesharecdn.com/lecture3-160229094553/75/2016-AR-Summer-School-Lecture3-2-2048.jpg)

!["...the term affordance refers to the perceived

and actual properties of the thing, primarily

those fundamental properties that determine

just how the thing could possibly be used. [...]

Affordances provide strong clues to the

operations of things."

(Norman, The Psychology of Everyday Things 1988, p.9)](https://image.slidesharecdn.com/lecture3-160229094553/75/2016-AR-Summer-School-Lecture3-14-2048.jpg)