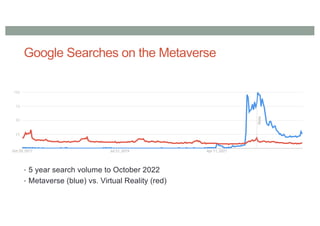

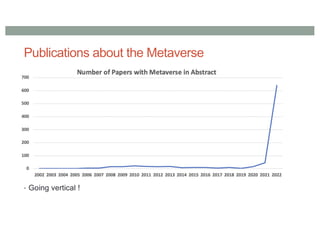

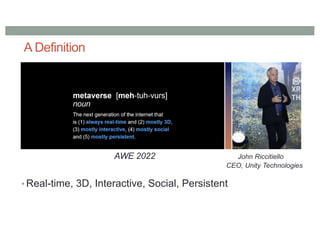

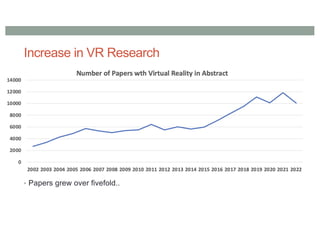

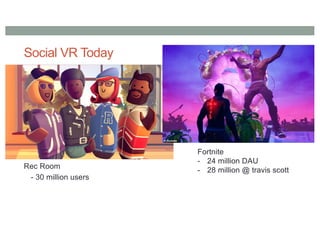

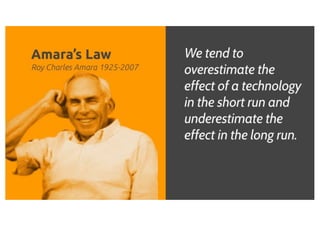

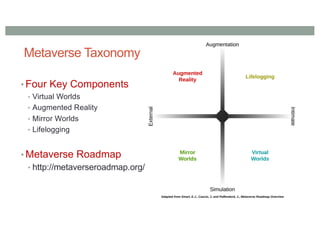

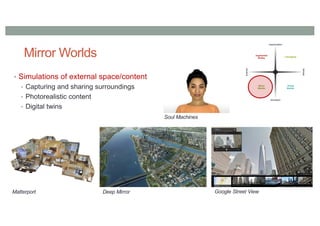

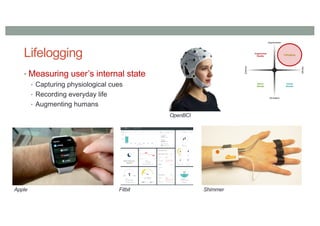

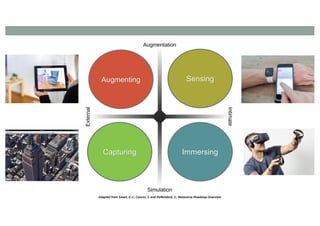

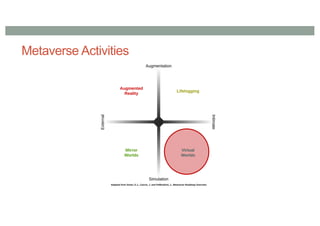

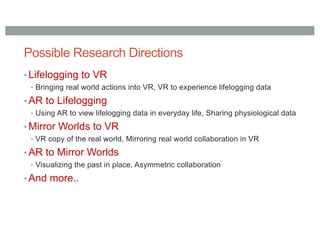

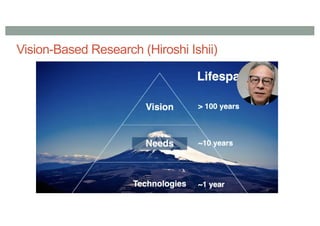

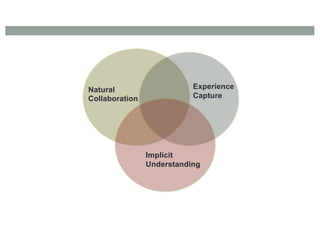

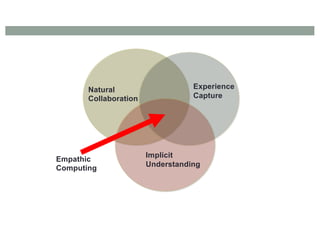

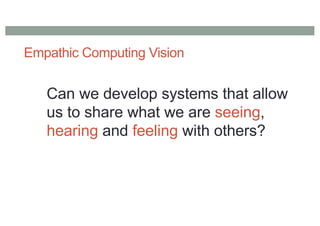

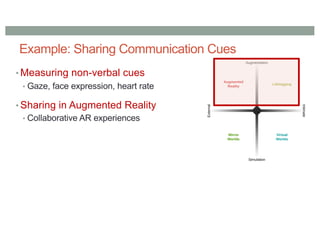

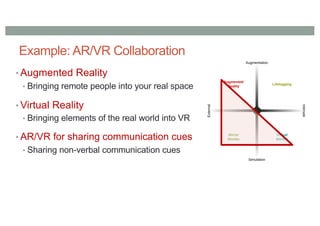

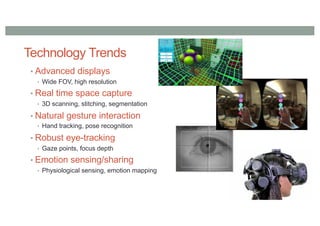

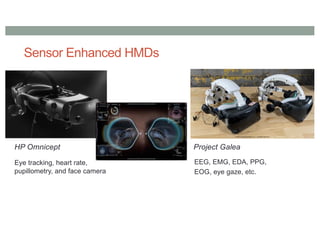

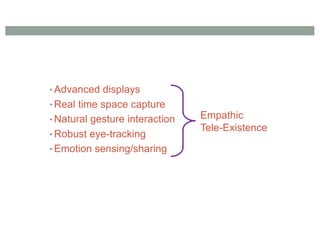

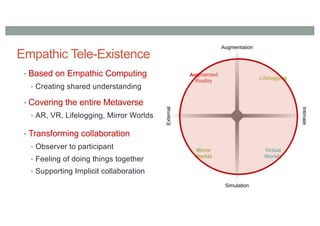

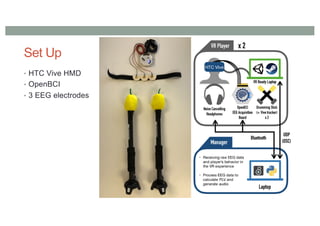

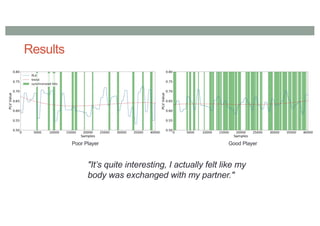

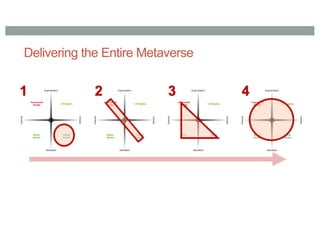

The document discusses the growth and potential of the metaverse, covering its definition, key components, and research opportunities. It emphasizes how technological advances are transforming virtual and augmented reality and enabling new forms of collaboration and interaction. The author calls for a broader understanding of the metaverse, with a focus on empathic computing and its implications for future research.