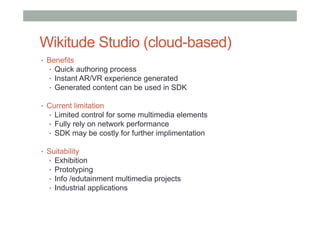

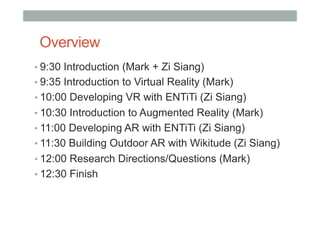

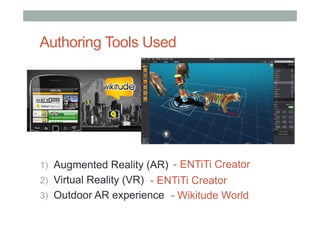

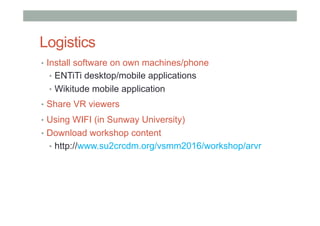

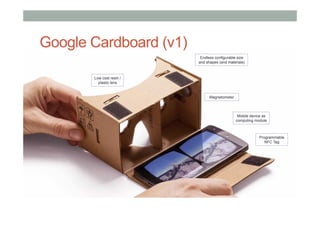

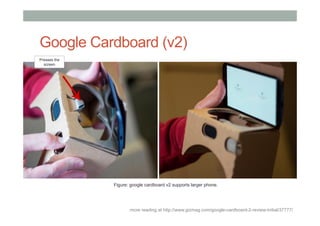

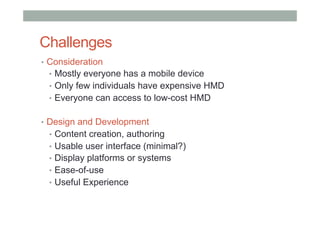

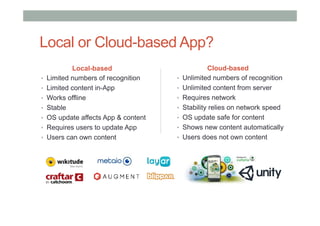

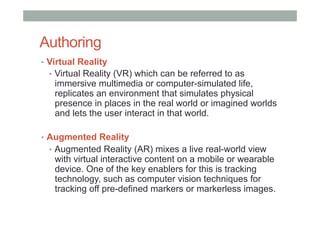

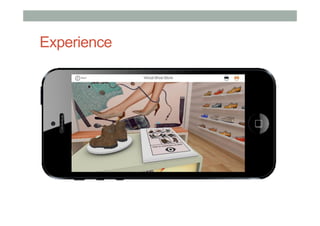

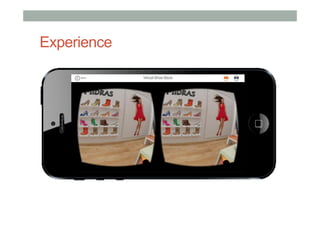

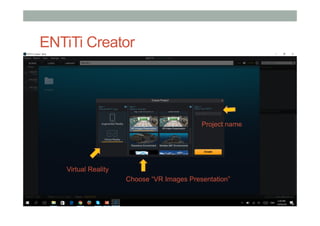

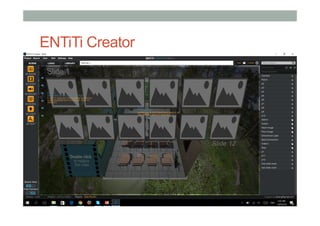

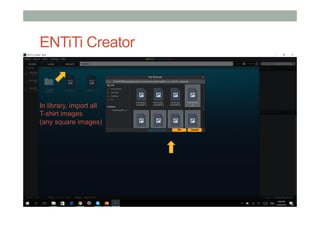

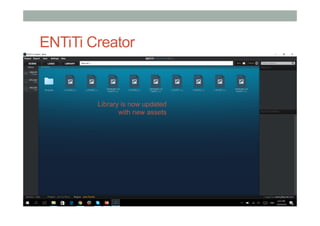

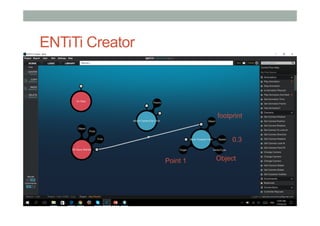

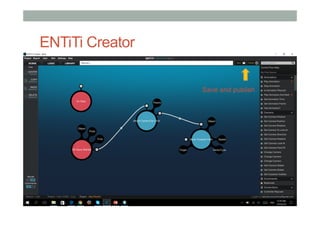

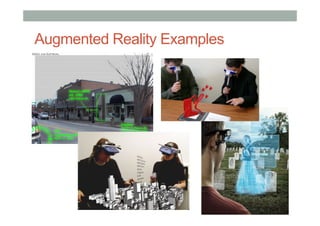

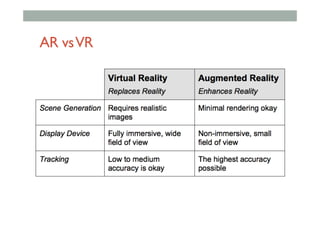

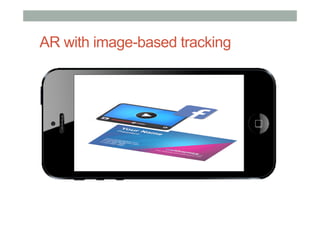

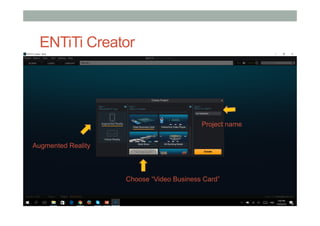

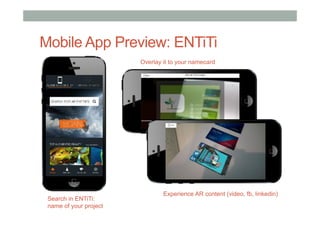

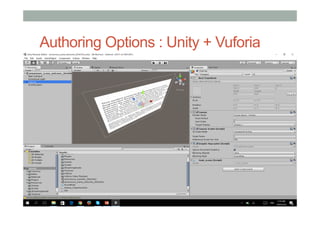

This document provides an overview of a presentation on designing compelling augmented reality (AR) and virtual reality (VR) experiences. The presentation will cover definitions of AR and VR, example applications, hands-on experience with authoring tools ENTiTi Creator and Wikitude World, and research directions. It will also discuss challenges in designing experiences for AR and VR head-mounted displays using mobile devices as computing modules.

![Augmented Reality Definition

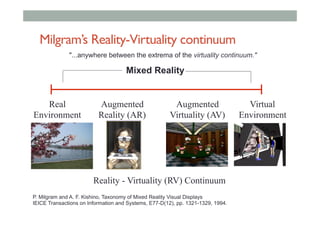

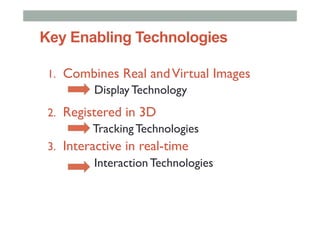

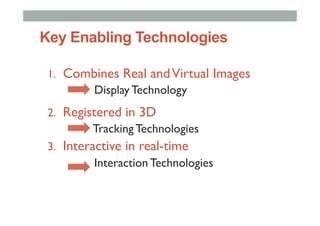

• Defining Characteristics [Azuma 97]

• Combines Real and Virtual Images

• Both can be seen at the same time

• Interactive in real-time

• The virtual content can be interacted with

• Registered in 3D

• Virtual objects appear fixed in space

Azuma, R. T. (1997). A survey of augmented reality. Presence, 6(4), 355-385.](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-131-320.jpg)

![Publish in Wikitude

• KML

• [put stuff here]

• ARML

• [put stuff here]](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-217-320.jpg)

![Free Web Hosting](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-218-320.jpg)

![https://www.google.com/mymaps/](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-220-320.jpg)

![KML (Google Map)](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-221-320.jpg)

![KML (Google Map)](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-222-320.jpg)

![KML (Google Map)](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-223-320.jpg)

![KML (Google Map)](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-224-320.jpg)

![KML (Google Map)](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-225-320.jpg)

![KML: XML Scripting](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-226-320.jpg)
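The slide text does not reproduce the KML markup itself, so the following is only a minimal sketch of what a scripted KML file of points of interest might look like, generated here with Python's standard library. The element names (`kml`, `Document`, `Placemark`, `name`, `description`, `Point`, `coordinates`) and the namespace come from the KML 2.2 standard; the function name `build_kml`, the sample place, and its coordinates are hypothetical and not taken from the workshop.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"  # KML 2.2 namespace


def build_kml(places):
    """Return a KML document as a string.

    `places` is an iterable of (name, description, longitude, latitude).
    KML writes coordinates as "longitude,latitude,altitude".
    """
    ET.register_namespace("", KML_NS)  # emit KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    doc = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for name, description, lon, lat in places:
        placemark = ET.SubElement(doc, f"{{{KML_NS}}}Placemark")
        ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
        ET.SubElement(placemark, f"{{{KML_NS}}}description").text = description
        point = ET.SubElement(placemark, f"{{{KML_NS}}}Point")
        ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = f"{lon},{lat},0"
    body = ET.tostring(kml, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical sample data, for illustration only.
sample = build_kml([("Sample point", "Placeholder description", 172.58, -43.52)])
```

Writing `sample` to a file such as `places.kml` (UTF-8 encoded) would give a file of the kind the later slides publish by URL.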

![Publish in Wikitude: KML](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-230-320.jpg)

![Publish in Wikitude: KML](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-231-320.jpg)

![Publish in Wikitude: KML

Provide a URL; host the *.kml file from your own server](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-232-320.jpg)
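The slide only says to host the `*.kml` file and provide its URL; it does not say how. As one possible sketch for local testing, Python's built-in `http.server` can serve a directory and label `.kml` files with the registered KML media type. The class name `KmlHandler`, the helper `make_server`, and the default port are assumptions made for illustration. A server started this way is only reachable from networks that can see the machine, so a publicly accessible host would still be needed for real publishing.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class KmlHandler(SimpleHTTPRequestHandler):
    """Static file handler that serves .kml files with the KML media type."""

    extensions_map = {
        **SimpleHTTPRequestHandler.extensions_map,
        ".kml": "application/vnd.google-earth.kml+xml",
    }


def make_server(directory, host="", port=8000):
    """Create (but do not start) a server for the files in `directory`."""
    handler = functools.partial(KmlHandler, directory=directory)
    return ThreadingHTTPServer((host, port), handler)


# Usage (blocks until interrupted):
#   make_server("public").serve_forever()
# A file public/places.kml would then be available at
#   http://<your-host>:8000/places.kml
```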

![Free Web Hosting](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-235-320.jpg)

![Publish in Wikitude](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-237-320.jpg)

![ARML: XML Scripting

http://openarml.org/wikitude4.html](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-238-320.jpg)

![Publish in Wikitude: ARML](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-245-320.jpg)

![Publish in Wikitude: ARML](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-246-320.jpg)

![Publish in Wikitude: ARML

Provide a URL; host the *.xml file from your own server](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-247-320.jpg)

![Free Web Hosting](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-331-320.jpg)

![Free Web Hosting](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-355-320.jpg)

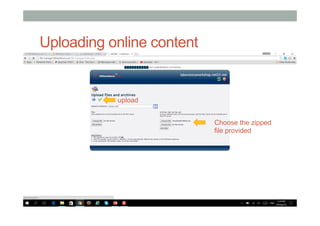

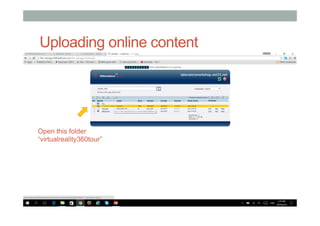

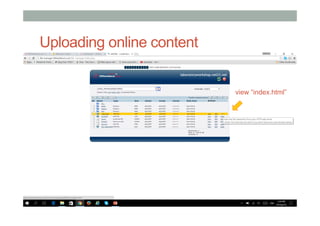

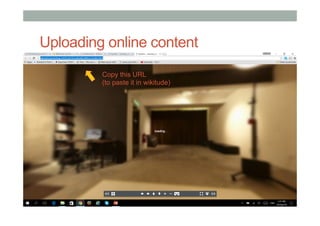

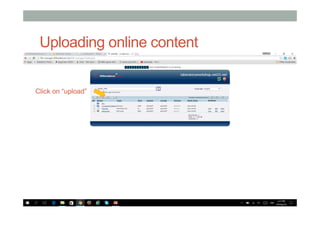

![Uploading online content

File Manager](https://image.slidesharecdn.com/arvrworkshop002finalnovideo-161017114931/85/AR-VR-Workshop-357-320.jpg)