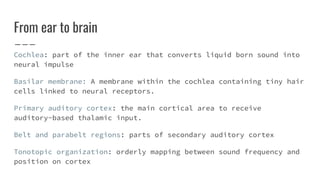

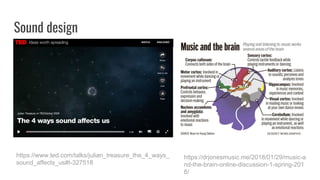

The document discusses the role of the hearing brain, which constructs an internal model of the world from sensory information and experience in order to interpret and act on sounds. The hearing brain extracts consistent features from varying sensory input and actively interprets what is heard. The document also describes how auditory information is processed from the cochlea to the primary and secondary auditory cortex and the specialized regions involved in voice perception, speech, music, and amusia.