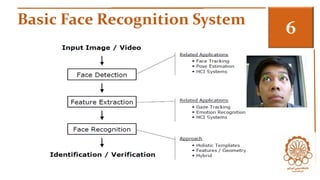

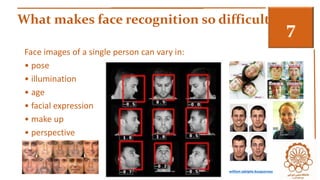

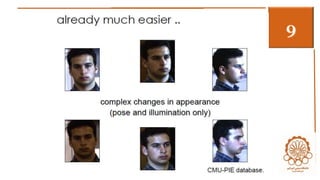

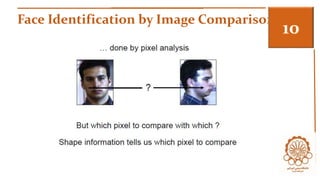

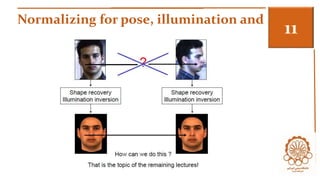

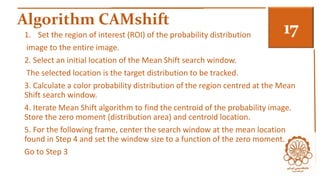

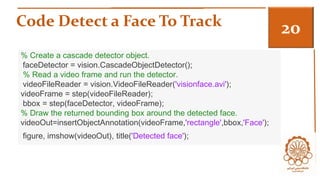

This document discusses face recognition and tracking using the CamShift algorithm. It begins by motivating face recognition and describing its applications. It then explains why the task is difficult: the same face can look very different depending on pose, illumination, expression, and other factors. Next it gives an overview of the mean shift algorithm and of CamShift (Continuously Adaptive Mean Shift). CamShift extends mean shift by continuously adapting to the changing color distribution between frames, resizing its search window as it goes. The document concludes with code that detects a face in a video, extracts the hue channel, and initializes a tracker on the detected nose region to follow the face with CamShift.
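The mean shift and CamShift ideas summarized above can be sketched in base MATLAB (no toolboxes). This is a minimal illustration under stated assumptions. It is not the deck's code or the Computer Vision Toolbox implementation. The probability image `P` is a hypothetical synthetic blob standing in for a hue back-projection, and the initial window is made up. The resize rule `s = 2*sqrt(M00)` is an assumed adaptation, for probabilities in [0, 1], of the `2*sqrt(M00/256)` heuristic commonly cited from Bradski's CamShift paper for 8-bit probabilities. Window orientation, which CamShift also derives from second moments, is omitted.

```matlab
% Hypothetical back-projected probability image: a 20x20 blob of
% probability 1 (rows 51:70, columns 61:80) on a zero background.
P = zeros(120, 160);
P(51:70, 61:80) = 1;

% Hypothetical initial search window [x y width height], offset from the blob.
win = [40 35 30 30];

% Mean shift: repeatedly move the window onto the centroid of the
% probability mass it covers, until the window stops moving.
for iter = 1:20
    x1 = max(win(1), 1);  x2 = min(win(1) + win(3) - 1, size(P, 2));
    y1 = max(win(2), 1);  y2 = min(win(2) + win(4) - 1, size(P, 1));
    roi = P(y1:y2, x1:x2);
    [cols, rows] = meshgrid(x1:x2, y1:y2);   % pixel coordinates inside the window
    M00 = sum(roi(:));                        % zeroth moment (total mass)
    if M00 == 0
        break;                                % window has lost the target
    end
    xc = sum(cols(:) .* roi(:)) / M00;        % first moments / M00 = centroid
    yc = sum(rows(:) .* roi(:)) / M00;
    newWin = [round(xc - win(3)/2), round(yc - win(4)/2), win(3), win(4)];
    if isequal(newWin, win)
        break;                                % converged
    end
    win = newWin;
end

% CamShift addition: adapt the window size to the mass found
% (assumed rule s = 2*sqrt(M00) for probabilities in [0, 1]).
if M00 > 0
    s = round(2 * sqrt(M00));
    win = [round(xc - s/2), round(yc - s/2), s, s];
end
```

With these values the window converges on the blob's centroid (column 70.5, row 60.5) after a few iterations. It is then resized to 40x40, twice the blob's side, which leaves room for the target to grow. In a video, both steps would be repeated on every frame, starting from the previous frame's window.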

![Step 2: Identify Facial Features To Track 3

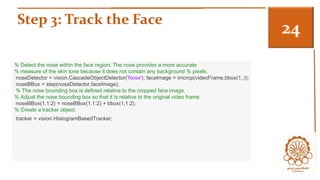

% Get the skin tone information by extracting the Hue from the video frame

% converted to the HSV color space.

[hueChannel,~,~] = rgb2hsv(videoFrame);

% Display the Hue Channel data and draw the bounding box around the

face.

figure, imshow(hueChannel), title('Hue channel data');

rectangle('Position',bbox(1,:),'LineWidth',2,'EdgeColor',[1 1 0])](https://image.slidesharecdn.com/camshaft-141124012331-conversion-gate02/85/Camshaft-23-320.jpg)
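The tracker in this walkthrough is initialized on the detected nose region. MATLAB's Computer Vision Toolbox provides `vision.HistogramBasedTracker` for this purpose (set up with `initializeObject`), a CAMShift-based tracker driven by a histogram of pixel values. The idea behind that initialization can be sketched in base MATLAB as hue-histogram back-projection. First, build a histogram of hue values inside the nose box. Then score every pixel of the frame by how common its hue was in that box. The hue image, the nose box `noseBBox`, and the bin count `nBins = 16` below are hypothetical values chosen for illustration. This is not the toolbox's internal implementation.

```matlab
% Hypothetical hue image in [0, 1]: background hue 0.6, with a 20x20 patch
% of "skin" hue 0.05 standing in for the face (rows 51:70, columns 61:80).
hueChannel = 0.6 * ones(120, 160);
hueChannel(51:70, 61:80) = 0.05;

% Hypothetical nose box [x y width height] inside the face patch.
noseBBox = [66 56 8 8];

% 1) Build a hue histogram from the nose pixels only.
nBins = 16;                                           % assumed bin count
roi   = hueChannel(noseBBox(2):noseBBox(2)+noseBBox(4)-1, ...
                   noseBBox(1):noseBBox(1)+noseBBox(3)-1);
binOf = @(h) min(floor(h * nBins) + 1, nBins);        % map hue 0..1 to bins 1..nBins
counts = accumarray(binOf(roi(:)), 1, [nBins 1]);     % pixel count per hue bin
model  = counts / max(counts);                        % peak bin -> probability 1

% 2) Back-project: replace every pixel by the model value of its hue bin.
probImage = reshape(model(binOf(hueChannel(:))), size(hueChannel));
```

The resulting `probImage` is the kind of probability map that the mean shift and CamShift iterations climb. Using the nose rather than the whole face box keeps the histogram to skin pixels only, so background hues do not leak into the model.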