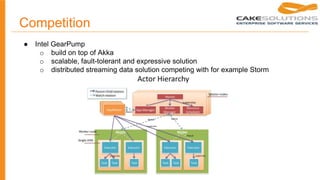

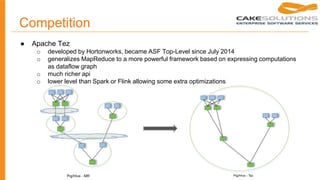

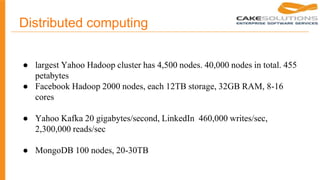

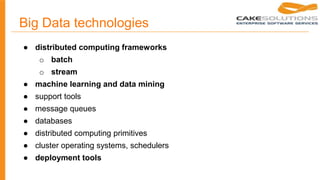

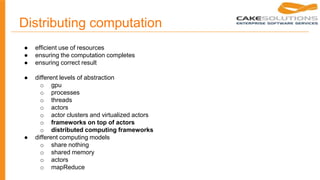

This document provides a history and market overview of Apache Spark. It opens with the motivation for distributed data processing: growing data volume, velocity, and variety. It then gives brief histories of the Google File System, MapReduce, BigTable, and related technologies, and explains Hadoop and MapReduce. Apache Spark, which keeps data in memory, is introduced as a faster alternative to MapReduce. Competitors such as Flink, Tez, and Storm are also mentioned.

![Hadoop MapReduce](https://image.slidesharecdn.com/5yxgrg1jrz6lxwzbgfla-signature-421b897b13ea623f41b057c1ac2699238676e8758bcde0f2856d11e5209bf267-poli-150329133648-conversion-gate01/85/Apache-spark-History-and-market-overview-17-320.jpg)

```java
import java.io.IOException;
import java.util.Iterator;
import java.util.StringTokenizer;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.*;

public class WordCount {

    // Mapper: emits (word, 1) for every whitespace-separated token in a line.
    public static class Map extends MapReduceBase implements Mapper<LongWritable, Text, Text, IntWritable> {
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(LongWritable key, Text value, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
            String line = value.toString();
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                word.set(tokenizer.nextToken());
                output.collect(word, one);
            }
        }
    }

    // Reducer: sums the counts collected for each word.
    public static class Reduce extends MapReduceBase implements Reducer<Text, IntWritable, Text, IntWritable> {
        public void reduce(Text key, Iterator<IntWritable> values, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
            int sum = 0;
            while (values.hasNext()) {
                sum += values.next().get();
            }
            output.collect(key, new IntWritable(sum));
        }
    }

    // Driver: args[0] is the input path, args[1] is the output path.
    public static void main(String[] args) throws Exception {
        JobConf conf = new JobConf(WordCount.class);
        conf.setJobName("wordcount");

        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(IntWritable.class);

        conf.setMapperClass(Map.class);
        // The reducer doubles as a combiner because summing is associative.
        conf.setCombinerClass(Reduce.class);
        conf.setReducerClass(Reduce.class);

        conf.setInputFormat(TextInputFormat.class);
        conf.setOutputFormat(TextOutputFormat.class);

        FileInputFormat.setInputPaths(conf, new Path(args[0]));
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));

        JobClient.runJob(conf);
    }
}
```
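To make the data flow of the WordCount job above easier to follow, here is a minimal sketch that imitates its three stages (map, shuffle, reduce) in plain Java on a single machine, without Hadoop. The class name `WordCountSketch` and the helpers `mapLine`, `shuffle`, `reduceValues`, and `countWords` are hypothetical names chosen for this illustration; they are not part of the Hadoop API, and the sketch ignores everything a real cluster adds (input splits, combiners, fault tolerance, HDFS).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical single-process imitation of the WordCount job; not Hadoop code.
class WordCountSketch {

    // Map stage: emit a (word, 1) pair for every whitespace-separated token.
    static List<Map.Entry<String, Integer>> mapLine(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            pairs.add(Map.entry(tokenizer.nextToken(), 1));
        }
        return pairs;
    }

    // Shuffle stage: group all emitted values by key (sorted by key here).
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce stage: sum the values collected for one key.
    static int reduceValues(List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs the three stages in order over the given input lines.
    static Map<String, Integer> countWords(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(mapLine(line));
        }
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            counts.put(group.getKey(), reduceValues(group.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // Prints {be=2, not=1, or=1, to=2}
        System.out.println(countWords(List.of("to be or not", "to be")));
    }
}
```

In the Hadoop version, the framework performs the shuffle between the `Map` and `Reduce` classes, and the intermediate pairs move between stages through disk and the network. In this sketch they are ordinary in-memory collections, which is only workable because the input is tiny.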