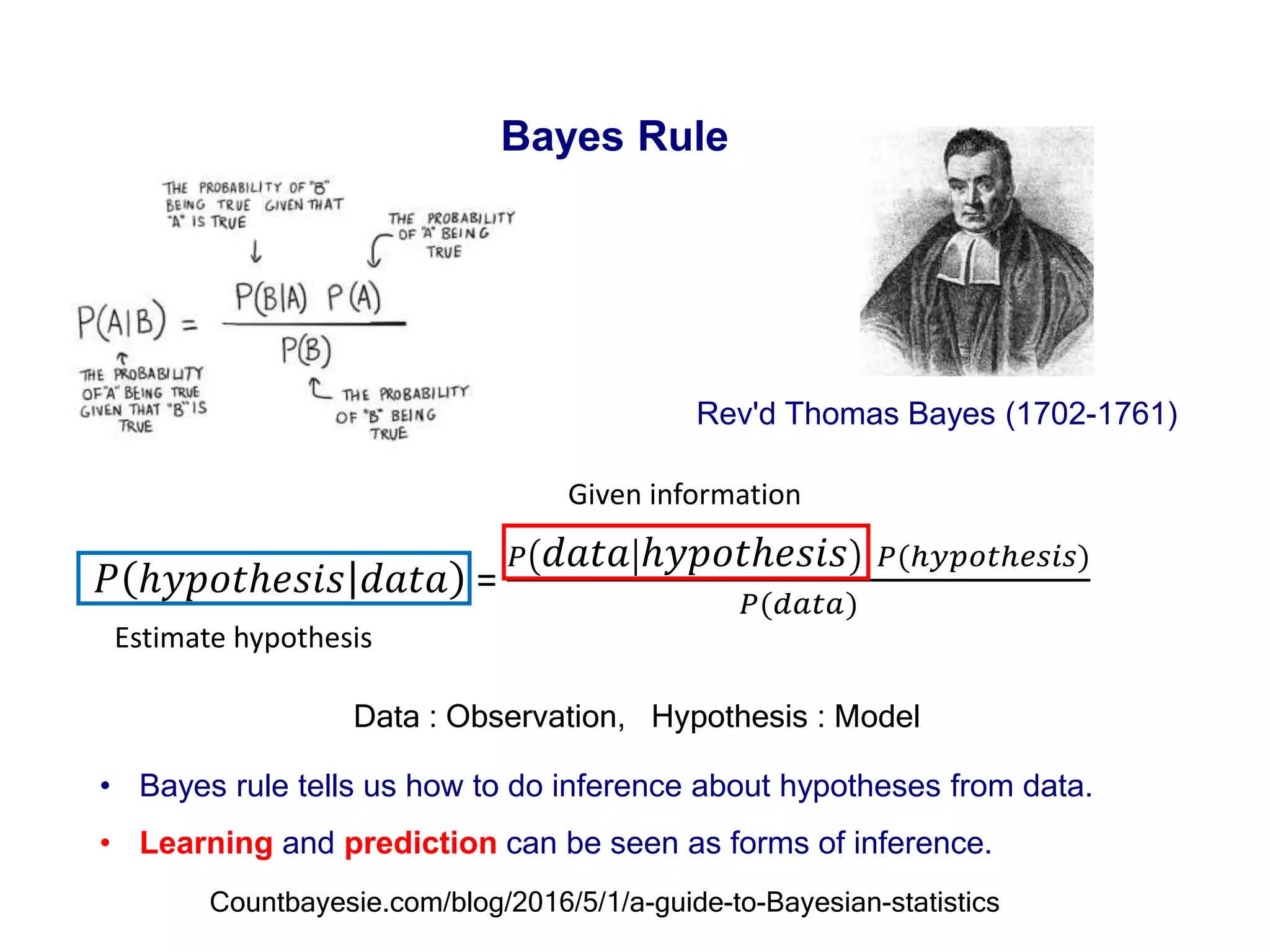

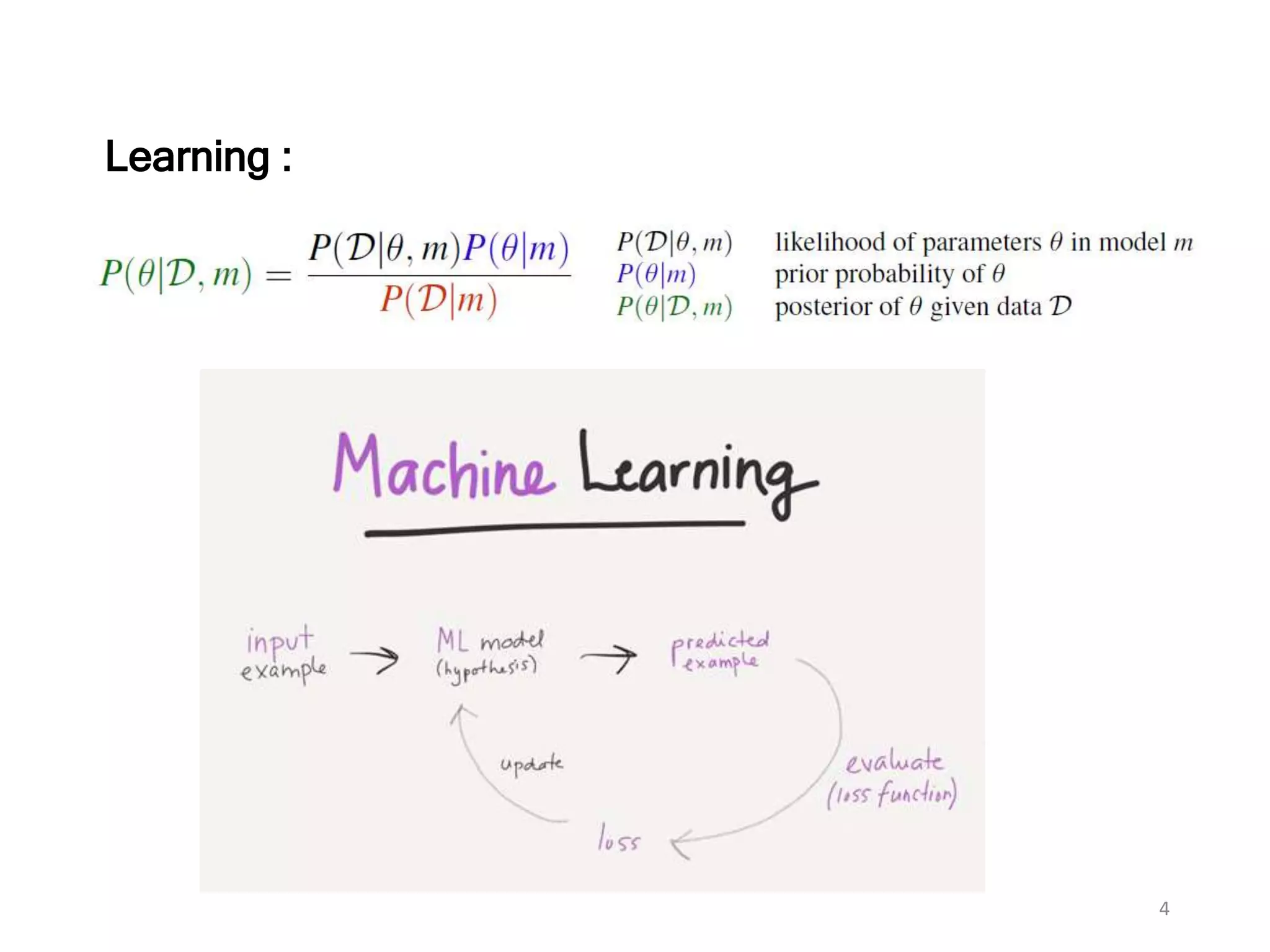

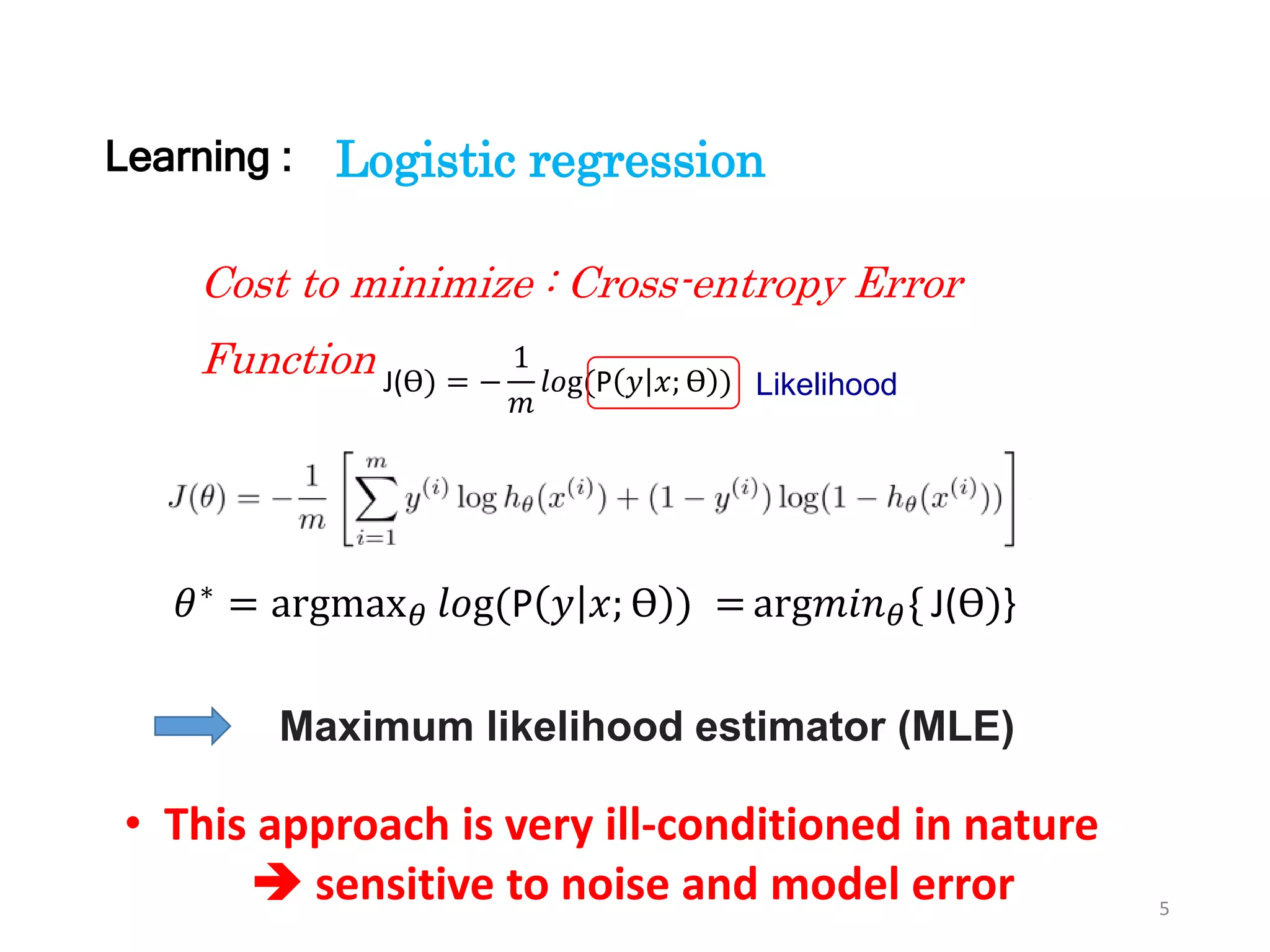

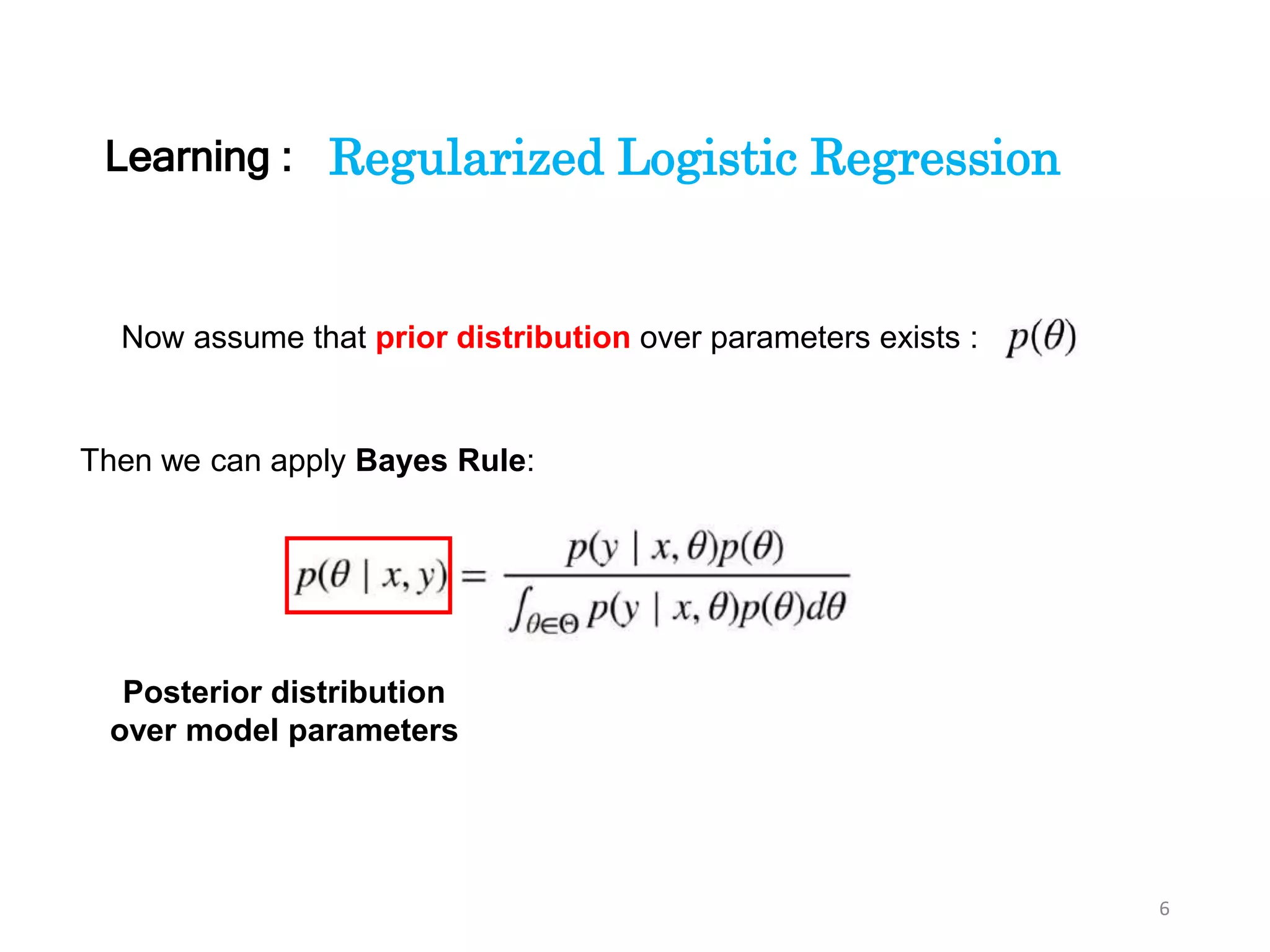

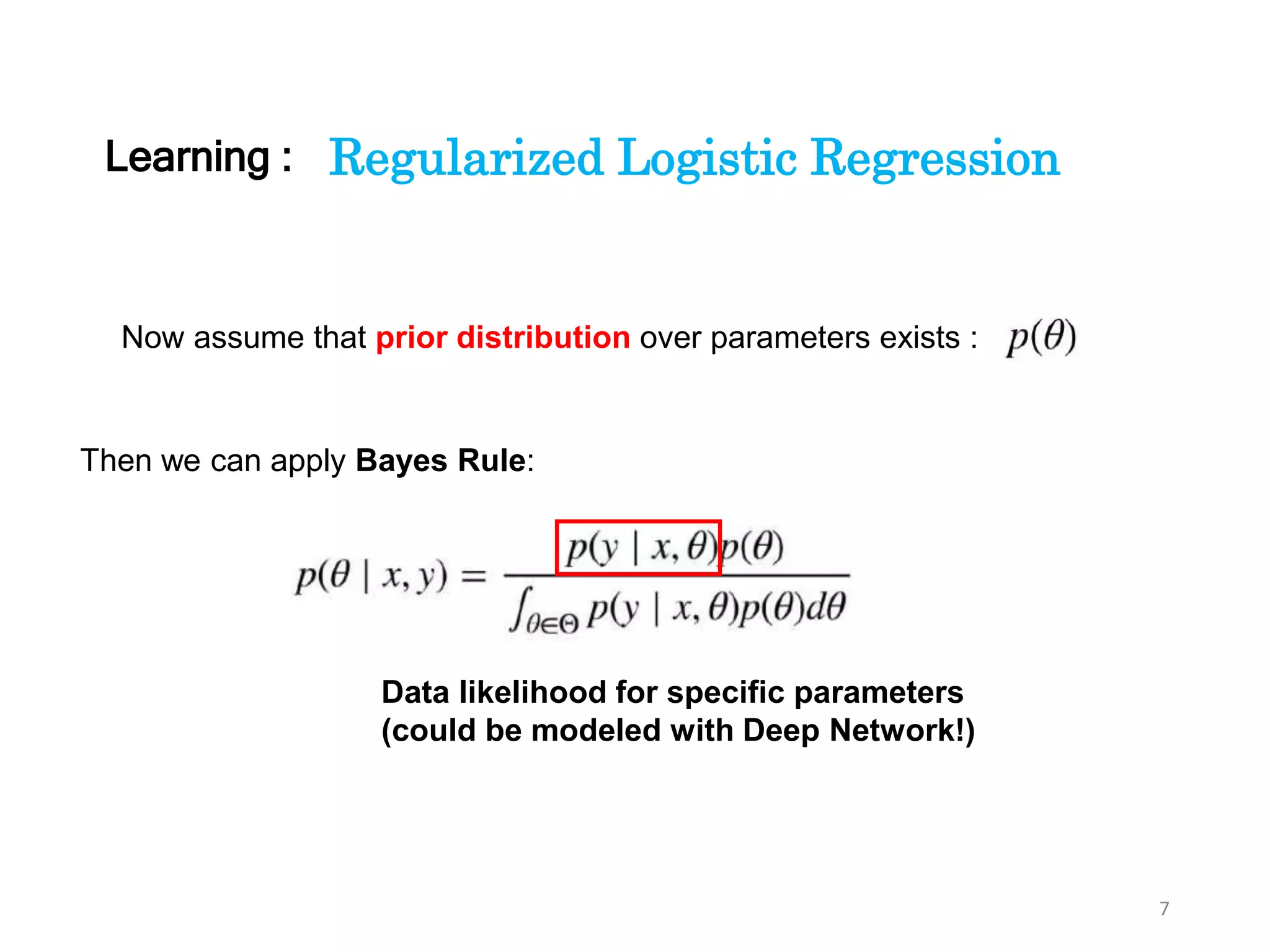

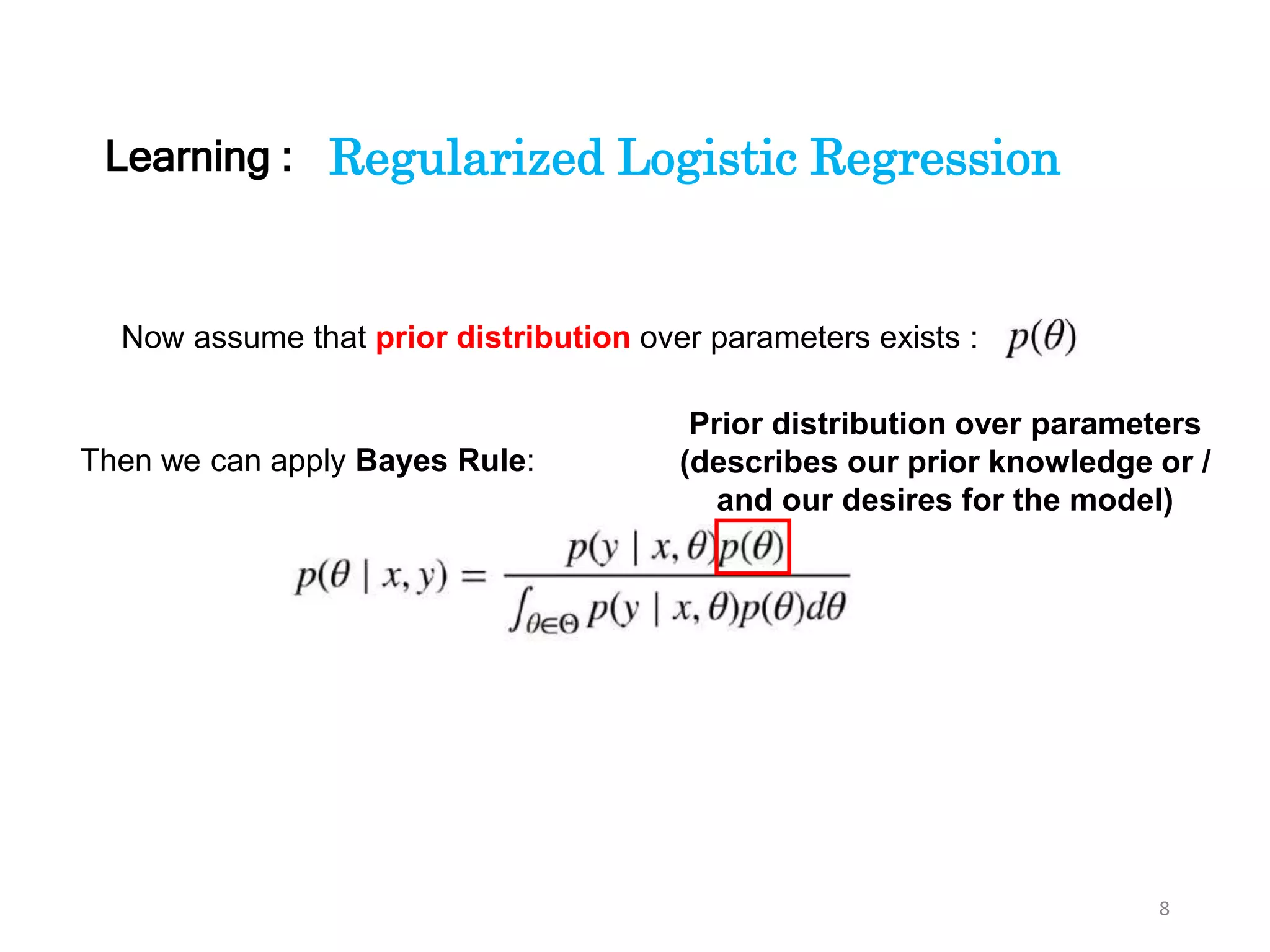

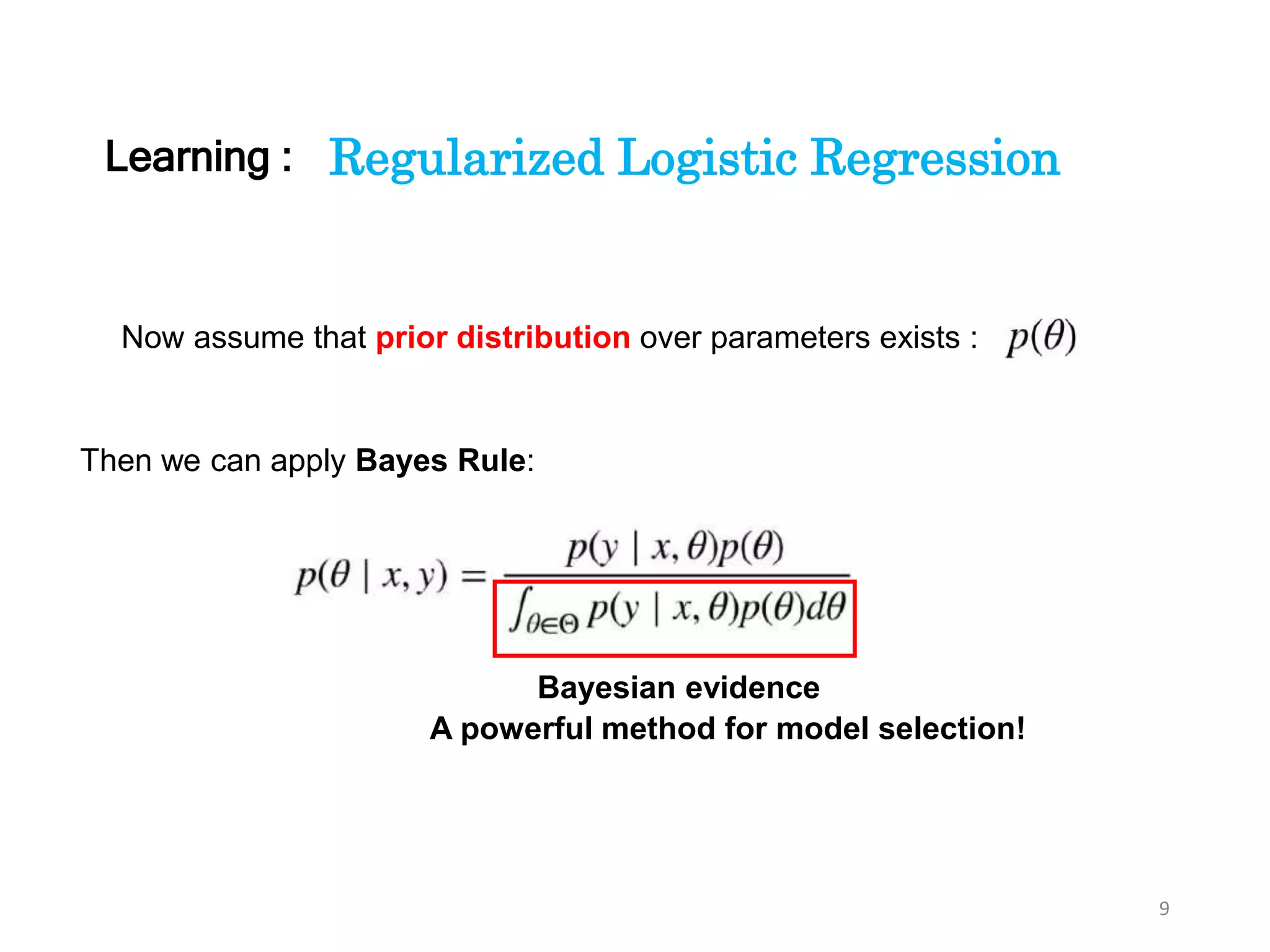

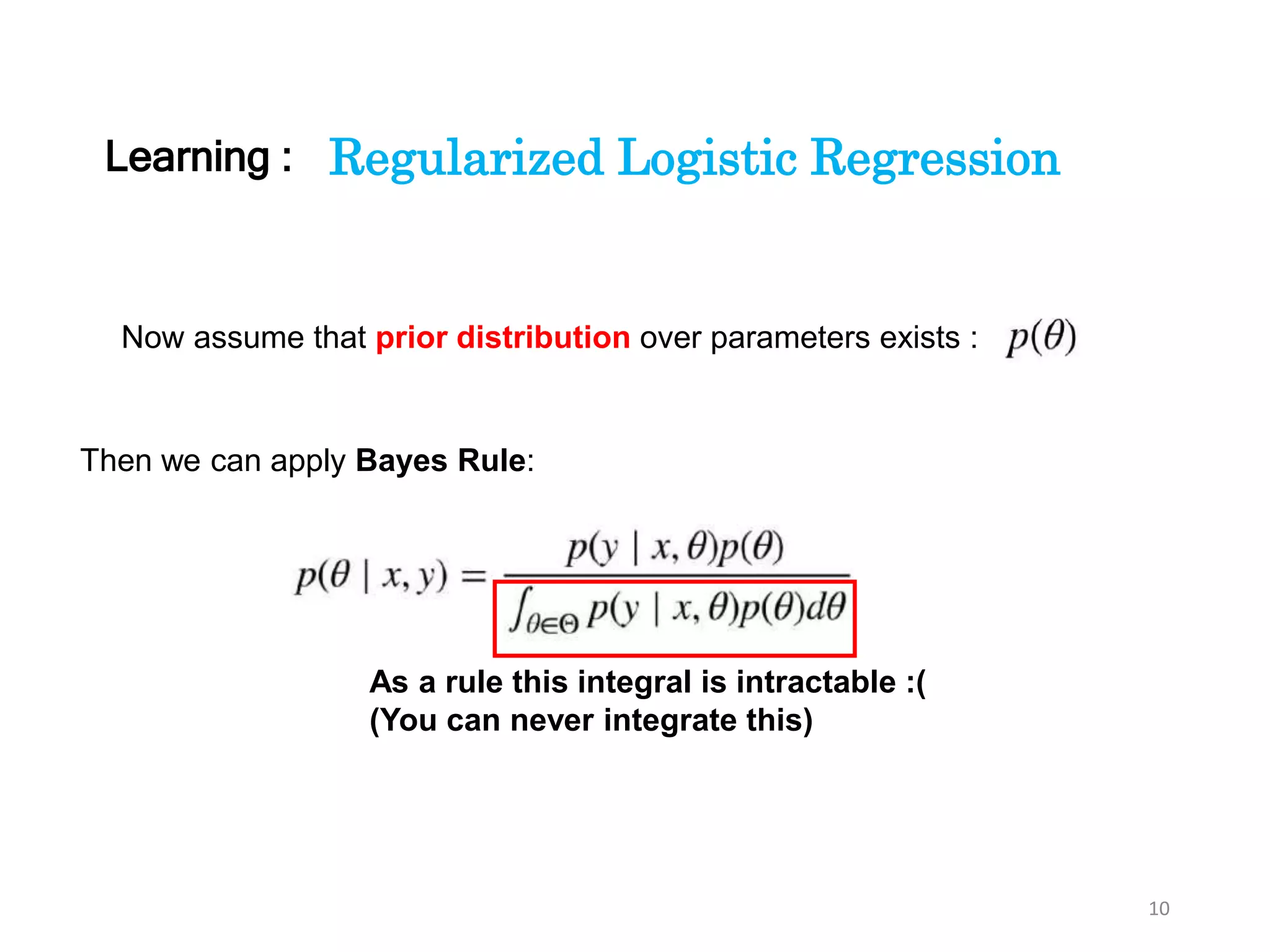

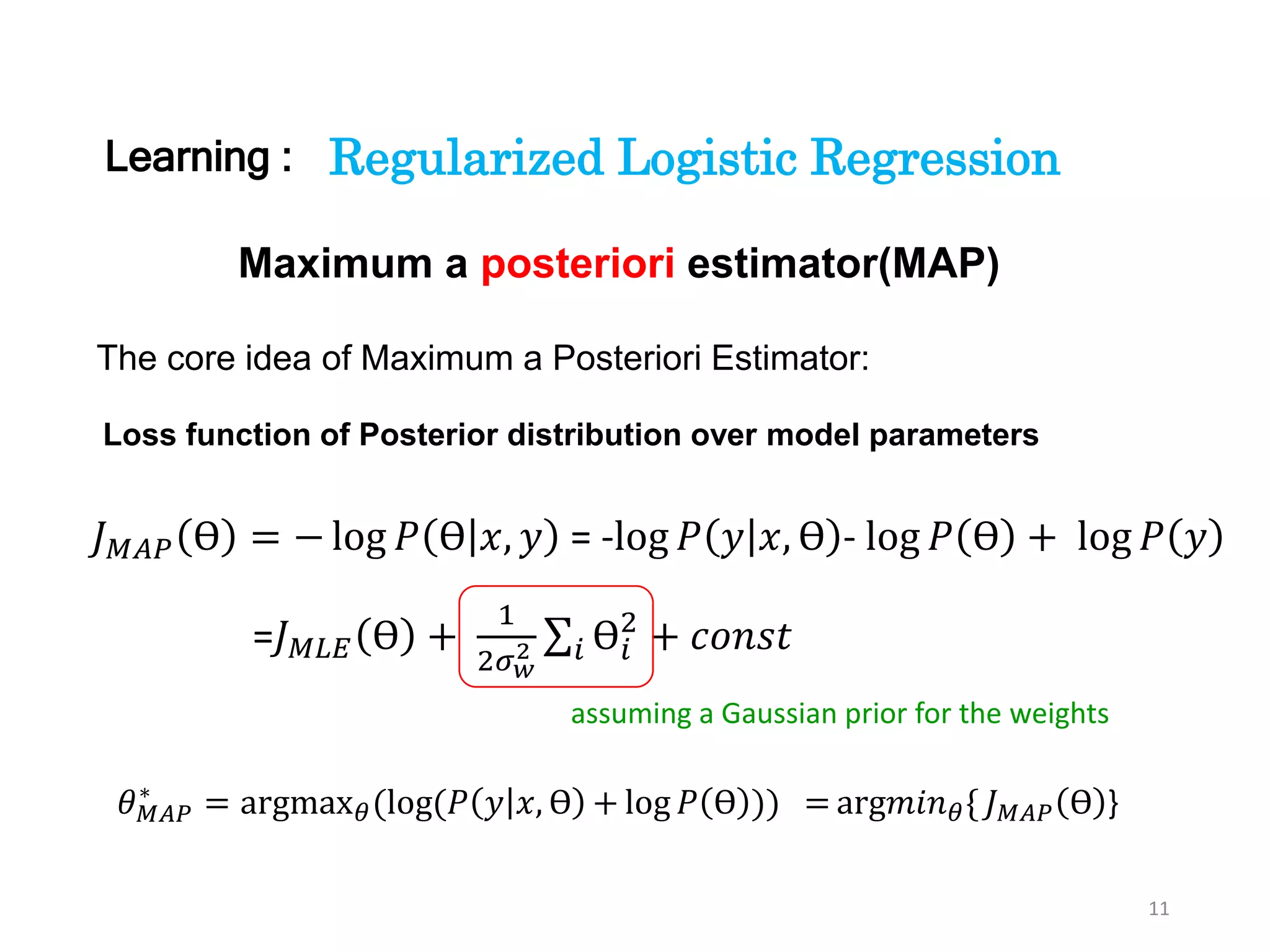

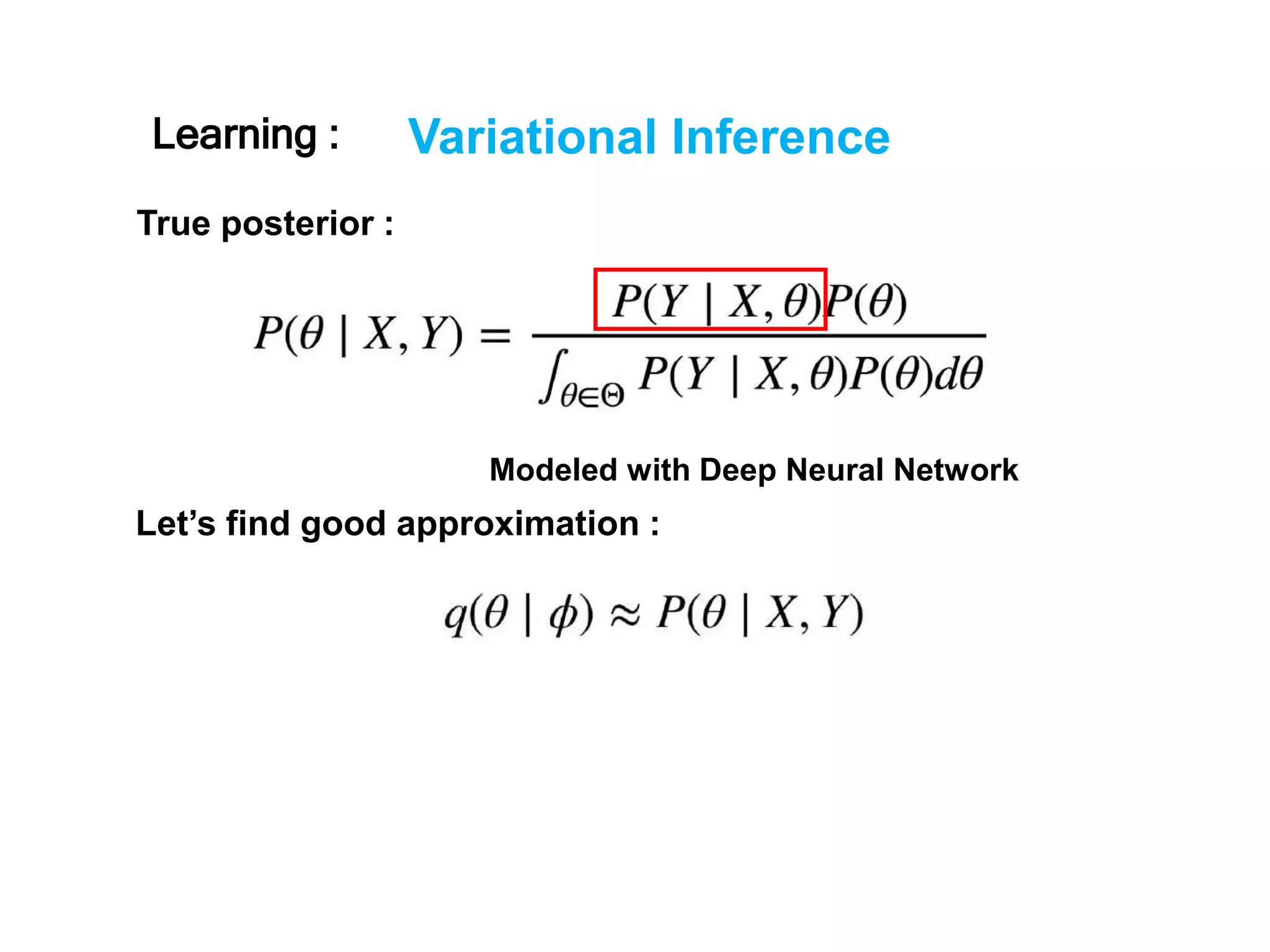

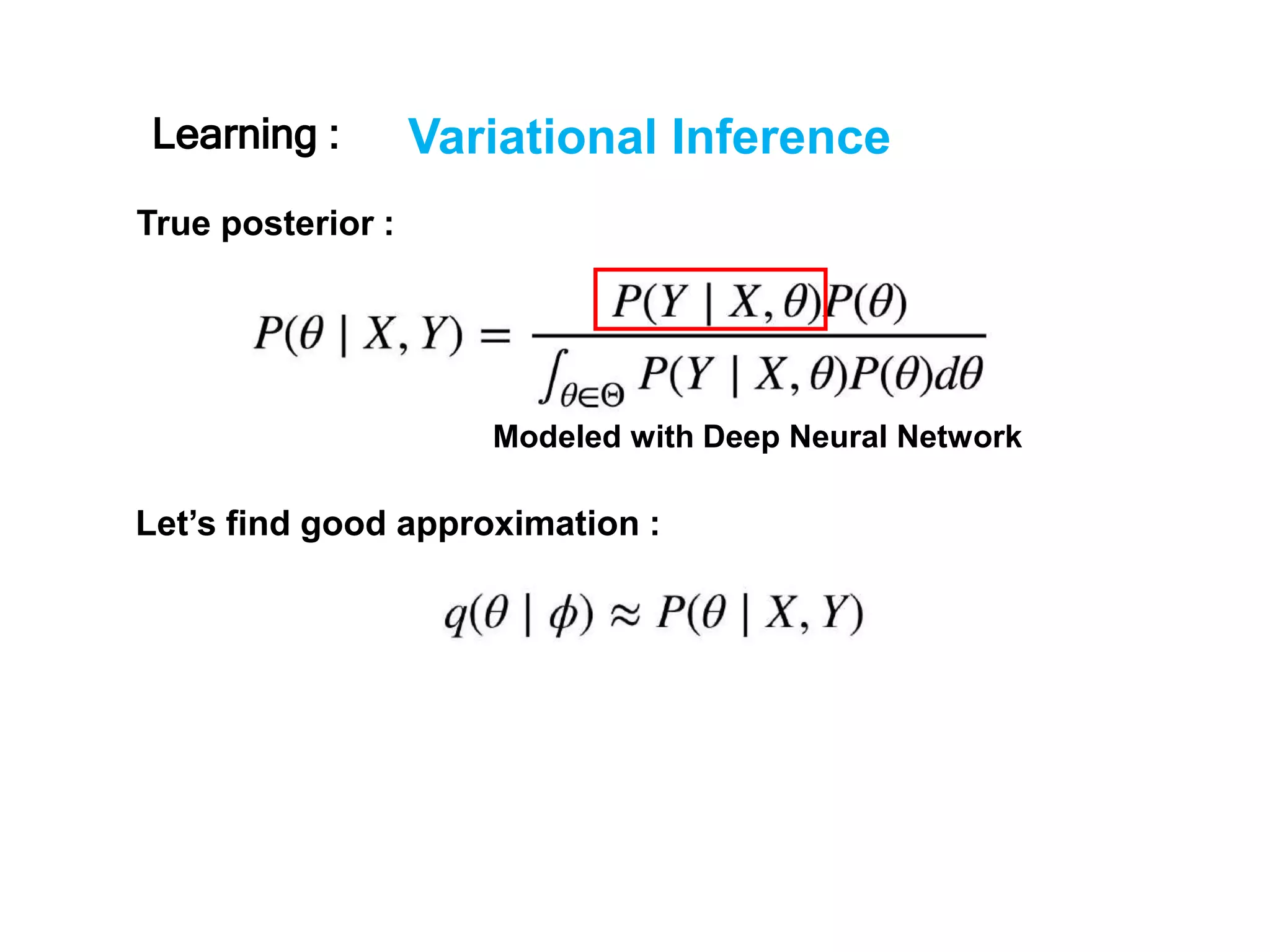

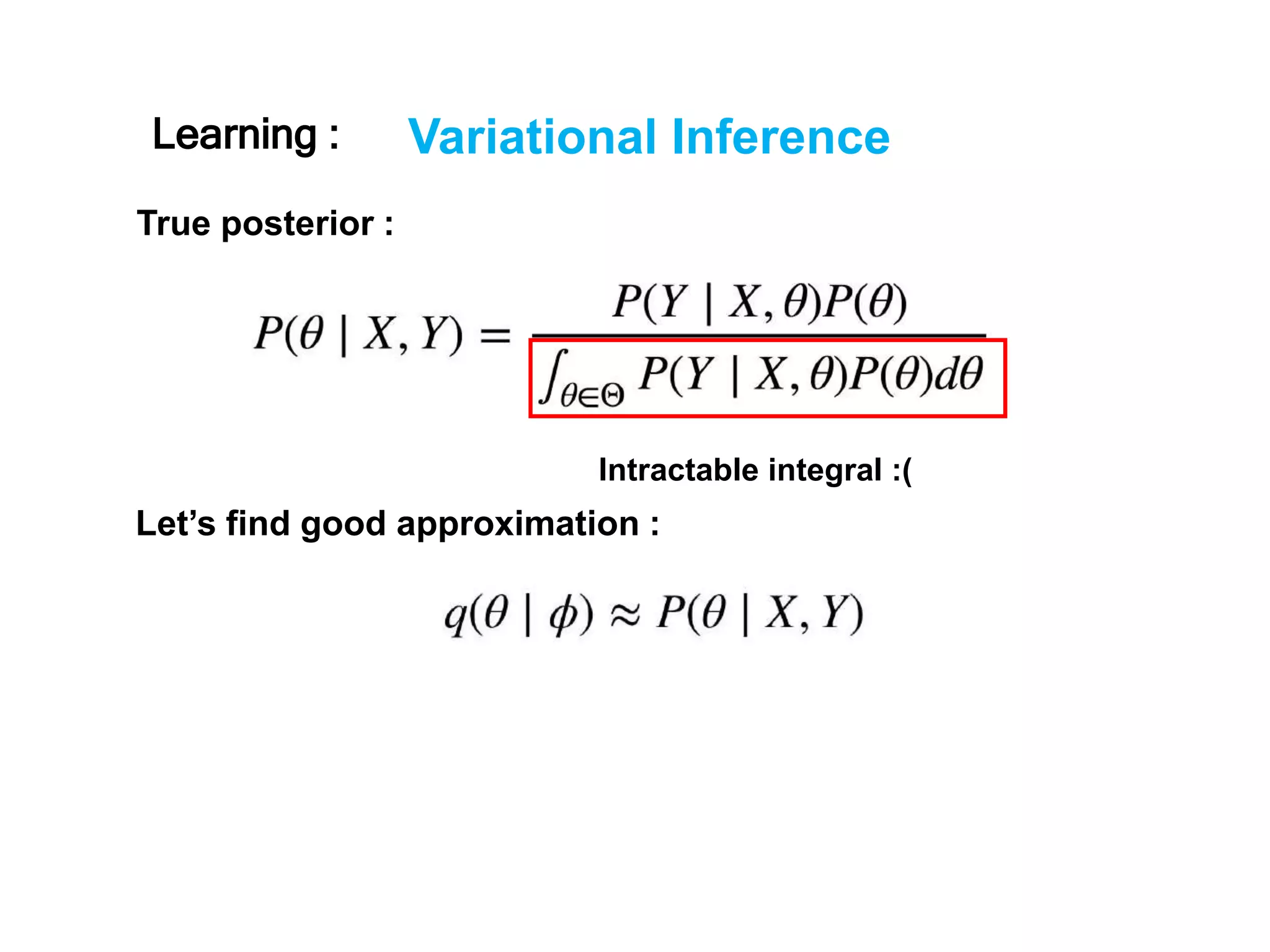

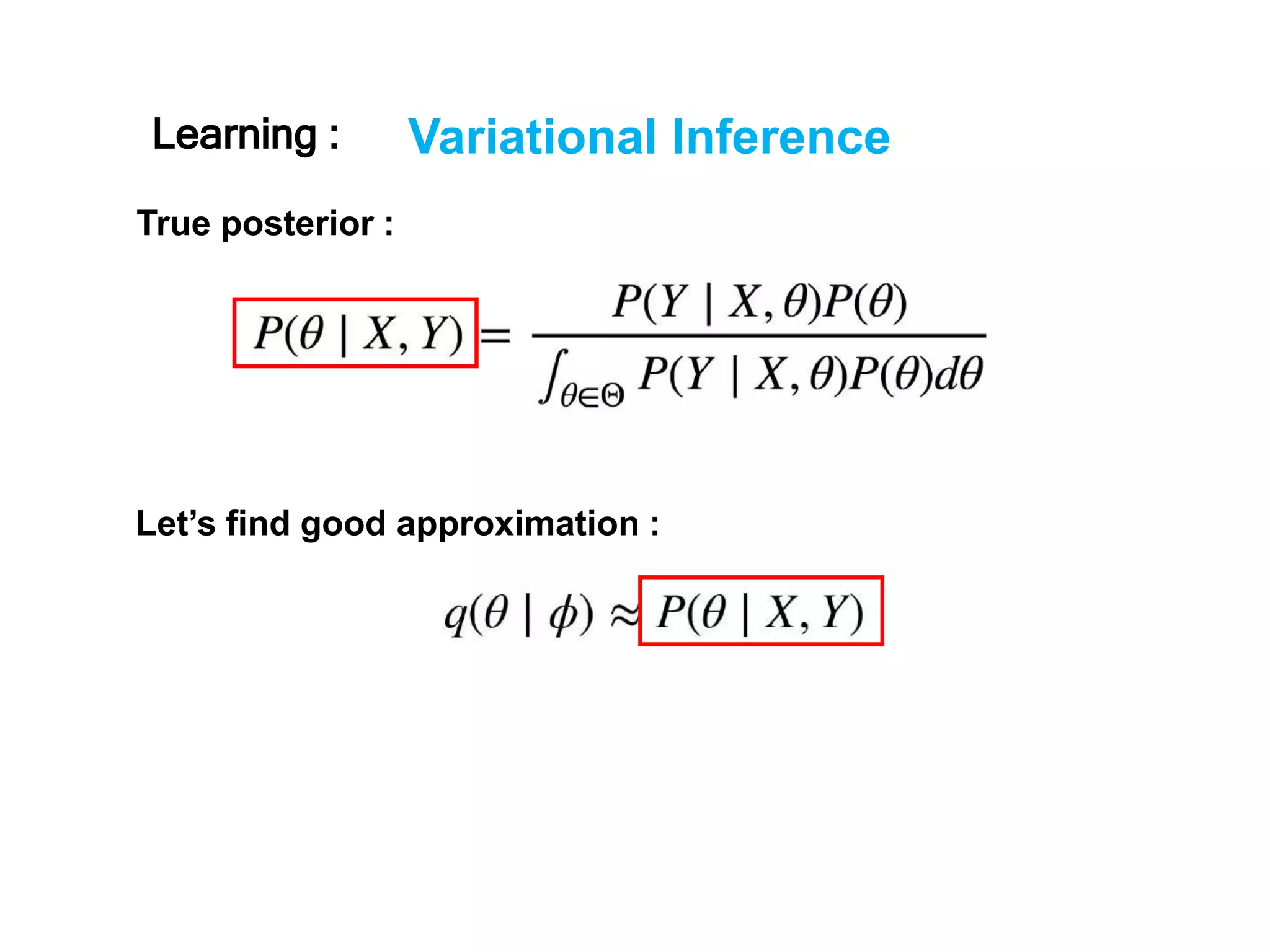

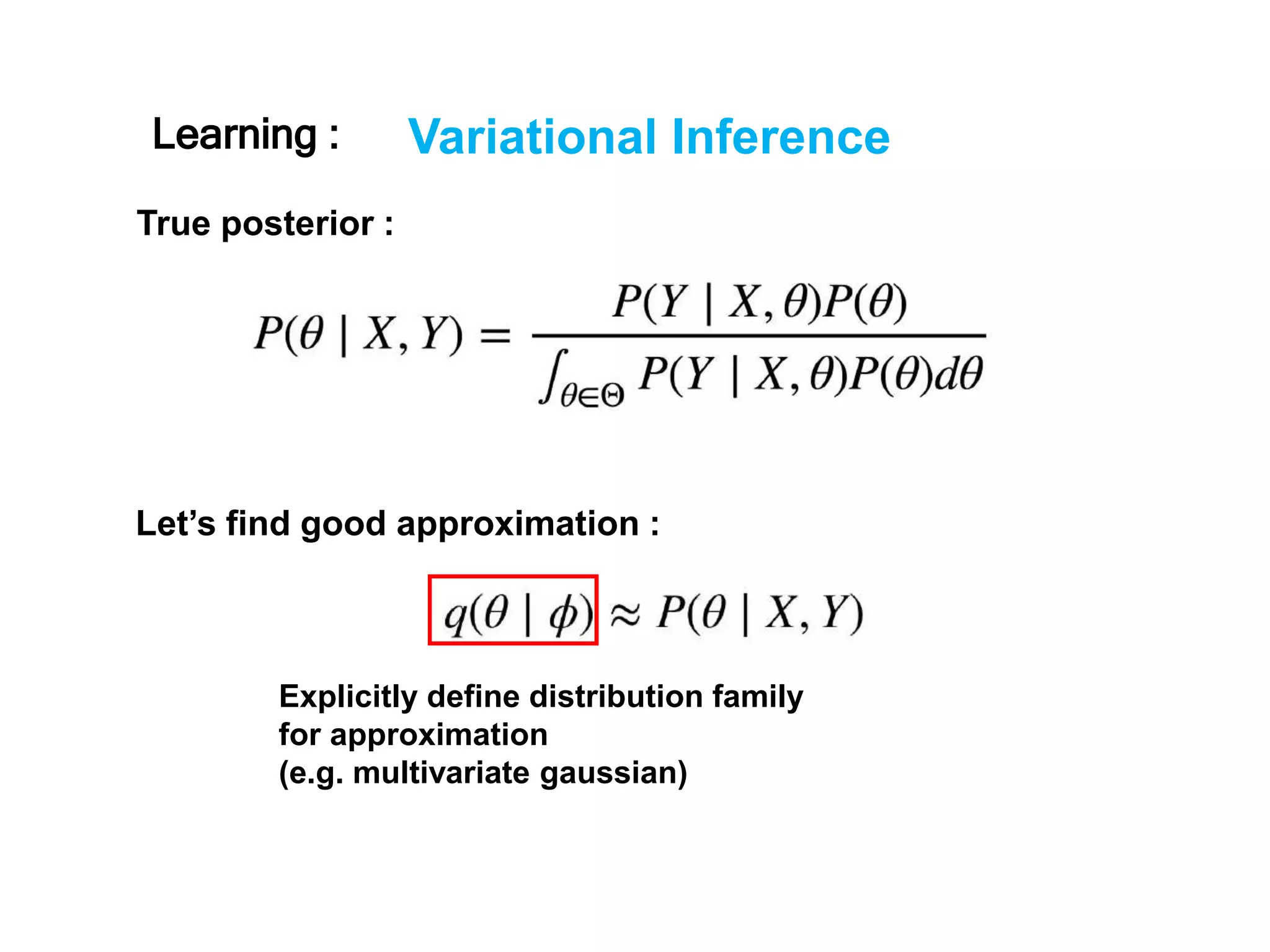

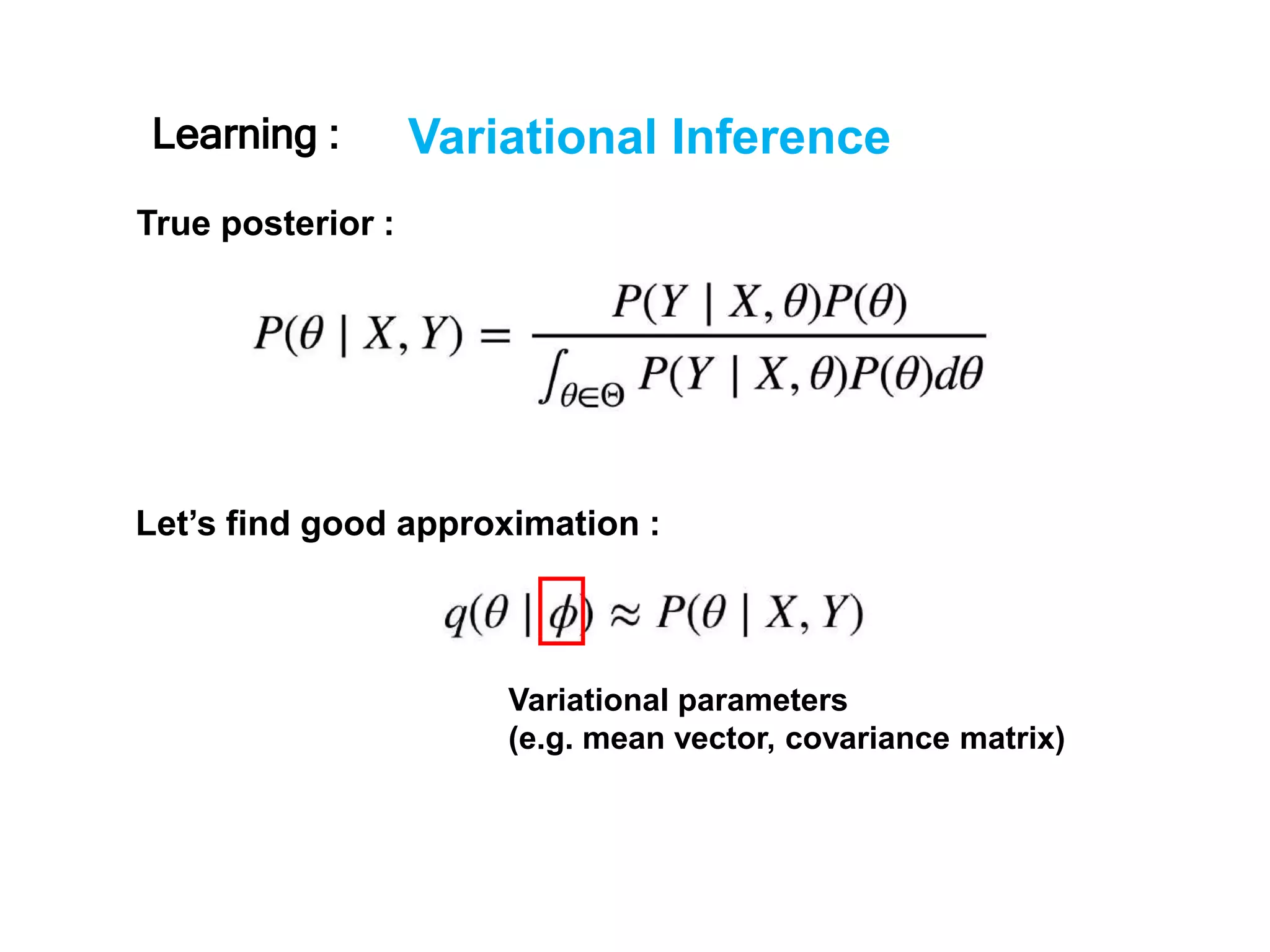

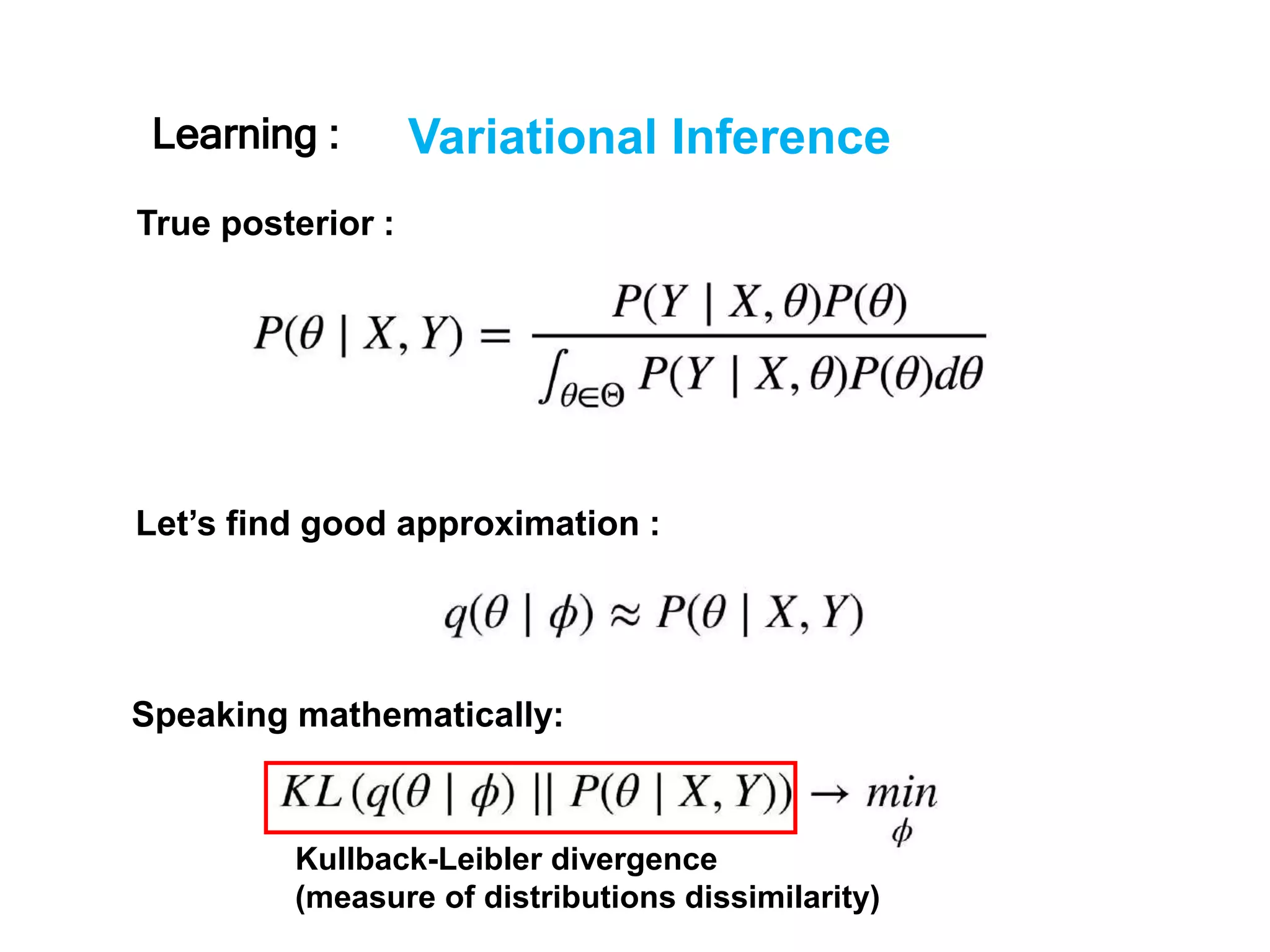

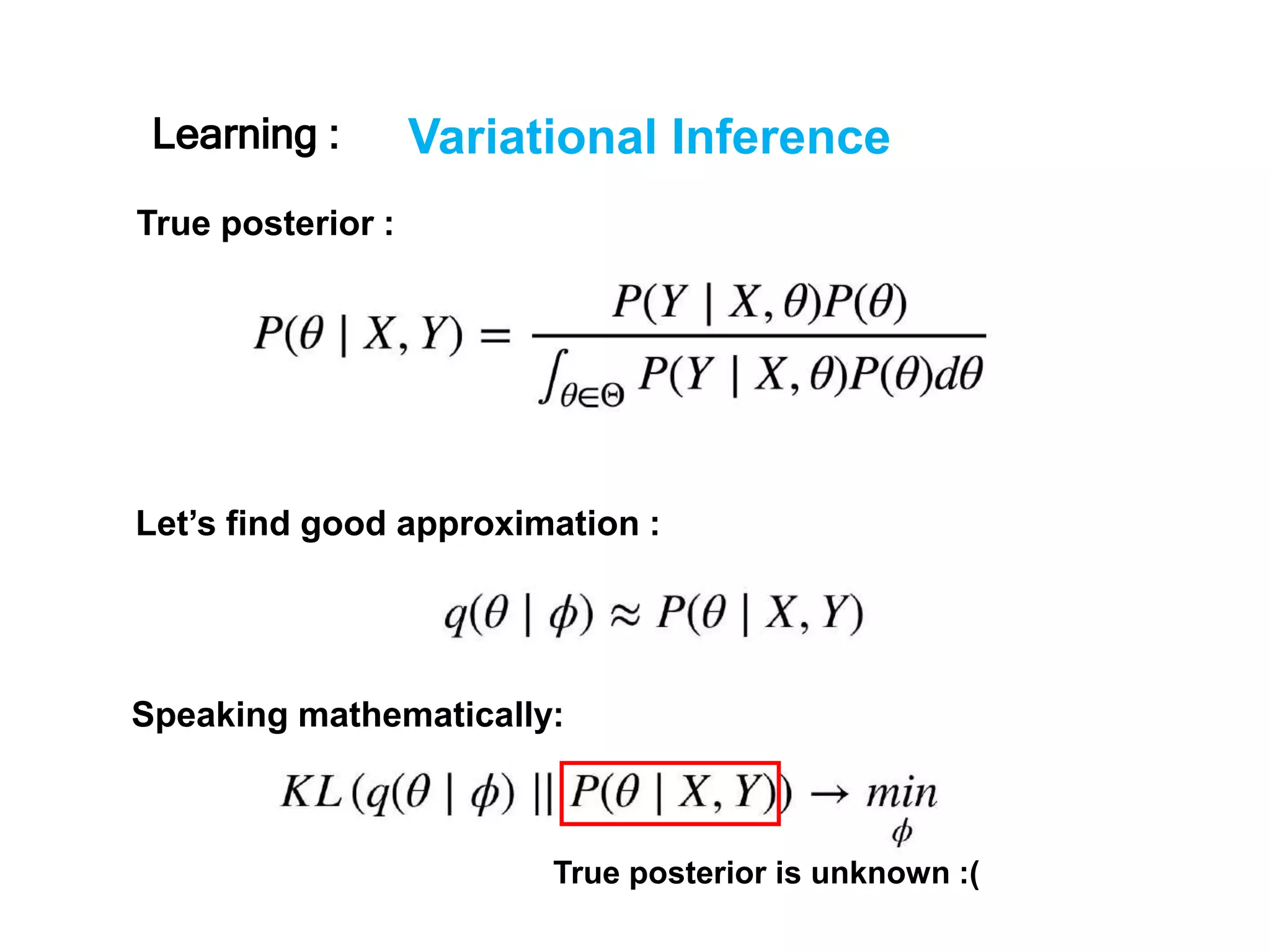

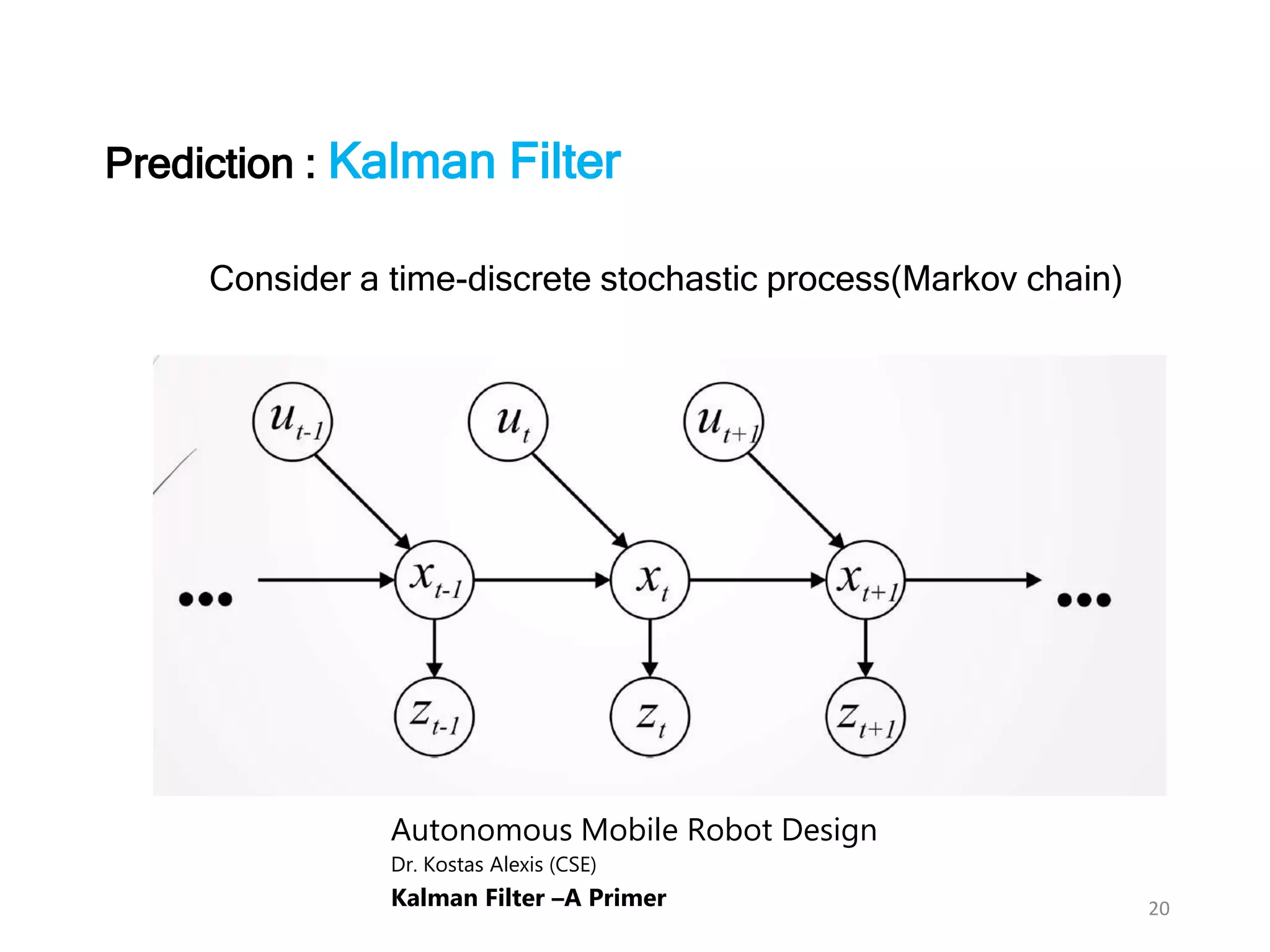

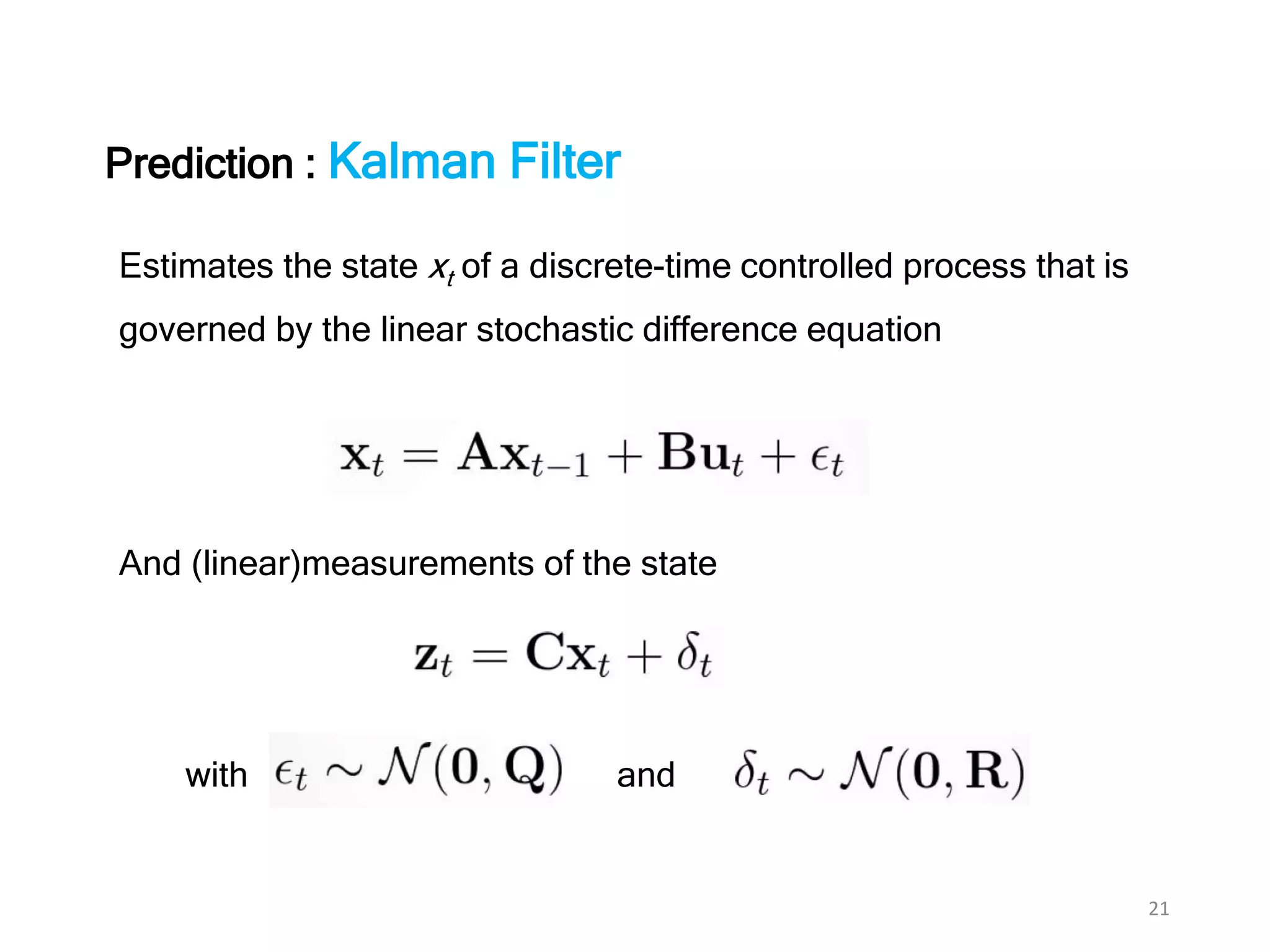

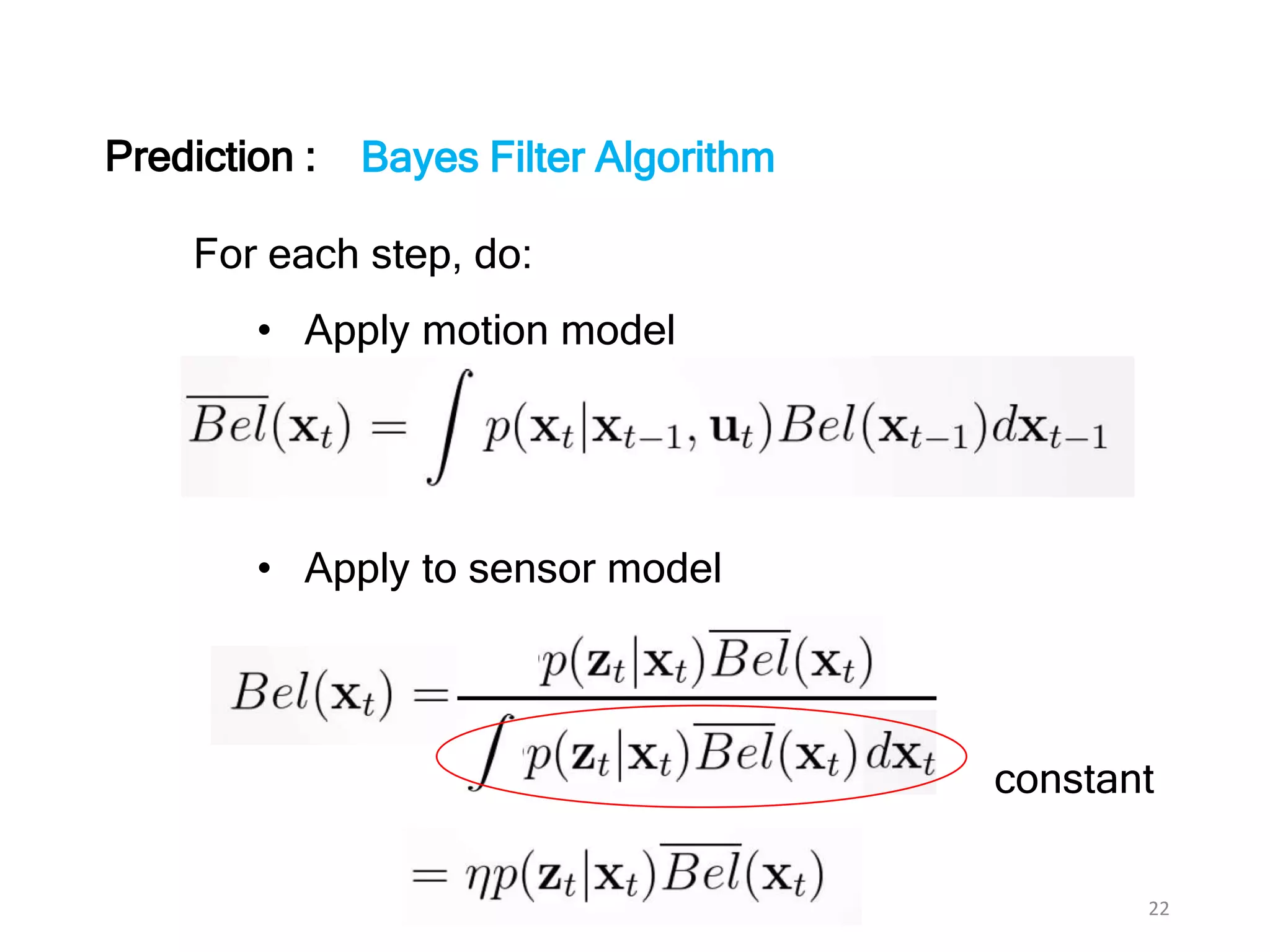

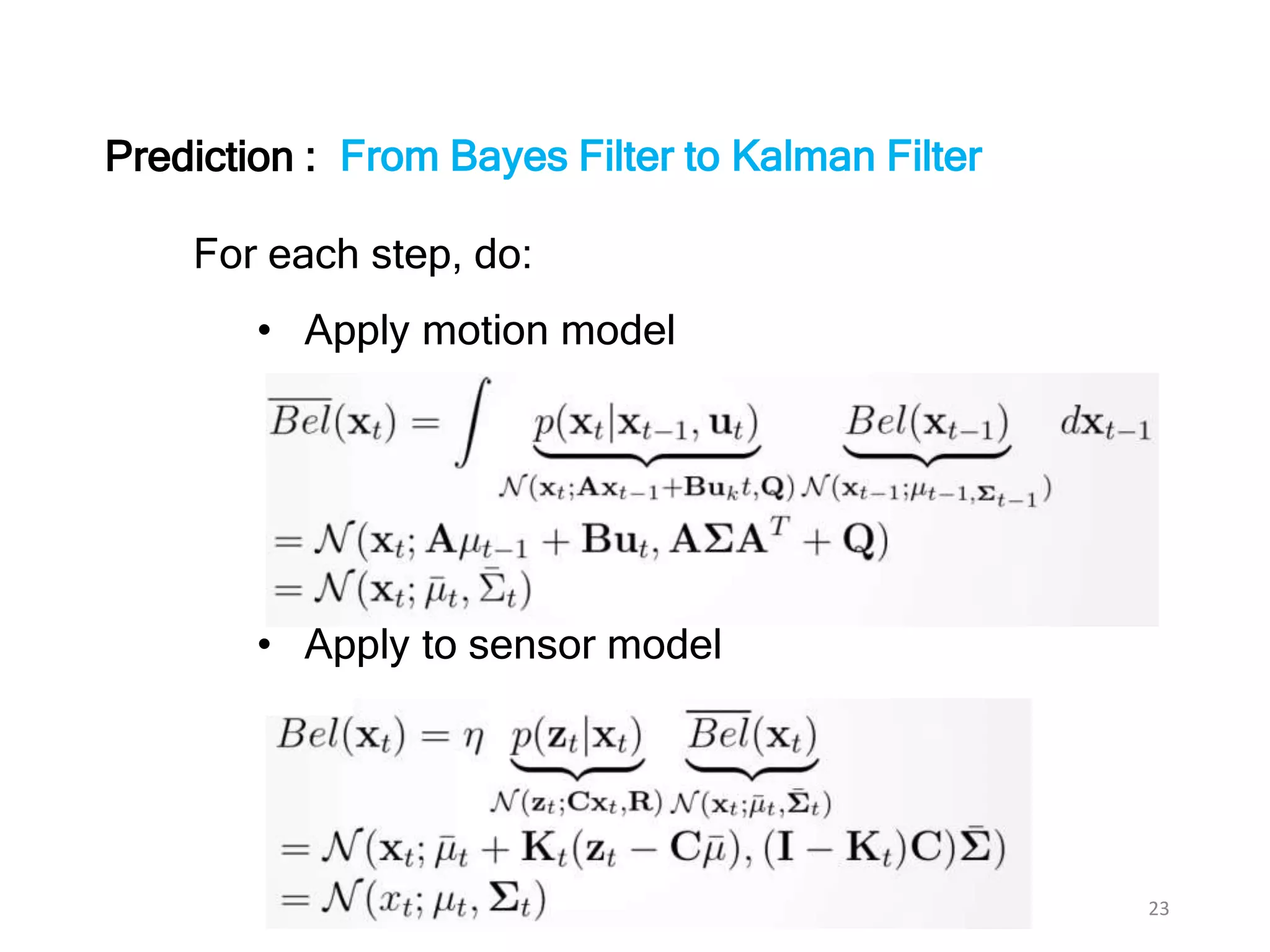

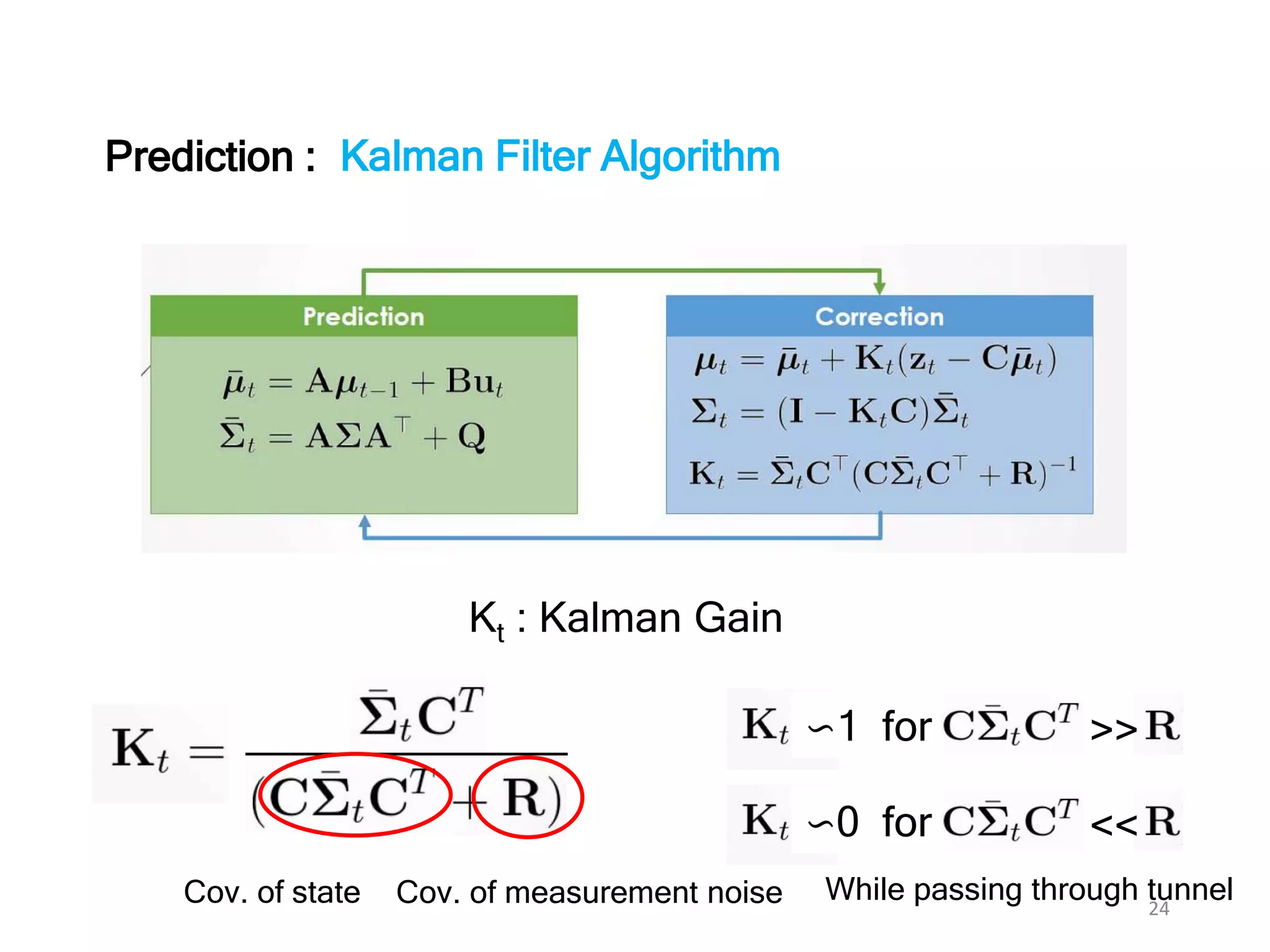

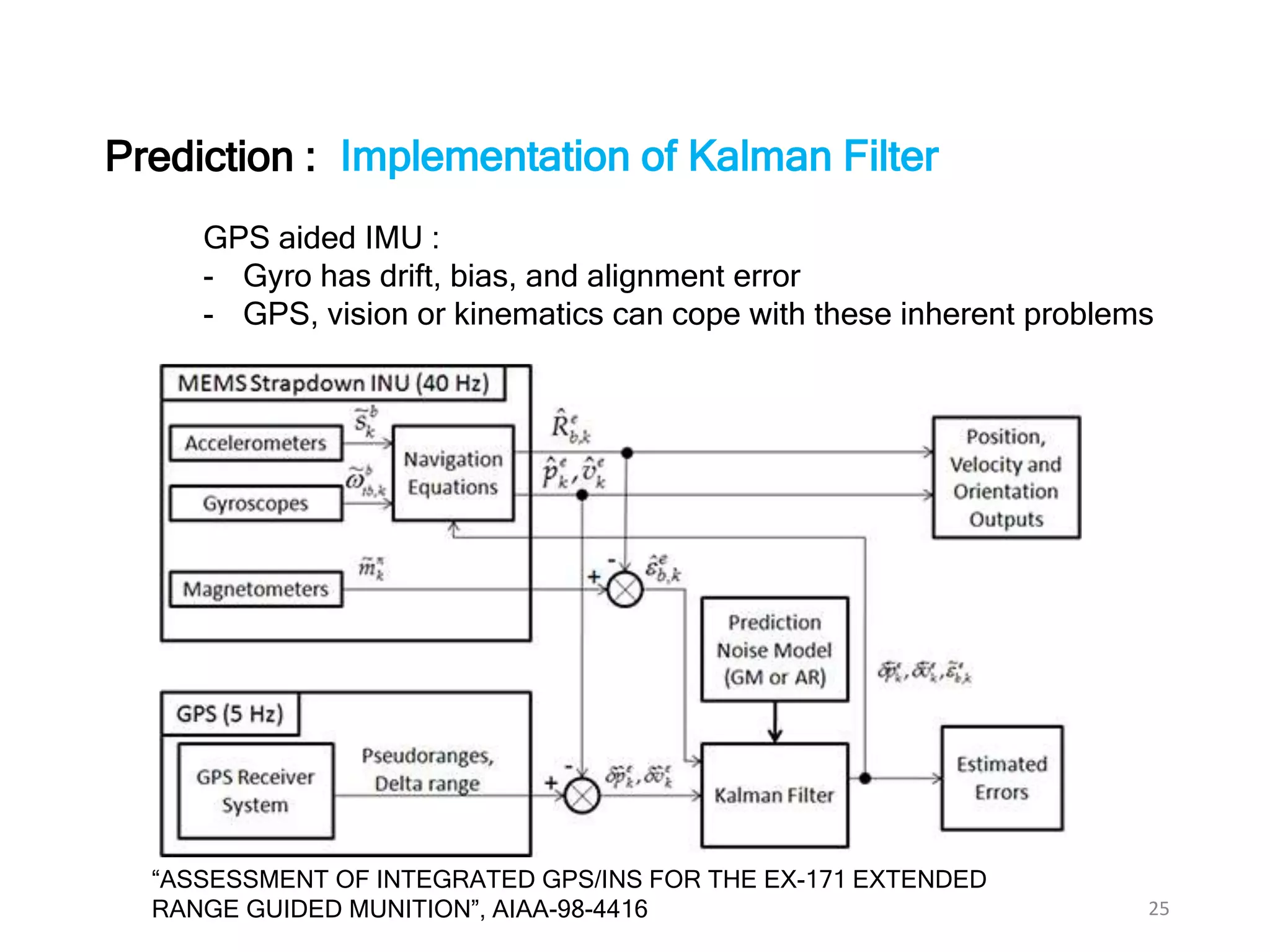

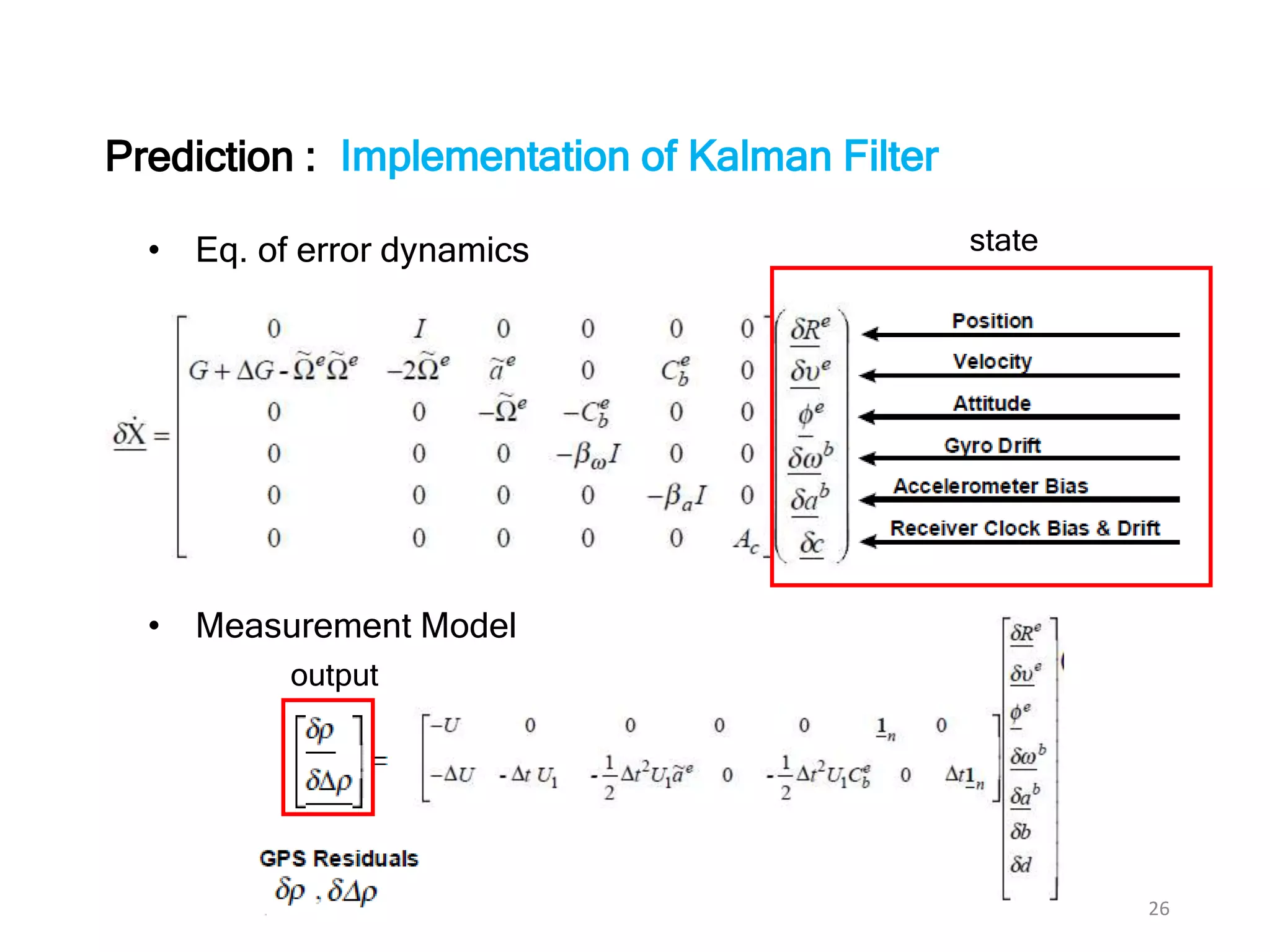

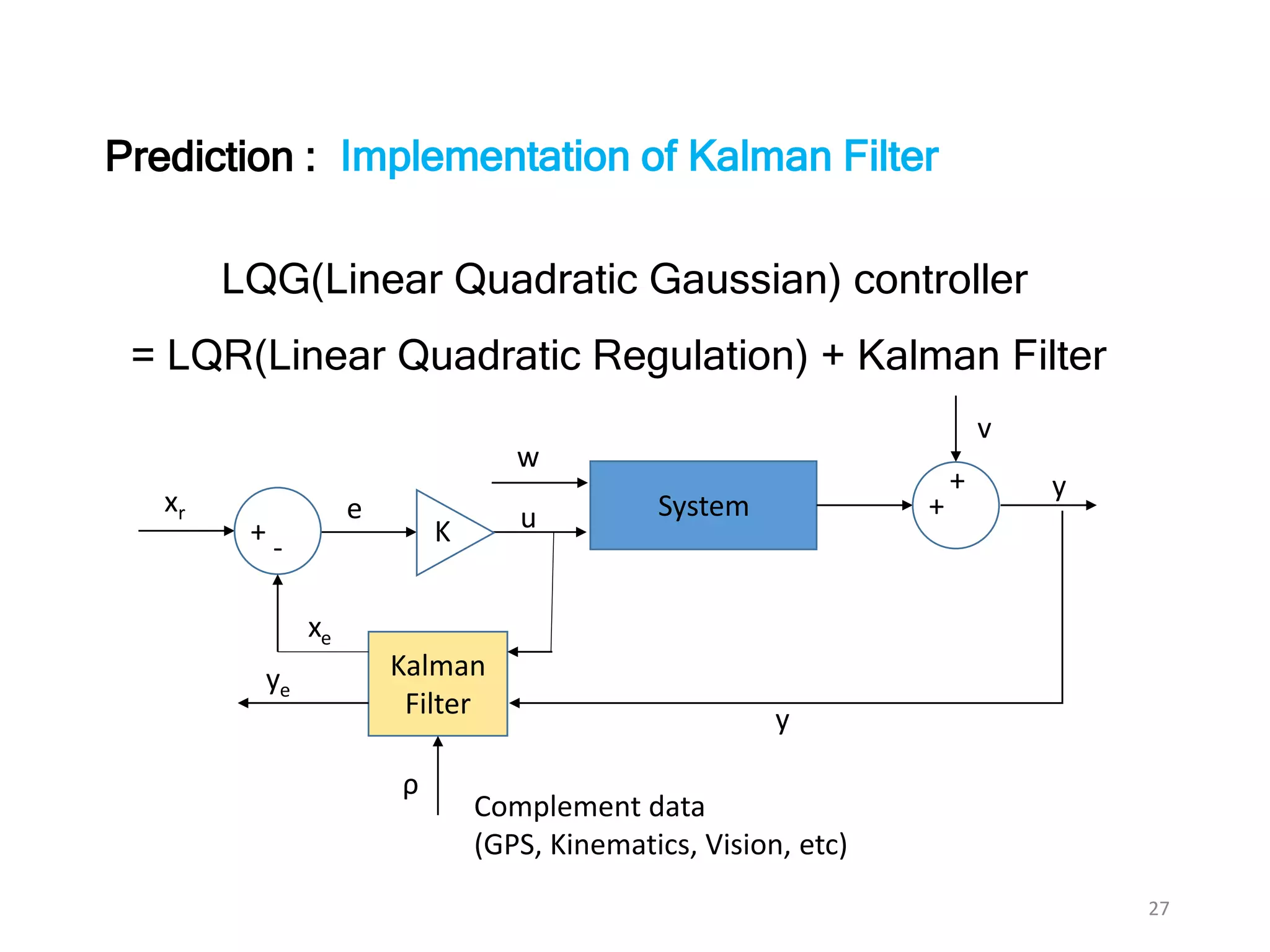

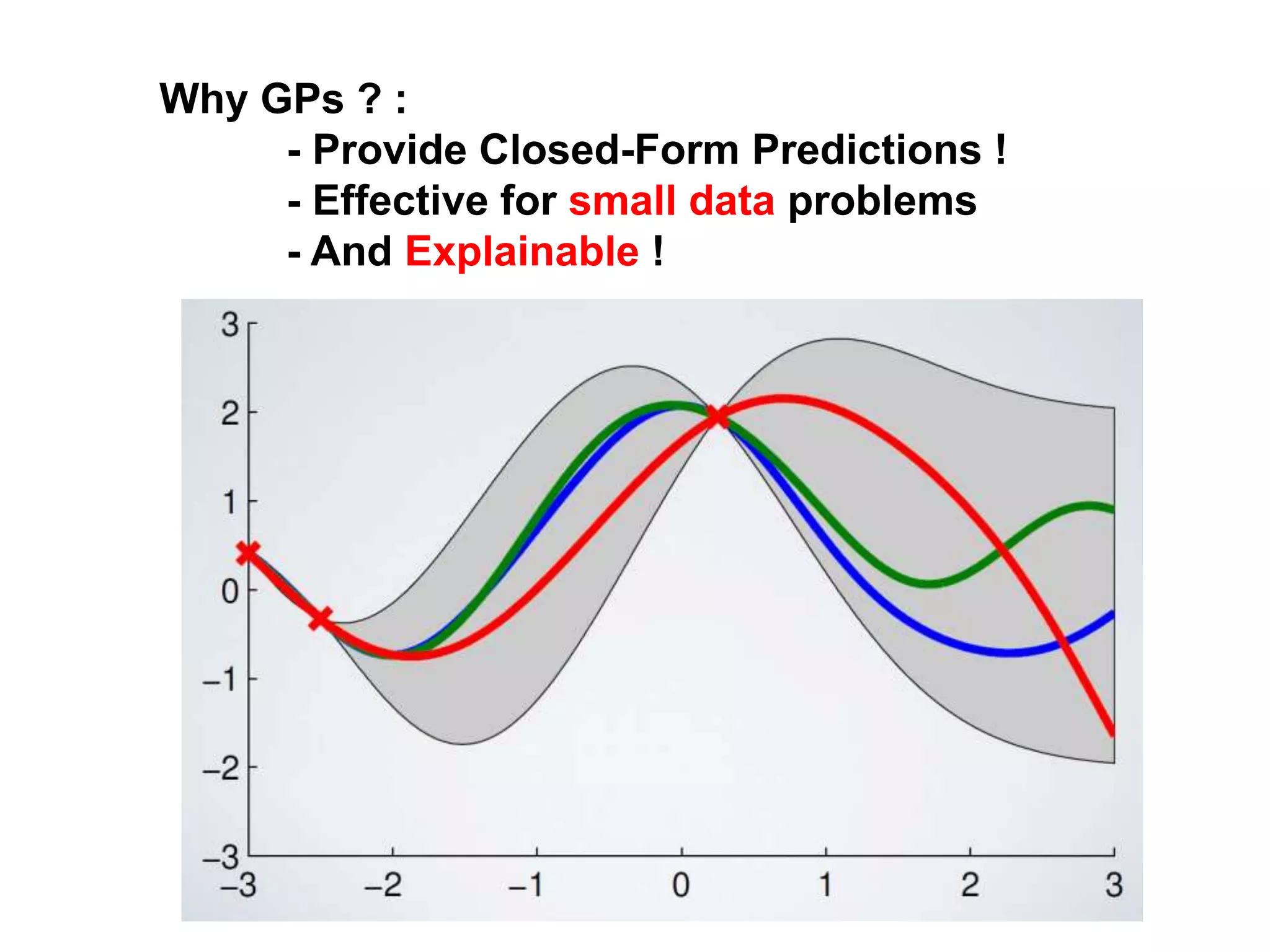

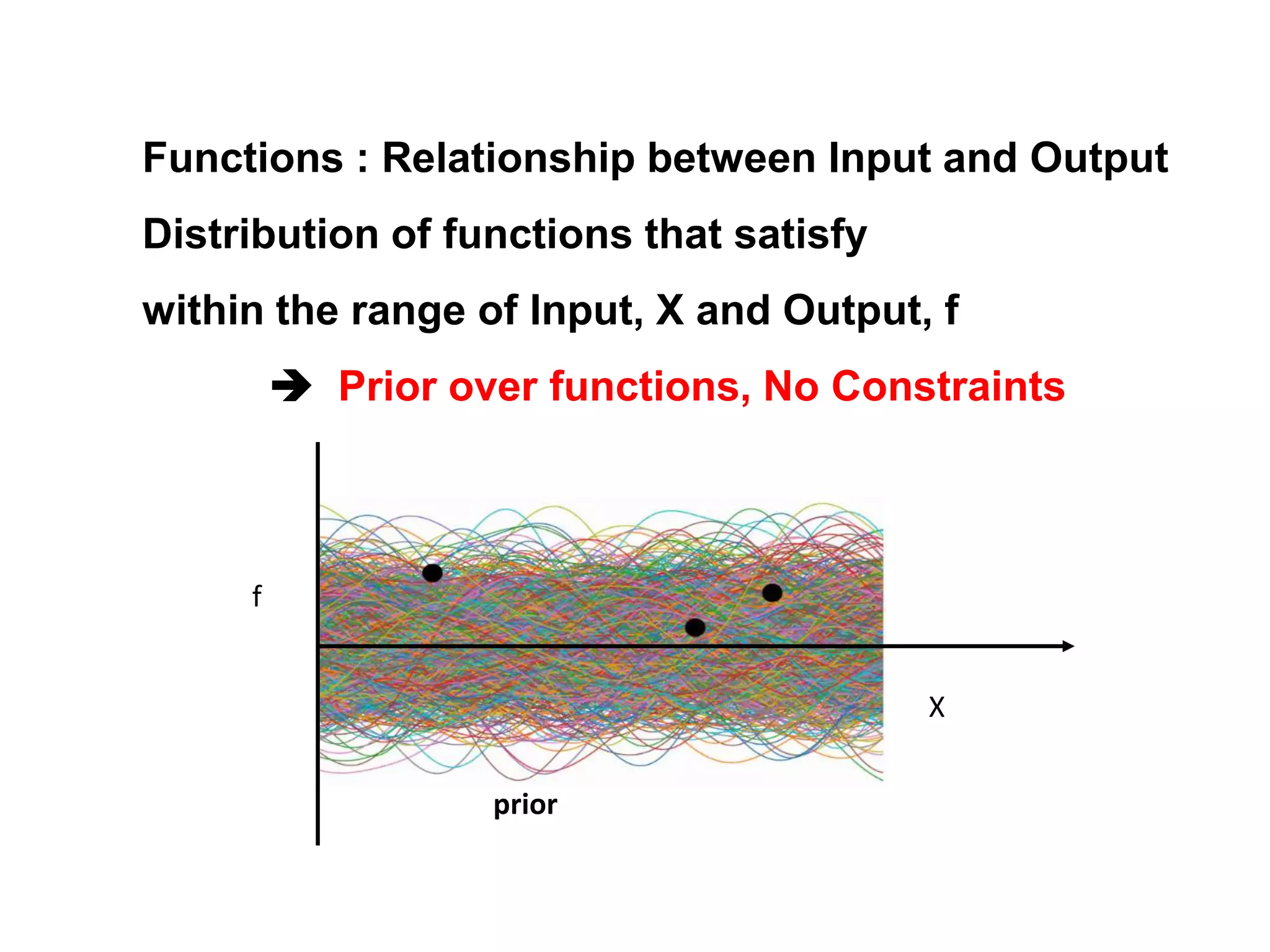

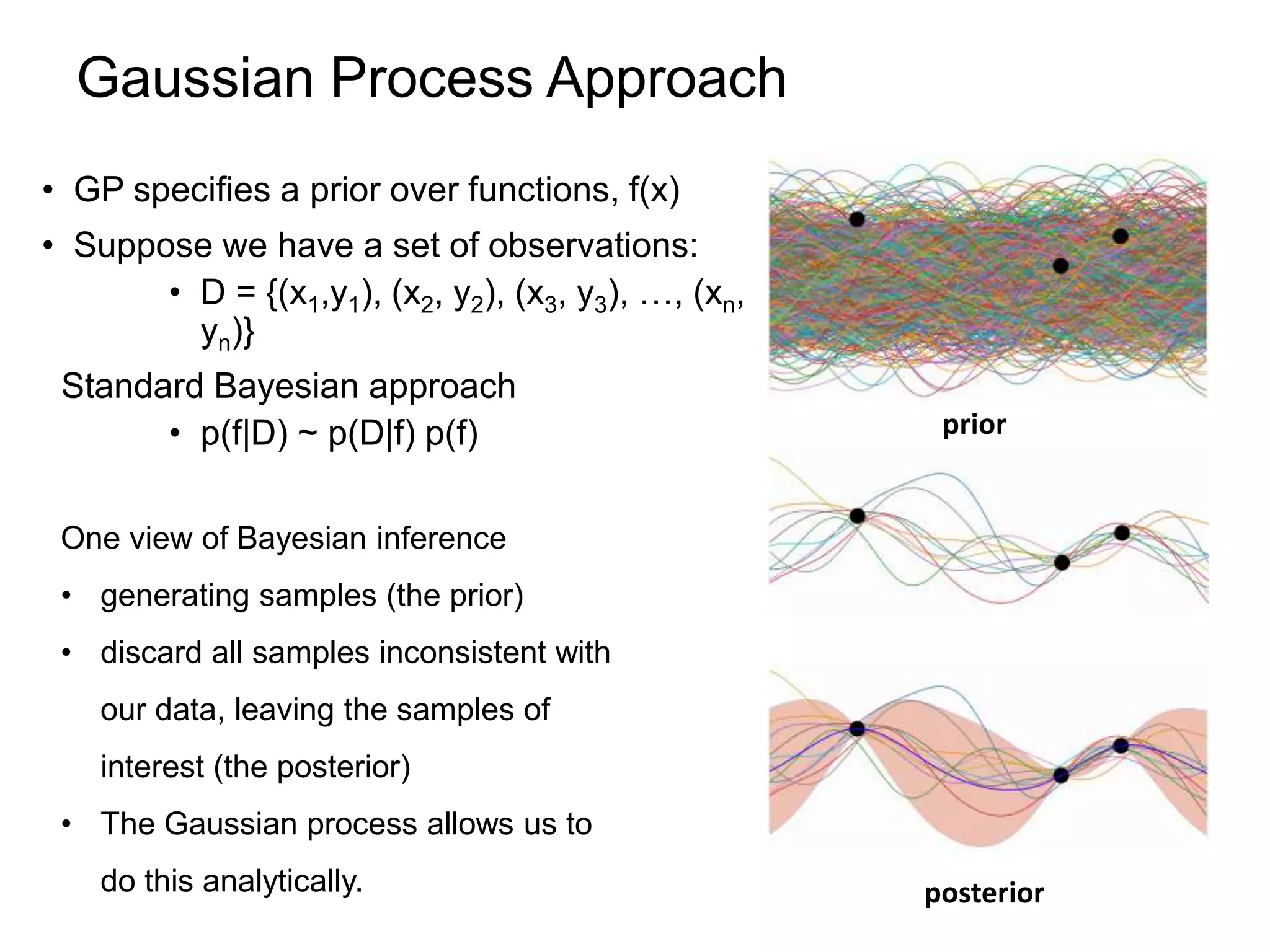

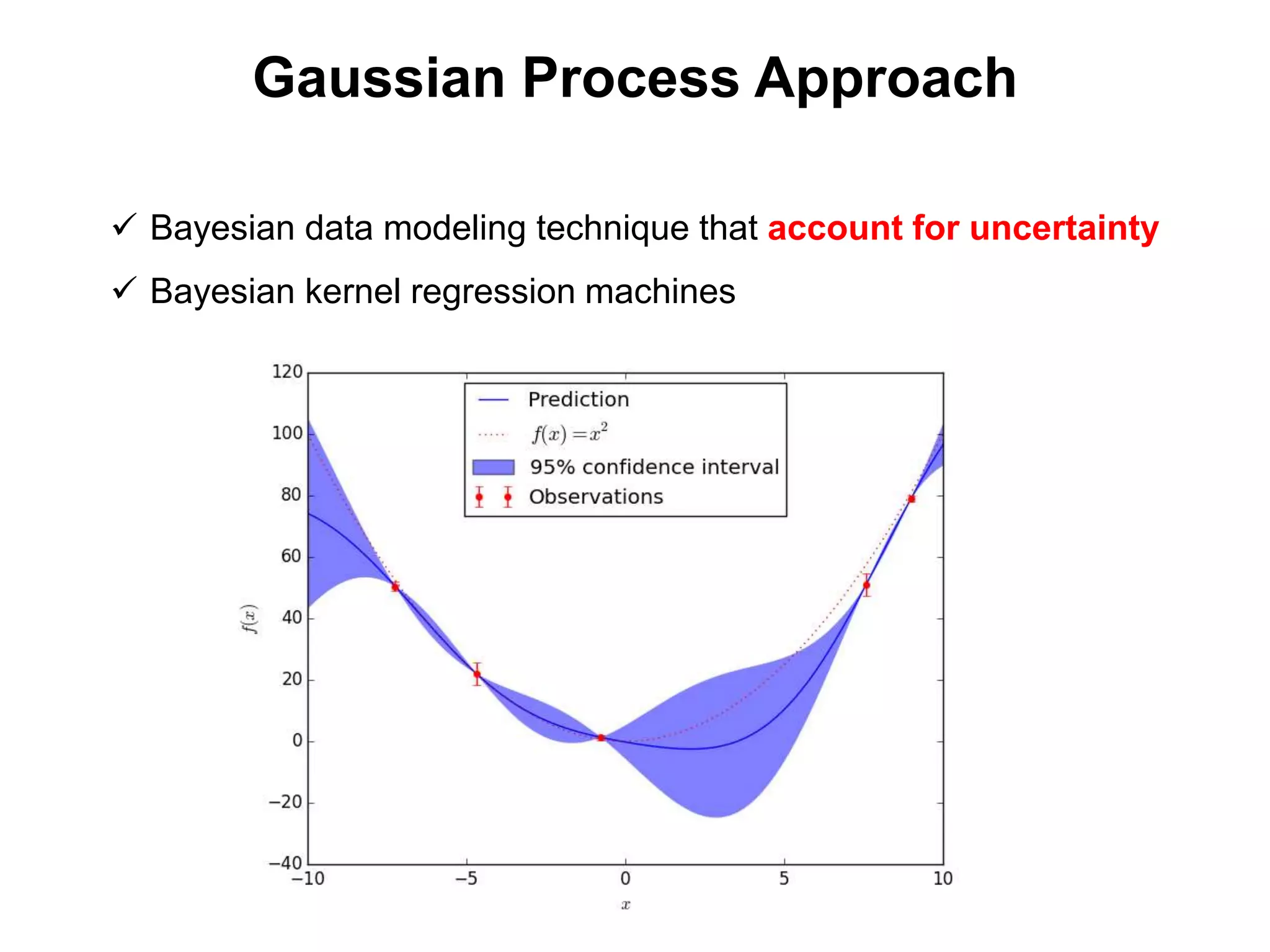

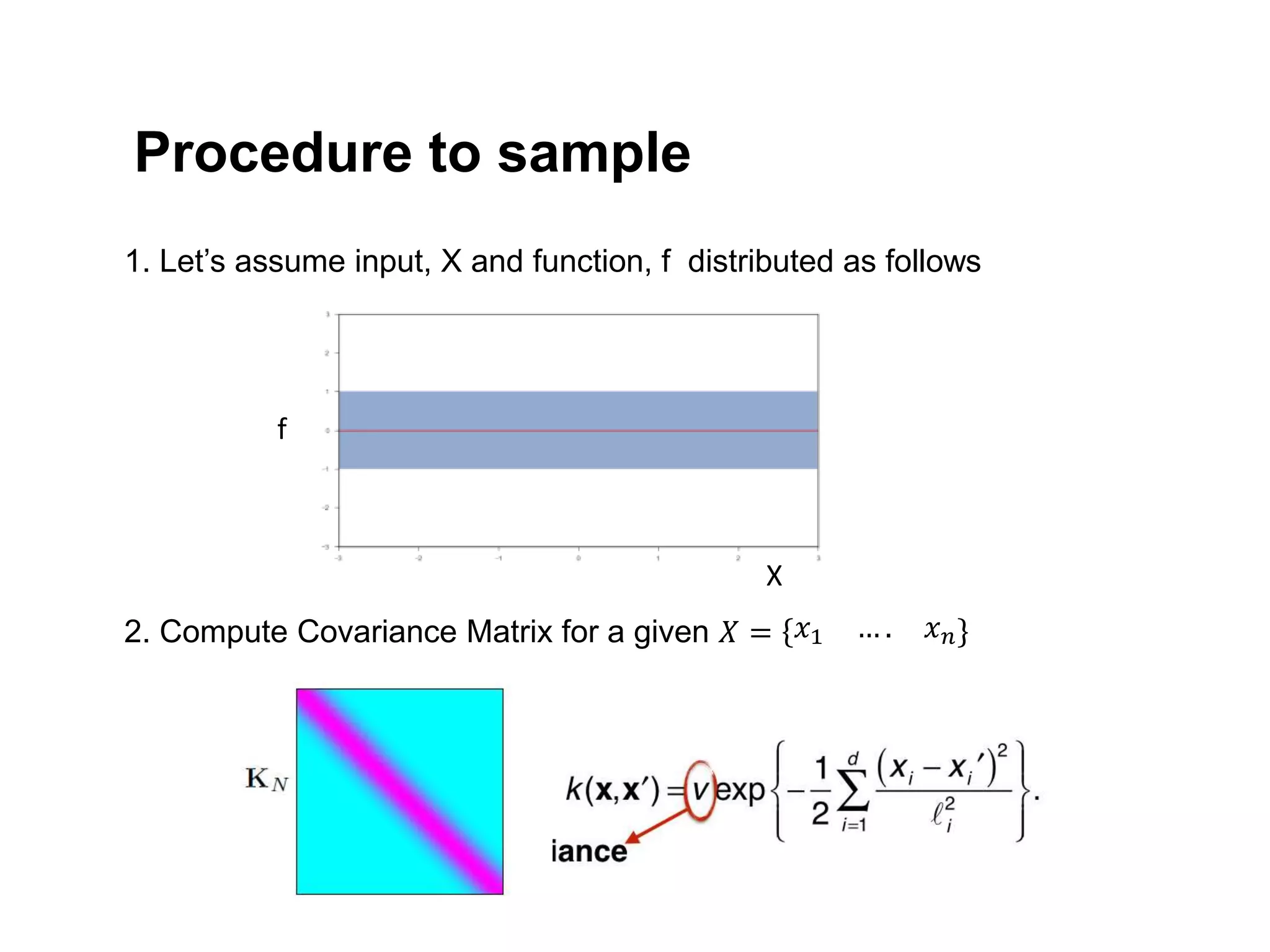

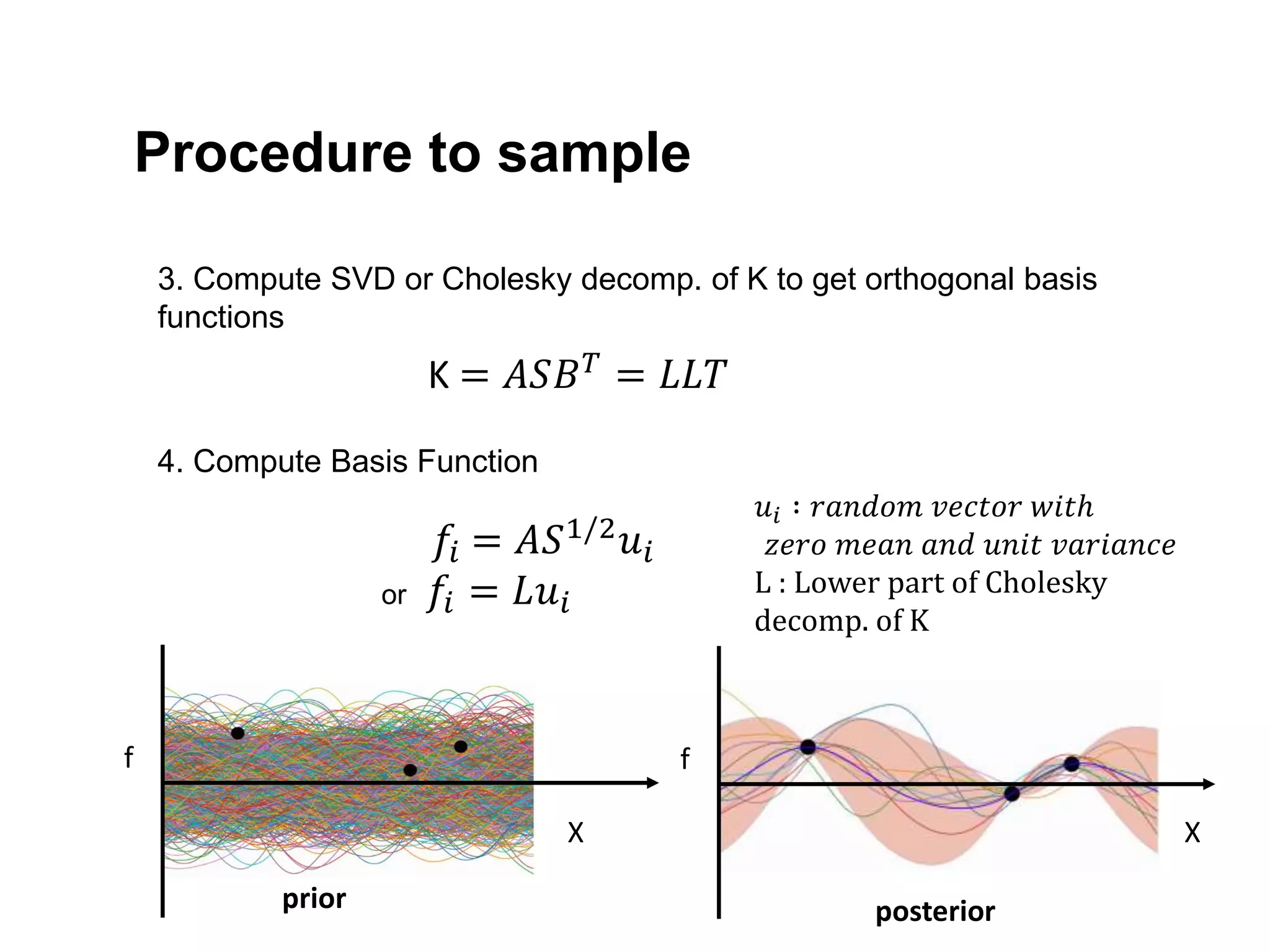

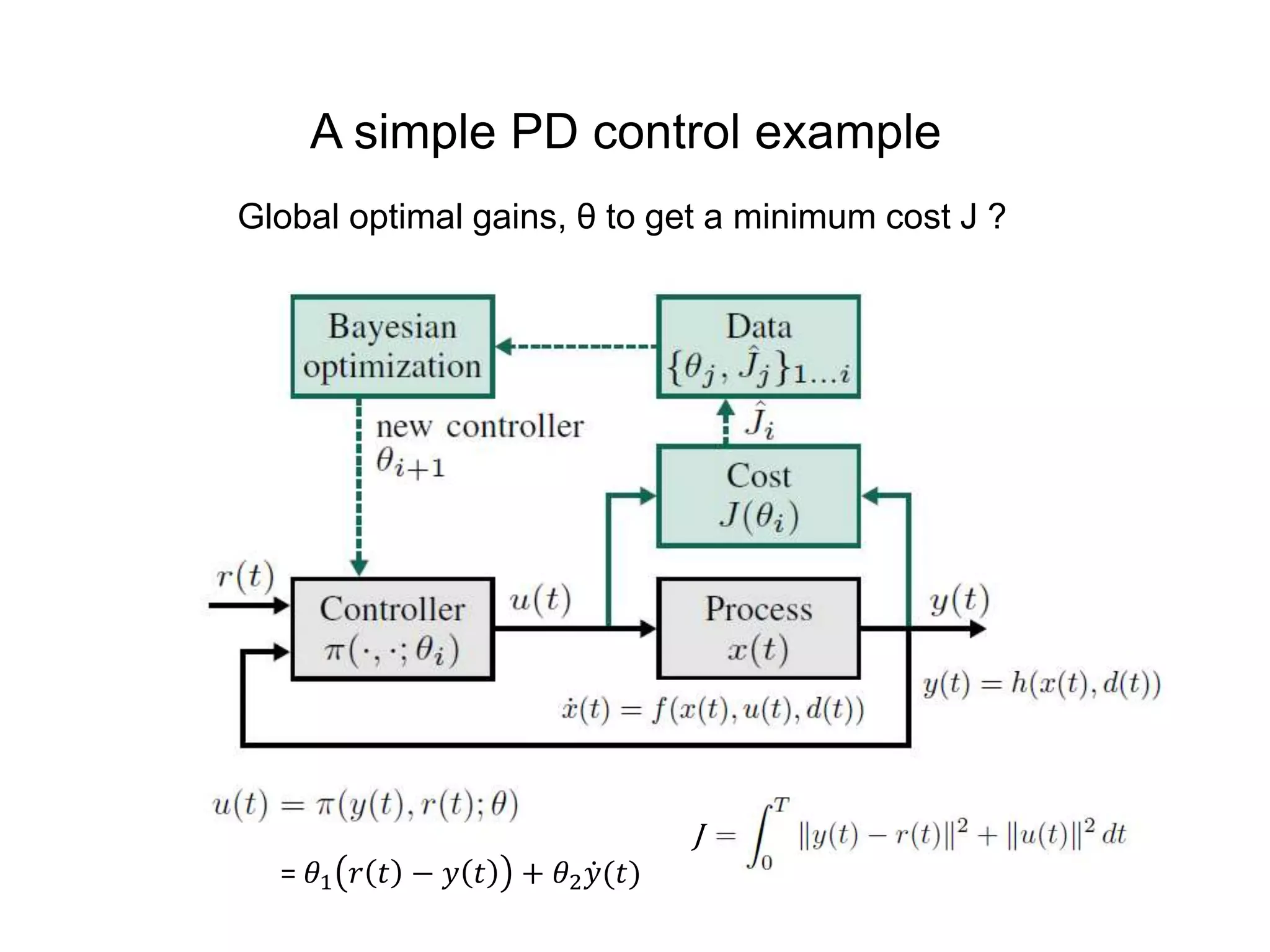

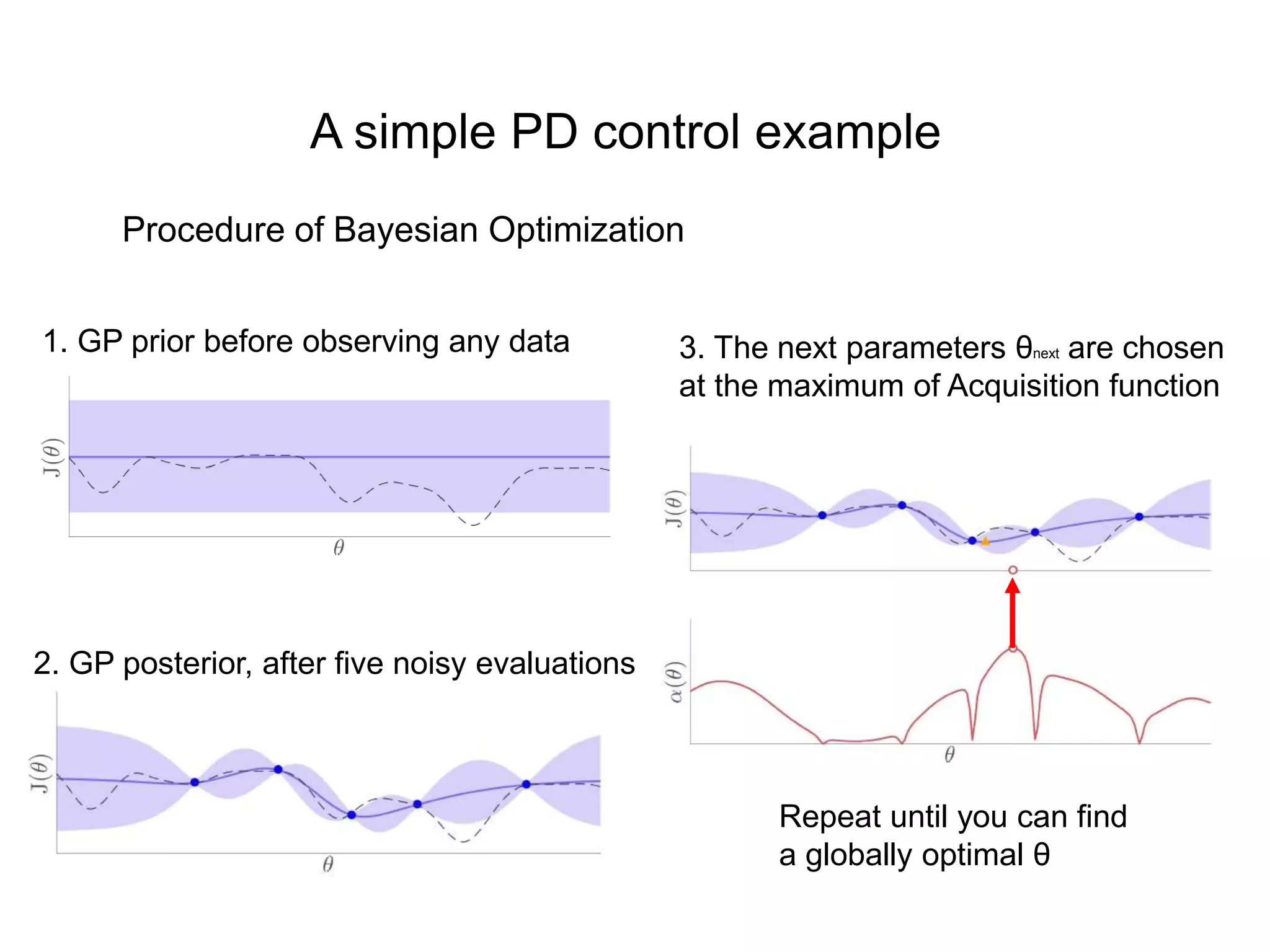

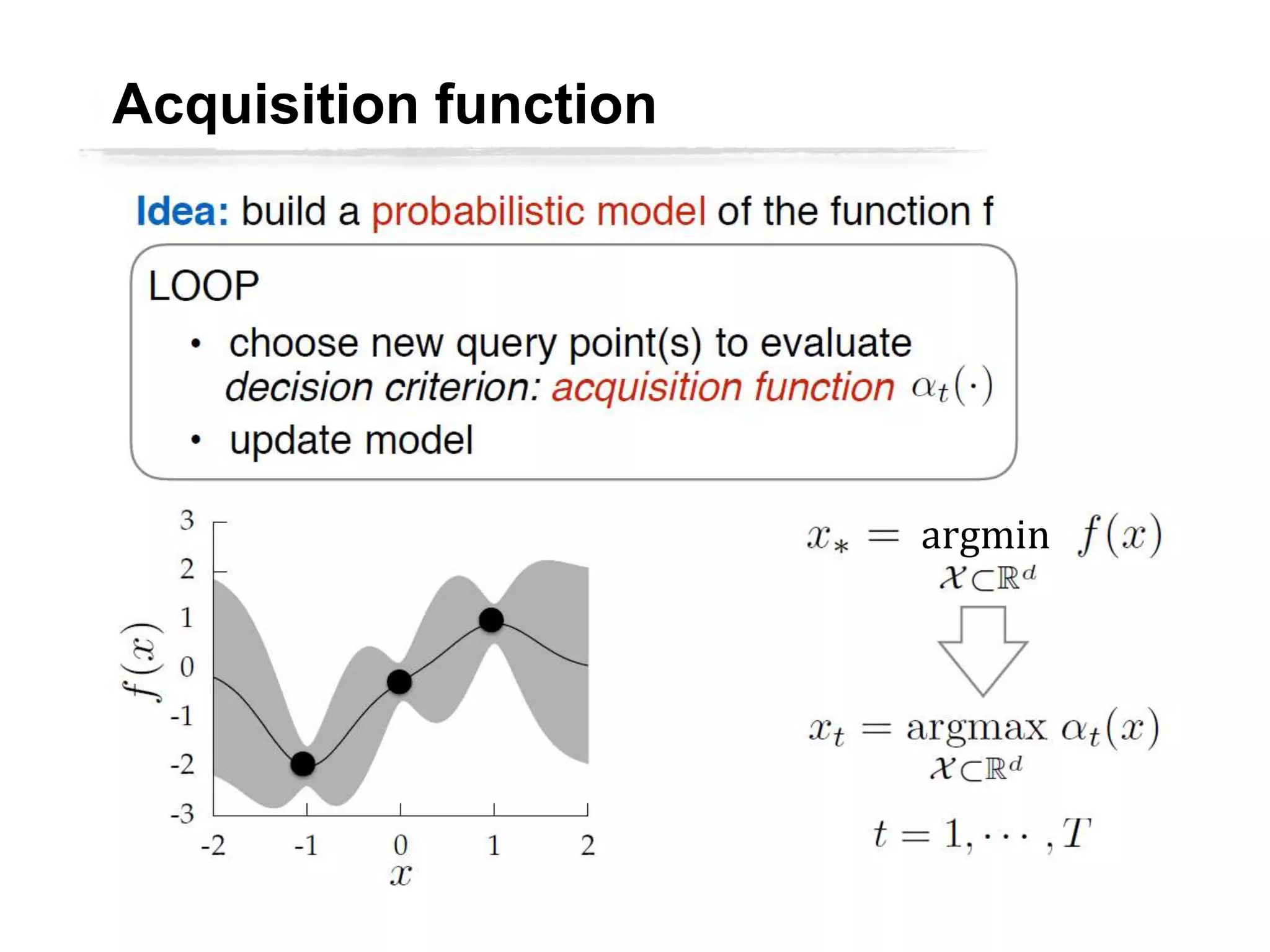

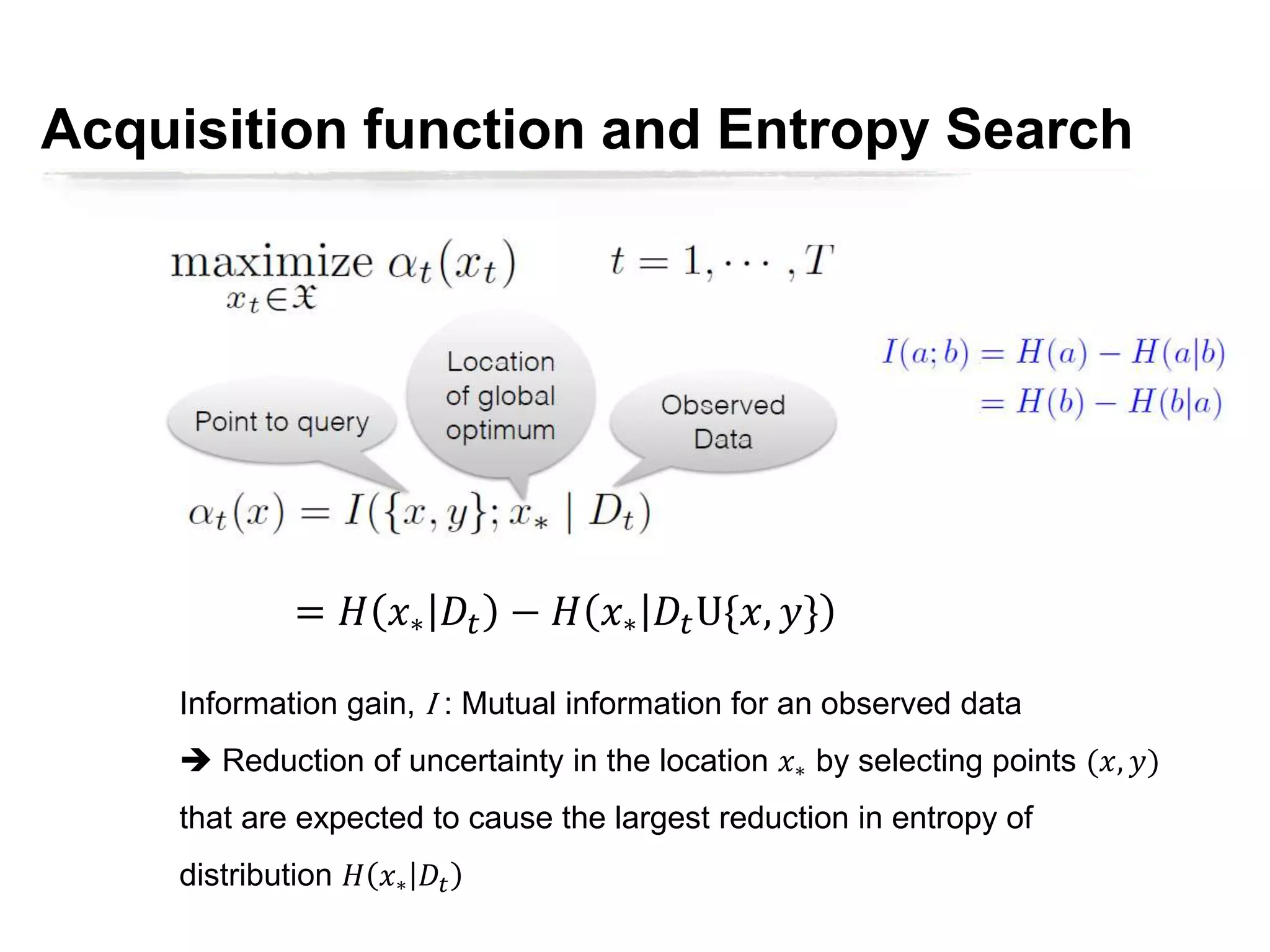

The document discusses Bayesian inference for learning, prediction, and optimization, with particular emphasis on the Kalman filter and Bayesian optimization. It covers maximum a posteriori (MAP) estimation, regularized logistic regression, and Gaussian processes for modeling uncertainty in data. It also outlines algorithms and methods for applying these Bayesian techniques in practice.
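
One standard link between two of the topics named above is that MAP estimation of logistic-regression weights under a zero-mean Gaussian prior is equivalent to L2-regularized logistic regression, with the prior variance setting the regularization strength. The sketch below illustrates that link only; it is not taken from the slides. The function names (`neg_log_posterior`, `fit_map`), the synthetic data, and the plain gradient-descent optimizer are all assumptions chosen for brevity.

```python
import numpy as np


def sigmoid(z):
    """Logistic function mapping real scores to probabilities."""
    return 1.0 / (1.0 + np.exp(-z))


def neg_log_posterior(w, X, y, prior_var):
    """Negative log posterior, up to an additive constant.

    Bernoulli likelihood plus a zero-mean isotropic Gaussian prior on w.
    The prior contributes the L2 penalty ||w||^2 / (2 * prior_var).
    """
    z = X @ w
    nll = np.sum(np.logaddexp(0.0, z) - y * z)  # stable log(1 + e^z) - y*z
    penalty = 0.5 * np.dot(w, w) / prior_var
    return nll + penalty


def fit_map(X, y, prior_var=1.0, lr=0.1, n_steps=2000):
    """MAP weights by gradient descent (hypothetical helper, assumed settings)."""
    n, d = X.shape
    w = np.zeros(d)
    for _ in range(n_steps):
        # Gradient of the negative log posterior: likelihood term + prior term.
        grad = X.T @ (sigmoid(X @ w) - y) + w / prior_var
        w -= lr * grad / n  # scale by n so the step size is data-size independent
    return w


# Synthetic data, assumed purely for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
true_w = np.array([2.0, -1.0])
y = (rng.uniform(size=200) < sigmoid(X @ true_w)).astype(float)

w_tight = fit_map(X, y, prior_var=1.0)    # stronger prior, more shrinkage
w_loose = fit_map(X, y, prior_var=100.0)  # weaker prior, closer to maximum likelihood
print("tight prior:", w_tight)
print("loose prior:", w_loose)
```

A smaller `prior_var` expresses a stronger belief that the weights are near zero, so the MAP estimate is pulled toward the origin. As `prior_var` grows, the estimate approaches the unregularized maximum-likelihood solution.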