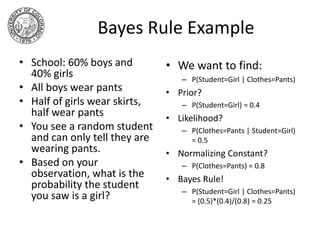

1) Probabilistic state estimation techniques such as the Kalman filter, extended Kalman filter, unscented Kalman filter, and particle filter estimate the state of a system by combining a probabilistic model of the system with noisy, uncertain sensor measurements.

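2) The Kalman filter provides a recursive solution to the linear filtering problem: at each step it predicts the state from the prior estimate, then corrects that prediction with the new measurement. The updated estimate is a weighted average of the prediction and the measurement, with the weights (the Kalman gain) set by their respective uncertainties.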

3) Extensions of the Kalman filter handle nonlinear systems: the extended Kalman filter linearizes the models around the current estimate, while the unscented Kalman filter instead propagates a small set of deterministically chosen sigma points through the nonlinear models. Particle filters represent the state distribution with random samples, which makes them more flexible for nonlinear and non-Gaussian problems such as SLAM.
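
The weighted-average view of the Kalman filter in point 2 can be illustrated with a minimal scalar sketch. It assumes a constant-state model with direct measurements; the function names `kalman_step` and `run_filter`, and the noise variances `q` and `r`, are illustrative choices rather than anything taken from the source.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter.

    Assumed (hypothetical) model: the state is constant up to process
    noise with variance q, and it is measured directly with noise
    variance r. x and p are the prior mean and variance; z is the
    new measurement.
    """
    # Predict: the state carries over, and uncertainty grows by q.
    x_pred = x
    p_pred = p + q

    # Update: the gain k lies in [0, 1] and weights the measurement
    # against the prediction according to their variances.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)  # same as (1 - k) * x_pred + k * z
    p_new = (1.0 - k) * p_pred
    return x_new, p_new, k


def run_filter(measurements, x0=0.0, p0=1.0, q=1e-4, r=0.25):
    """Run the filter over a sequence and return the estimates and final variance."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        x, p, _ = kalman_step(x, p, z, q, r)
        estimates.append(x)
    return estimates, p
```

When the prediction and the measurement are equally uncertain, the gain is 0.5 and the update lands halfway between them; as `r` grows relative to the predicted variance, the gain shrinks and the filter trusts its prediction more. The extended and unscented variants keep this predict/update structure and differ in how they push the mean and variance through nonlinear models.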