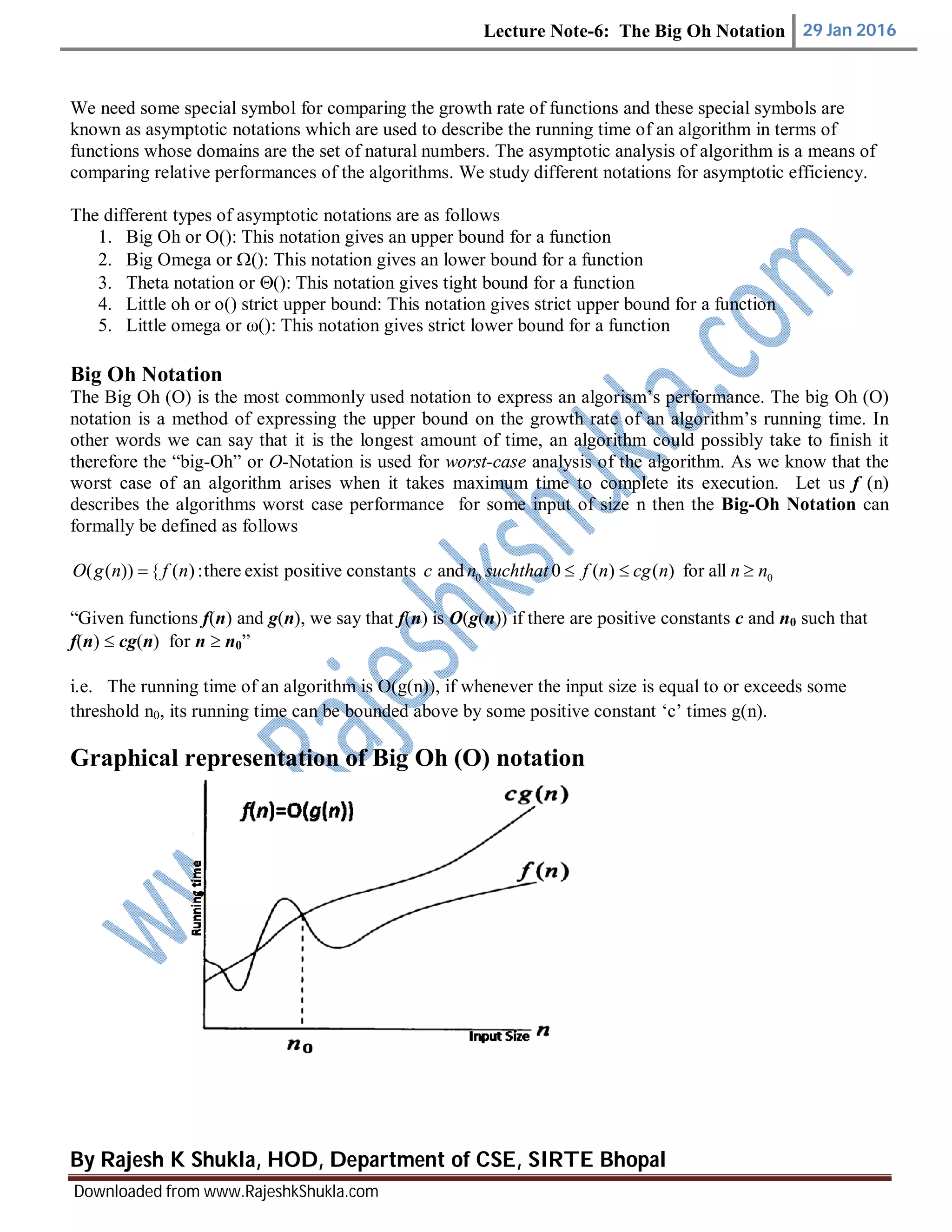

The document discusses Big O notation, which describes an asymptotic upper bound on a function's growth and is most often applied to an algorithm's running time. It defines the notation formally: f(n) is O(g(n)) if there exist positive constants c and n0 such that f(n) ≤ c * g(n) for all n ≥ n0. The document gives examples of one function being Big O of another, such as 2n + 7 being O(n) and 2(n + 1) being O(2n). It explains that Big O notation is typically used to characterize the worst-case growth rate of an algorithm's running time.
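The formal definition can be made concrete by choosing witness constants and checking the inequality numerically. The following is a minimal sketch, not taken from the document: the helper name `holds_up_to`, the cutoff `n_max`, and the specific witnesses (c = 3, n0 = 7 for 2n + 7; c = 2, n0 = 1 for 2(n + 1)) are assumptions chosen for illustration. A finite check like this only spot-checks the inequality; the actual proof is the algebra noted in the comments.

```python
from typing import Callable


def holds_up_to(
    f: Callable[[int], int],
    g: Callable[[int], int],
    c: int,
    n0: int,
    n_max: int = 10_000,
) -> bool:
    """Return True if f(n) <= c * g(n) for every integer n in [n0, n_max].

    Hypothetical helper: it tests the Big O inequality on a finite range only,
    so a True result is evidence for the chosen witnesses, not a proof.
    """
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


# 2n + 7 is O(n): with c = 3, the inequality 2n + 7 <= 3n holds exactly when n >= 7.
print(holds_up_to(lambda n: 2 * n + 7, lambda n: n, c=3, n0=7))

# 2(n + 1) is O(2n): with c = 2, the inequality 2n + 2 <= 4n holds exactly when n >= 1.
print(holds_up_to(lambda n: 2 * (n + 1), lambda n: 2 * n, c=2, n0=1))

# The same c with a threshold that is too small fails: at n = 1, 2n + 7 = 9 > 3.
print(holds_up_to(lambda n: 2 * n + 7, lambda n: n, c=3, n0=1))
```

The witnesses are not unique: any larger c or larger n0 also satisfies the definition, which is why the definition only asks that some pair of constants exists.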