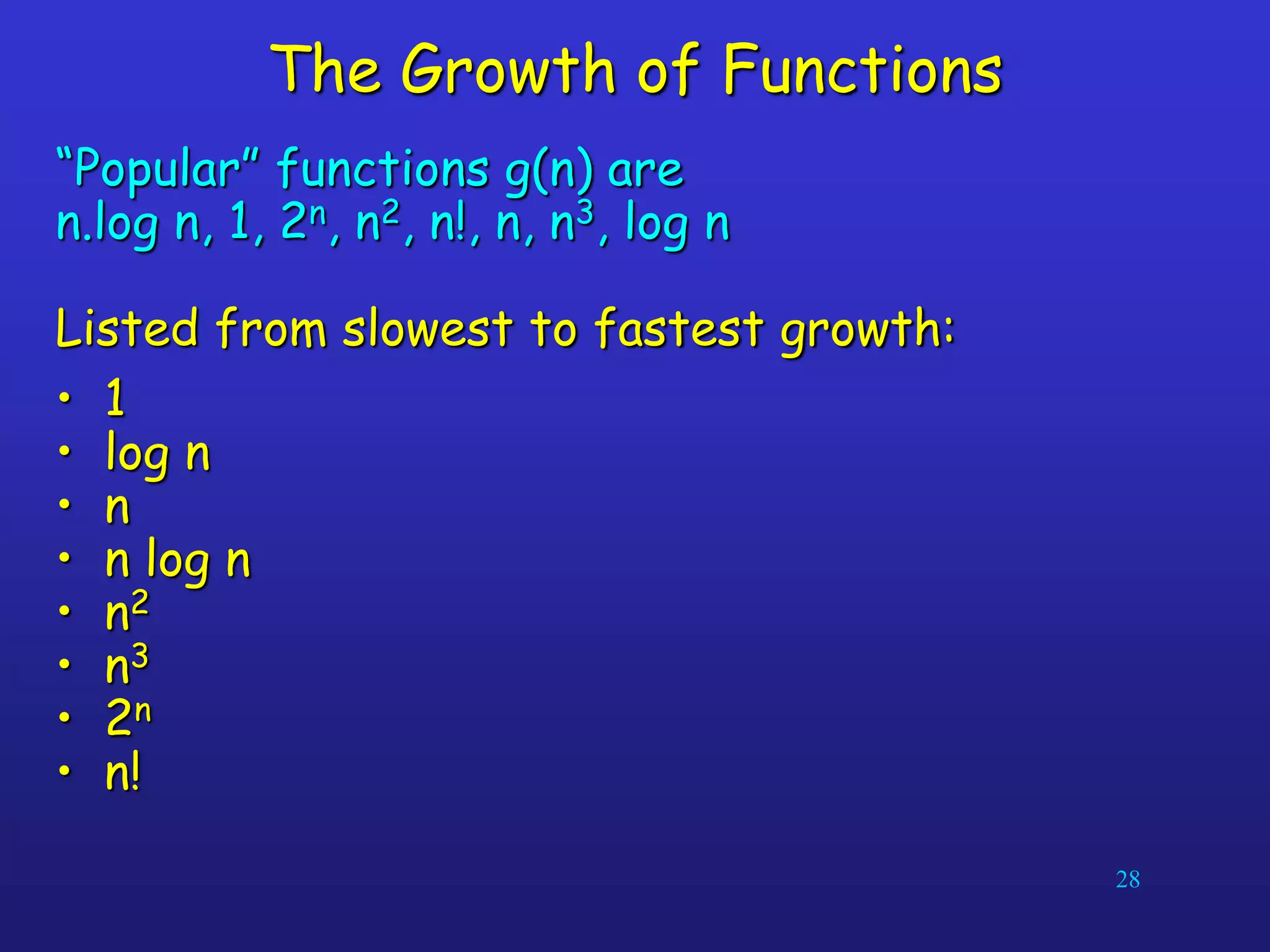

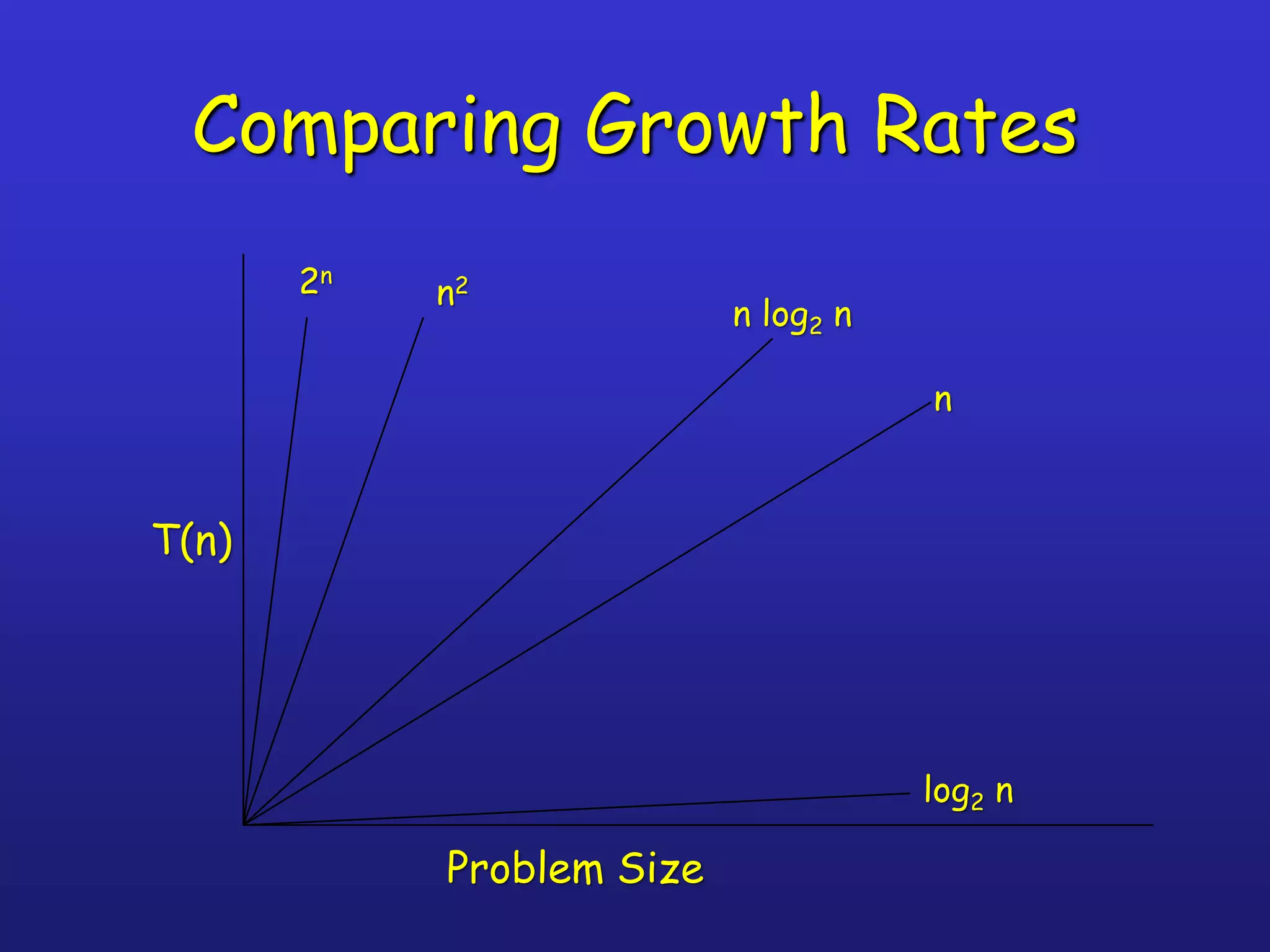

An algorithm is a well-defined computational procedure that takes input values and produces output values. Its key properties are that every step is unambiguous, that it produces the correct output for every valid input, and that it terminates after a finite number of steps. When analyzing algorithms, asymptotic complexity is important because it measures how processing requirements grow as the input size n increases. Common complexity classes include constant O(1), logarithmic O(log n), linear O(n), quadratic O(n^2), and exponential O(2^n) time. For large inputs, an algorithm whose cost grows more slowly will eventually outperform one whose cost grows faster, whatever the constant factors are.
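To make the difference between these classes concrete, the following minimal sketch tabulates each growth function for a few input sizes. The function name `growth_table` and the chosen sizes are illustrative assumptions, not part of the original slides.

```python
import math


def growth_table(sizes):
    """Return one row per input size with the value of each growth function.

    The helper name and the row layout are hypothetical, chosen for illustration.
    """
    rows = []
    for n in sizes:
        rows.append({
            "n": n,
            "O(1)": 1,                  # constant: independent of n
            "O(log n)": math.log2(n),   # logarithmic (base 2)
            "O(n)": n,                  # linear
            "O(n^2)": n ** 2,           # quadratic
            "O(2^n)": 2 ** n,           # exponential
        })
    return rows


if __name__ == "__main__":
    for row in growth_table([8, 16, 32]):
        print(row)
```

Doubling n from 16 to 32 adds only 1 to the logarithmic column and doubles the linear one, while the exponential column is squared, which is why the asymptotic class dominates for large inputs.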

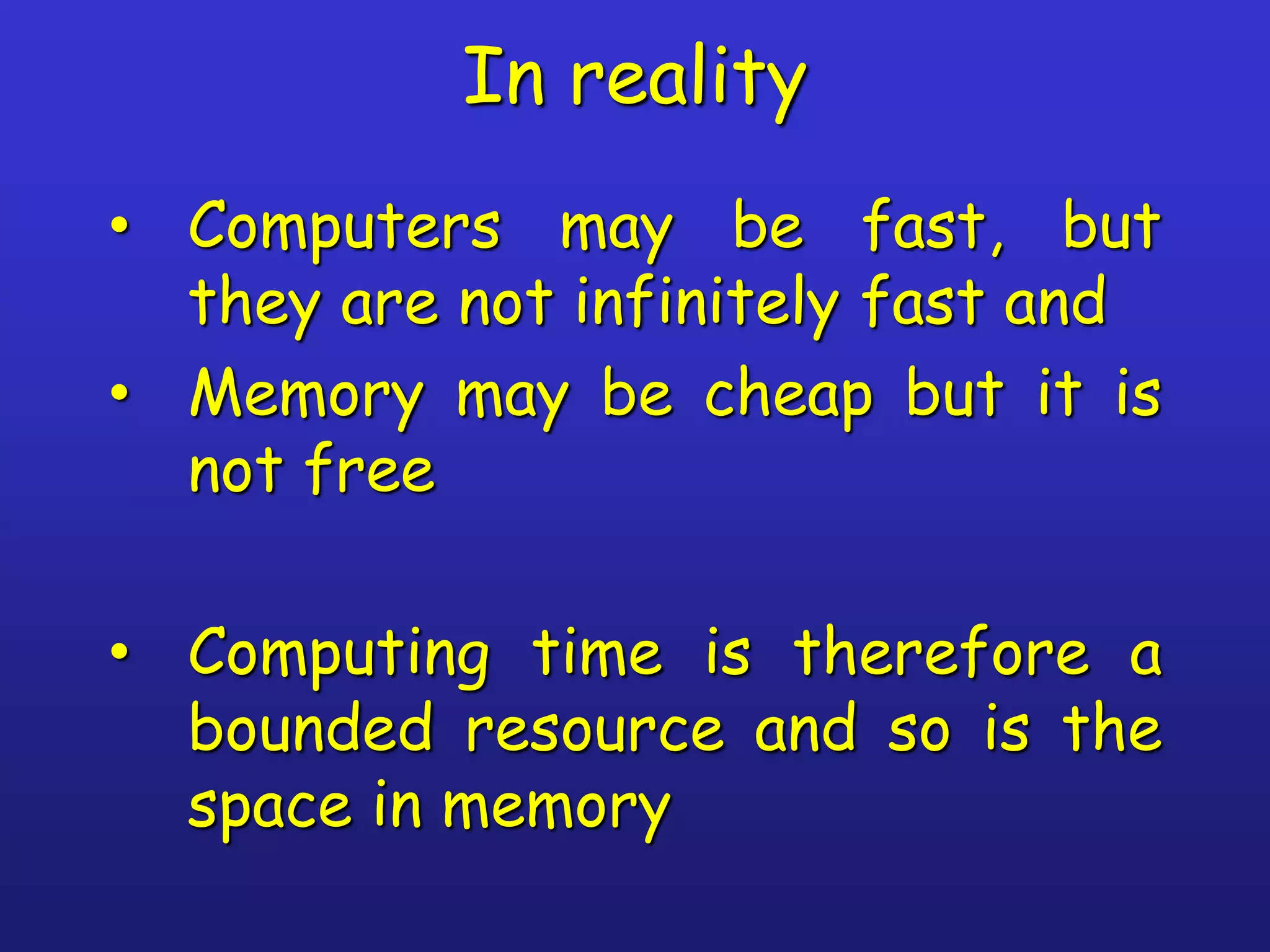

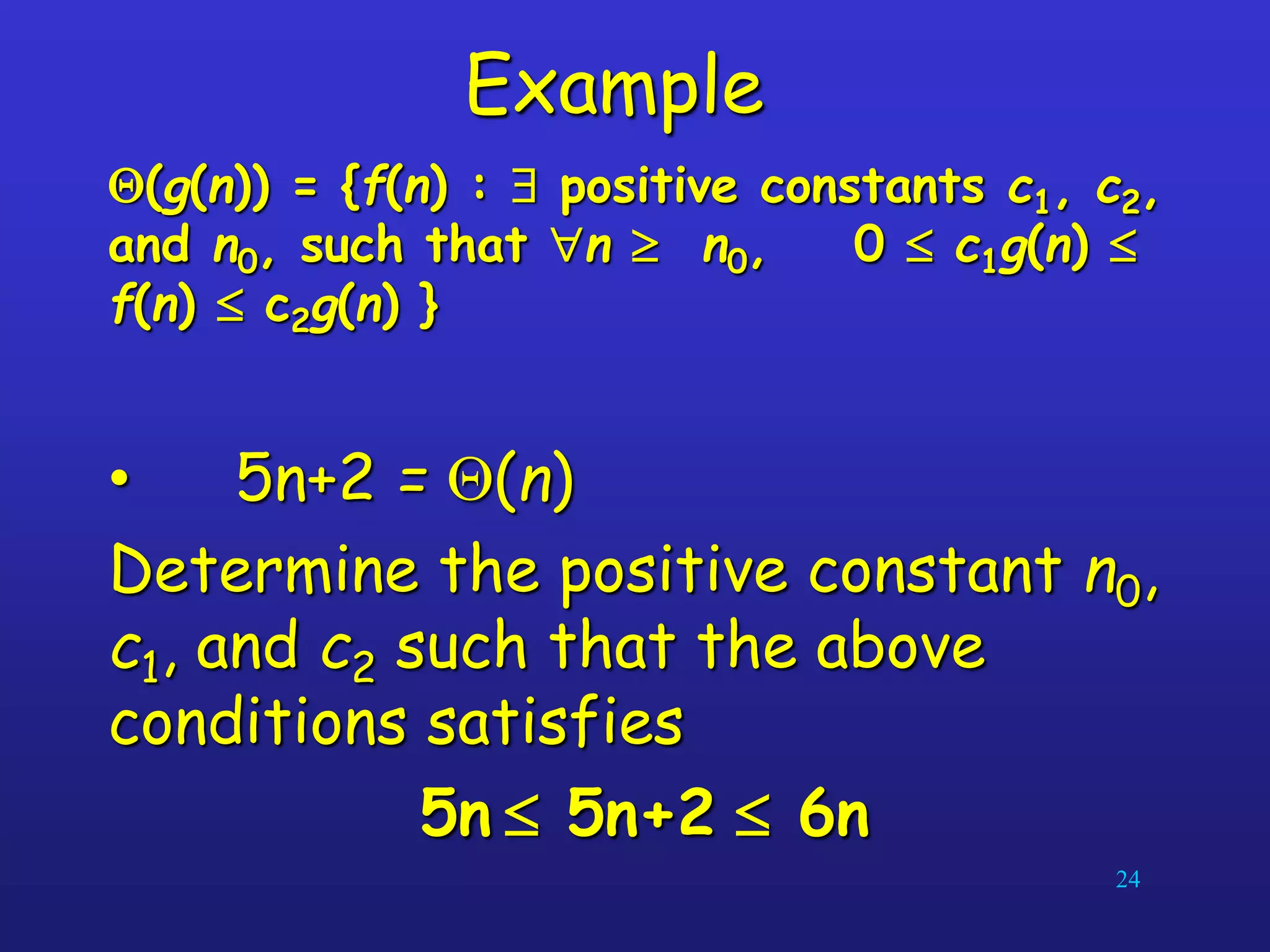

![Example: Find sum of array elements](https://image.slidesharecdn.com/2algorithim-200210195727/75/Algorithms-required-for-data-structures-basics-like-Arrays-Stacks-Linked-Lists-etc-30-2048.jpg)

Algorithm arraySum(A, n)

Input: array A of n integers

Output: sum of elements of A

| Statement | # operations |
| --- | --- |
| sum ← 0 | 1 |
| for i ← 0 to n − 1 do | n + 1 |
| sum ← sum + A[i] | n |
| return sum | 1 |

Input size: n (number of array elements)

Total number of steps: f(n) = 2n + 3
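The arraySum step count can be checked with a short Python sketch that follows the slide's counting convention: one step for the initialization, one per loop test, one per addition, and one for the return. The function name `array_sum_with_steps` and the explicit `steps` counter are assumptions added for illustration.

```python
def array_sum_with_steps(a):
    """Return (sum, steps), counting steps the way the arraySum slide does."""
    n = len(a)
    steps = 0

    total = 0
    steps += 1              # sum <- 0: 1 operation

    i = 0
    while True:
        steps += 1          # loop test: evaluated n + 1 times in total
        if i >= n:
            break
        total += a[i]
        steps += 1          # sum <- sum + A[i]: executed n times
        i += 1

    steps += 1              # return sum: 1 operation
    return total, steps


if __name__ == "__main__":
    data = [3, 1, 4, 1, 5]
    total, steps = array_sum_with_steps(data)
    print(total, steps, 2 * len(data) + 3)
```

Under this convention the counter always equals 2n + 3, including for an empty array, where only the initialization, one failed loop test, and the return are counted.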

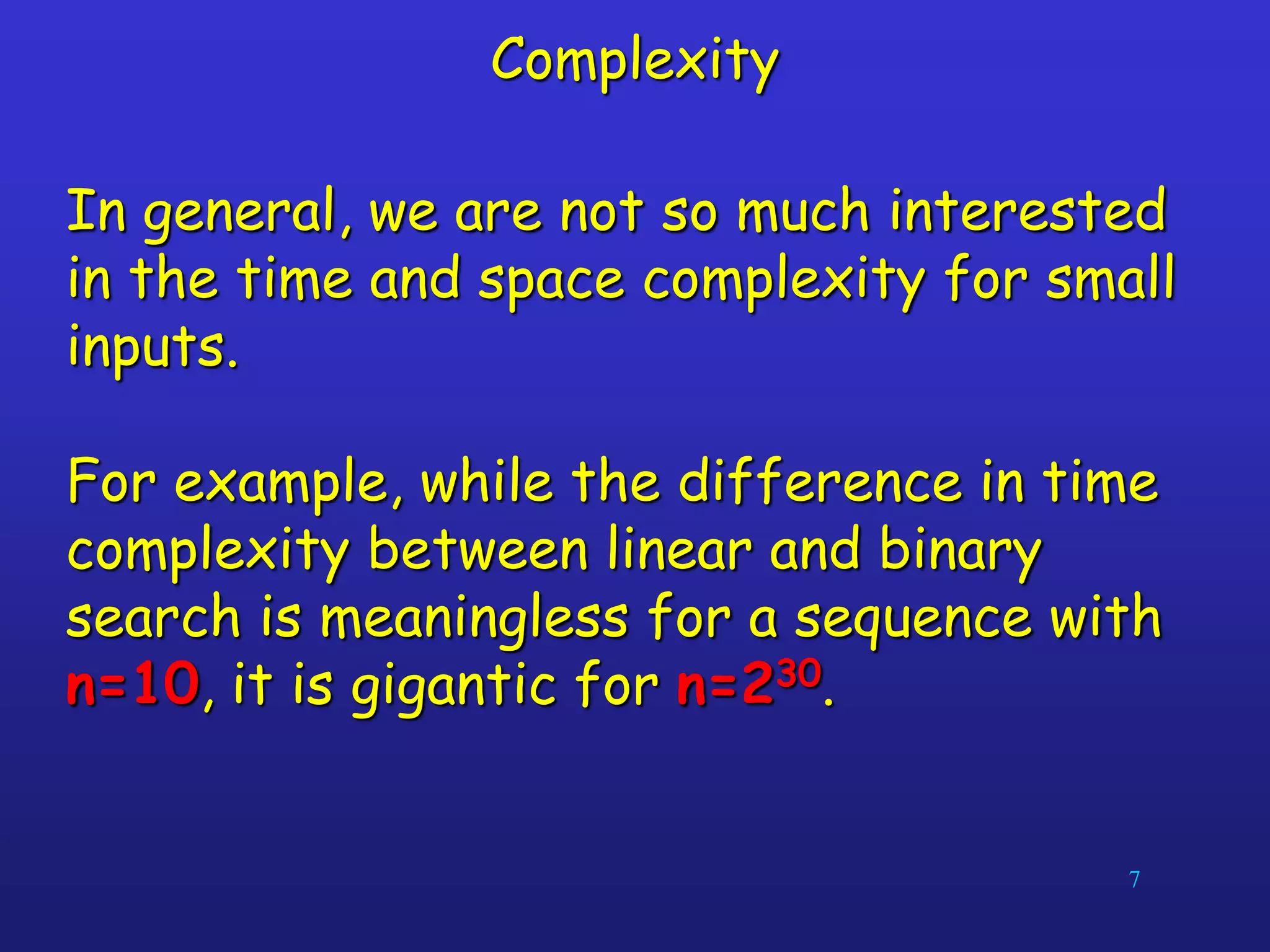

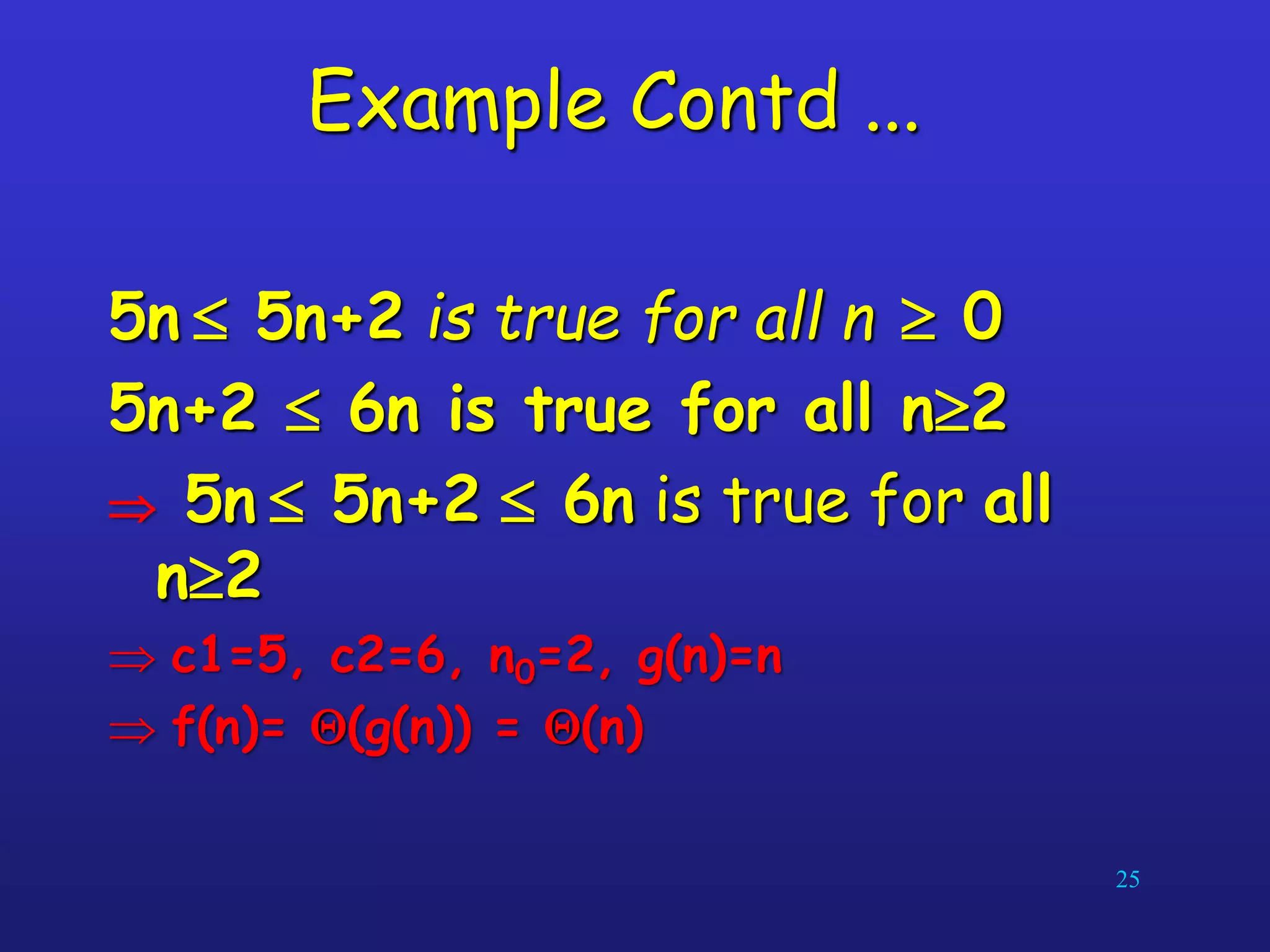

![Algorithm arrayMax(A, n)

Input array A of n integers

Output maximum element of A # operations

currentMax A[0] 1

for i 1 to n 1 do n

if A [i] currentMax then n -1

currentMax A [i] n -1

return currentMax 1

Example: Find max element of an

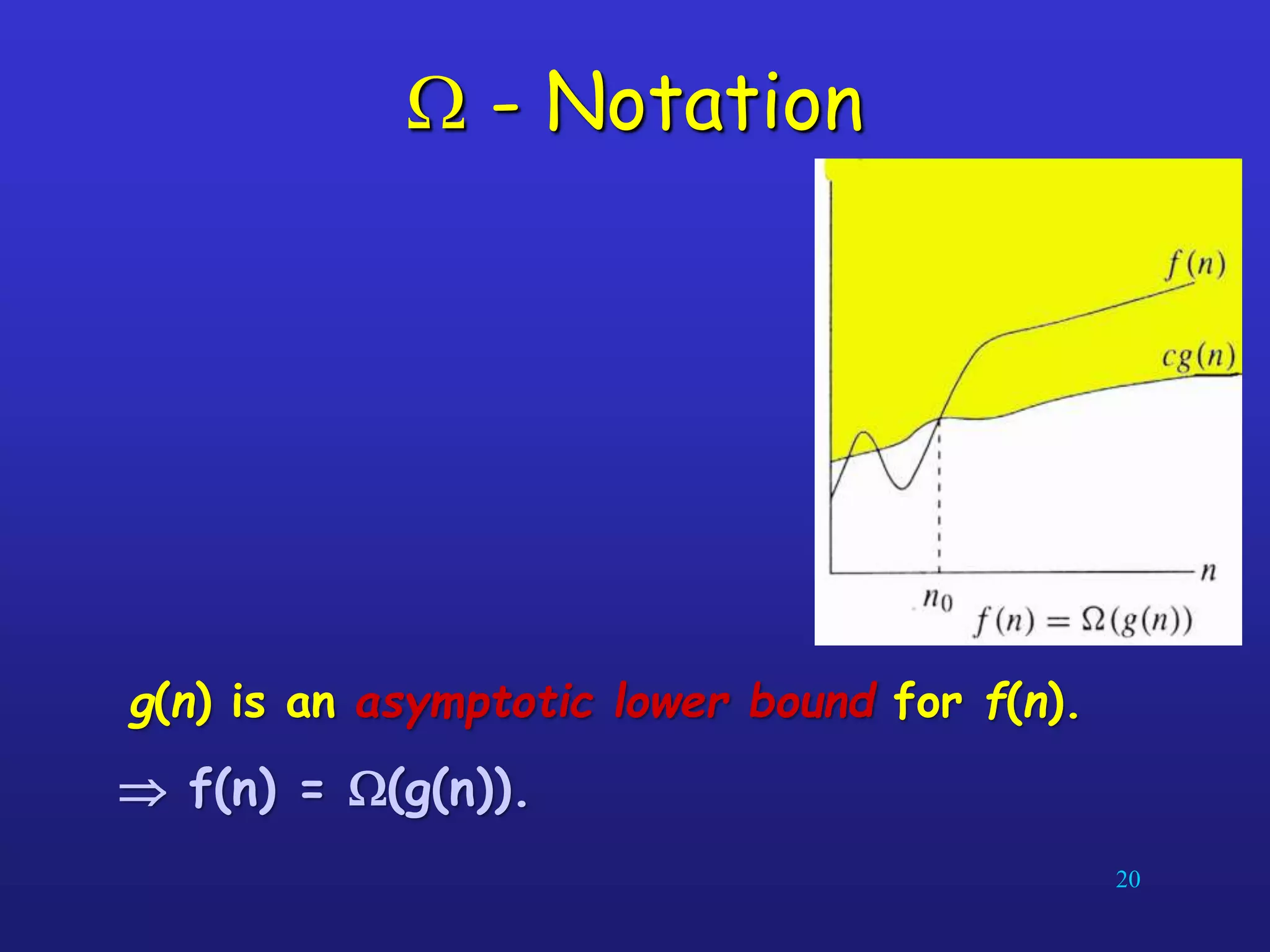

array

Input size: n (number of array elements)

Total number of steps: f(n)=3n](https://image.slidesharecdn.com/2algorithim-200210195727/75/Algorithms-required-for-data-structures-basics-like-Arrays-Stacks-Linked-Lists-etc-31-2048.jpg)
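The arrayMax count of 3n assumes that the assignment inside the `if` runs on every iteration, which happens only when the array is strictly increasing (the worst case). The sketch below, with the hypothetical name `array_max_with_steps`, counts steps under the same convention so the best and worst cases can be compared.

```python
def array_max_with_steps(a):
    """Return (max, steps), counting steps the way the arrayMax slide does."""
    if not a:
        raise ValueError("array must contain at least one element")

    n = len(a)
    steps = 0

    current_max = a[0]
    steps += 1                  # currentMax <- A[0]: 1 operation

    i = 1
    while True:
        steps += 1              # loop test: evaluated n times in total
        if i >= n:
            break
        steps += 1              # comparison A[i] > currentMax: n - 1 times
        if a[i] > current_max:
            current_max = a[i]
            steps += 1          # assignment: at most n - 1 times
        i += 1

    steps += 1                  # return currentMax: 1 operation
    return current_max, steps


if __name__ == "__main__":
    print(array_max_with_steps([1, 2, 3, 4]))   # increasing input: worst case
    print(array_max_with_steps([4, 3, 2, 1]))   # decreasing input: best case
```

For an increasing array of n elements the counter reaches 3n, matching the slide. For a decreasing array the assignment never runs and the count drops to 2n + 1. Both are linear, so arrayMax is O(n) either way.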