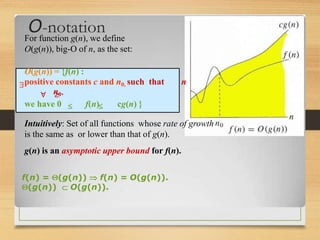

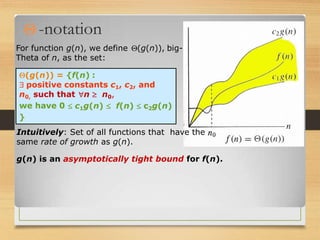

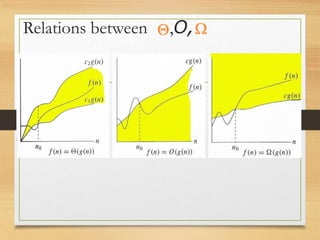

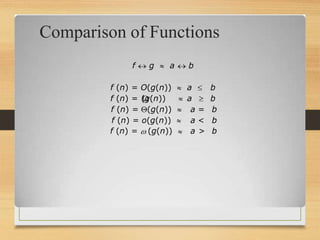

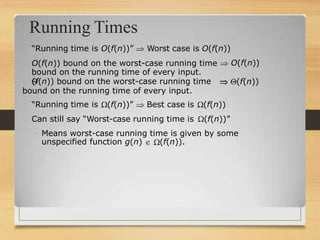

This document covers asymptotic notation and its use in analyzing algorithms. It defines Big O, Big Omega, and Big Theta notation and explains how each describes the growth rate of a function in the limit. Big O gives an asymptotic upper bound, Big Omega gives an asymptotic lower bound, and Big Theta gives an asymptotically tight bound, meaning the function is bounded both above and below by the same growth rate up to constant factors. The document also shows how these notations are used to express the time complexity of algorithms and to classify their running times as the input size grows without bound.
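For reference, the three bounds can be written out formally. The definitions below are the standard textbook forms, which may differ in wording from the ones the document itself uses; the symbols $c$, $c_1$, $c_2$, and $n_0$ and the example function $f(n) = 3n^2 + 5n$ are chosen here for illustration and do not come from the source.

$$
\begin{aligned}
f(n) \in O(g(n)) &\iff \exists\, c > 0,\ n_0 > 0 : \ 0 \le f(n) \le c\, g(n) \quad \text{for all } n \ge n_0, \\
f(n) \in \Omega(g(n)) &\iff \exists\, c > 0,\ n_0 > 0 : \ 0 \le c\, g(n) \le f(n) \quad \text{for all } n \ge n_0, \\
f(n) \in \Theta(g(n)) &\iff \exists\, c_1, c_2 > 0,\ n_0 > 0 : \ 0 \le c_1\, g(n) \le f(n) \le c_2\, g(n) \quad \text{for all } n \ge n_0.
\end{aligned}
$$

As a small worked example, take $f(n) = 3n^2 + 5n$ and $g(n) = n^2$. For every $n \ge 1$ we have $5n \le 5n^2$, so

$$
3n^2 \;\le\; 3n^2 + 5n \;\le\; 3n^2 + 5n^2 \;=\; 8n^2 .
$$

The constants $c_1 = 3$, $c_2 = 8$, and $n_0 = 1$ therefore witness $f(n) \in \Theta(n^2)$, which in turn gives both $f(n) \in O(n^2)$ and $f(n) \in \Omega(n^2)$.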