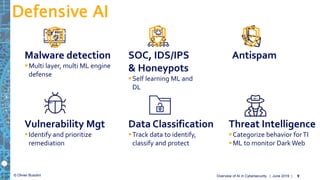

The document gives Chief Information Security Officers (CISOs) an overview of the role and challenges of artificial intelligence (AI) in cybersecurity, to help them navigate the complexity and hype surrounding AI solutions. It covers the types of AI in use, their potential applications in cybersecurity, the challenges of machine learning, and the need to integrate AI into existing systems, while stressing that AI is not a silver bullet for cybersecurity problems. It also highlights the importance of carefully evaluating both AI's effectiveness and its associated risks so that decisions are well informed.