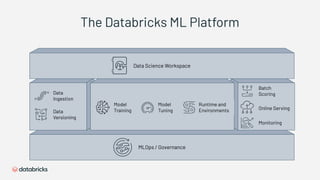

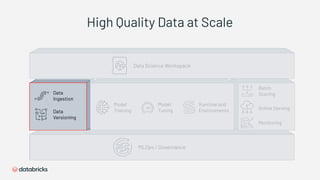

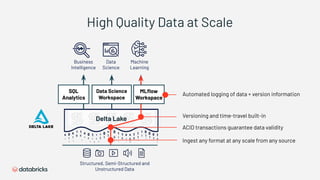

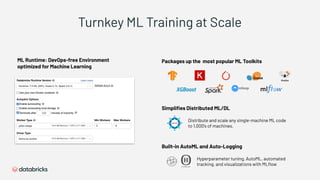

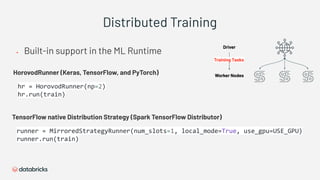

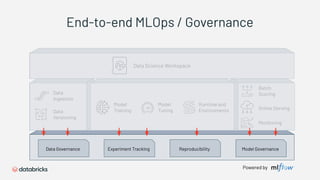

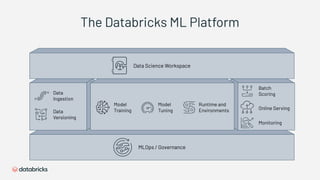

1) Databricks provides a machine learning platform for MLOps that includes tools for data ingestion, model training, runtime environments, and monitoring.

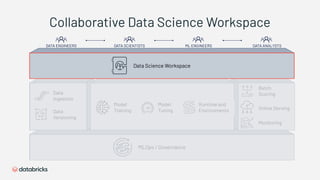

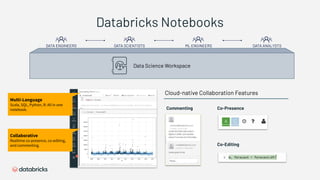

2) It offers a collaborative, notebook-based data science workspace where data engineers, data scientists, and ML engineers can work together on projects.

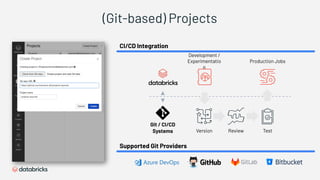

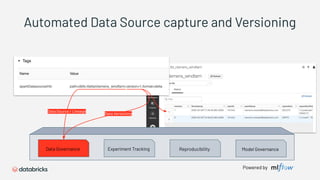

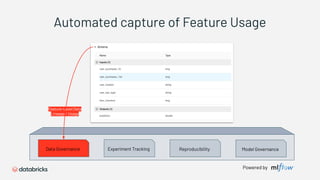

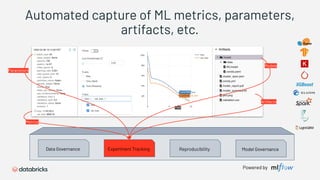

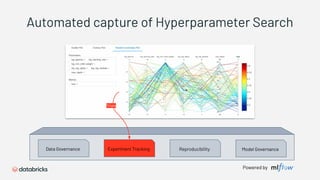

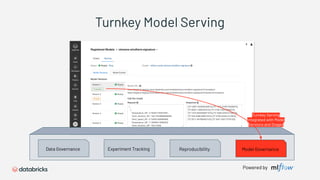

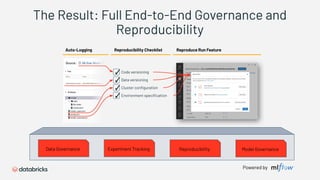

3) The platform provides end-to-end governance across the machine learning lifecycle, including experiment tracking, reproducibility, and model governance.