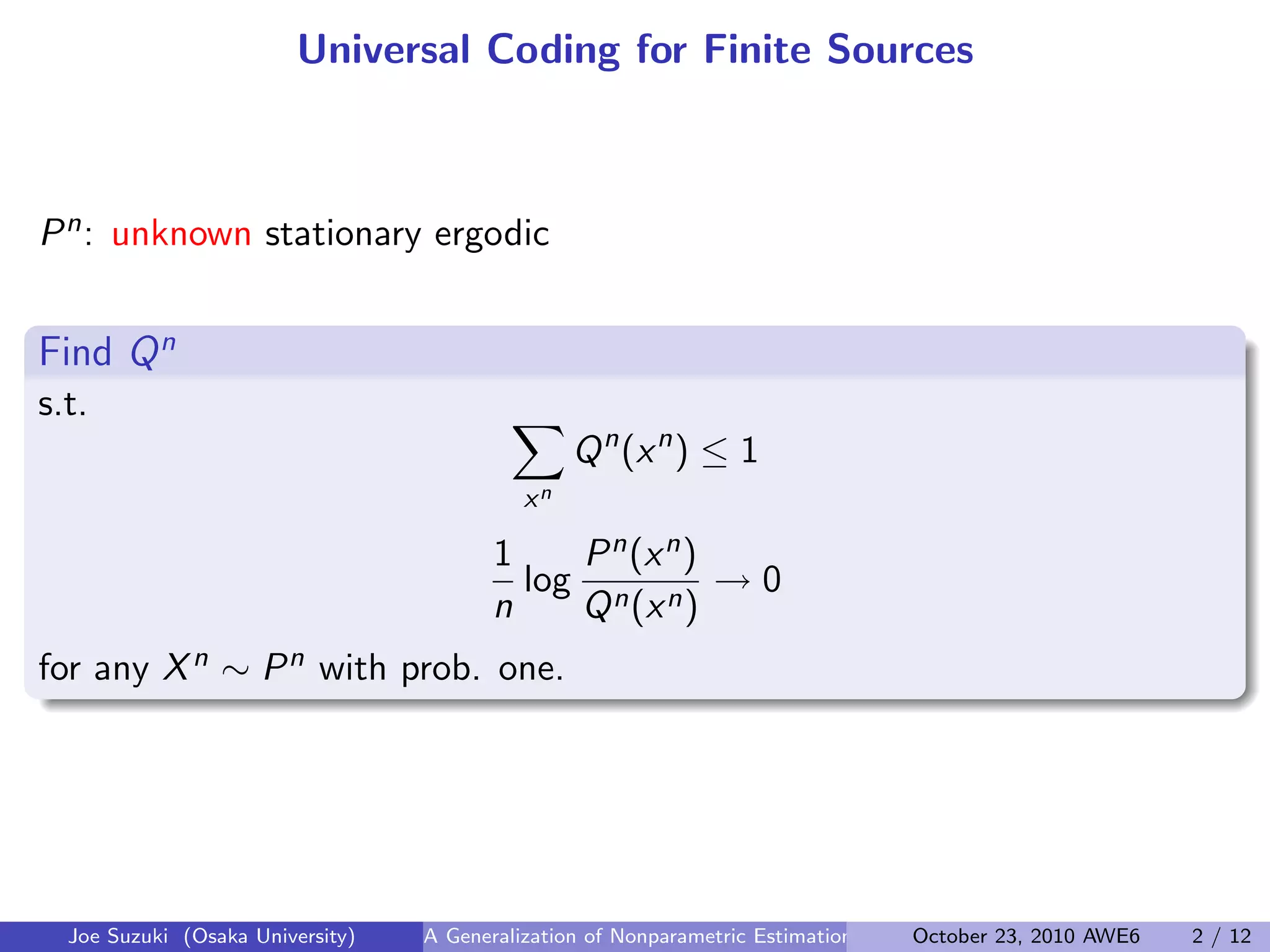

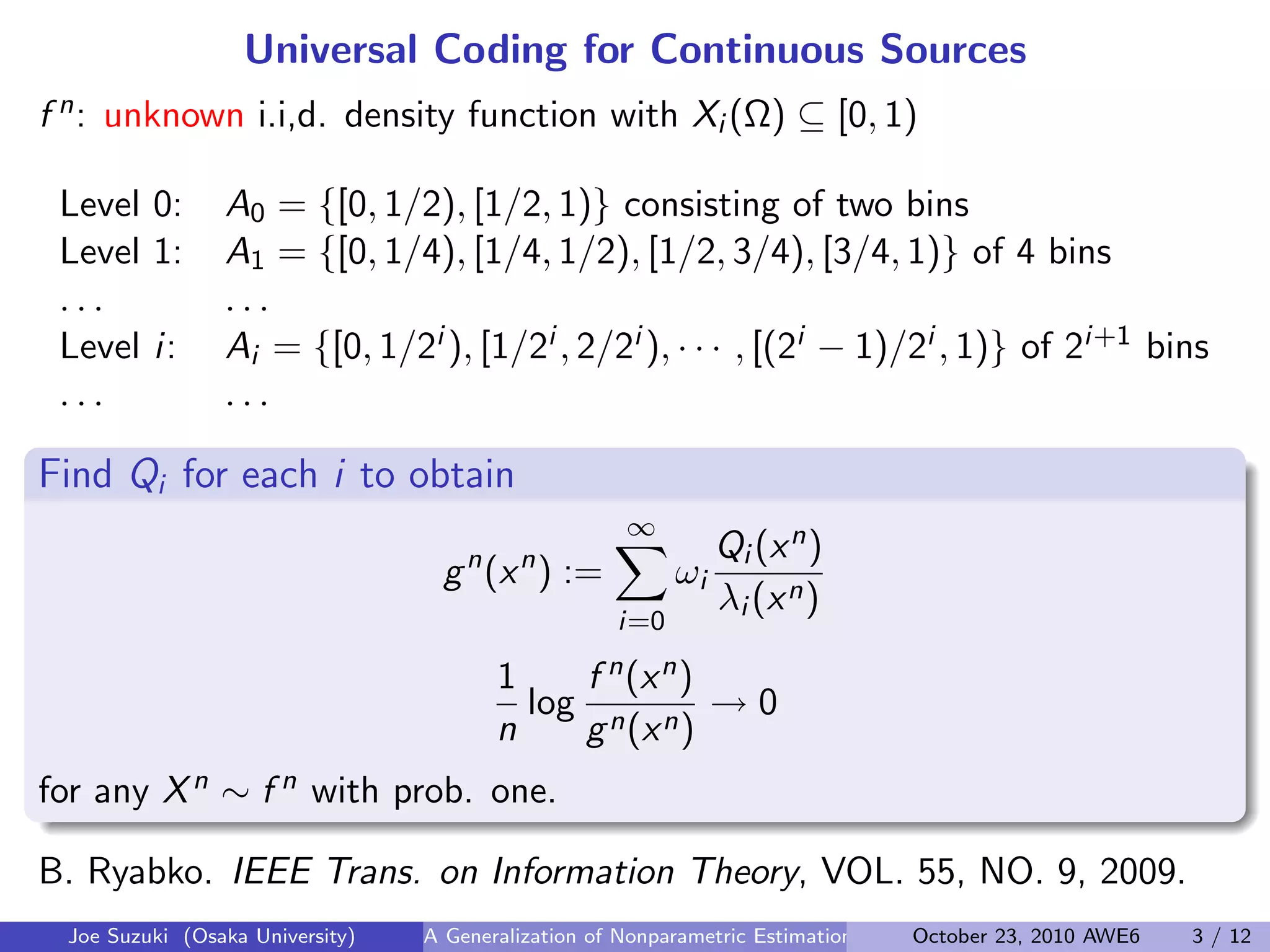

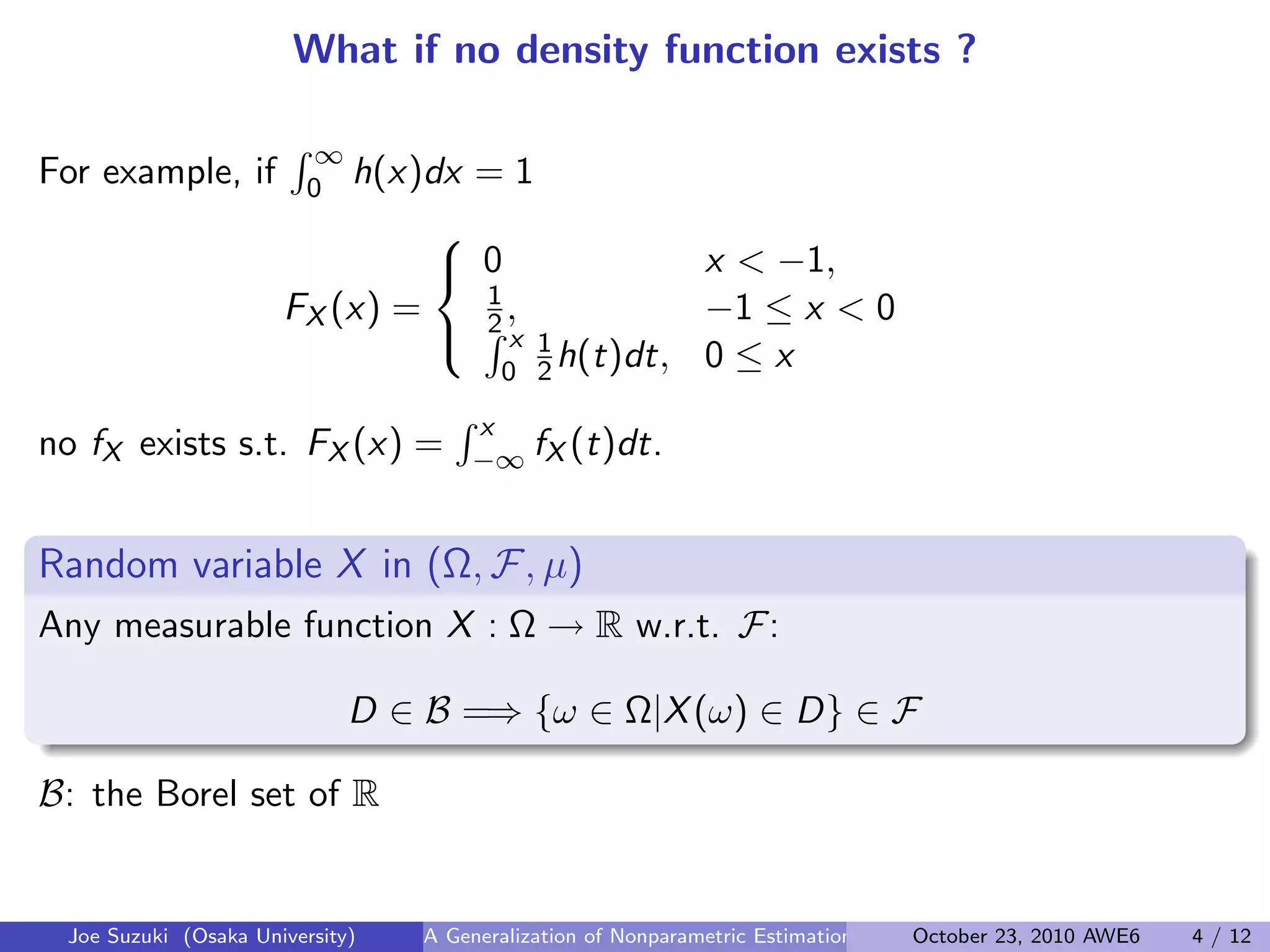

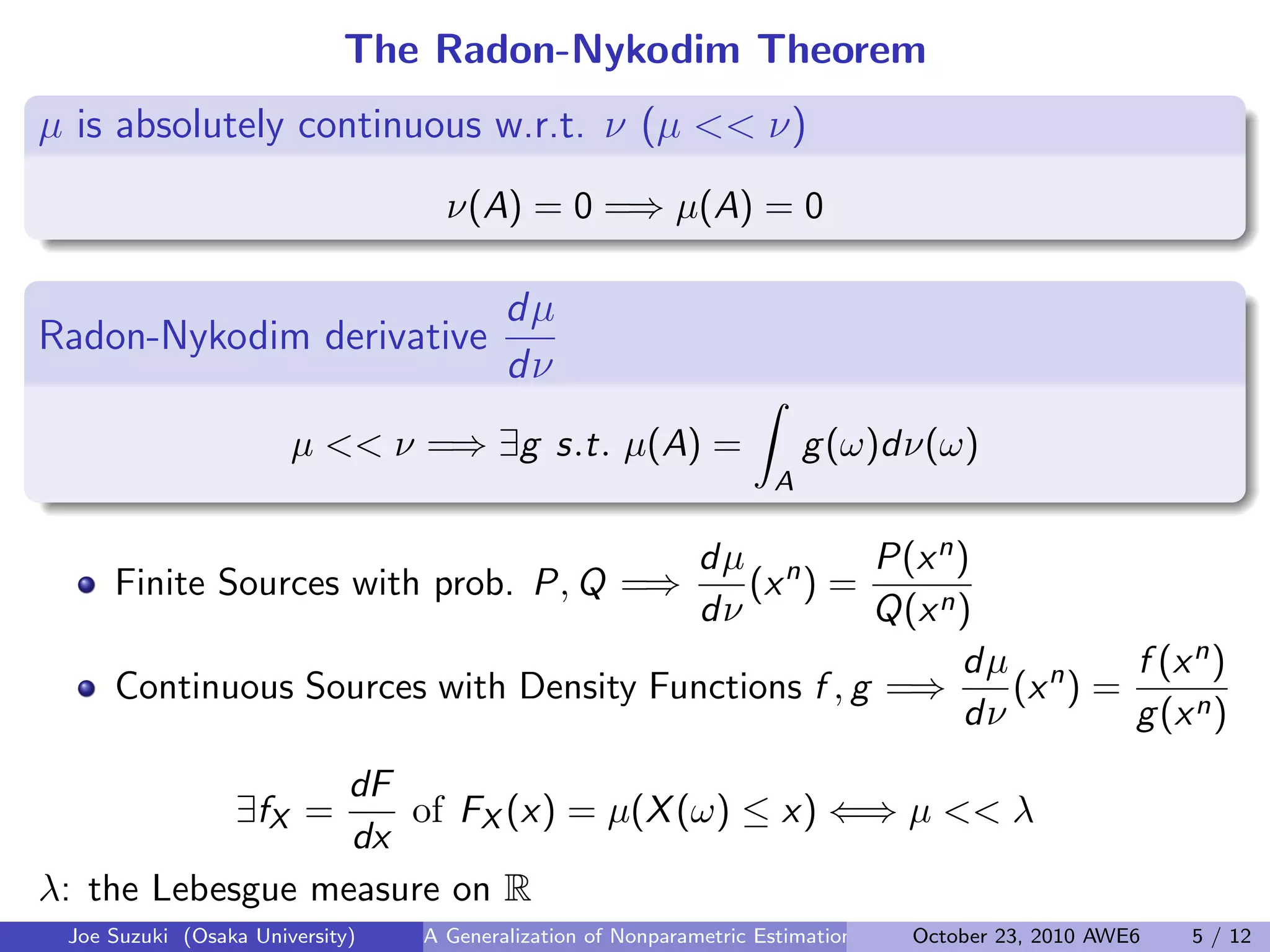

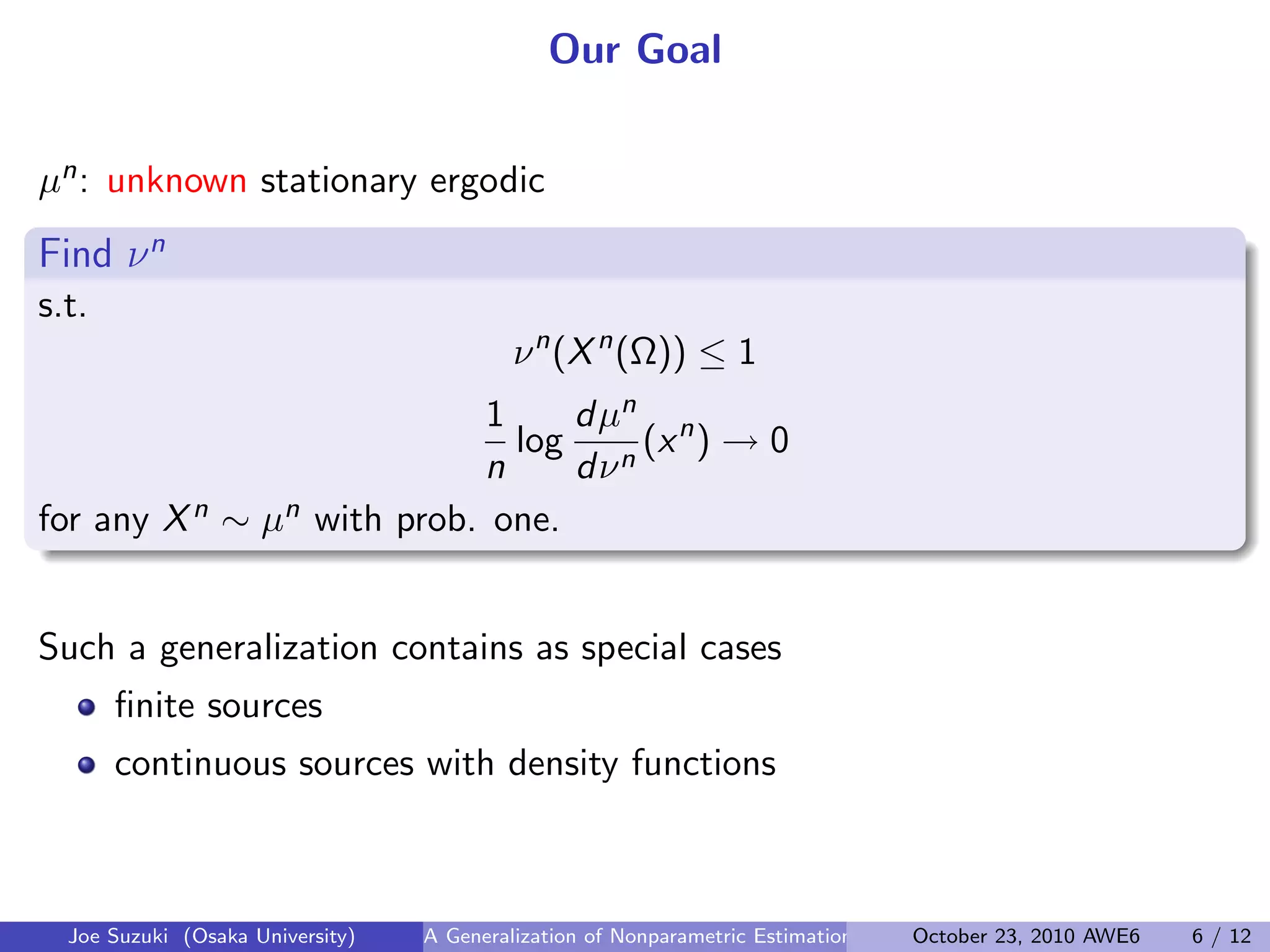

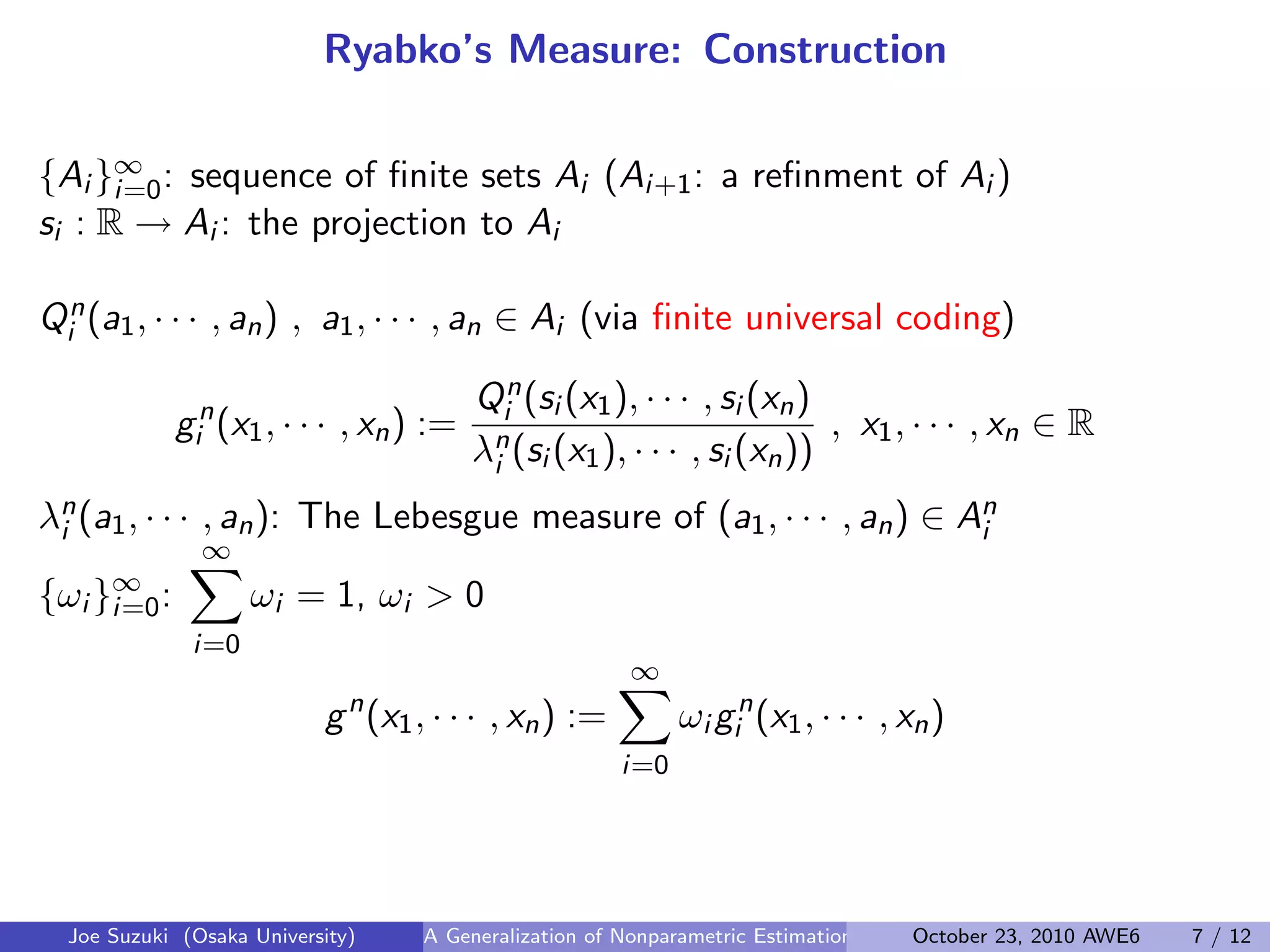

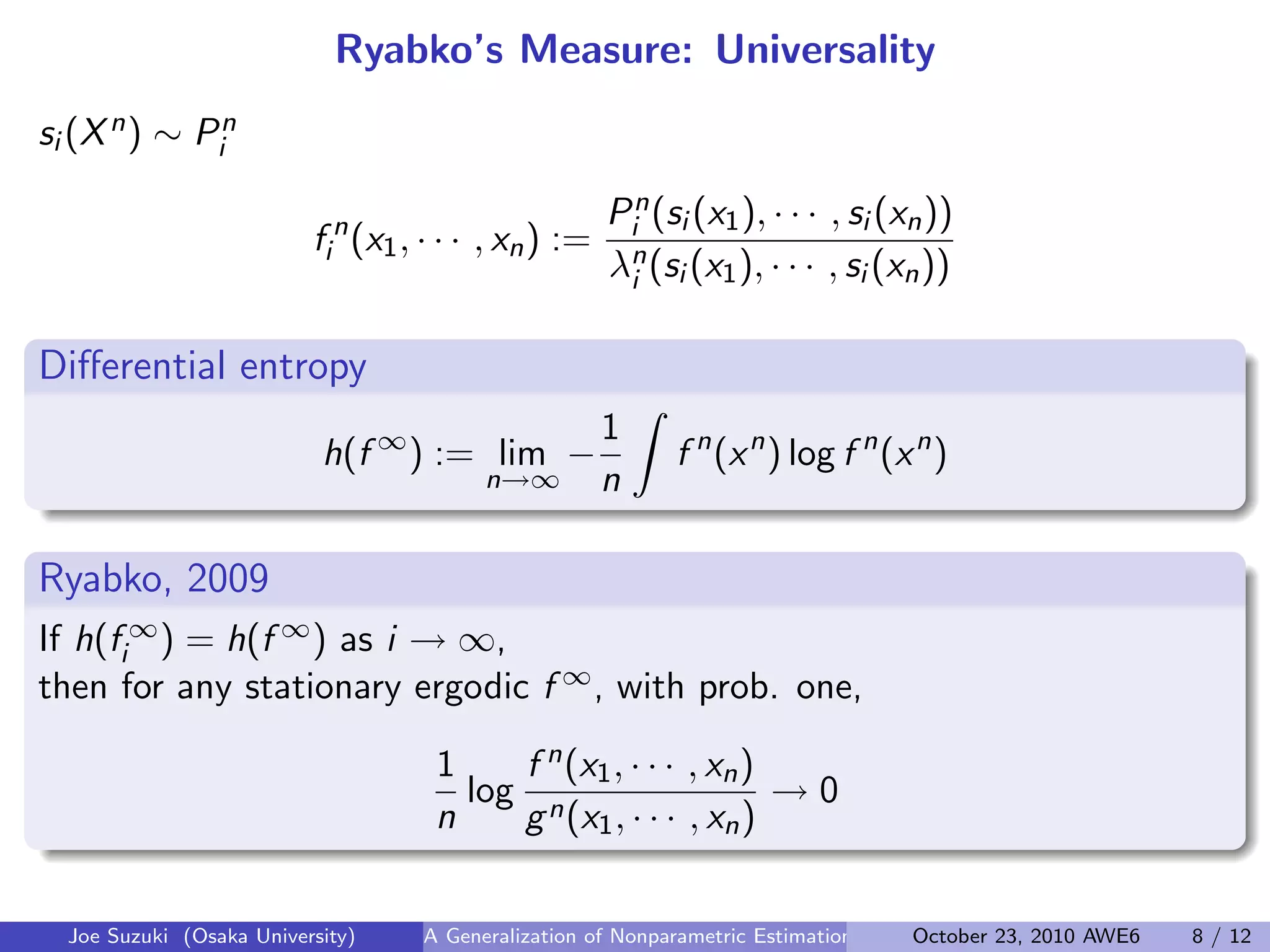

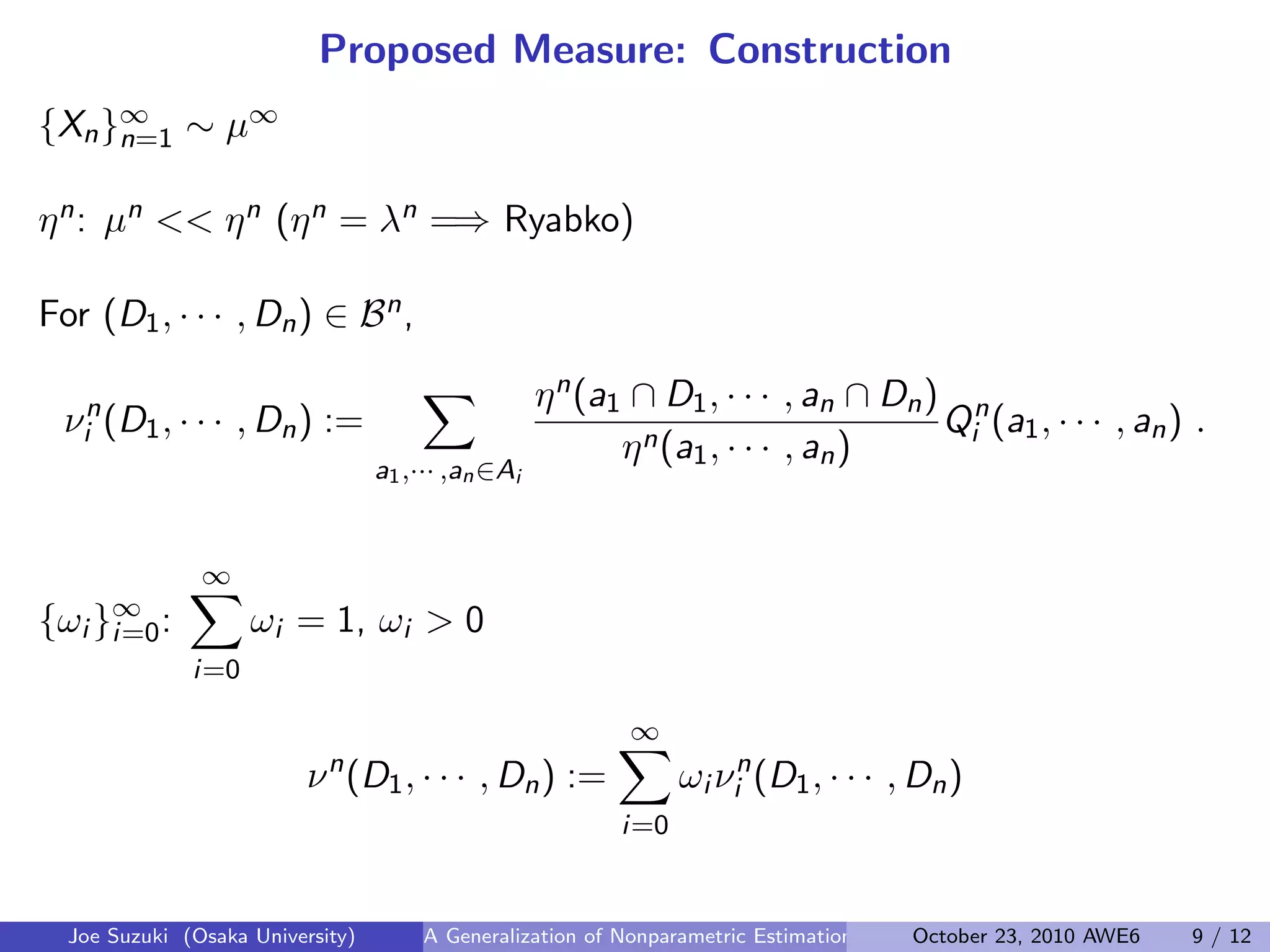

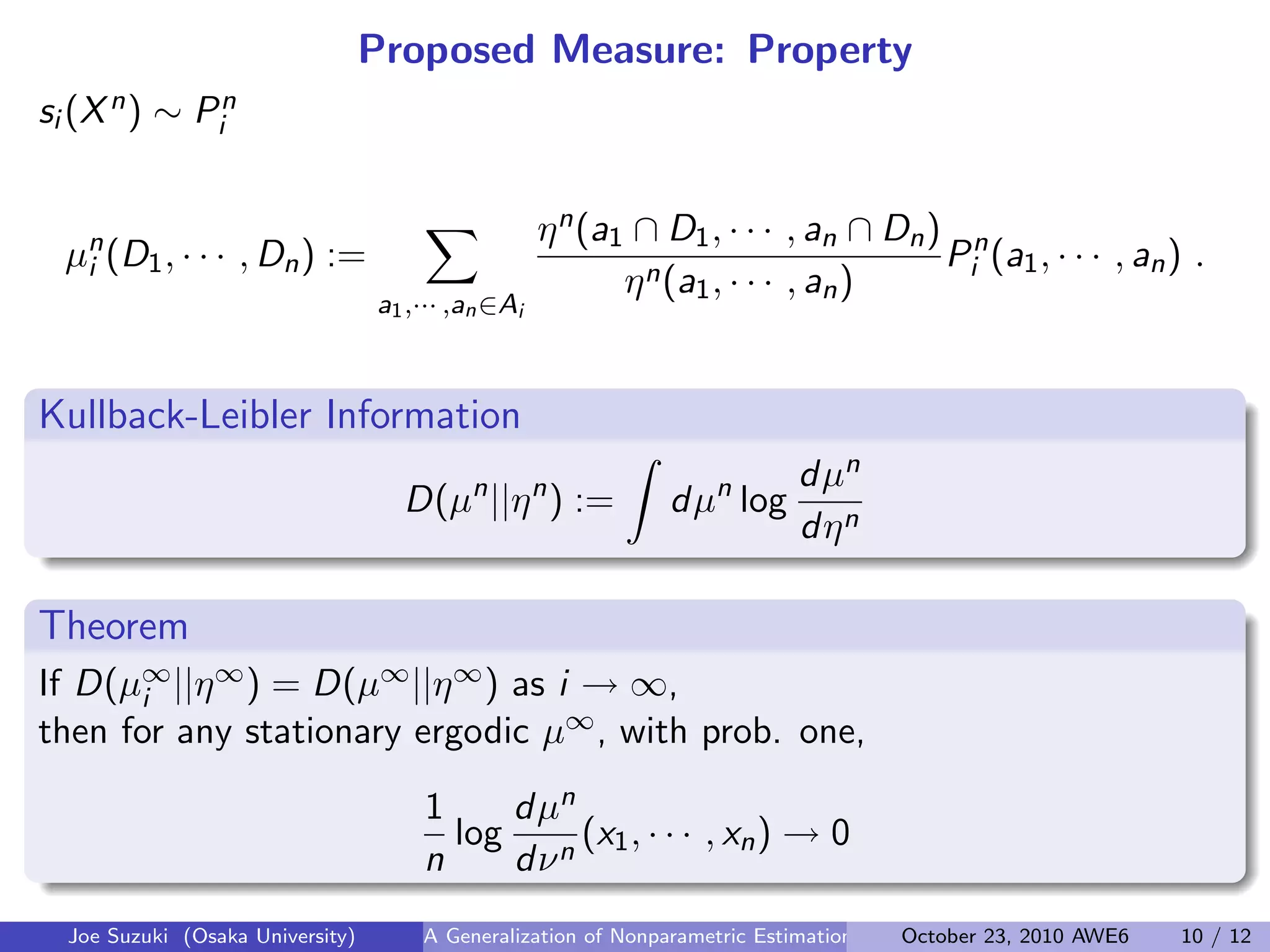

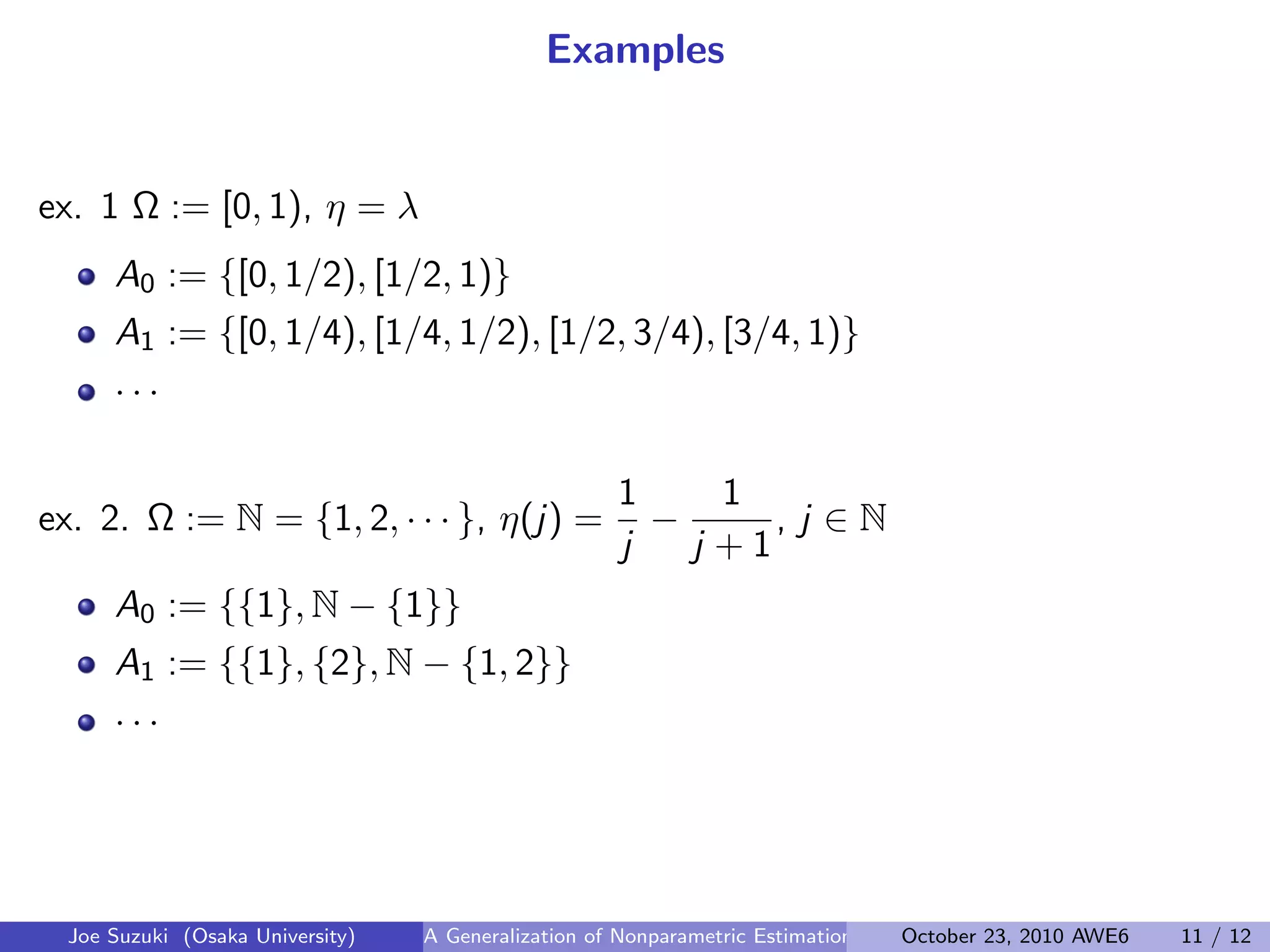

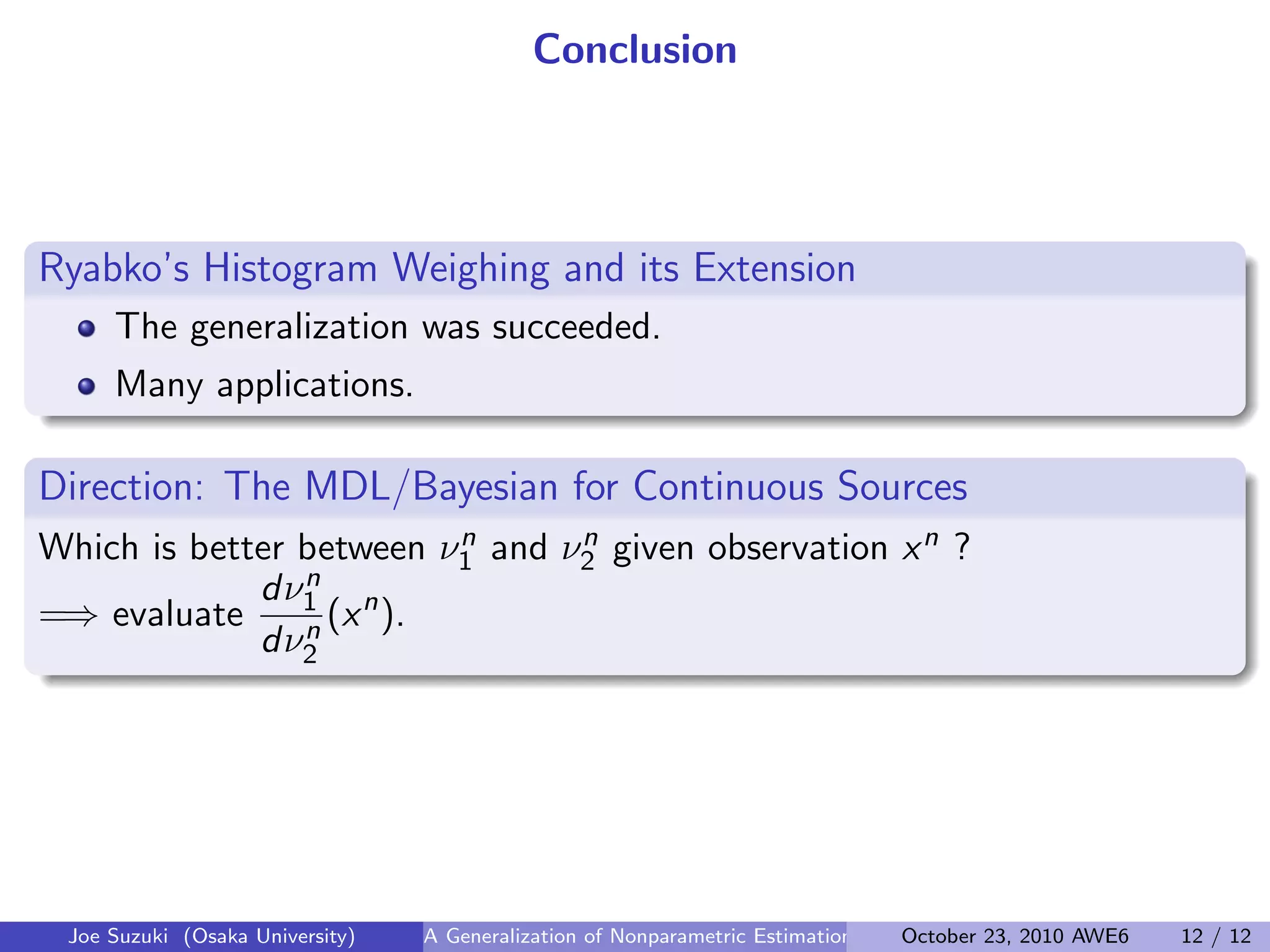

This document presents a generalization of Ryabko's measure for universal coding of stationary ergodic sources. The generalization constructs a measure νn that achieves universal coding even for sources without a density function, such as those represented by a measure μn on a measurable space. νn is defined by projecting the source onto increasingly finer partitions and weighting the resulting projections. If the Kullback-Leibler divergence between the source and the weighting measure converges across partitions, νn achieves universal coding for any stationary ergodic source μn. Examples demonstrate how the approach extends Ryabko's histogram weighting to new source types.
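To make the idea of weighting projections onto successively finer partitions concrete, here is a minimal Python sketch of the histogram-weighting special case only, not the general construction for sources without a density. It rests on several assumptions that do not come from the document: the data lie in [0, 1), level k splits the interval into 2^k equal cells, each level uses a memoryless Krichevsky-Trofimov estimator as a stand-in for a universal measure on the quantized sequence, the weights are proportional to 1/(k(k+1)), and the mixture is truncated at a finite `max_level`. All function and parameter names are hypothetical.

```python
import math


def kt_log_prob(symbols, alphabet_size):
    """Log-probability (nats) of a sequence under the Krichevsky-Trofimov estimator.

    Assumption: a memoryless estimator stands in for a universal measure
    over the quantized (finite-alphabet) sequence.
    """
    counts = [0] * alphabet_size
    log_p = 0.0
    for t, s in enumerate(symbols):
        log_p += math.log((counts[s] + 0.5) / (t + alphabet_size / 2))
        counts[s] += 1
    return log_p


def level_log_density(xs, k):
    """Log-density of xs under the level-k histogram (2**k equal cells on [0, 1))."""
    m = 2 ** k
    # Project each sample onto the index of the cell that contains it.
    cells = [min(int(x * m), m - 1) for x in xs]
    # Dividing the cell probability by the cell width 1/m gives a density,
    # which adds log(m) per sample in the log domain.
    return kt_log_prob(cells, m) + len(xs) * math.log(m)


def mixture_log_density(xs, max_level=8):
    """Log of the weighted mixture of level densities for levels 1..max_level.

    Assumption: weights proportional to 1/(k(k+1)), renormalized because
    the sum over levels is truncated at max_level.
    """
    levels = range(1, max_level + 1)
    raw = [1.0 / (k * (k + 1)) for k in levels]
    total = sum(raw)
    terms = [math.log(w / total) + level_log_density(xs, k)
             for k, w in zip(levels, raw)]
    top = max(terms)  # log-sum-exp for numerical stability
    return top + math.log(sum(math.exp(t - top) for t in terms))
```

Calling `mixture_log_density(samples)` returns a log-density in nats, so its negative can be read as an idealized code length relative to the uniform reference on [0, 1). Mixing over levels means no single partition has to be chosen in advance: the mixture is never worse than any one level by more than the log of that level's weight.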