This document summarizes results on the analysis of stochastic gradient descent (SGD) for minimizing convex functions. It shows that, under certain conditions, a continuous-time version of SGD (SGD-c) strongly approximates the discrete-time version (SGD-d) over any finite horizon. It also establishes that SGD achieves the minimax-optimal convergence rate $O(t^{-1/2})$ for $\alpha = 1/2$, closing the gap between previous lower and upper bounds. The proof relies on an "averaging from the past" procedure.

Approximation results

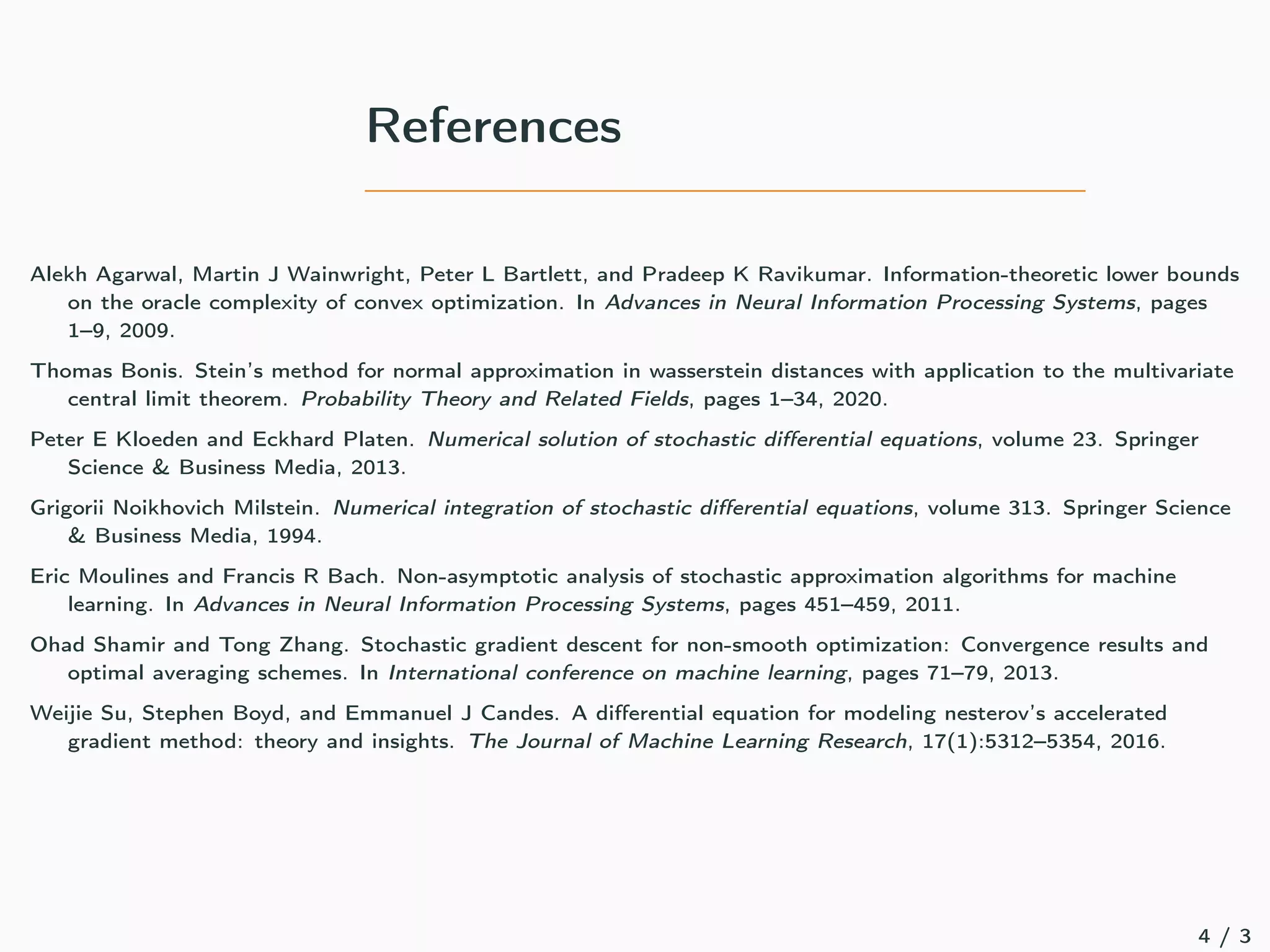

Question: can we show that SGD-d is close to SGD-c? Yes!

Finite-horizon strong approximation

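For any $T > 0$, there exists $C_T > 0$, independent of the step size $\gamma$, such that

$$
\mathbb{E}^{1/2}\Big[\sup_{t \in [0,T]} \big\| X_{\lfloor t/\gamma_\alpha \rfloor} - X_t \big\|^2\Big] \le C_T \big(\varepsilon^{1/2} \gamma^{\delta} + \gamma\big)\big(1 + \log(1/\gamma)\big),
$$

where $X_n$ denotes the SGD-d iterates and $X_t$ the SGD-c process, $\delta = \min(1, 1/(2 - 2\alpha))$, and

$$
\varepsilon = \sup_{n \gamma_\alpha \le T} \mathbb{E}\Big[W_2^2\big(\nu_n, \mathrm{N}(0, \Sigma(X_n))\big)\Big],
$$

with $\nu_n$ the distribution of $H(X_n, \cdot) - \nabla f(X_n)$ conditionally on $X_n$, and $W_2$ the Wasserstein distance of order 2.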
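To see how the two terms of the bound trade off, here is a small sketch that evaluates the right-hand side with the unknown constant $C_T$ replaced by a placeholder `c_t = 1` (an assumption, since the slide gives no value). The helper names `delta` and `strong_error_bound` are hypothetical, and $\gamma$ is restricted to $(0, 1)$ so that $\log(1/\gamma) > 0$.

```python
import math


def delta(alpha: float) -> float:
    """delta = min(1, 1 / (2 - 2 * alpha)) for alpha in [0, 1)."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must lie in [0, 1)")
    return min(1.0, 1.0 / (2.0 - 2.0 * alpha))


def strong_error_bound(gamma: float, alpha: float, eps: float, c_t: float = 1.0) -> float:
    """C_T * (eps**0.5 * gamma**delta + gamma) * (1 + log(1 / gamma)).

    c_t is a placeholder for the unknown constant C_T (set to 1 by default).
    gamma is restricted to (0, 1) here so that log(1 / gamma) > 0.
    """
    if not 0.0 < gamma < 1.0:
        raise ValueError("gamma must lie in (0, 1)")
    if eps < 0.0:
        raise ValueError("eps is an expected squared distance, so it is non-negative")
    noise_term = math.sqrt(eps) * gamma ** delta(alpha)  # non-Gaussian noise contribution
    return c_t * (noise_term + gamma) * (1.0 + math.log(1.0 / gamma))


# With Gaussian noise (eps = 0) only the term gamma * (1 + log(1 / gamma)) remains.
for gamma in (1e-1, 1e-2, 1e-3):
    print(gamma, strong_error_bound(gamma, 0.5, 0.0), strong_error_bound(gamma, 0.5, 1e-2))
```

For $\alpha = 0$ we get $\delta = 1/2$, so the noise term $\varepsilon^{1/2}\gamma^{1/2}$ exceeds the discretization term $\gamma$ as soon as $\varepsilon > \gamma$: the closeness of the noise to a Gaussian then limits the approximation quality.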

The proof is based on Milstein (1994) and Kloeden and Platen (2013).
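The coupling behind the theorem can be illustrated numerically on a toy problem. This is a sketch under loudly stated assumptions that are not given on this slide: $f(x) = x^2/2$ in one dimension, Gaussian noise $H(x, z) = x + \sigma z$ (so $\varepsilon = 0$), SGD-d taken as $X_{n+1} = X_n - \gamma (n+1)^{-\alpha} H(X_n, Z_{n+1})$, SGD-c taken as $\mathrm{d}X_t = -(\gamma_\alpha + t)^{-\alpha}\{X_t\,\mathrm{d}t + \gamma_\alpha^{1/2}\sigma\,\mathrm{d}B_t\}$, and $\gamma_\alpha = \gamma^{1/(1-\alpha)}$. Both paths are driven by the same Brownian increments; the function name `max_coupling_gap` is hypothetical, and the gap is checked only on the grid $n\gamma_\alpha \le T$.

```python
import math
import random


def max_coupling_gap(gamma, alpha, sigma=1.0, horizon=1.0, substeps=50, x0=1.0, seed=0):
    """Largest gap |X_n - X_{n * gamma_a}| between coupled SGD-d and SGD-c paths.

    Hypothetical toy model: f(x) = x**2 / 2, H(x, z) = x + sigma * z, z ~ N(0, 1).
    Assumed dynamics:
      SGD-d: X_{n+1} = X_n - gamma * (n + 1)**(-alpha) * H(X_n, Z_{n+1})
      SGD-c: dX_t = -(gamma_a + t)**(-alpha) * (X_t dt + sqrt(gamma_a) * sigma dB_t)
    with gamma_a = gamma**(1 / (1 - alpha)).
    """
    rng = random.Random(seed)
    gamma_a = gamma ** (1.0 / (1.0 - alpha))
    n_steps = int(horizon / gamma_a)
    h = gamma_a / substeps  # fine step used to simulate SGD-c
    x_d = x_c = x0
    max_gap = 0.0
    for n in range(n_steps):
        increments = [rng.gauss(0.0, math.sqrt(h)) for _ in range(substeps)]
        # SGD-c on [n * gamma_a, (n + 1) * gamma_a] with Euler-Maruyama on the fine
        # grid; the noise is additive, so this coincides with the Milstein scheme.
        for k, db in enumerate(increments):
            scale = (gamma_a + n * gamma_a + k * h) ** (-alpha)
            x_c -= scale * (x_c * h + math.sqrt(gamma_a) * sigma * db)
        # SGD-d reuses the same Brownian increment, rescaled to a standard normal Z_{n+1}.
        z = sum(increments) / math.sqrt(gamma_a)
        x_d -= gamma * (n + 1) ** (-alpha) * (x_d + sigma * z)
        max_gap = max(max_gap, abs(x_d - x_c))
    return max_gap


if __name__ == "__main__":
    # Gaussian noise means eps = 0, so the gap should shrink as gamma decreases.
    for gamma in (0.1, 0.01):
        gaps = [max_coupling_gap(gamma, alpha=0.0, seed=s) for s in range(10)]
        print(f"gamma={gamma}: mean max gap {sum(gaps) / len(gaps):.4f}")
```

Sharing the Brownian increments is what makes this a strong (pathwise) comparison rather than a comparison of distributions.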

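If $H$ is a mini-batch estimator of size $M$, $H(x, \{z_i\}_{i=1}^{M}) = M^{-1} \sum_{i=1}^{M} \nabla \hat{f}(x, z_i)$, then $\varepsilon = O(M^{-2})$ by recent advances in Stein's method (Bonis, 2020). This quantifies the effect of the batch size.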
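The batch-size effect on $\varepsilon$ can be probed empirically in one dimension, where $W_2$ is attained by the monotone (quantile) coupling. This sketch assumes a hypothetical skewed per-sample noise $\mathrm{Exp}(1) - 1$ (mean 0, variance 1) and takes $\Sigma$ to be the variance $1/M$ of the batch average, so the Gaussian matches the first two moments. It is a Monte Carlo estimate, not the Stein's-method bound itself, and the helper names are illustrative.

```python
import math
import random
from statistics import NormalDist


def w2_squared_to_gaussian(samples, sd):
    """Approximate W_2^2(empirical law of samples, N(0, sd^2)) in 1D.

    Pairs the sorted samples with Gaussian quantiles at the midpoints (i + 0.5) / n,
    which is the optimal (monotone) coupling in one dimension.
    """
    xs = sorted(samples)
    n = len(xs)
    gauss = NormalDist(0.0, sd)
    return sum((x - gauss.inv_cdf((i + 0.5) / n)) ** 2 for i, x in enumerate(xs)) / n


def batch_noise_w2(batch_size, n_samples=20000, seed=0):
    """W_2^2 between a batch-averaged skewed noise and its Gaussian counterpart.

    Hypothetical noise model: each per-sample error is Exp(1) - 1, so the
    batch average has mean 0 and variance 1 / batch_size.
    """
    rng = random.Random(seed)
    noise = [
        sum(rng.expovariate(1.0) - 1.0 for _ in range(batch_size)) / batch_size
        for _ in range(n_samples)
    ]
    return w2_squared_to_gaussian(noise, sd=1.0 / math.sqrt(batch_size))


if __name__ == "__main__":
    for m in (1, 4, 16):
        print(m, batch_noise_w2(m))
```

The estimates should fall quickly with $M$; once they reach the Monte Carlo error floor set by `n_samples`, the $M^{-2}$ decay is no longer visible.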

![Continuous and Discrete Time Analysis of SGD, slide 2](https://image.slidesharecdn.com/hausdorff-210310143447/75/Continuous-and-Discrete-Time-Analysis-of-SGD-2-2048.jpg)

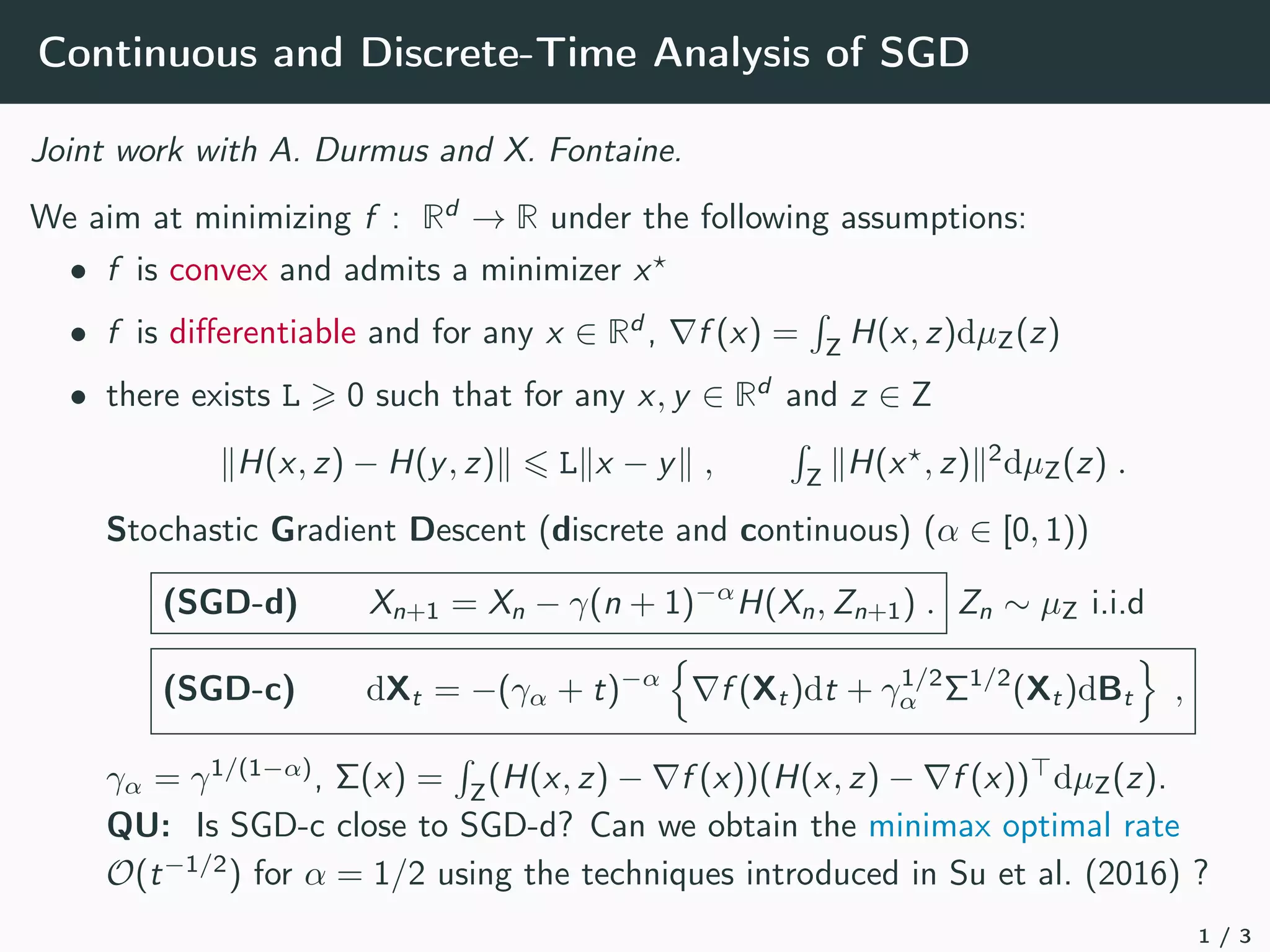

Convergence results

Question: what is the optimal convergence rate?

Previous work:

• Minimax lower bound → $O(t^{-1/2})$ (Agarwal et al., 2009)
• Bounded gradient case → $O(t^{-1/2})$ (Shamir and Zhang, 2013)
• Our setting, best previous upper bound → $O(t^{-1/3})$ (Moulines and Bach, 2011)

We close the gap between lower and upper bounds.
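To make the size of the gap concrete: if every constant is set to 1 (a simplification, not a claim from the slides), reaching accuracy $\eta$ at rate $t^{-1/2}$ takes about $\eta^{-2}$ iterations, versus $\eta^{-3}$ at rate $t^{-1/3}$. The function name below is hypothetical.

```python
import math


def iterations_for_accuracy(target, rate_exponent):
    """Smallest t with t**(-rate_exponent) <= target, taking every constant equal to 1."""
    if not 0.0 < target < 1.0 or rate_exponent <= 0.0:
        raise ValueError("need 0 < target < 1 and rate_exponent > 0")
    return math.ceil((1.0 / target) ** (1.0 / rate_exponent))


for name, p in (("t^(-1/2)", 1 / 2), ("t^(-1/3)", 1 / 3)):
    print(name, iterations_for_accuracy(1e-2, p))
```

At $\eta = 10^{-2}$ this is roughly $10^4$ versus $10^6$ iterations, a factor of $\eta^{-1}$.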

Optimal convergence rates

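In our setting, for any $\alpha \in [0, 1)$ there exists $C_\alpha > 0$ such that for any integer $n \ge 1$,

$$
\mathbb{E}\big[f(X_n) - f(x^\star)\big] \le C_\alpha \max\big(n^{-\alpha}, n^{-1+\alpha}\big),
$$

where $x^\star$ is a minimizer of $f$.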
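The two terms balance when $n^{-\alpha} = n^{-1+\alpha}$, that is at $\alpha = 1/2$, where the bound becomes $C_{1/2}\, n^{-1/2}$ and matches the minimax lower bound $t^{-1/2}$. A quick numerical check of this balance, with the constant $C_\alpha$ replaced by a placeholder 1 and hypothetical helper names:

```python
def rate_bound(n, alpha, c_alpha=1.0):
    """c_alpha * max(n**(-alpha), n**(-1 + alpha)); c_alpha is a placeholder constant."""
    if n < 1 or not 0.0 <= alpha < 1.0:
        raise ValueError("need n >= 1 and alpha in [0, 1)")
    return c_alpha * max(n ** (-alpha), n ** (-1.0 + alpha))


def best_alpha(n, grid_size=100):
    """Grid search over alpha in {0, 1/grid_size, ...} for the smallest bound at iteration n."""
    grid = [k / grid_size for k in range(grid_size)]
    return min(grid, key=lambda a: rate_bound(n, a))


print(best_alpha(10_000), rate_bound(10_000, 0.5))
```

Any $\alpha \ne 1/2$ leaves one of the two terms decaying slower than $n^{-1/2}$.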

The proof relies on the “averaging from the past” procedure of Shamir and Zhang (2013) and also applies to SGD-c.

![Continuous and Discrete Time Analysis of SGD, slide 3](https://image.slidesharecdn.com/hausdorff-210310143447/75/Continuous-and-Discrete-Time-Analysis-of-SGD-3-2048.jpg)