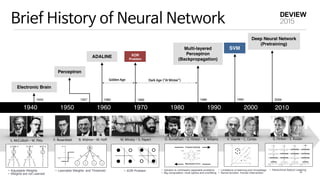

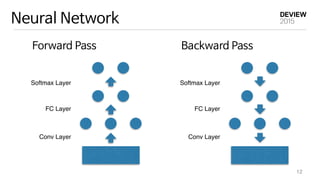

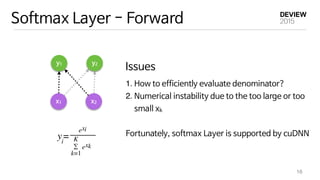

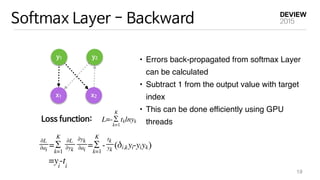

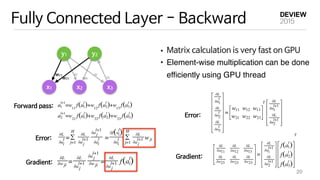

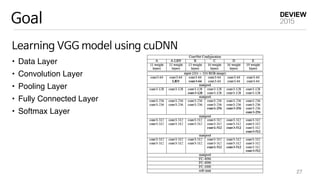

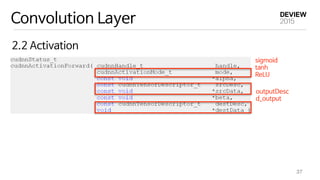

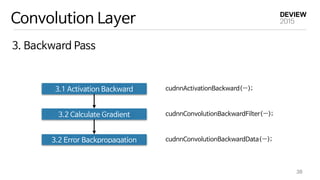

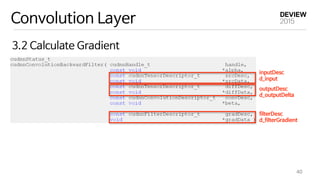

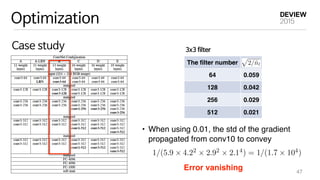

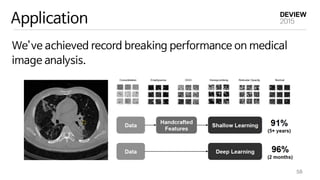

This document provides an overview of deep learning and its implementation on GPUs using cuDNN. It begins with a brief history of neural networks and an introduction to common deep learning models such as convolutional neural networks. It then discusses implementing these models with cuDNN, including initialization and the forward and backward passes for convolution, pooling, and fully connected layers. It covers optimization issues such as weight initialization and speeding up training. Finally, it introduces VUNO-Net, the company's deep learning framework, and discusses its performance, applications, and visualization.