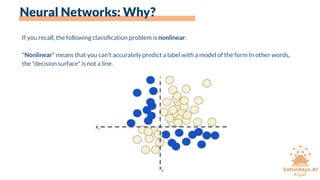

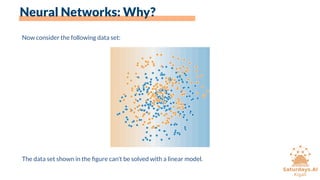

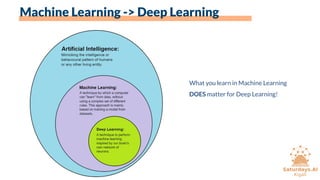

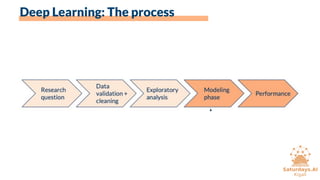

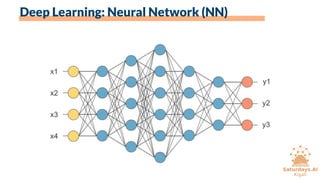

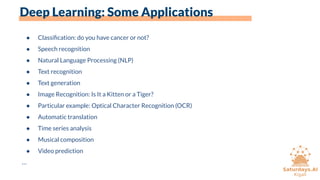

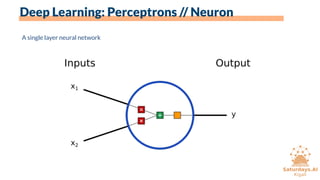

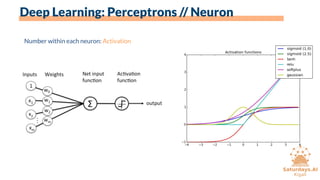

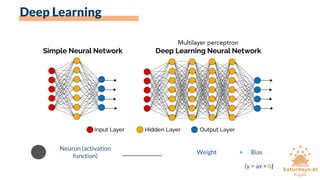

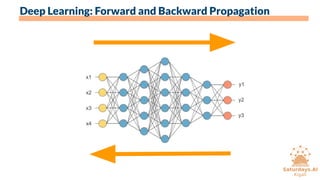

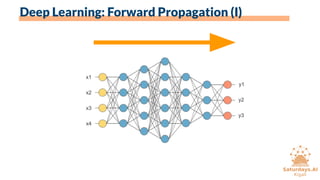

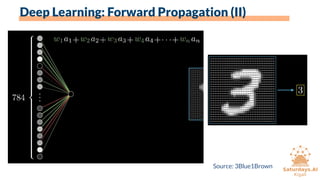

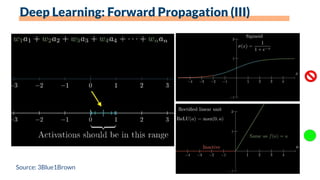

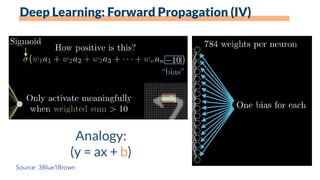

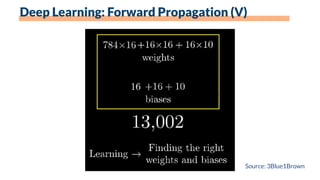

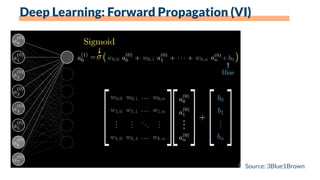

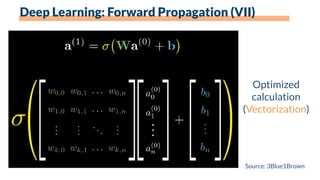

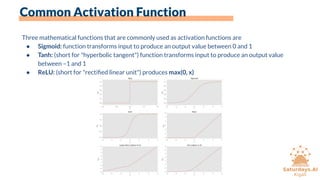

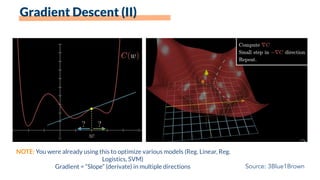

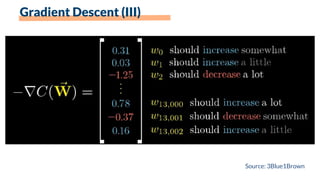

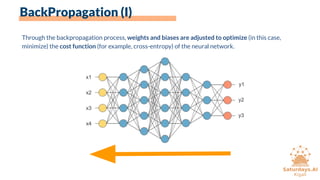

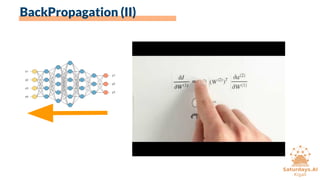

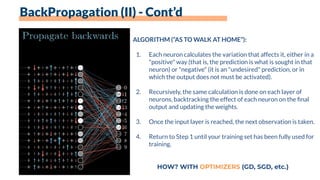

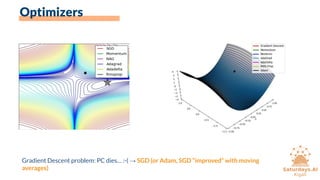

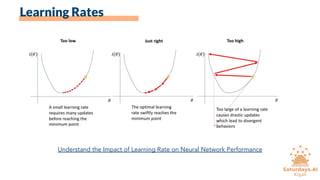

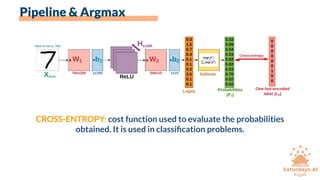

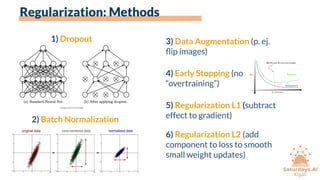

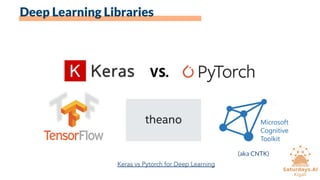

The document outlines the agenda for a workshop on neural networks and deep learning scheduled to start at 14:00, covering topics such as activation functions, gradient descent, and backpropagation. It explains the workings of neural networks, including the roles of artificial neurons, activation functions, and various training methods. Additionally, it highlights applications of deep learning in fields like image recognition and natural language processing, while emphasizing the importance of understanding concepts like regularization and optimization.
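To make the listed topics concrete, here is a minimal sketch of one artificial neuron with a sigmoid activation function, trained by gradient descent. It is not taken from the workshop slides. The toy AND dataset, the names `sigmoid`, `predict`, `train`, and `AND_SAMPLES`, the cross-entropy loss, and the hyperparameters are all illustrative assumptions. With a single neuron, backpropagation reduces to one application of the chain rule.

```python
import math

def sigmoid(z):
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Artificial neuron: weighted sum of the inputs plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train(samples, learning_rate=0.5, epochs=5000):
    """Fit one neuron with per-sample (stochastic) gradient descent.

    Assumes a cross-entropy loss; with a sigmoid output the chain rule
    gives dL/dz = output - target, which is the whole backward pass here.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = predict(weights, bias, inputs)
            error = output - target  # gradient of the loss w.r.t. z
            # Step each parameter against its gradient.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical toy dataset: logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train(AND_SAMPLES)
    for inputs, target in AND_SAMPLES:
        print(inputs, target, round(predict(weights, bias, inputs), 3))
```

A single neuron can only separate classes with a straight line, which is enough for AND. Deeper networks stack many such units and propagate the same chain-rule gradient backward through every layer.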