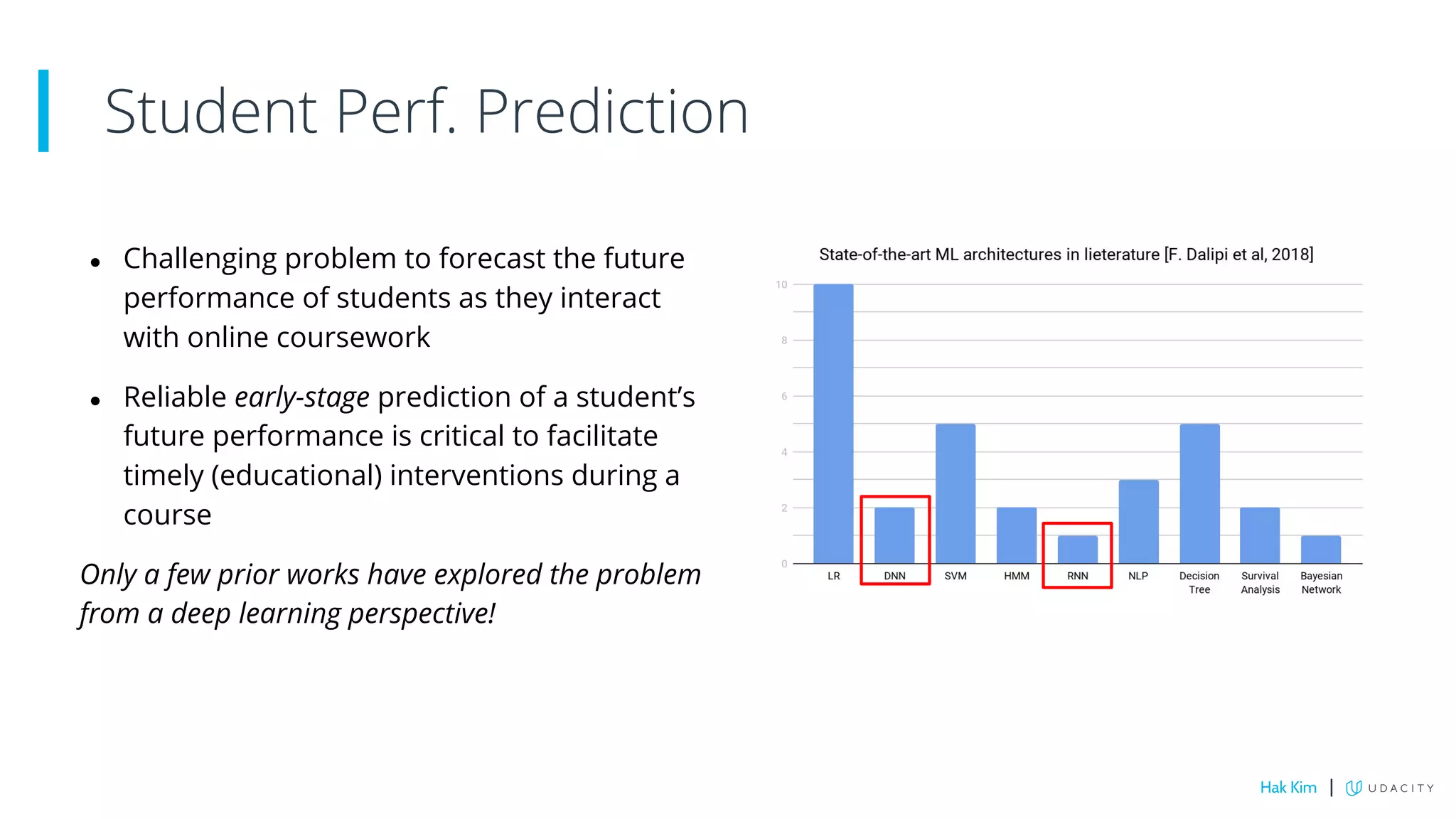

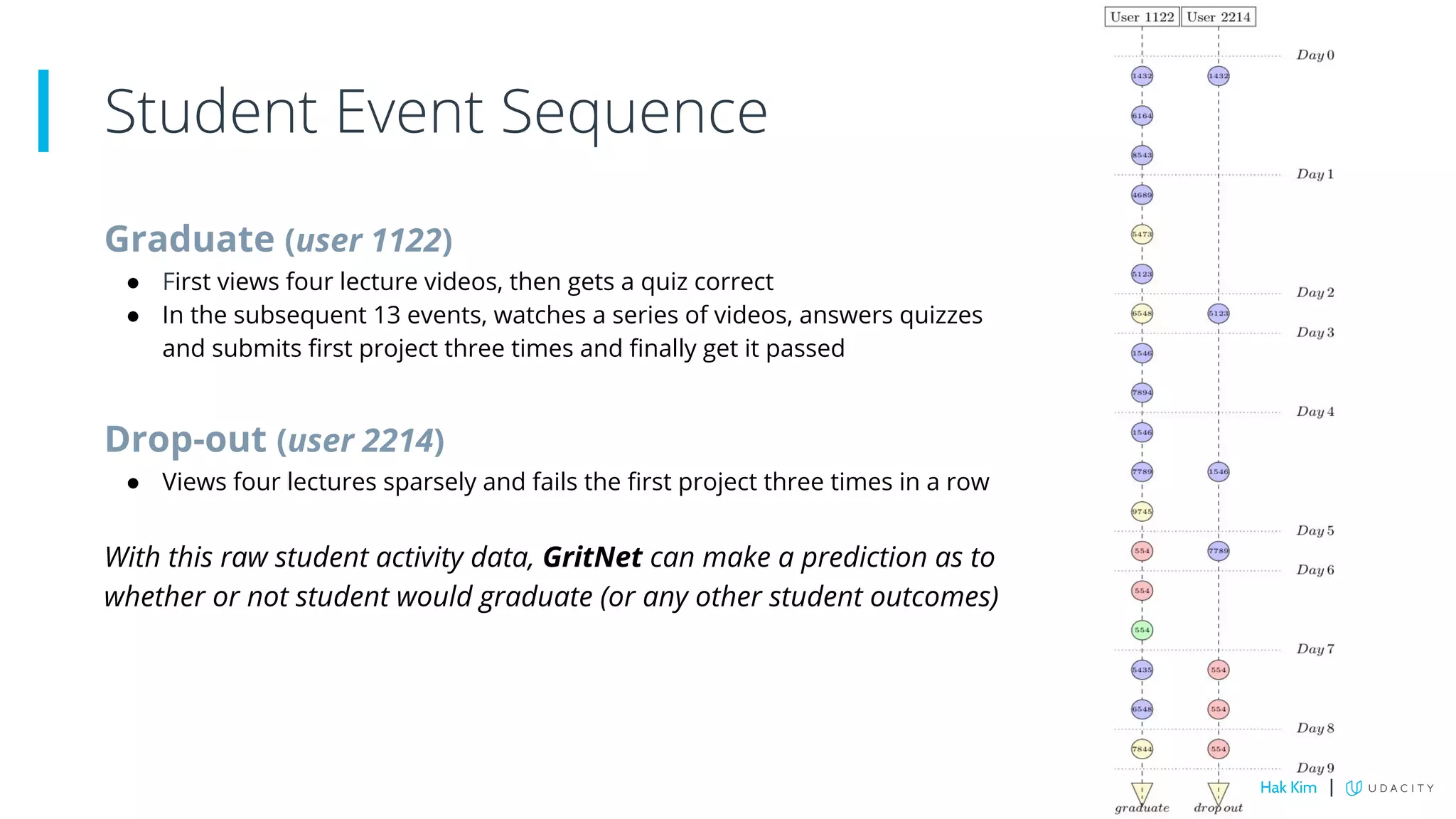

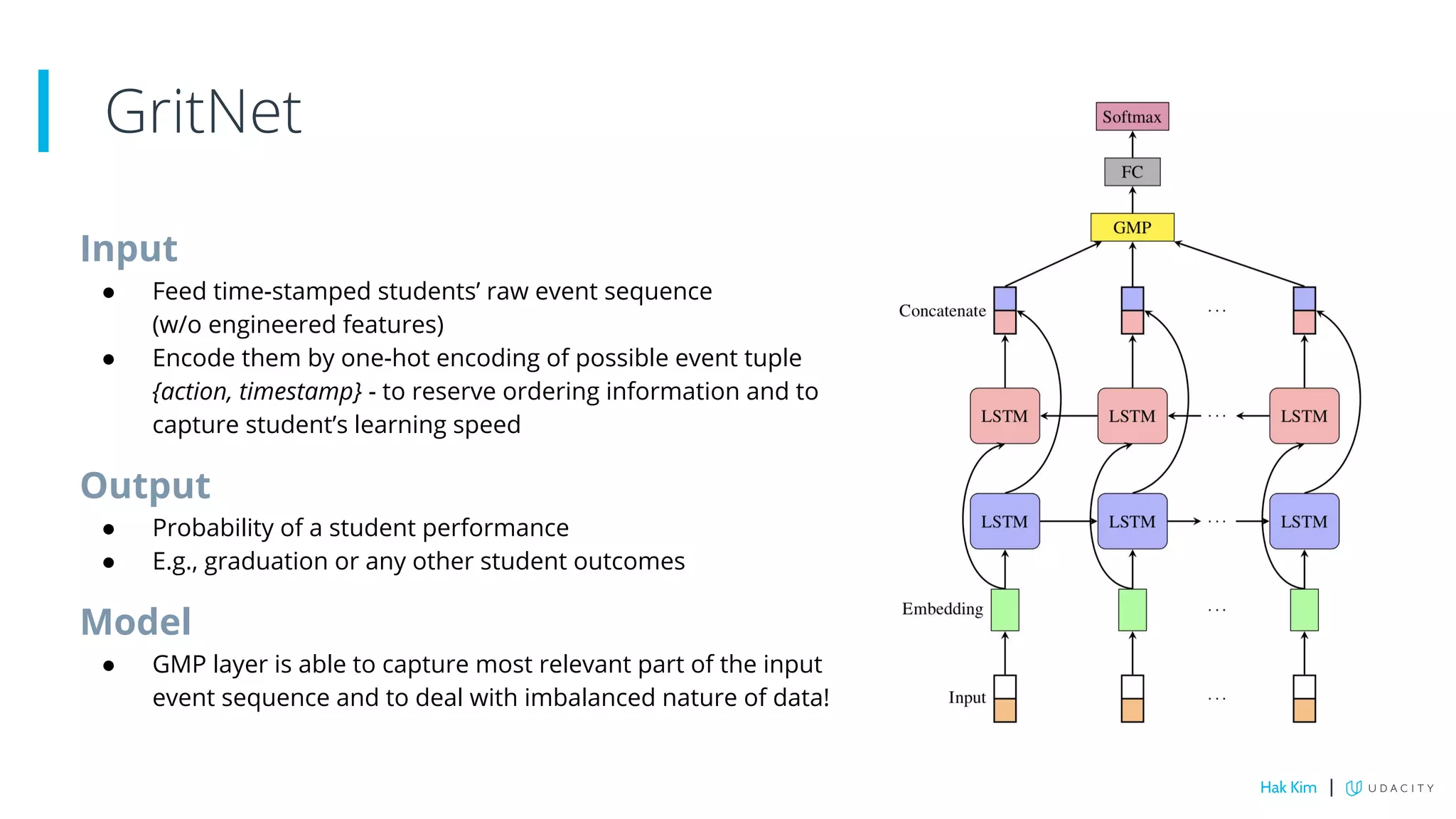

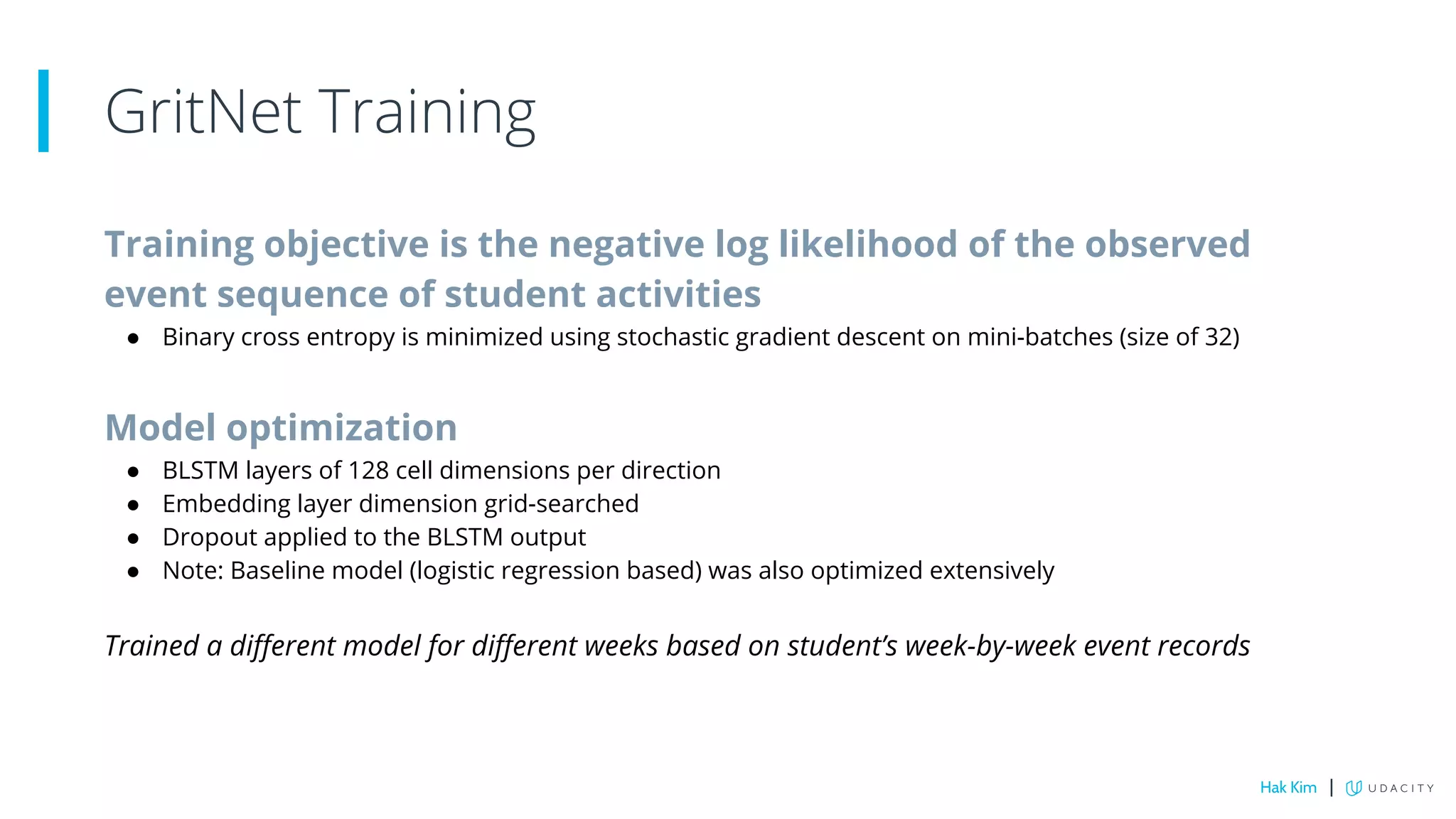

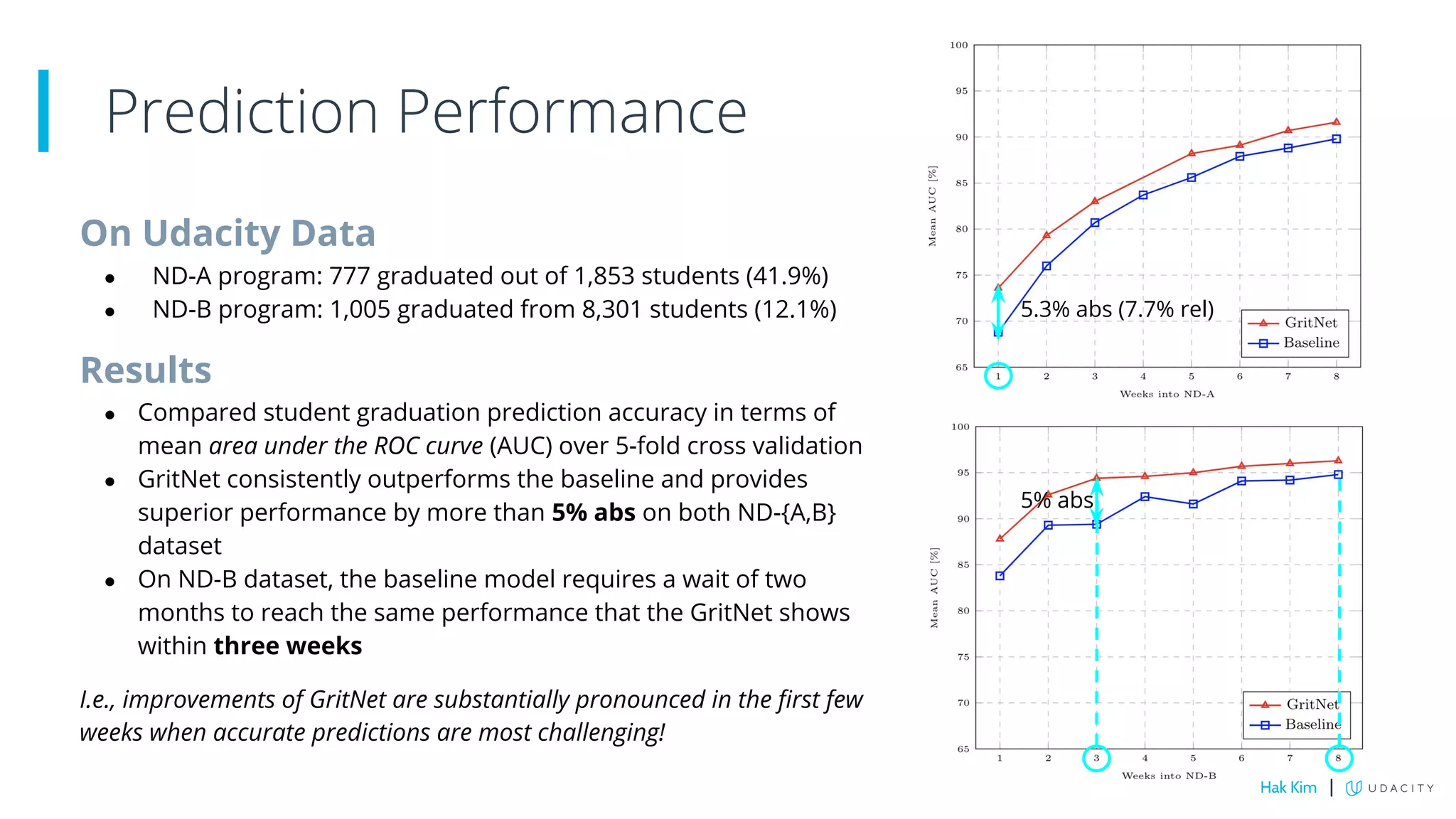

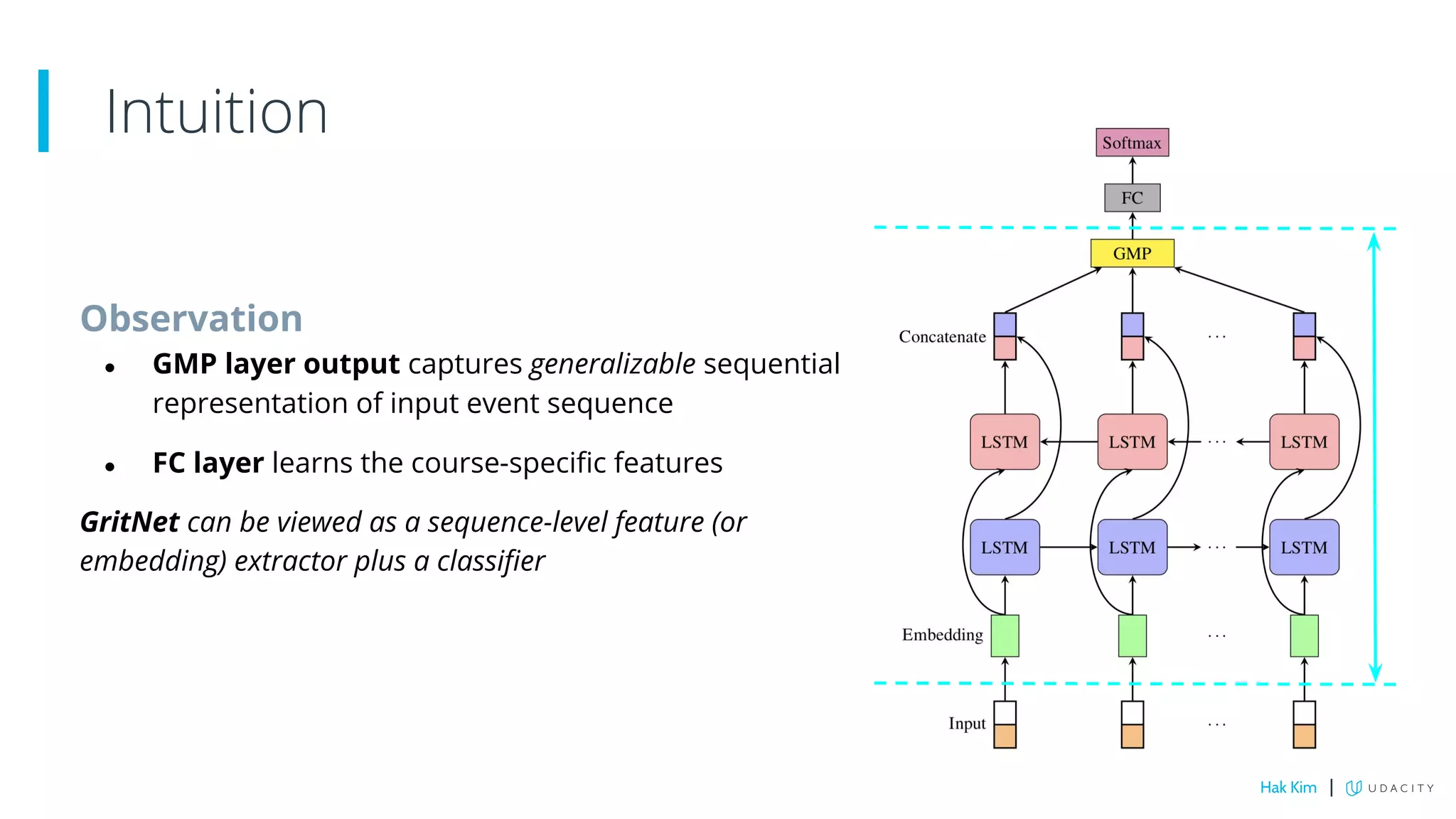

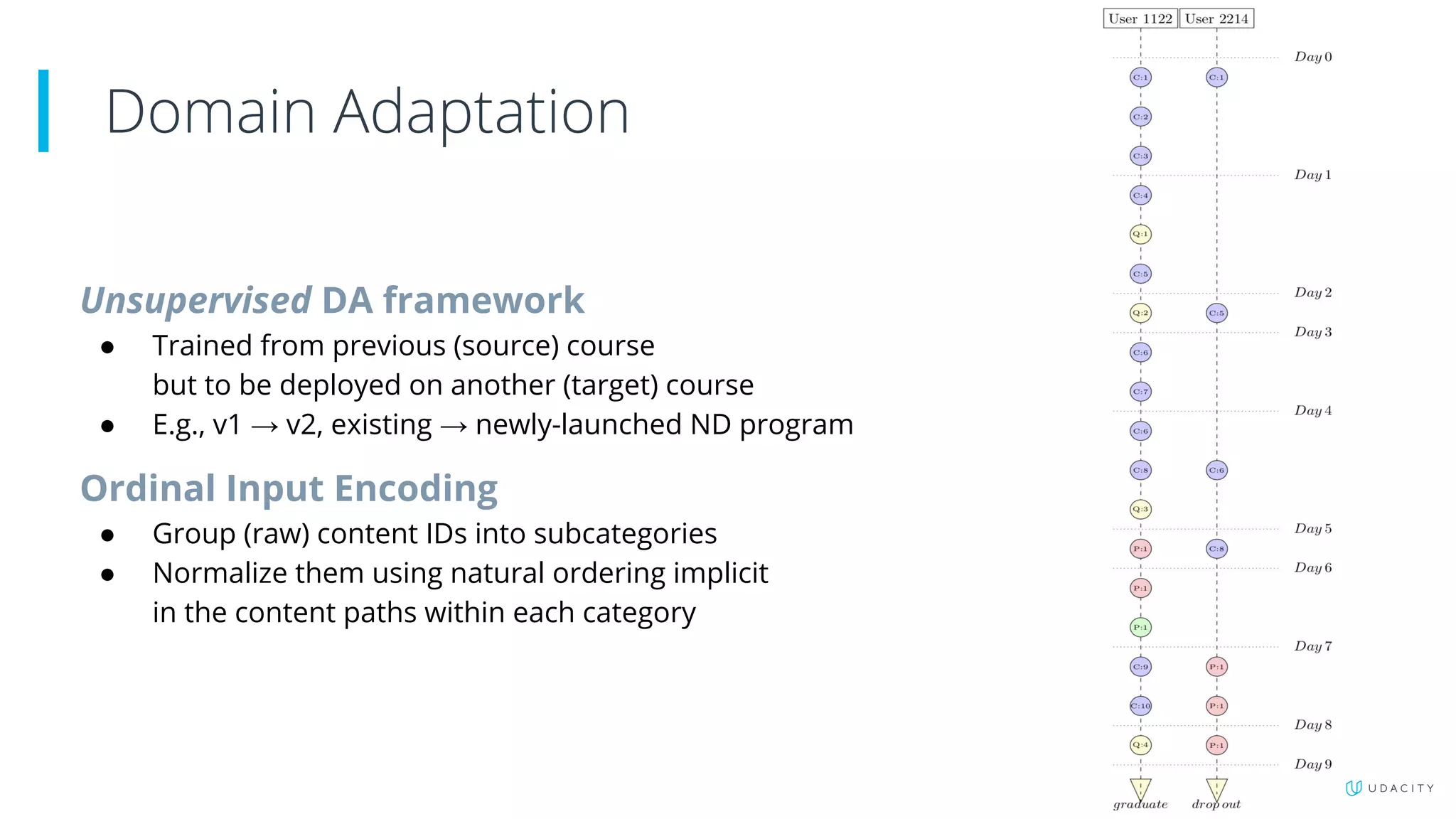

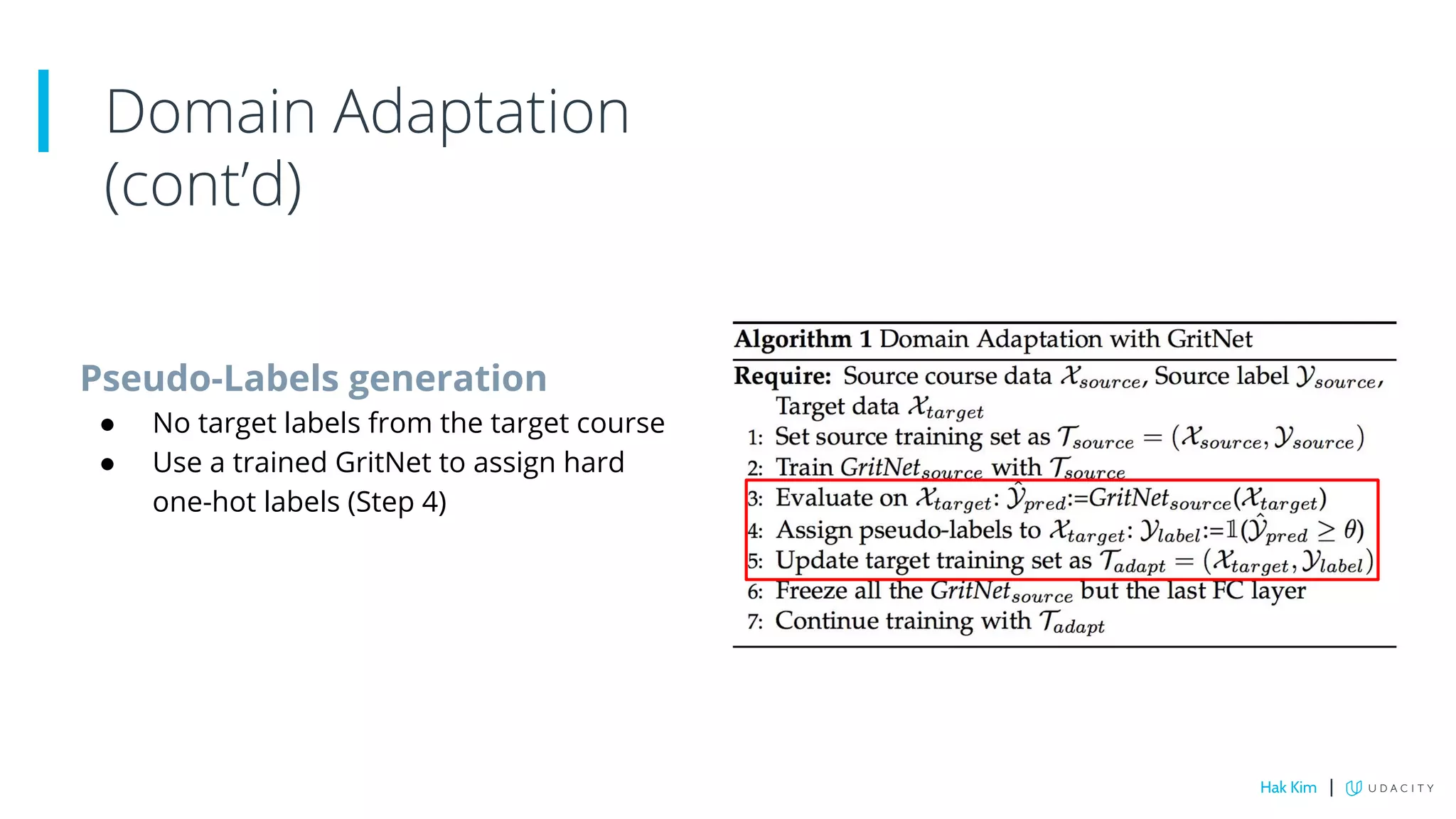

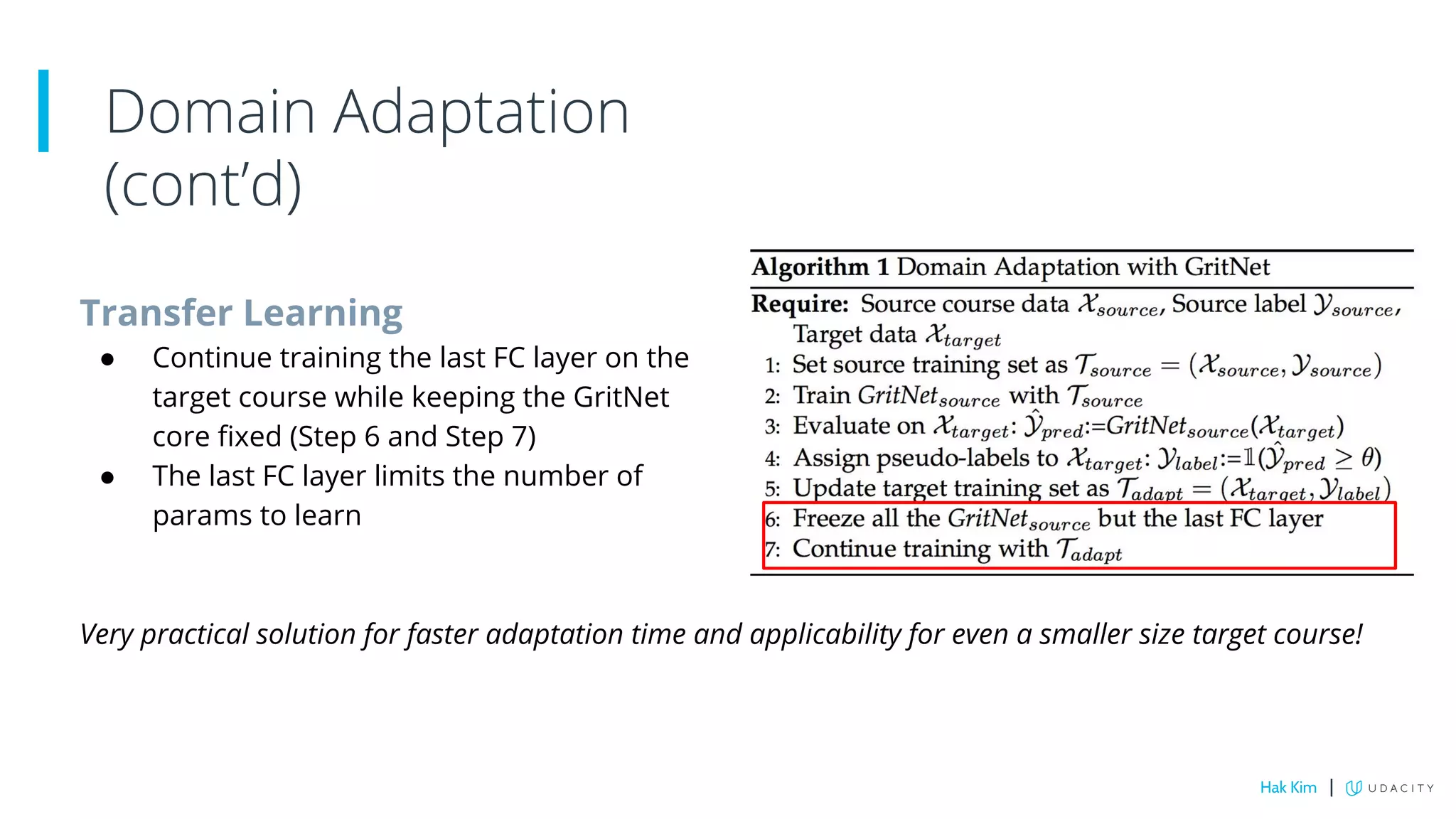

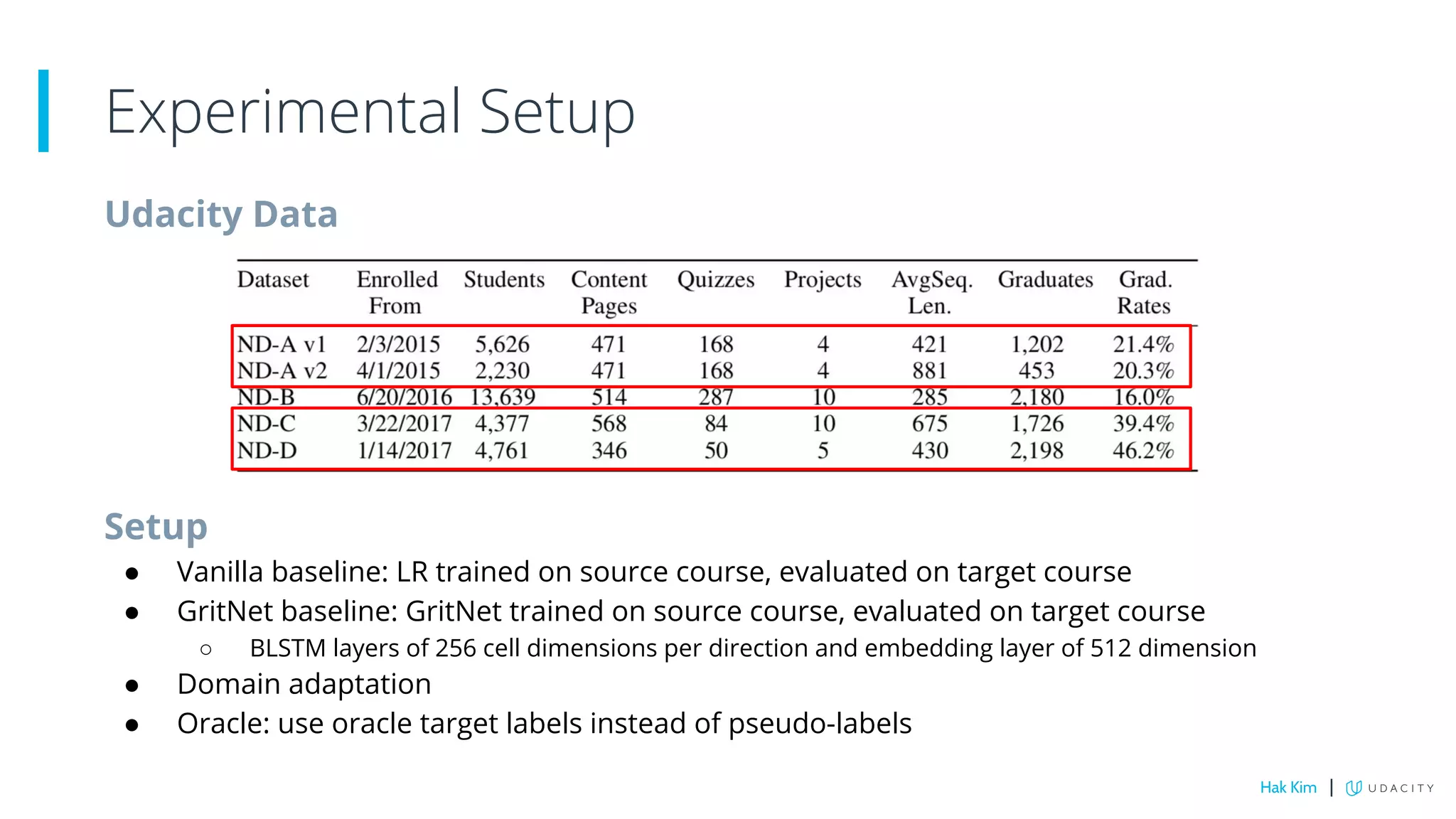

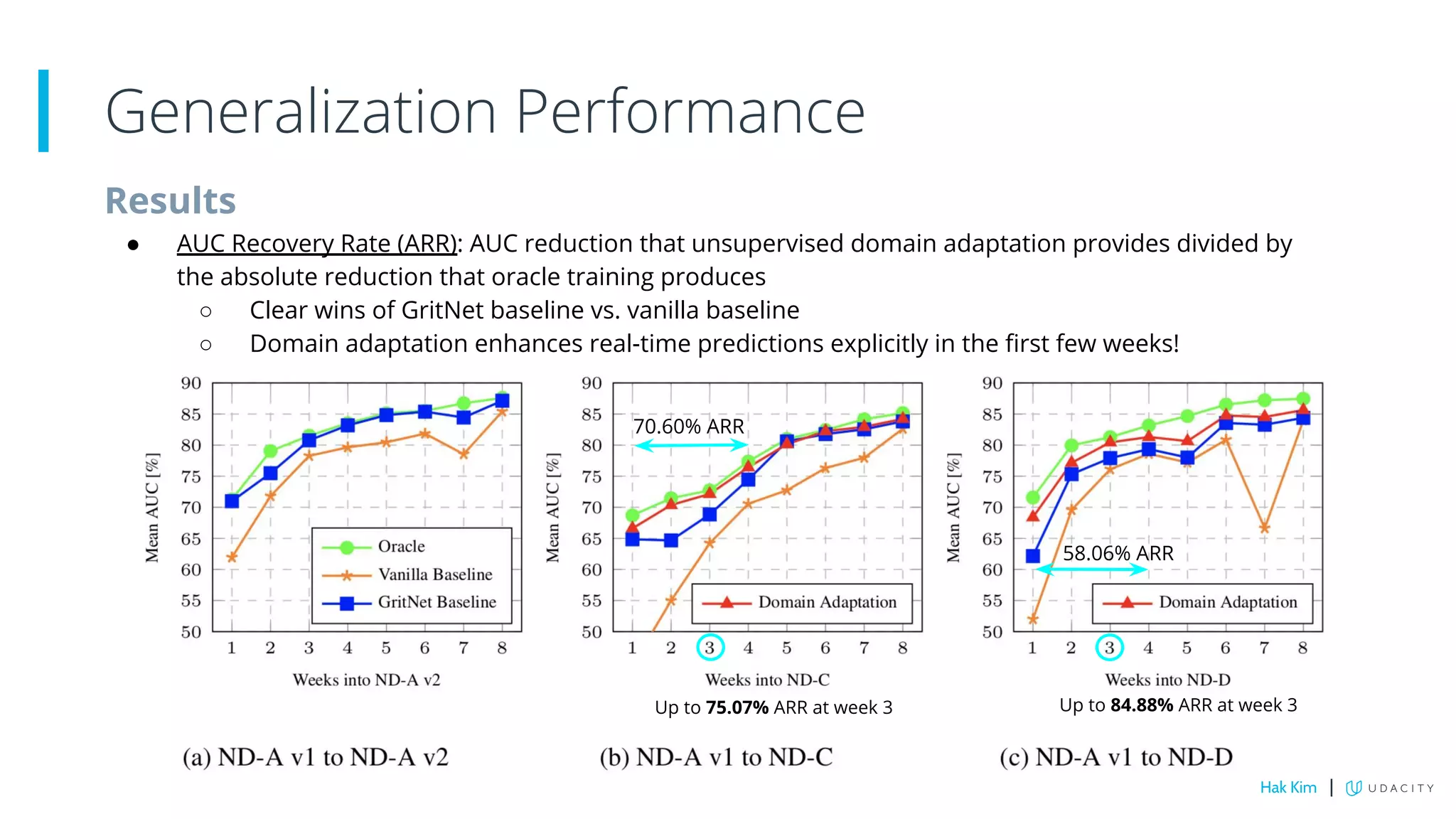

This document describes research on using deep learning to predict student performance in massive open online courses (MOOCs). It introduces GritNet, a model that takes raw student activity data as input and predicts outcomes like course graduation without feature engineering. GritNet outperforms baselines by more than 5% in predicting graduation. The document also describes how GritNet can be adapted in an unsupervised way to new courses using pseudo-labels, improving predictions in the first few weeks. Overall, GritNet is presented as the state-of-the-art for student prediction and can be transferred across courses without labels.