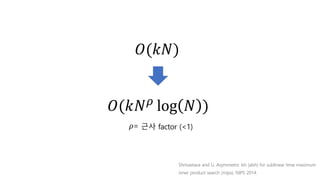

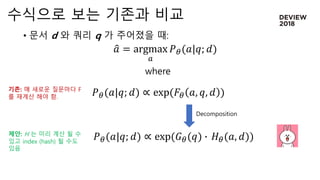

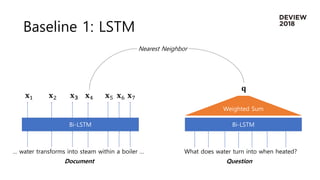

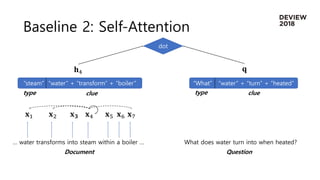

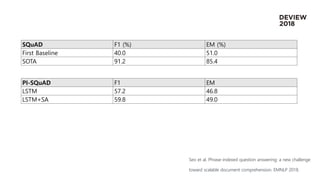

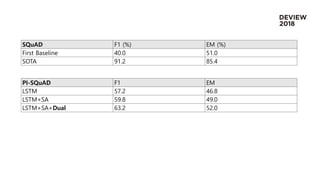

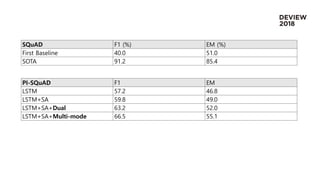

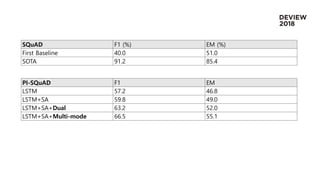

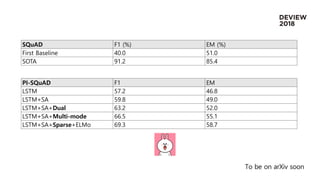

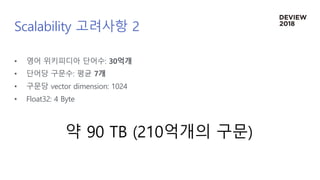

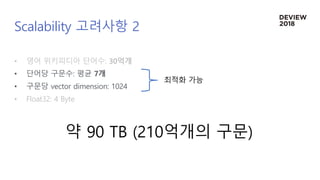

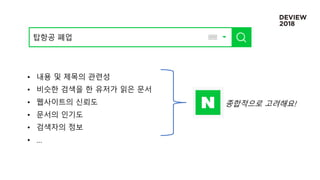

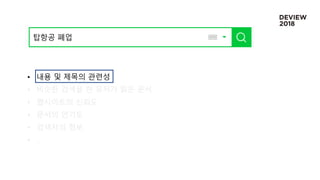

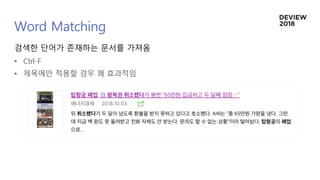

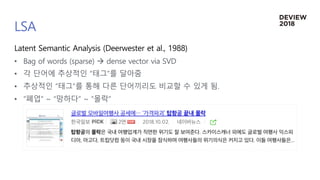

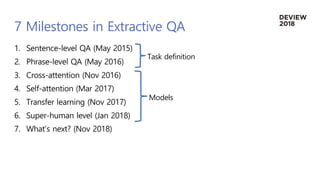

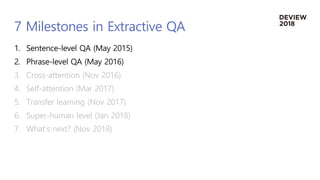

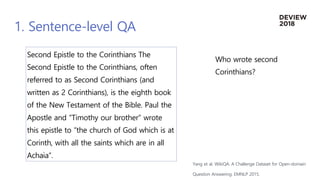

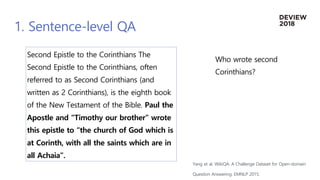

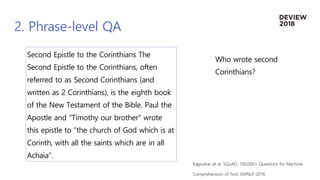

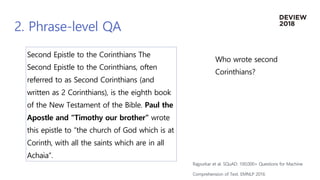

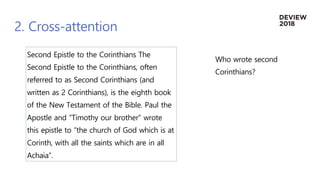

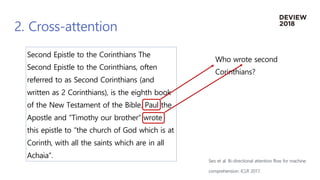

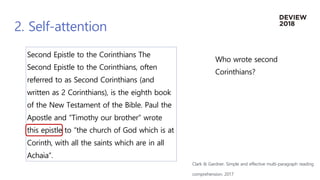

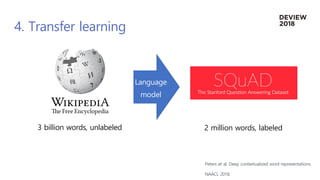

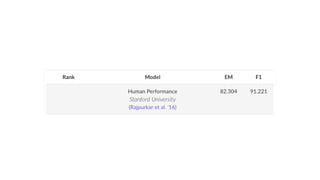

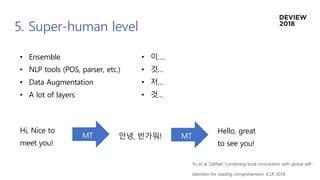

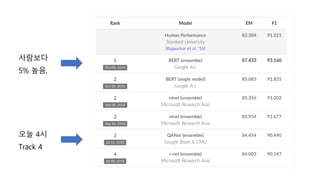

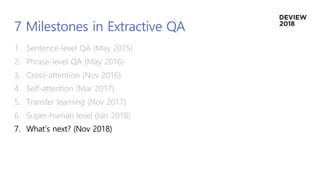

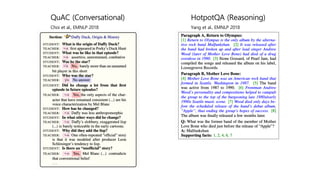

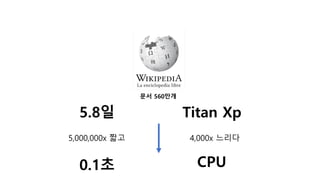

The document discusses machine reading comprehension (MRC) techniques for question answering (QA) systems and compares search-based and natural language processing (NLP)-based approaches. It traces the key milestones in extractive QA with NLP, from early sentence-level models to current state-of-the-art techniques such as cross-attention, self-attention, and transfer learning. It also notes the speed and scalability benefits of combining search and reading for QA.
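Extractive QA models answer by selecting a span of the passage rather than generating new text. One common final decoding step in span-extraction readers is to score every token as a possible answer start and answer end, then choose the highest-scoring valid span. The slides do not spell this step out, so the following is a minimal sketch under that assumption. The function name `best_span`, the `max_len` limit, and the example scores are hypothetical illustrations, not values from any model.

```python
from typing import List, Tuple


def best_span(start_scores: List[float], end_scores: List[float],
              max_len: int = 15) -> Tuple[int, int, float]:
    """Return (start, end, score) maximizing start_scores[i] + end_scores[j].

    Assumption (hypothetical helper): a span is valid when i <= j < i + max_len,
    and span scores are the sum of per-token start and end scores (e.g. logits).
    """
    if not start_scores or len(start_scores) != len(end_scores):
        raise ValueError("score lists must be non-empty and of equal length")
    best = (0, 0, float("-inf"))
    for i, s in enumerate(start_scores):
        # Only consider ends at or after the start, within the length limit.
        for j in range(i, min(i + max_len, len(end_scores))):
            score = s + end_scores[j]
            if score > best[2]:  # strict '>' keeps the earliest span on ties
                best = (i, j, score)
    return best


# Illustrative usage with made-up scores for a short tokenized passage.
tokens = ["The", "Korean", "War", "began", "on", "25", "June", "1950", "."]
start = [0.1, 0.2, 0.1, 0.0, 0.3, 2.0, 0.5, 0.4, 0.0]
end = [0.0, 0.1, 0.3, 0.0, 0.1, 0.2, 0.4, 2.5, 0.1]
i, j, score = best_span(start, end)
print(" ".join(tokens[i:j + 1]))  # prints "25 June 1950"
```

The double loop is O(n · max_len), which is fine for a single passage. The length limit keeps the reader from pairing a strong start with a distant, unrelated end.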

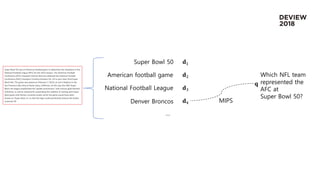

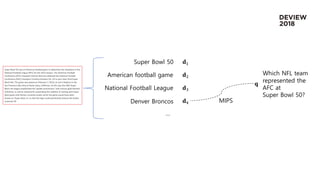

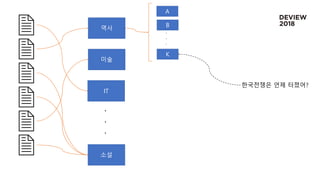

![[0.3, 0.5, …]

[0.7, 0.1, …]

[0.6, 0.2, …]

.

.

.

[0.4, 0.4, …]

한국전쟁은

언제 터졌어?

[…]

[…]

[…]

.

.

.

[0.5, 0.1, …]

[0.3, 0.4, …]

[0.4, 0.5, …]

[0.8, 0.1, …]

[0.4, 0.4, …]

[0.4, 0.3, …]

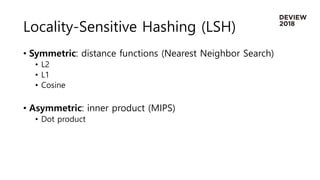

Locality-Sensitive Hashing

비슷한 아이템의 충돌을 최대화

MIPS](https://image.slidesharecdn.com/2232018-181012010149/85/223-QA-NLP-77-320.jpg)
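The figure pairs dense vectors with MIPS (maximum inner product search) and locality-sensitive hashing (LSH), which is designed to make similar items collide in the same bucket. The slide does not say which LSH family is used. The sketch below assumes one standard combination: first, augment each item vector with an extra coordinate so that all items share the same norm, which makes cosine ranking equal to inner-product ranking. Then bucket the augmented vectors with random-hyperplane (SimHash) LSH and rerank the colliding candidates exactly. All function and class names (`augment_items`, `SimHashIndex`, `build_mips_index`, `lsh_mips`) and the `n_bits`/`n_tables` values are hypothetical. The code is written in pure Python for readability, not speed.

```python
import math
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mips_exact(query: Vector, items: List[Vector]) -> int:
    """Brute-force MIPS: index of the item with the largest inner product."""
    return max(range(len(items)), key=lambda i: dot(query, items[i]))


def augment_items(items: List[Vector]) -> List[List[float]]:
    """Append sqrt(M^2 - ||x||^2) so every item has norm M (M = max item norm).

    With the query augmented by 0, the inner product is unchanged, and because
    all items now share one norm, cosine ranking equals inner-product ranking.
    """
    norms = [math.sqrt(dot(x, x)) for x in items]
    m = max(norms)
    return [list(x) + [math.sqrt(max(m * m - n * n, 0.0))]
            for x, n in zip(items, norms)]


def augment_query(query: Vector) -> List[float]:
    return list(query) + [0.0]


class SimHashIndex:
    """Random-hyperplane LSH (assumed family): vectors separated by a small
    angle tend to get the same bit signature, so similar items collide."""

    def __init__(self, dim: int, n_bits: int, seed: int = 0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
                       for _ in range(n_bits)]
        self.buckets: Dict[Tuple[int, ...], List[int]] = defaultdict(list)

    def signature(self, v: Vector) -> Tuple[int, ...]:
        # One bit per hyperplane: which side of the plane the vector falls on.
        return tuple(1 if dot(p, v) >= 0 else 0 for p in self.planes)

    def add(self, idx: int, v: Vector) -> None:
        self.buckets[self.signature(v)].append(idx)

    def candidates(self, v: Vector) -> List[int]:
        return self.buckets.get(self.signature(v), [])


def build_mips_index(items: List[Vector], n_bits: int = 4, n_tables: int = 8,
                     seed: int = 0) -> List[SimHashIndex]:
    """Hash the augmented items into several independent tables."""
    aug = augment_items(items)
    tables = [SimHashIndex(len(aug[0]), n_bits, seed + t)
              for t in range(n_tables)]
    for table in tables:
        for i, v in enumerate(aug):
            table.add(i, v)
    return tables


def lsh_mips(query: Vector, items: List[Vector],
             tables: List[SimHashIndex]) -> int:
    """Approximate MIPS: gather colliding candidates, then rerank them exactly."""
    q = augment_query(query)
    cands = {i for table in tables for i in table.candidates(q)}
    if not cands:  # no collision in any table: fall back to exhaustive search
        return mips_exact(query, items)
    return max(cands, key=lambda i: dot(query, items[i]))


items = [[3.0, 4.0], [1.0, 0.0], [0.0, 2.0], [-2.0, 1.0]]
query = [0.6, 0.8]
tables = build_mips_index(items)
print(mips_exact(query, items), lsh_mips(query, items, tables))
```

More bits per table make buckets smaller, which speeds up search but lowers recall. More tables recover recall at the cost of memory. The exact rerank over the candidate set means the result is never worse than the best colliding item, though it may miss the true maximum if that item never collides with the query.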