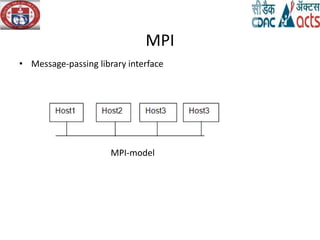

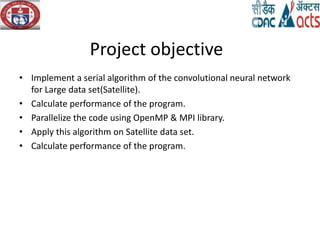

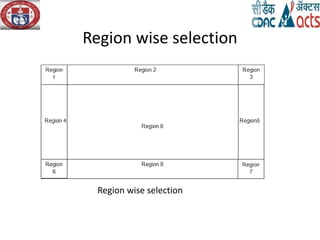

This document discusses optimizing convolutional neural networks (CNNs) using OpenMP and MPI for image processing applications like satellite data analysis. It covers the challenges of overfitting in neural networks and presents a hybrid implementation approach combining serial and parallel algorithms to enhance performance. Additionally, it includes a literature survey and a detailed exploration of various CNN architectures, objectives, and methodologies employed throughout the project.

![Convolutional Neural Networks](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-8-320.jpg)

Convolutional neural networks, as in LeNet [12], are built from three kinds of layers:

1. Convolutional layer
2. Pooling layer
3. Fully connected layer
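To make the three layer types named on this slide concrete, here is a minimal pure-Python sketch of a forward pass through one convolutional layer, one pooling layer, and one fully connected layer. It is an illustration only, not the project's implementation: the function names, the 5x5 input, the 2x2 kernel, and the weights are all assumptions chosen for readability.

```python
# Minimal sketch of the three CNN layer types (illustrative, not optimized).

def conv2d(image, kernel):
    """Valid 2D cross-correlation with stride 1 (the usual CNN 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling over size x size windows."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def fully_connected(features, weights, bias):
    """Dense layer: one output per row of the weight matrix."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]

# Hypothetical 5x5 input and 2x2 kernel, chosen only for illustration.
image = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
kernel = [[1.0, 0.0], [0.0, -1.0]]

fmap = conv2d(image, kernel)               # 4x4 feature map
pooled = max_pool2d(fmap)                  # 2x2 map after pooling
flat = [v for row in pooled for v in row]  # flatten before the dense layer
scores = fully_connected(flat, [[0.25] * 4, [-0.25] * 4], [0.0, 1.0])
```

The nested loops in `conv2d` are independent across output positions, which is what makes this layer a natural target for the kind of loop-level parallelism the document discusses.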

![Literature survey](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-12-320.jpg)

| Referred paper | Authors / Publication | Explanation | Conclusion |
| --- | --- | --- | --- |
| [1] Efficient Multi-Training Framework of Image Deep Learning on GPU Cluster | Chun-Fu (Richard) Chen, Gwo Giun (Chris) Lee, Yinglong Xia, W. Sabrina Lin, Toyotaro Suzumura, Ching-Yung Lin; IEEE, 2015 | A deep learning model is developed based on a pipelining scheme for images on a GPU cluster. | The framework saves time when training multiple models on a large dataset with a complicated network. |
| [13] A MapReduce Computing Framework Based on GPU Cluster | Heng Gao, Jie Tang, Gangshan Wu; IEEE, 2013 | A parallel GPU programming framework based on MapReduce. A distributed file system (GlusterFS) is used to store the data across nodes. | Dynamic load balancing is given specific consideration. |
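The MapReduce pattern mentioned for [13] can be sketched in a few lines. The example below is a CPU-only illustration using Python's standard thread pool, not the GPU framework from the paper; the tile data and the function names `map_tile`, `shuffle`, and `reduce_counts` are assumptions made for this sketch. It builds a pixel-intensity histogram from image tiles of unequal size.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def map_tile(tile):
    """Map step: emit an (intensity, 1) pair for every pixel in one tile."""
    return [(pixel, 1) for row in tile for pixel in row]

def shuffle(mapped):
    """Shuffle step: group the emitted values by key across all map outputs."""
    groups = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_counts(groups):
    """Reduce step: sum the counts for each intensity."""
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical image tiles of unequal size (so the work per task differs).
tiles = [
    [[0, 1], [1, 2]],
    [[2, 2, 2]],
    [[0], [1], [2], [2]],
]

# Tasks sit in a shared queue; a worker that finishes early takes the next one.
with ThreadPoolExecutor(max_workers=2) as pool:
    mapped = list(pool.map(map_tile, tiles))

histogram = reduce_counts(shuffle(mapped))
```

Because idle workers pull the next tile from a shared queue, uneven tiles do not leave one worker waiting on another, which is a simple form of the dynamic load balancing the survey entry refers to.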

![Literature survey](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-13-320.jpg)

| Referred paper | Authors / Publication | Explanation | Conclusion |
| --- | --- | --- | --- |
| [14] Theano-MPI | He Ma, Fei Mao, Graham W. Taylor; arXiv:1605.08325v1 [cs.LG], 26 May 2016 | A training framework that can utilize GPUs across the nodes of a cluster. | It accelerates the training of deep learning models through data parallelism; parameter exchange among GPUs is based on CUDA-aware MPI. |
| [15] CNNLab | Chao Wang, Yuan Xie; arXiv:1606.06234v1 [cs.LG], 2016 | The framework defines an API-like library for CNN operations using GPU and OpenCL. | Based on the CNNLab framework, tasks can be distributed to either GPU or FPGA-based accelerators. |
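Data parallelism with parameter exchange, as described for Theano-MPI, can be illustrated without any GPU or MPI installation. The sketch below simulates two workers in a single process: each computes a gradient on its own data shard, and `allreduce_mean` stands in for the collective exchange that a real system would perform over MPI. The one-parameter model, the data, the learning rate, and all function names are assumptions made for this example.

```python
def local_gradient(w, shard):
    """Gradient of the mean squared error for y = w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def allreduce_mean(values):
    """Stand-in for an MPI allreduce: every worker receives the same mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

# Hypothetical data drawn from y = 3x, split across two simulated workers.
shards = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0)],
]
weights = [0.0, 0.0]  # one model replica per worker
lr = 0.01             # learning rate (assumed)

for step in range(200):
    # Each worker computes a gradient on its own shard only.
    grads = [local_gradient(w, shard) for w, shard in zip(weights, shards)]
    # Gradients are exchanged and averaged so all replicas apply the same update.
    synced = allreduce_mean(grads)
    weights = [w - lr * g for w, g in zip(weights, synced)]
```

Since every replica starts from the same weight and applies the same averaged gradient, the replicas stay identical throughout training; only the gradient computation is split across workers.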

![Literature survey](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-14-320.jpg)

| Referred paper | Authors / Publication | Explanation | Conclusion |
| --- | --- | --- | --- |
| [20] SegNet | Vijay Badrinarayanan, Alex Kendall, Roberto Cipolla (Senior Member, IEEE) | A reduced version of FCN. | It shows that the algorithm can achieve a 10-fold speedup over a common FCN. |
| [21] Efficient Convolutional Neural Network for Pixel-wise Classification on Heterogeneous Hardware System | Fabian Tschopp, Julien N. P. Martel, Srinivas C. Turaga, Matthew Cook, Jan Funke | It reduces time complexity by replacing the sliding window with a strided kernel. | It shows a 52-fold performance improvement, with a further improvement in the parallel version on a GPU cluster. |

![Literature survey](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-15-320.jpg)

| Referred paper | Authors / Publication | Explanation | Conclusion |
| --- | --- | --- | --- |
| [22] Learning Deconvolution Network for Semantic Segmentation | Hyeonwoo Noh, Seunghoon Hong, Bohyung Han | It uses an encoder-decoder neural network, similar to FCN. | It uses FCN with the addition of feature learning in the deconvolutional layers. |
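Segmentation networks of this kind commonly pair max pooling on the way down with unpooling on the way back up: the position of each maximum is recorded during pooling and reused to place values when the feature map is enlarged again. The following is a minimal sketch of that pairing under assumed names (`max_pool_with_indices`, `unpool`) and a made-up 4x4 feature map; it does not reproduce any of the surveyed architectures.

```python
def max_pool_with_indices(fmap, size=2):
    """Max pooling that also records where each maximum came from."""
    pooled, indices = [], []
    for i in range(0, len(fmap) - size + 1, size):
        pooled_row, index_row = [], []
        for j in range(0, len(fmap[0]) - size + 1, size):
            window = [(fmap[i + di][j + dj], (i + di, j + dj))
                      for di in range(size) for dj in range(size)]
            value, position = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            index_row.append(position)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices

def unpool(pooled, indices, height, width):
    """Place each pooled value back at its recorded position; zeros elsewhere."""
    out = [[0.0] * width for _ in range(height)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (r, c) in zip(pooled_row, index_row):
            out[r][c] = value
    return out

# Hypothetical 4x4 feature map, for illustration only.
fmap = [
    [1.0, 5.0, 2.0, 0.0],
    [3.0, 4.0, 8.0, 1.0],
    [0.0, 2.0, 1.0, 1.0],
    [7.0, 6.0, 3.0, 9.0],
]
pooled, indices = max_pool_with_indices(fmap)
restored = unpool(pooled, indices, 4, 4)
```

The restored map is sparse: only the recorded maxima are non-zero. In a decoder, trainable layers that follow the unpooling step are what fill in the remaining detail.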

![References](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-29-320.jpg)

[1] Chun-Fu (Richard) Chen, Gwo Giun (Chris) Lee, Yinglong Xia, W. Sabrina Lin, Toyotaro Suzumura, Ching-Yung Lin, "Efficient Multi-Training Framework of Image Deep Learning on GPU Cluster," 978-1-5090-0379-2, IEEE, 2015.

[2] Ming Chen, Lu Zhang, Jan P. Allebach, "Learning Deep Features for Image Emotion Classification," 978-1-4799-8339-1, IEEE, 2015.

[3] Zhilu Chen, Jing Wang, Haibo He, Xinming Huang, "A Fast Deep Learning System Using GPU," 978-1-4799-3432-4, IEEE, 2014.

[4] Bonaventura Del Monte, Radu Prodan, "A Scalable GPU-enabled Framework for Training Deep Neural Networks," 978-1-4673-6615-1, IEEE, 2016.

[5] Teng Li, Yong Dou, Lingfei Liang, Yueqing Wang, Qi Lv, "Optimized Deep Belief Networks on CUDA GPUs," 978-1-4799-1959-8, IEEE, 2015.

[6] Salima Hassairi, Ridha Ejbali, Mourad Zaied, "Supervised image classification using Deep Convolutional Wavelets Network," 1082-3409, IEEE, 2015.

[7] Zhan Wu, Min Peng, Tong Chen, "Thermal Face Recognition Using Convolutional Neural Network," 978-1-5090-0880-3, IEEE, 2016.

[8] http://cdac.in/index.aspx?id=ev_hpc_hypack13_about_downloads

[9] Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks."

[10] Tianyi Liu, Shuangsang Fang, Yuehui Zhao, Peng Wang, Jun Zhang, "Implementation of Training Convolutional Neural Networks."

[11] Abu Asaduzzaman, Angel Martinez, Aras Sepehri, "A time-efficient image processing algorithm for multicore/manycore parallel computing," 978-1-4673-7300-5, IEEE, 2015.

![References](https://image.slidesharecdn.com/dp2pptbybikramjitchowdhuryfinal-180711052654/85/Dp2-ppt-by_bikramjit_chowdhury_final-30-320.jpg)

[12] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, "Gradient-based learning applied to document recognition," Proceedings of the IEEE, 1998.

[13] Heng Gao, Jie Tang, Gangshan Wu (State Key Laboratory for Novel Software Technology, Department of Computer Science and Technology, Nanjing University, Nanjing, China), "A MapReduce Computing Framework Based on GPU Cluster," 2013 IEEE International Conference on High Performance Computing and Communications & 2013 IEEE International Conference on Embedded and Ubiquitous Computing.

[14] He Ma (School of Engineering, University of Guelph), Fei Mao (SHARCNET, Compute Canada), and Graham W. Taylor (School of Engineering, University of Guelph), "Theano-MPI: a Theano-based Distributed Training Framework."

[15] http://cs231n.github.io

[16] A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet classification with deep convolutional neural networks," in NIPS, 2012.

[17] https://en.wikipedia.org/wiki/Image_analysis

[18] http://www.ida.liu.se/~746A27/Literature/Image%20Processing%20and%20Analysis.pdf

[19] Hui Wu, Hui Zhang, Jinfang Zhang, Fanjiang Xu (Institute of Software, Chinese Academy of Sciences, China), "Fast Aircraft Detection in Satellite Images Based on Convolutional Neural Networks," 978-1-4799-8339-1, IEEE, 2015.

[20] Vijay Badrinarayanan, Alex Kendall, Roberto Cipolla, "SegNet," arXiv:1511.00561v3 [cs.CV], 10 Oct 2016.

[21] Fabian Tschopp, Julien N. P. Martel, Srinivas C. Turaga, Matthew Cook, Jan Funke, "Efficient Convolutional Neural Network for Pixel-wise Classification on Heterogeneous Hardware System," arXiv:1509.03371v1 [cs.CV], 11 Sep 2015.

[22] Hyeonwoo Noh, Seunghoon Hong, Bohyung Han, "Learning Deconvolution Network for Semantic Segmentation," arXiv:1505.04366v1 [cs.CV], 17 May 2015.