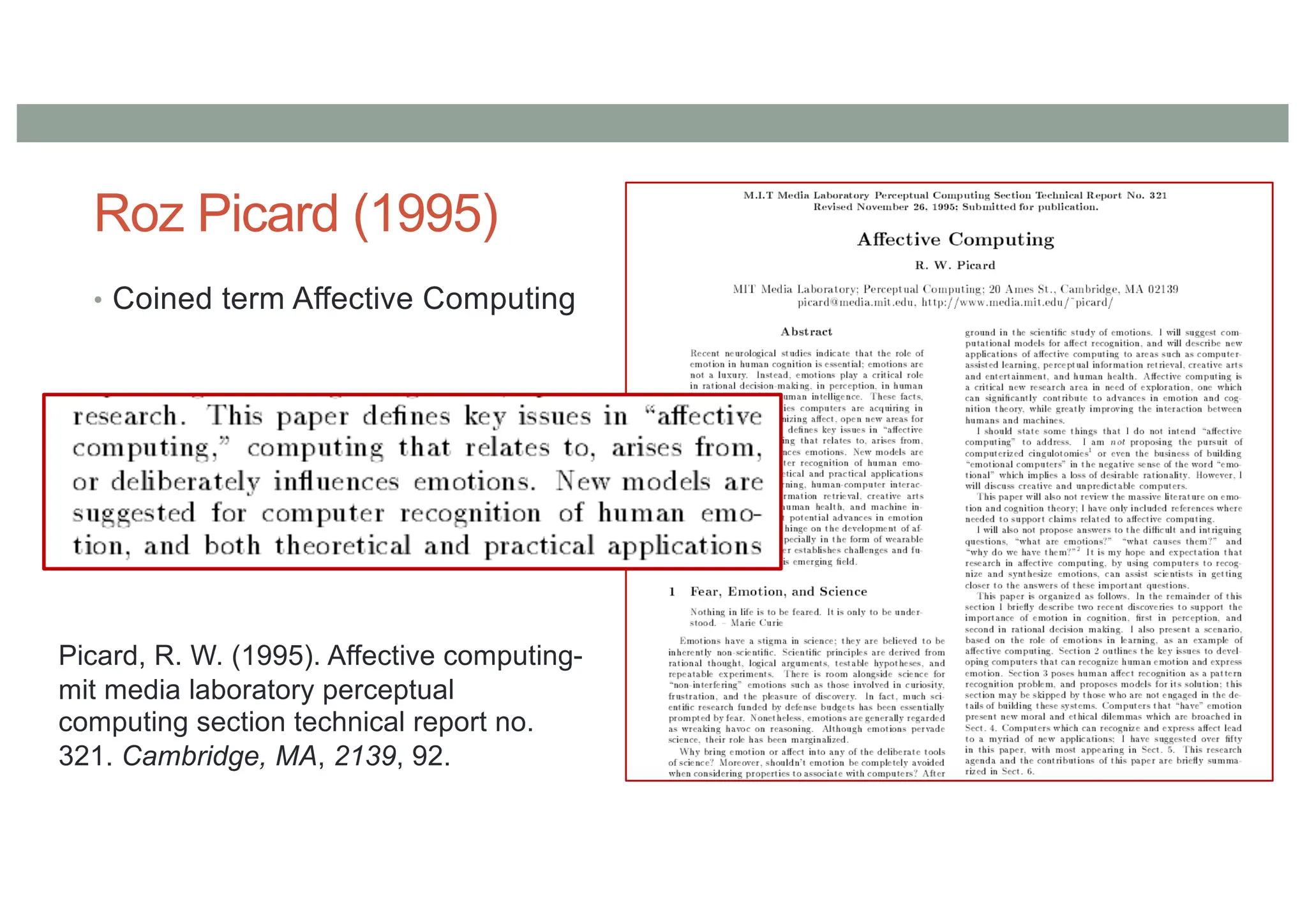

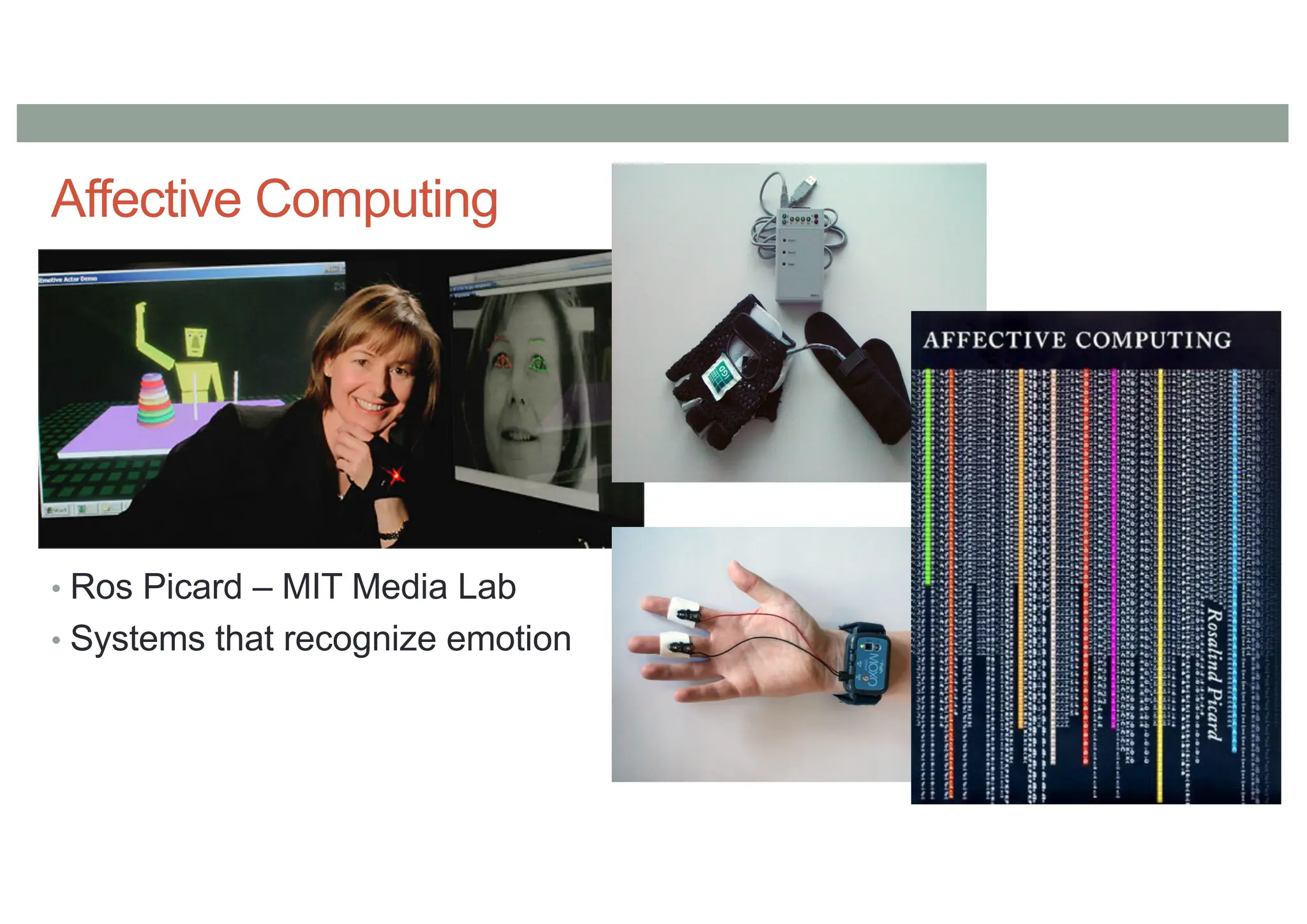

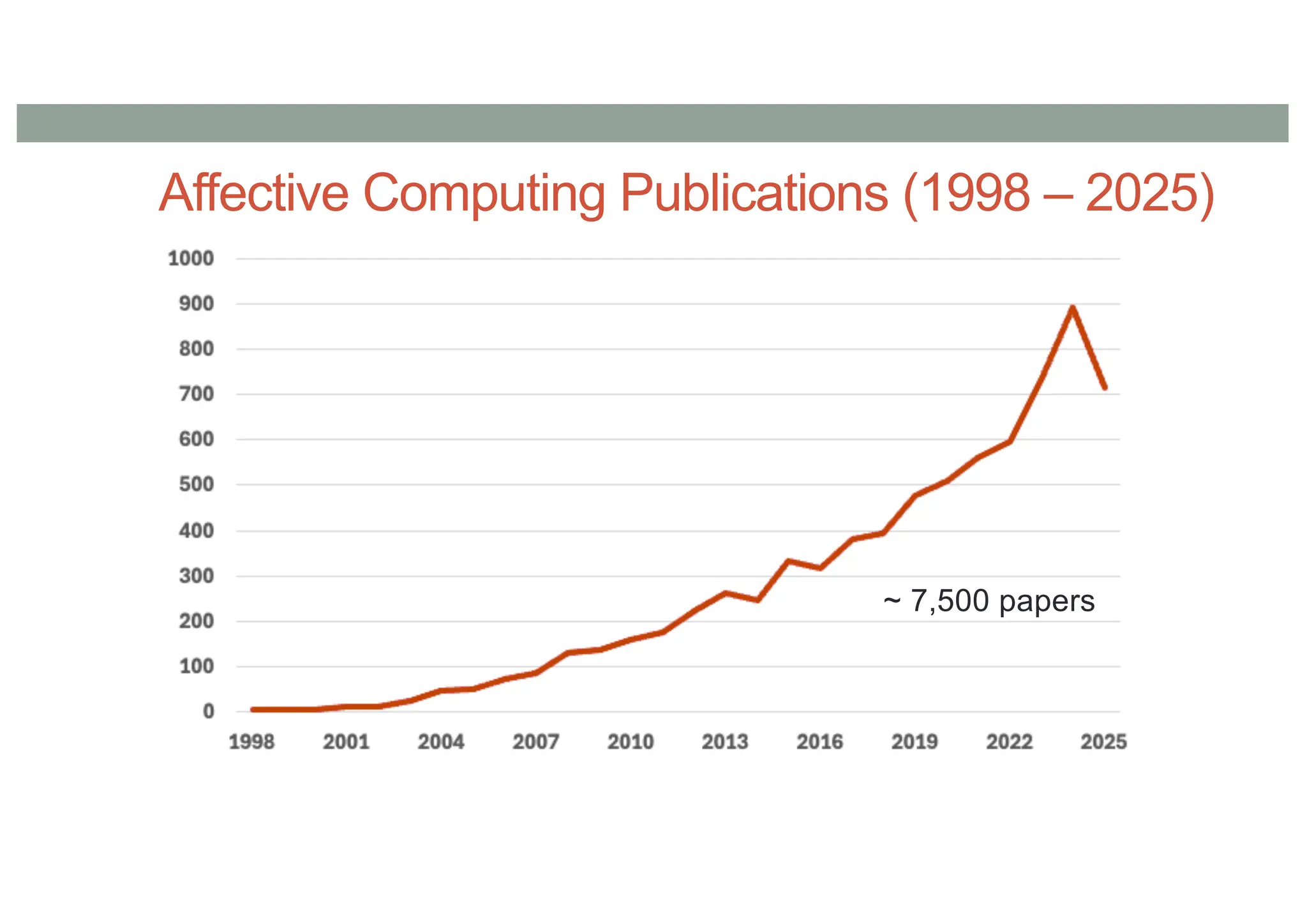

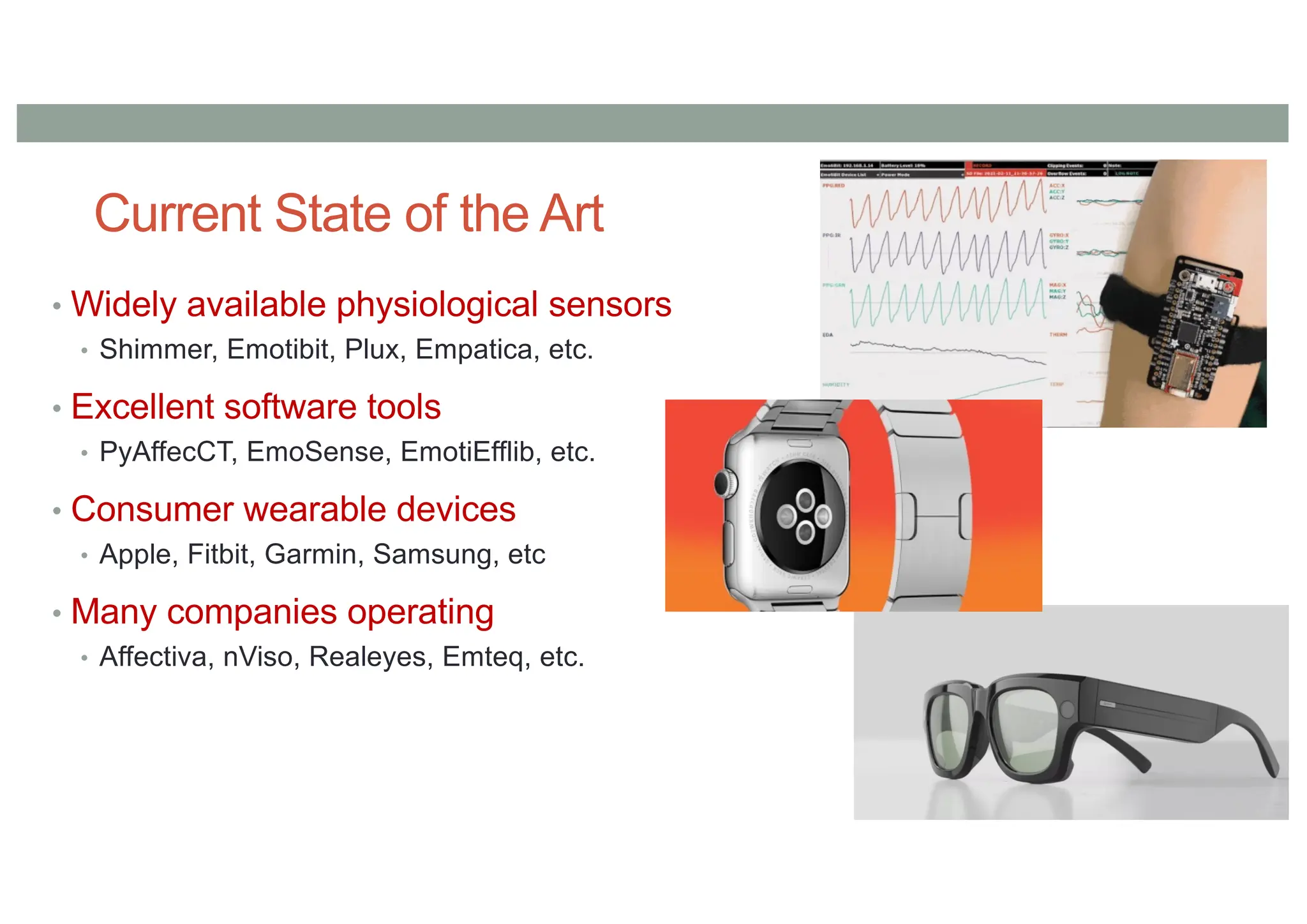

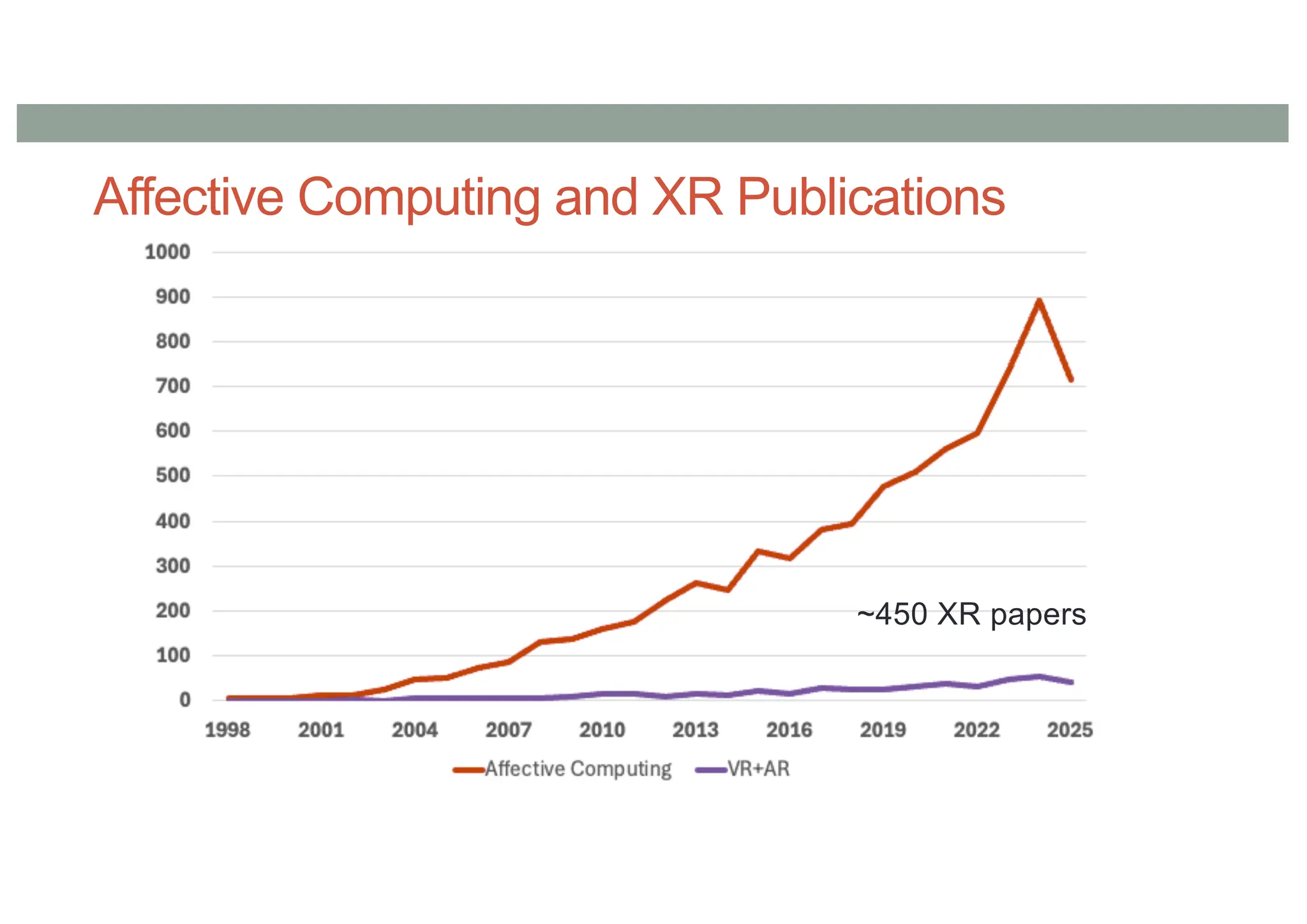

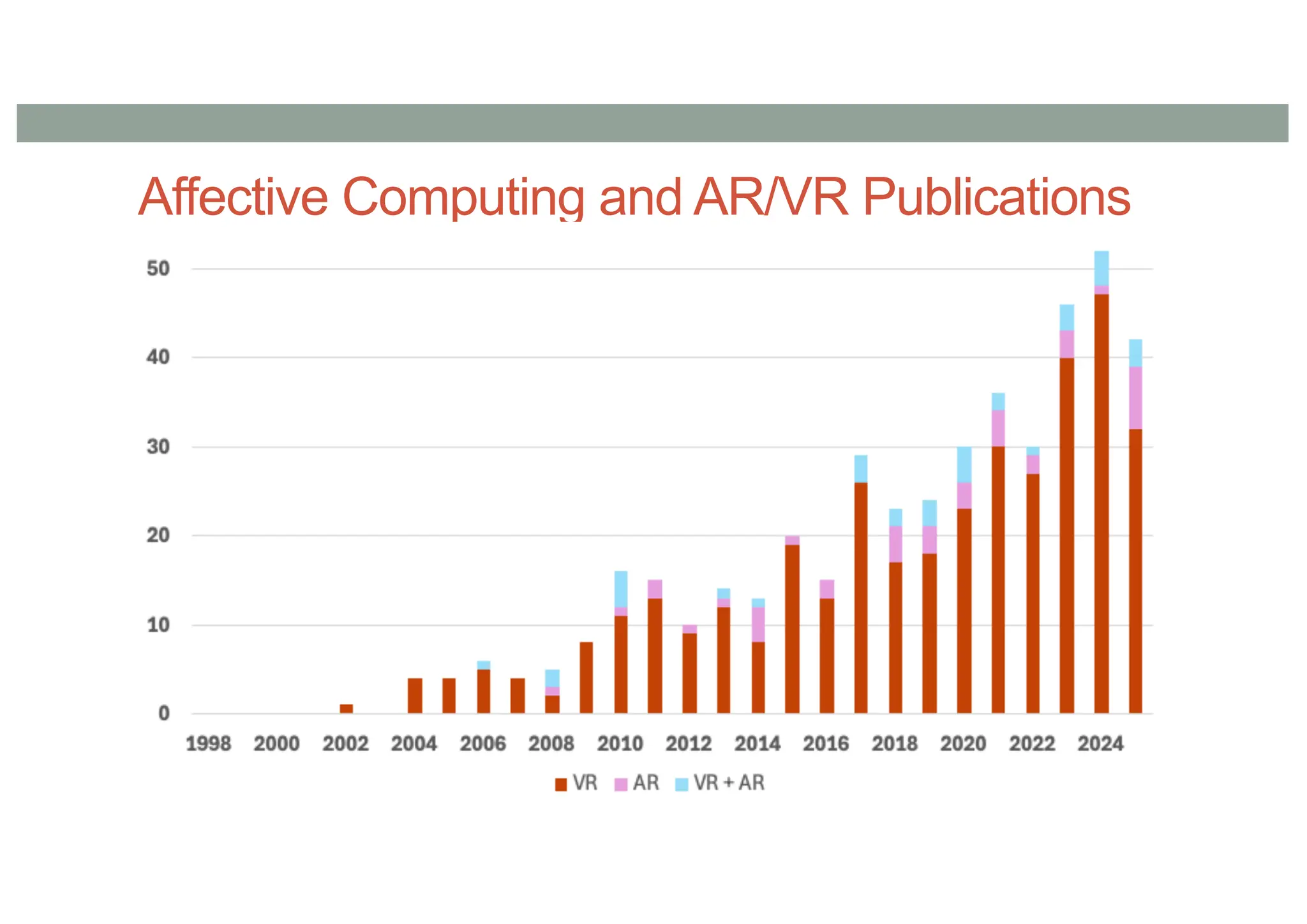

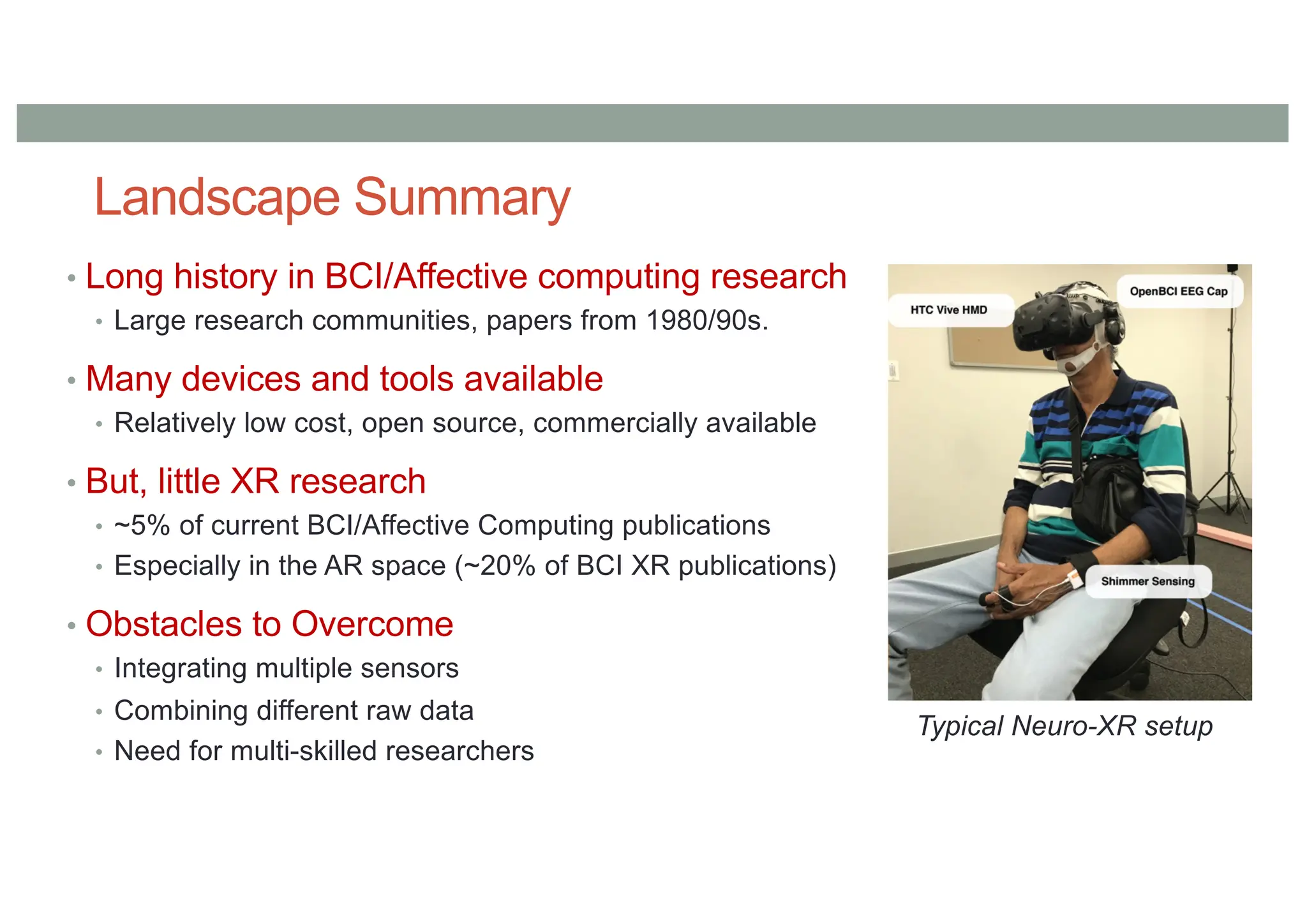

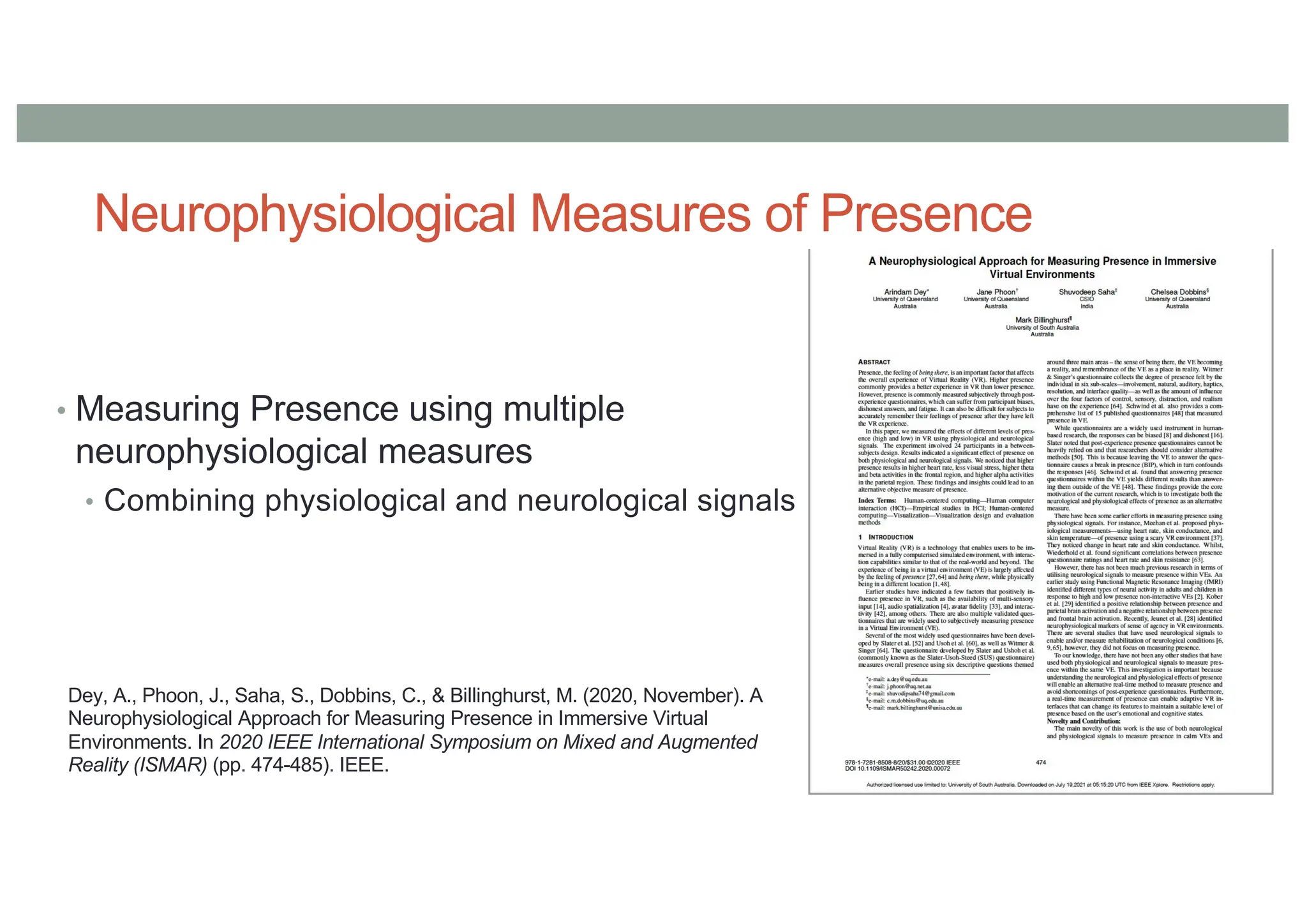

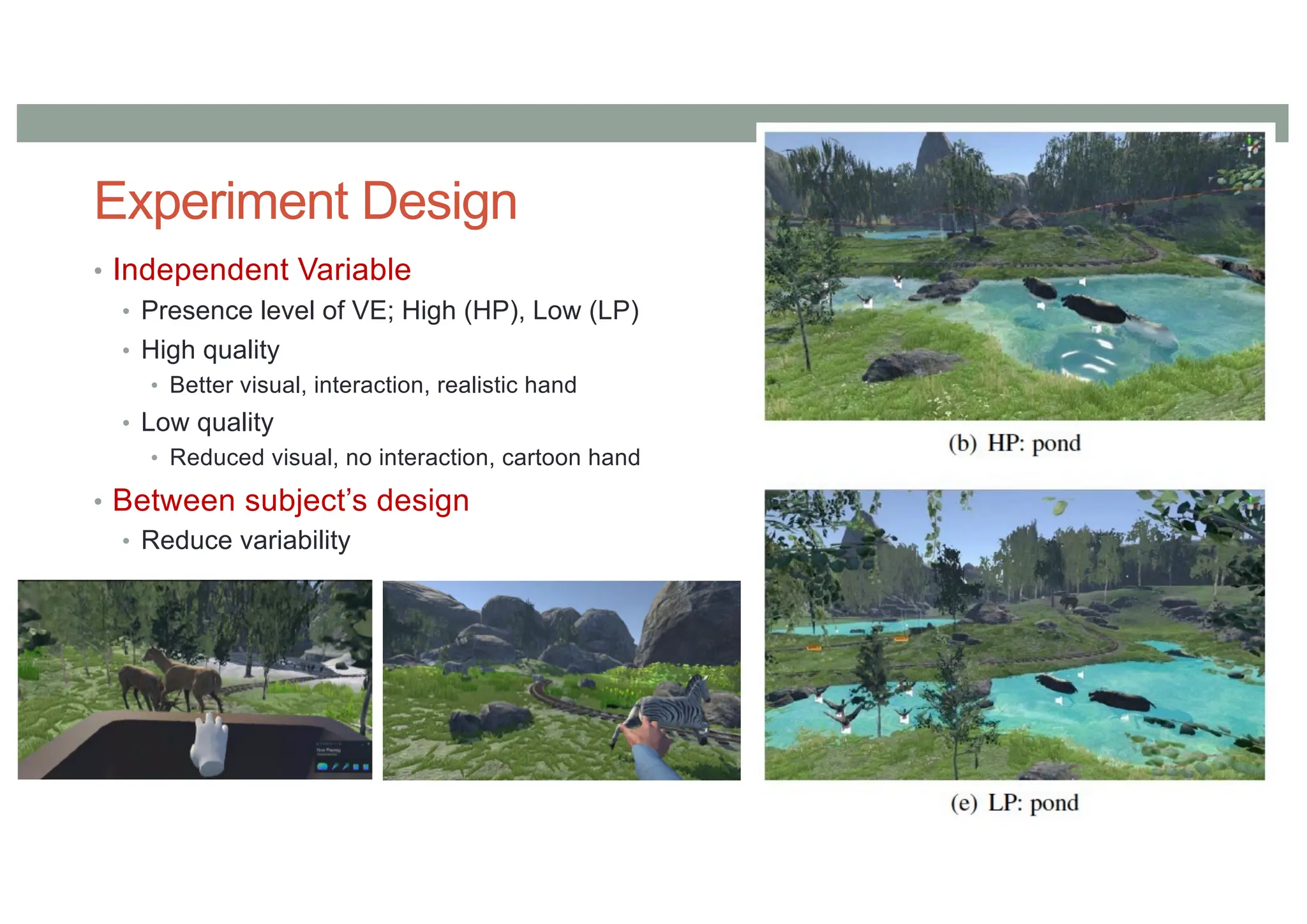

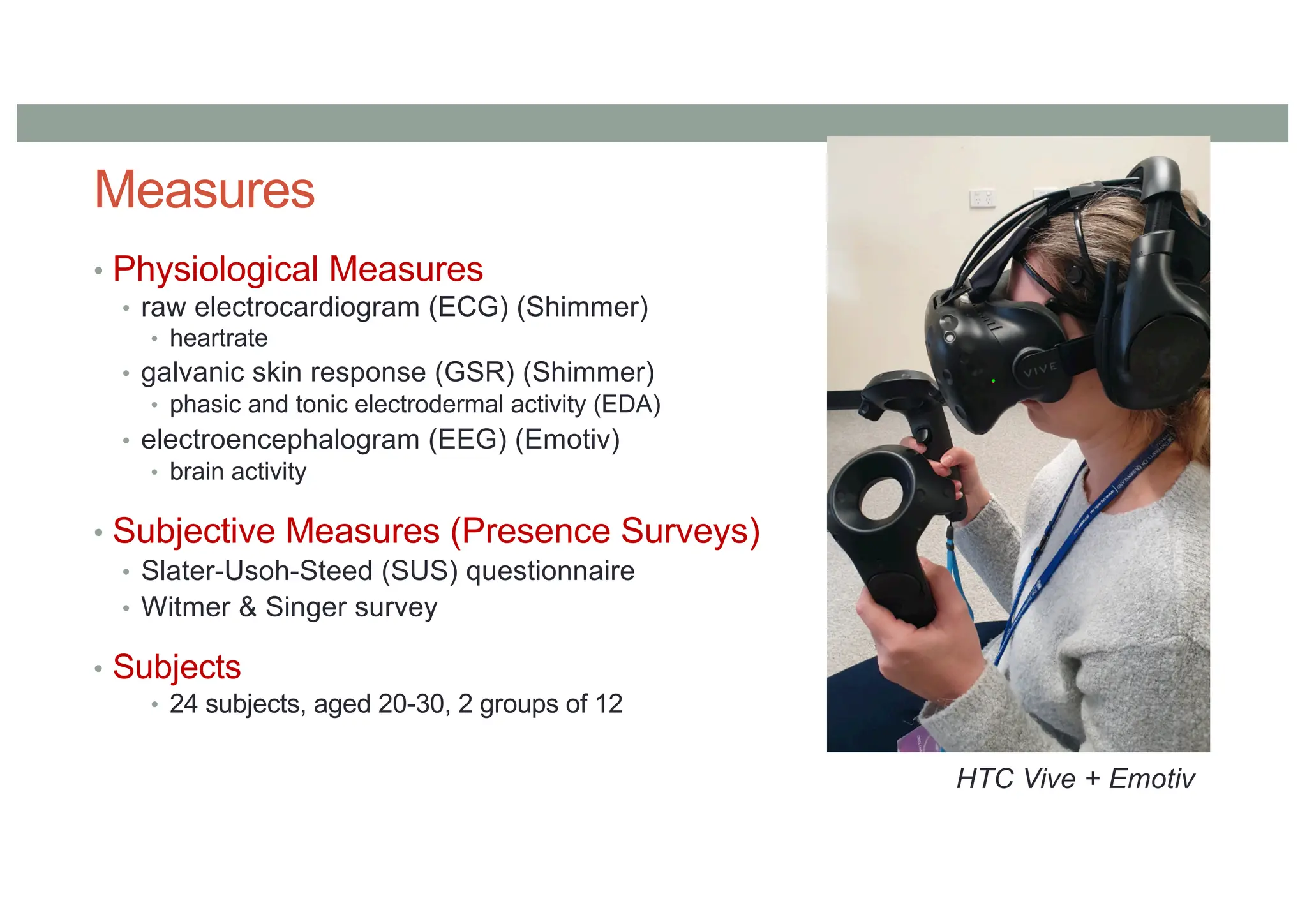

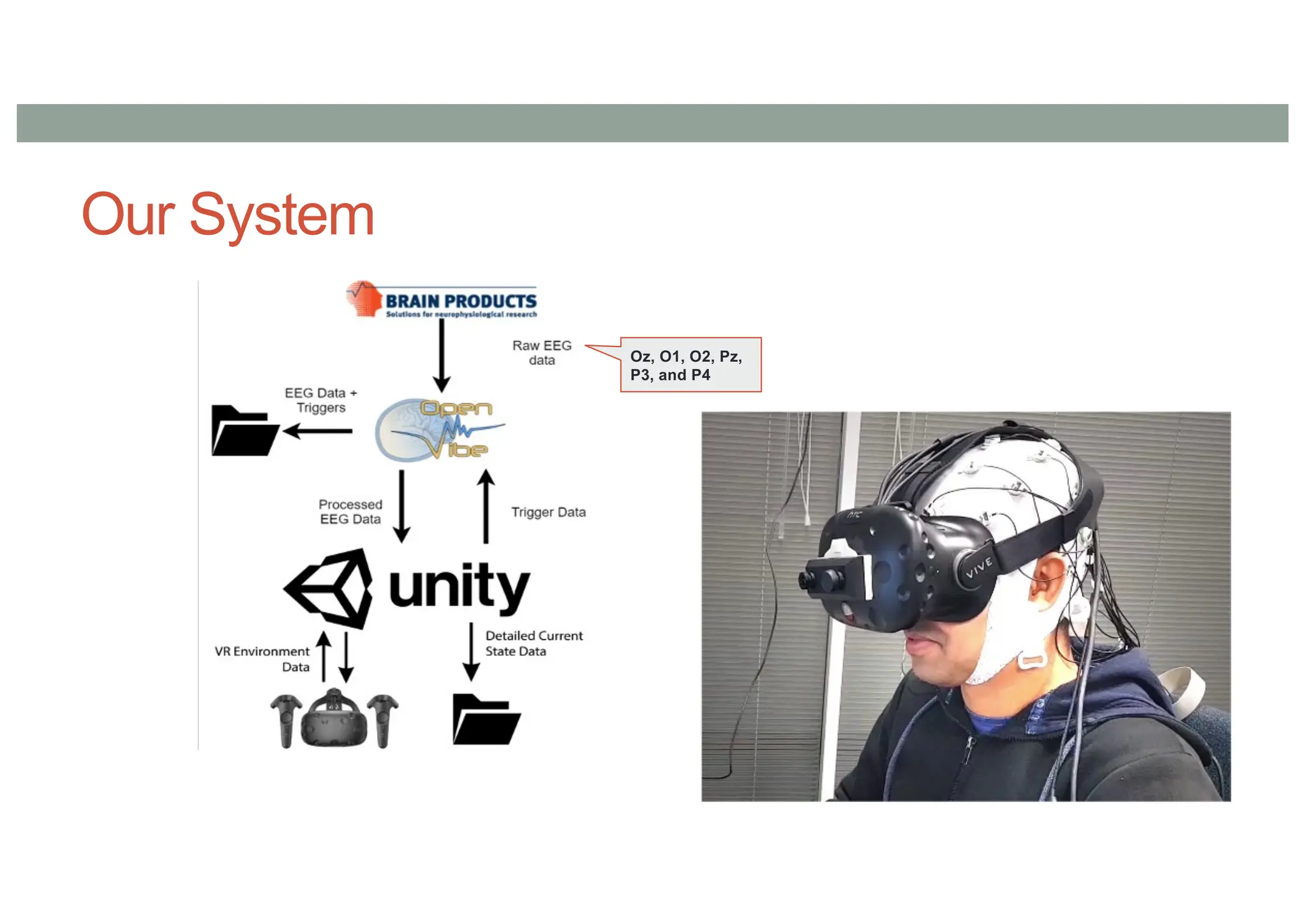

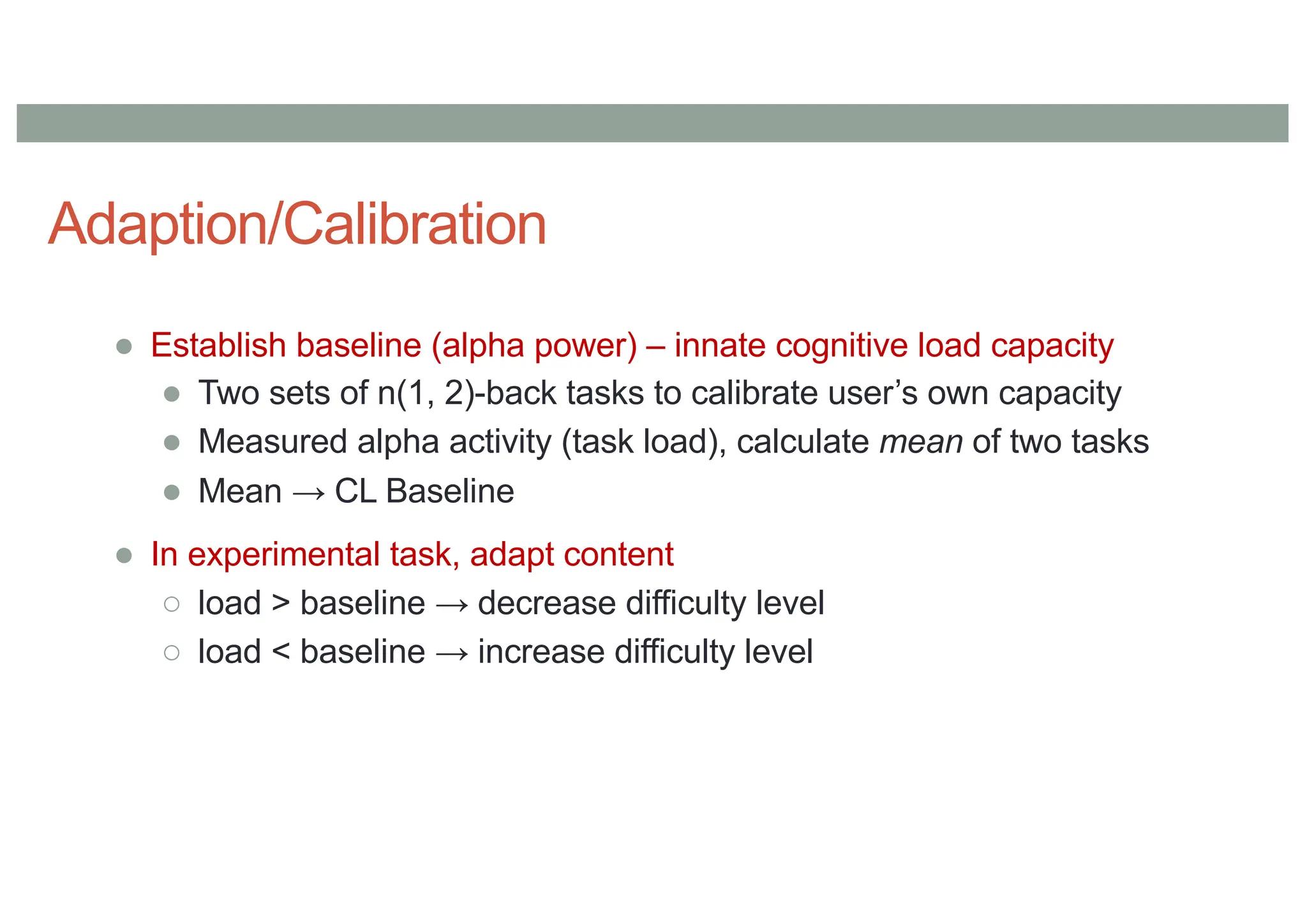

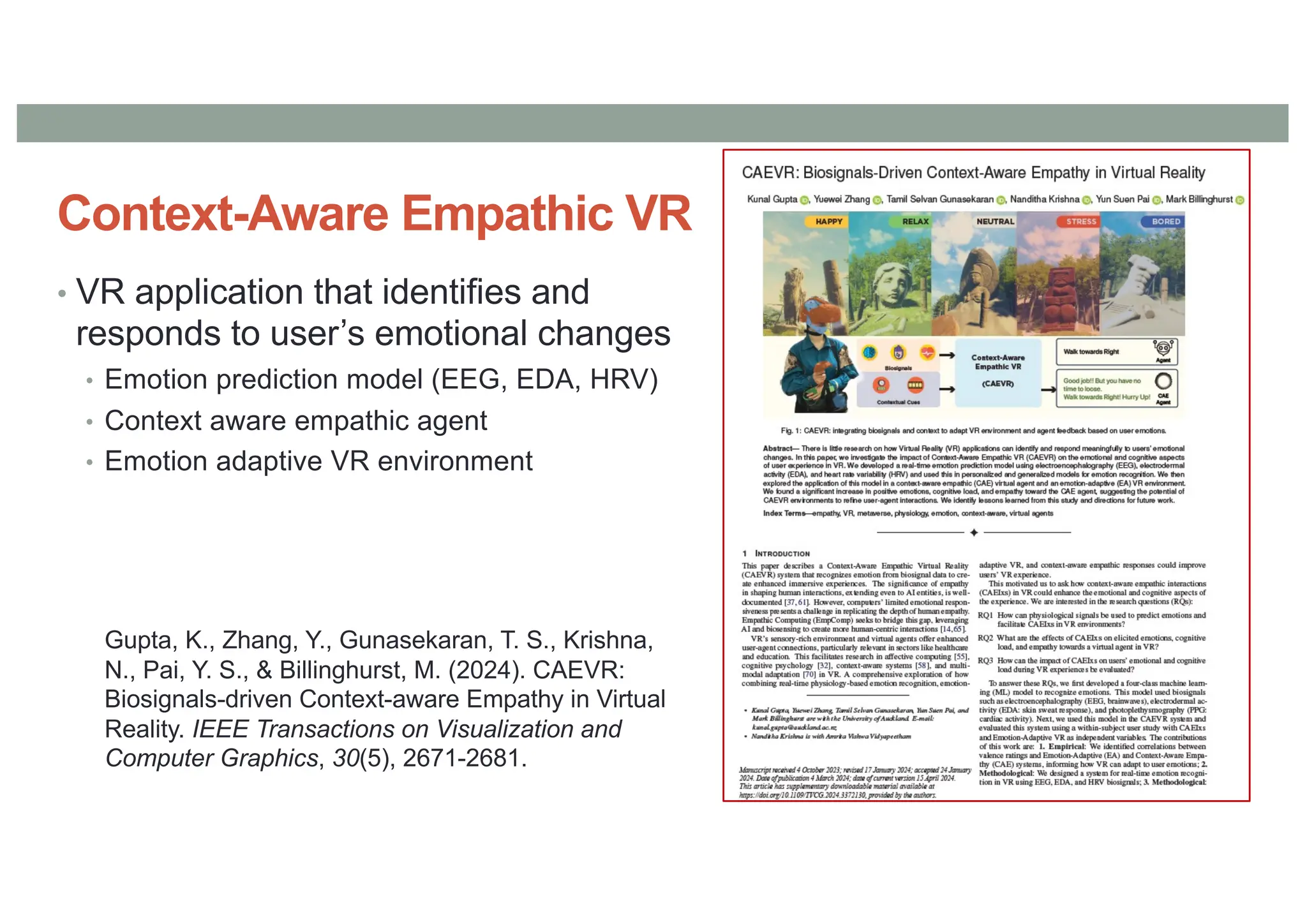

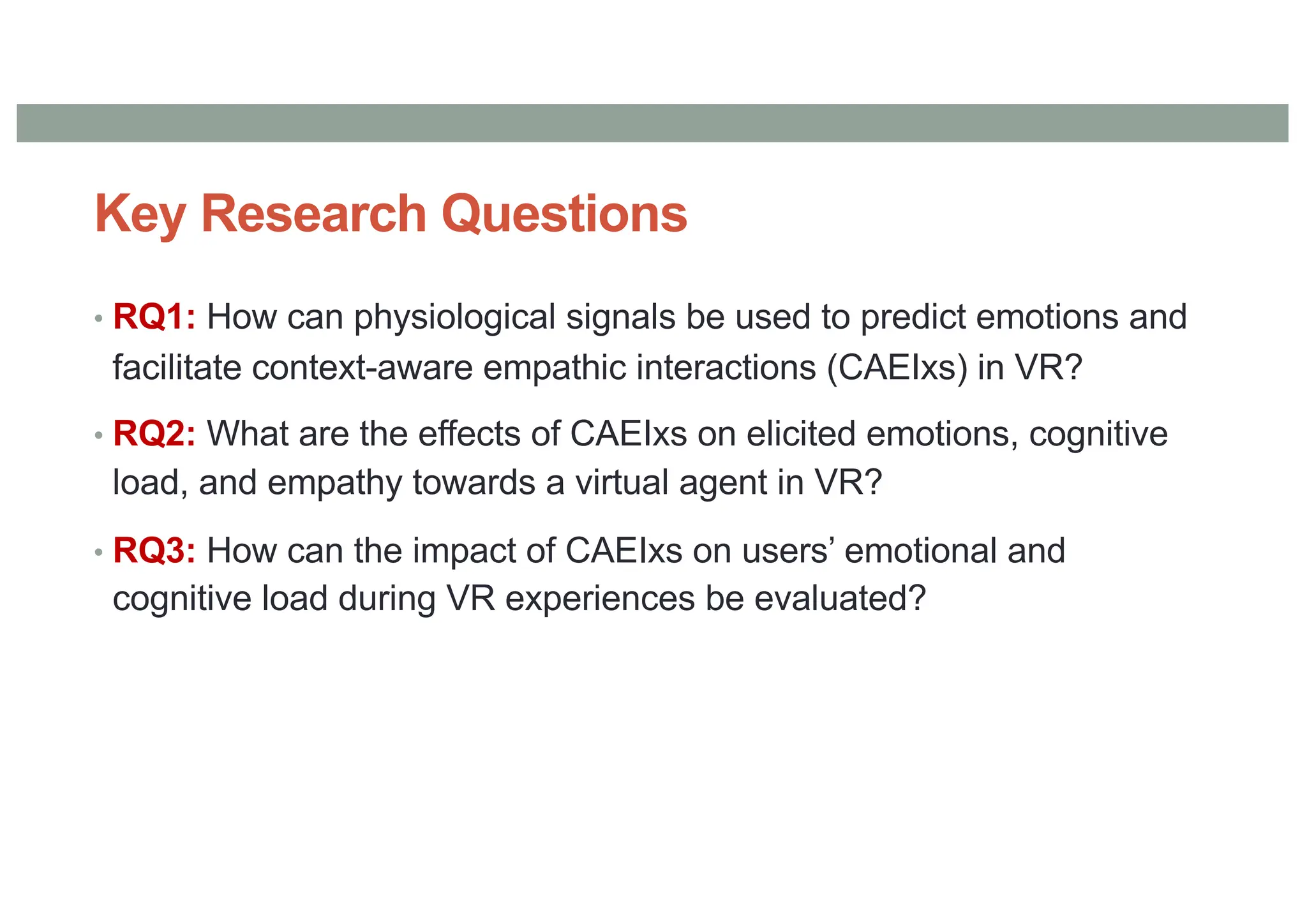

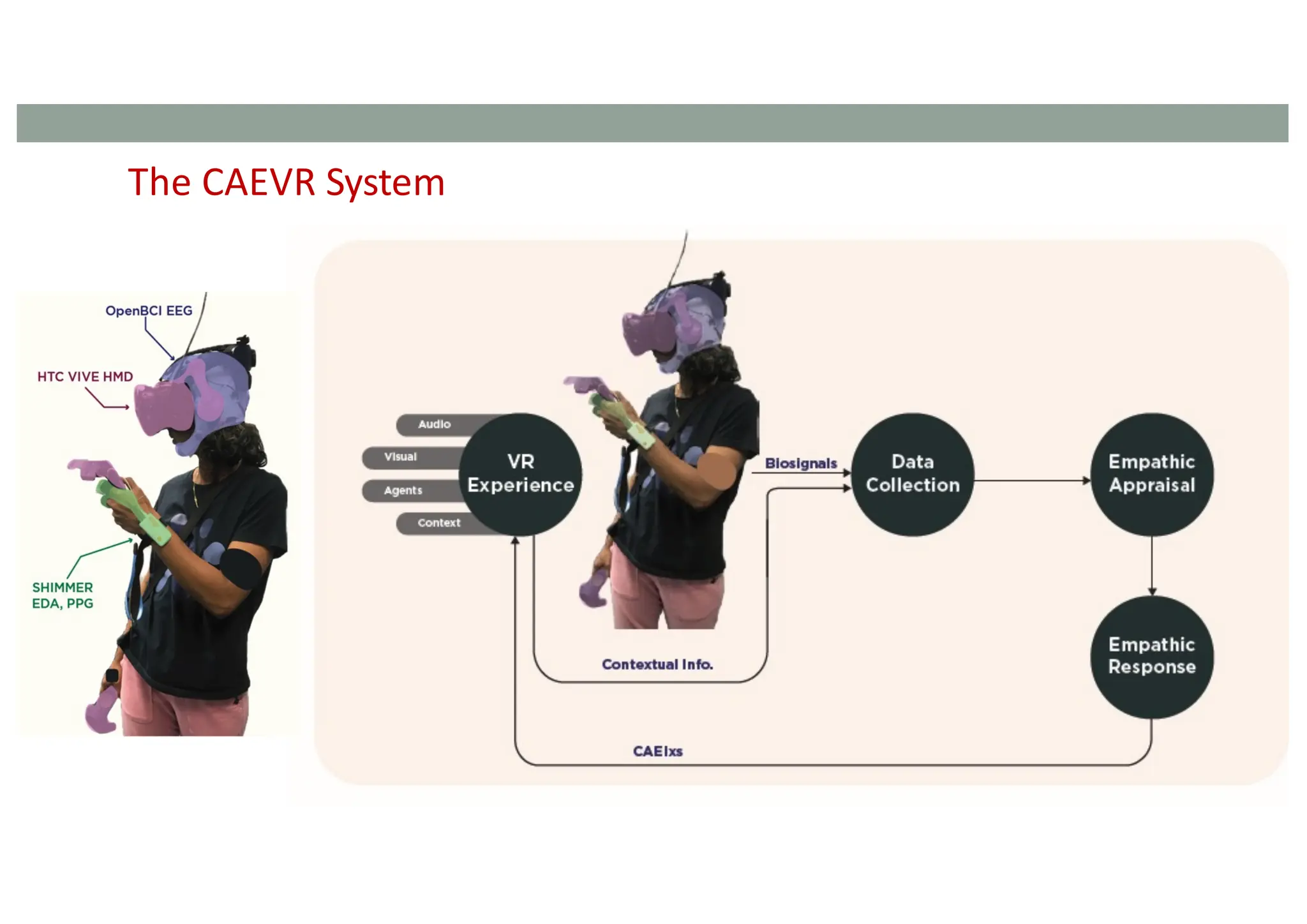

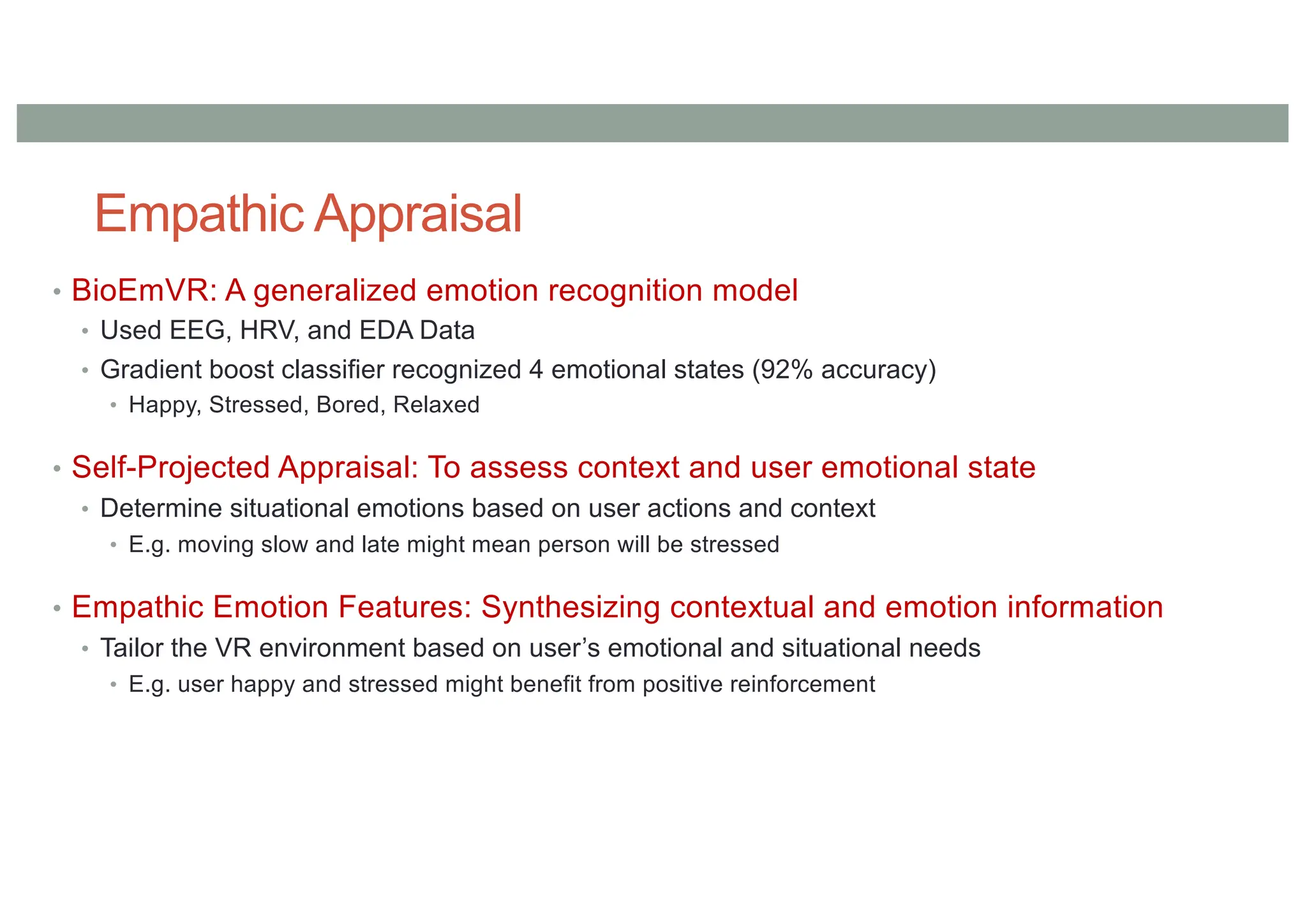

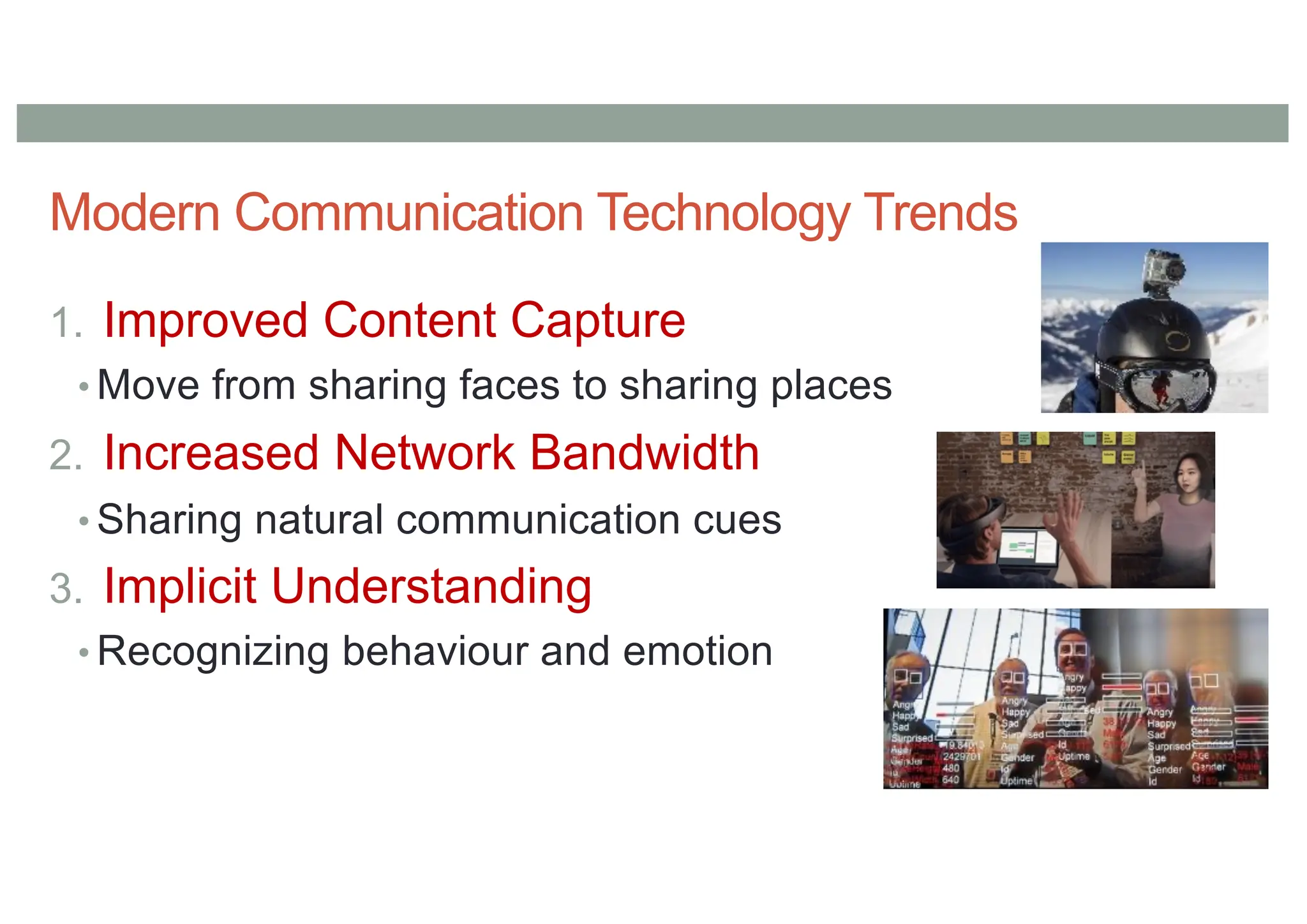

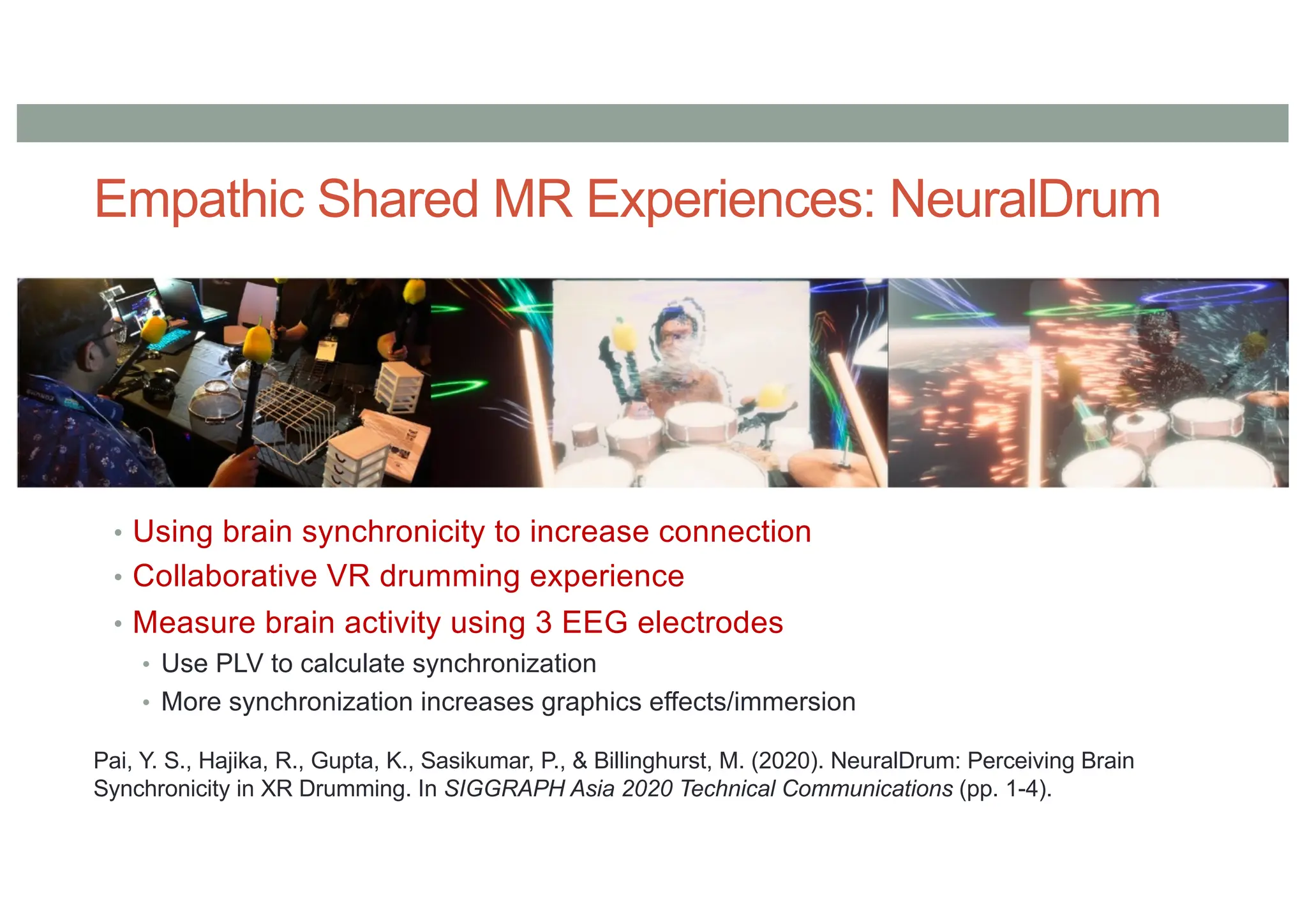

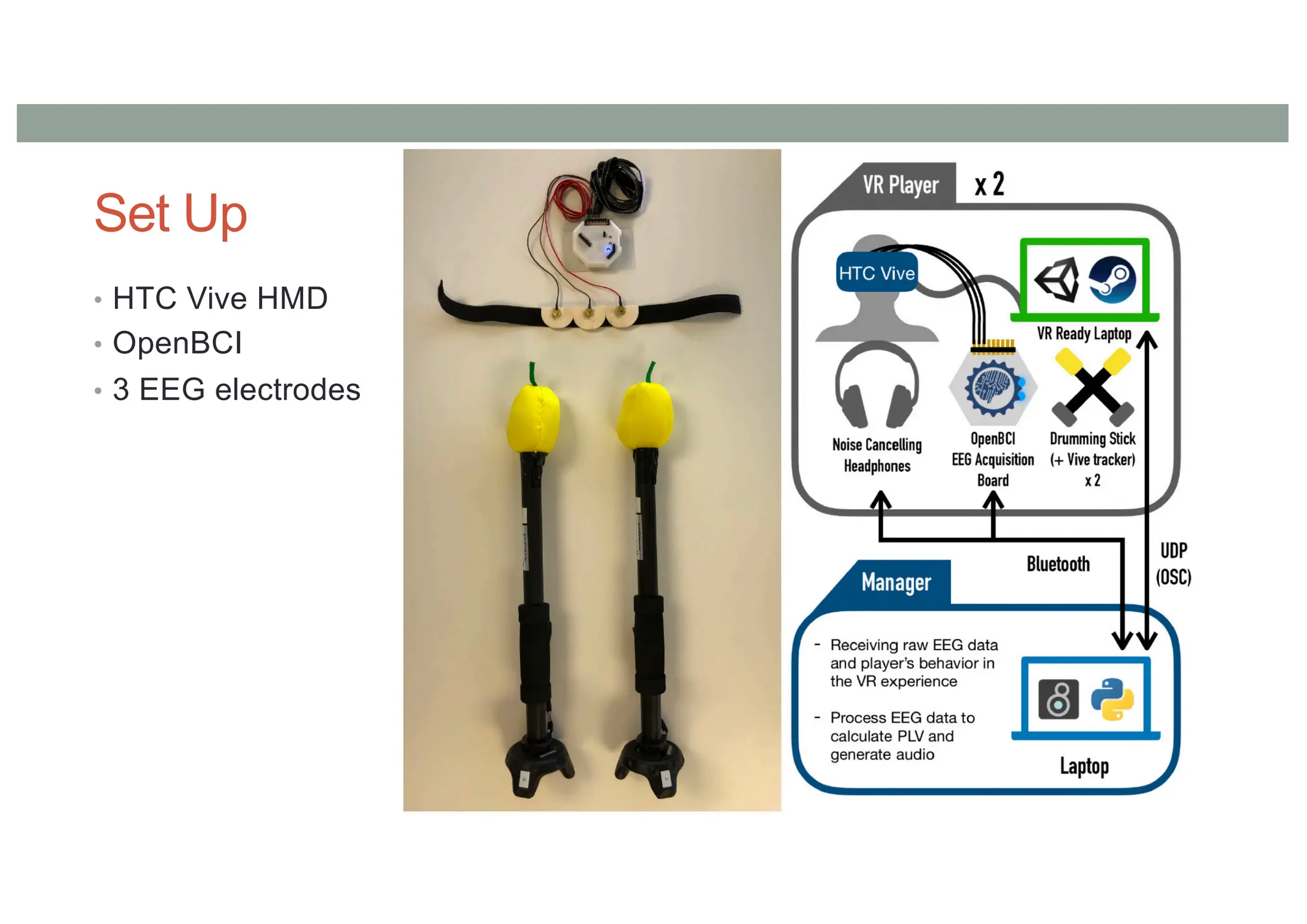

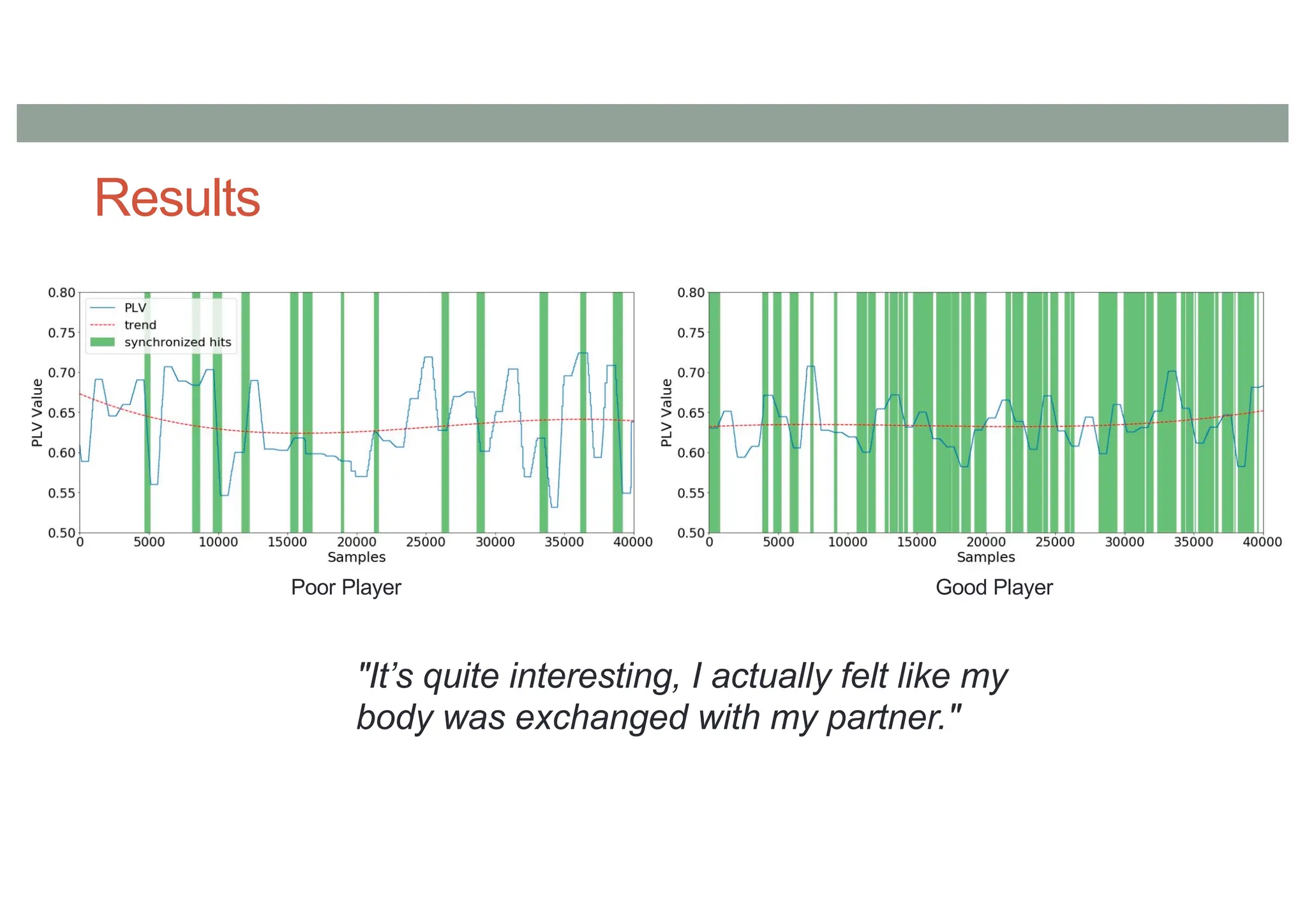

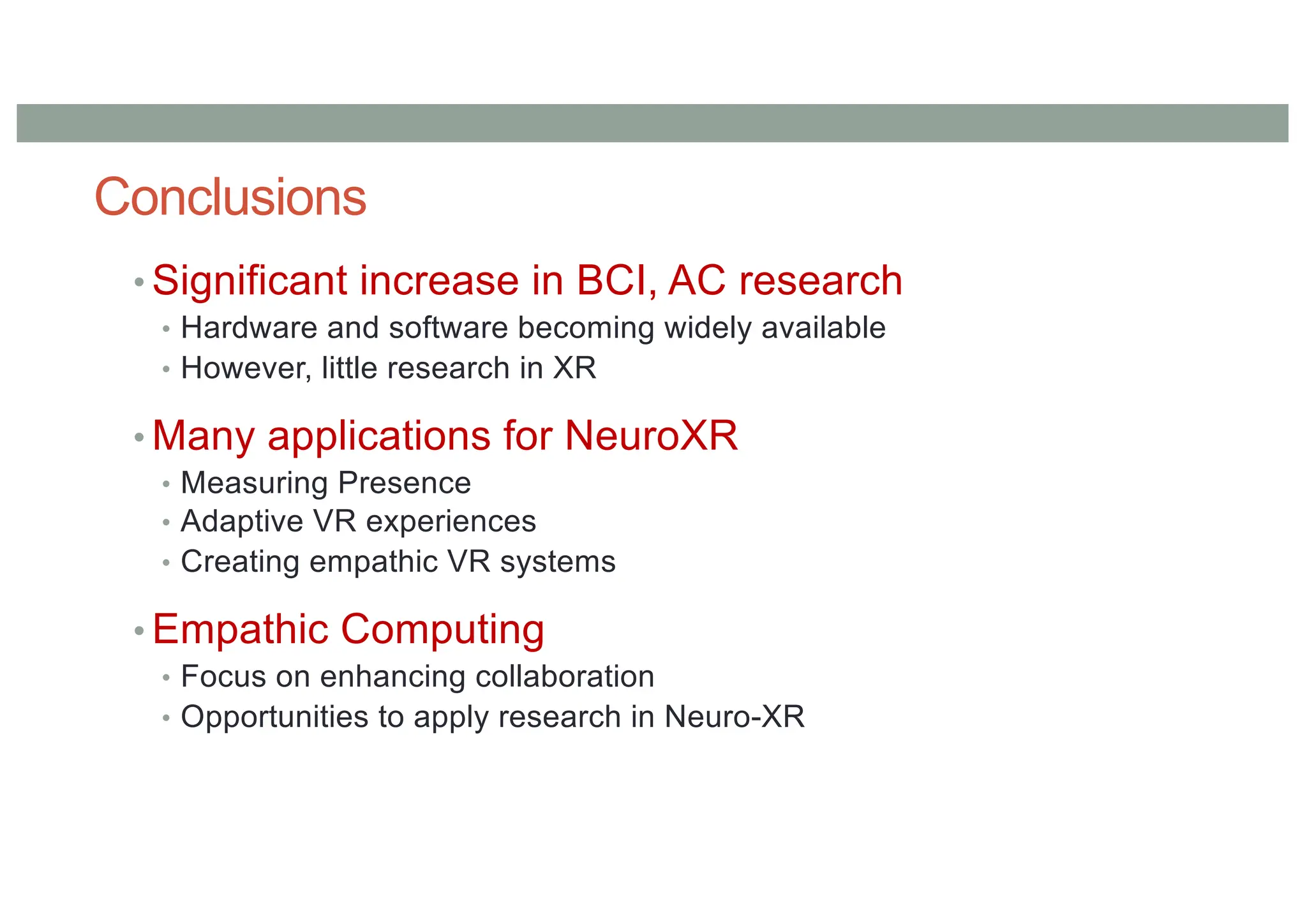

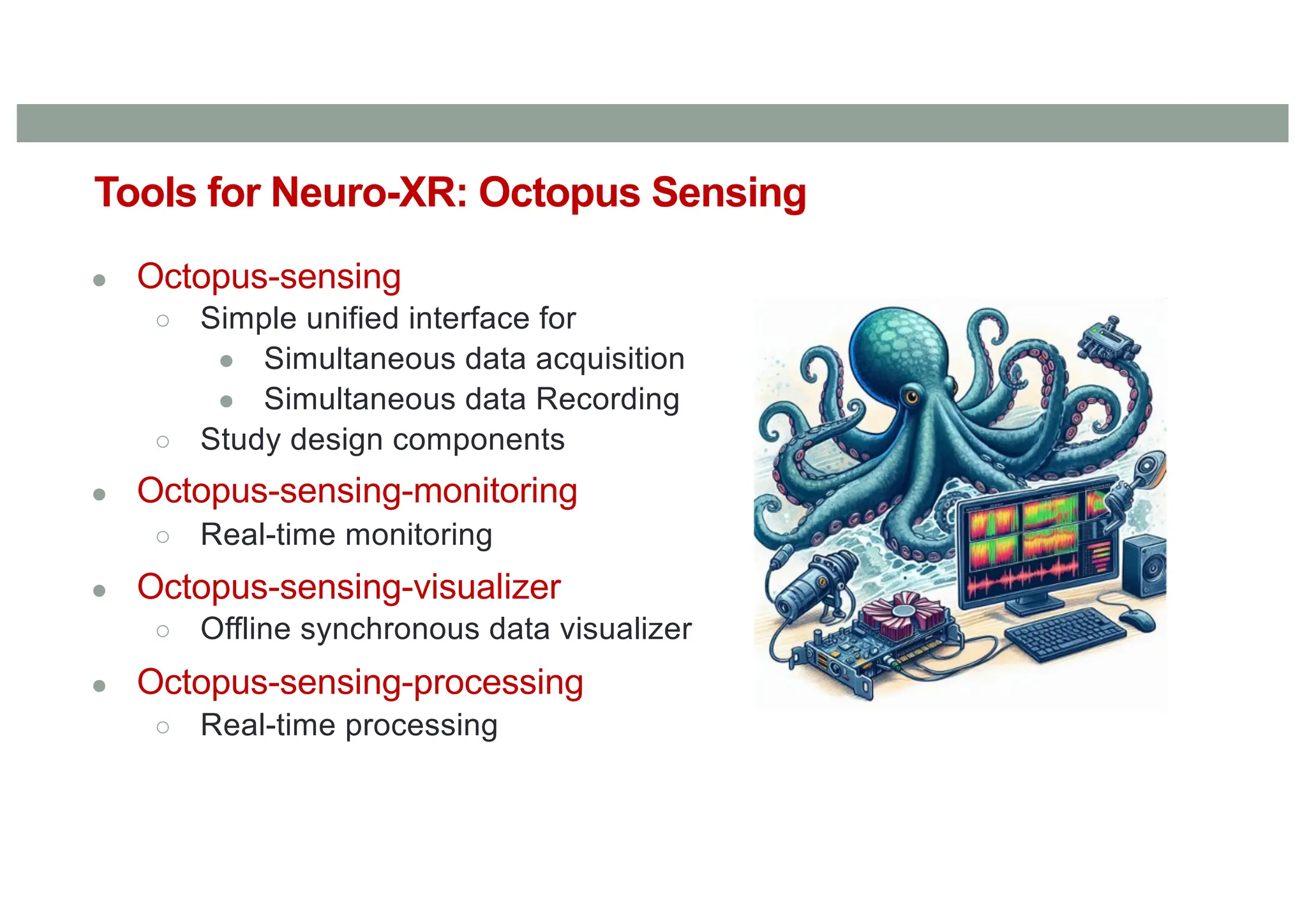

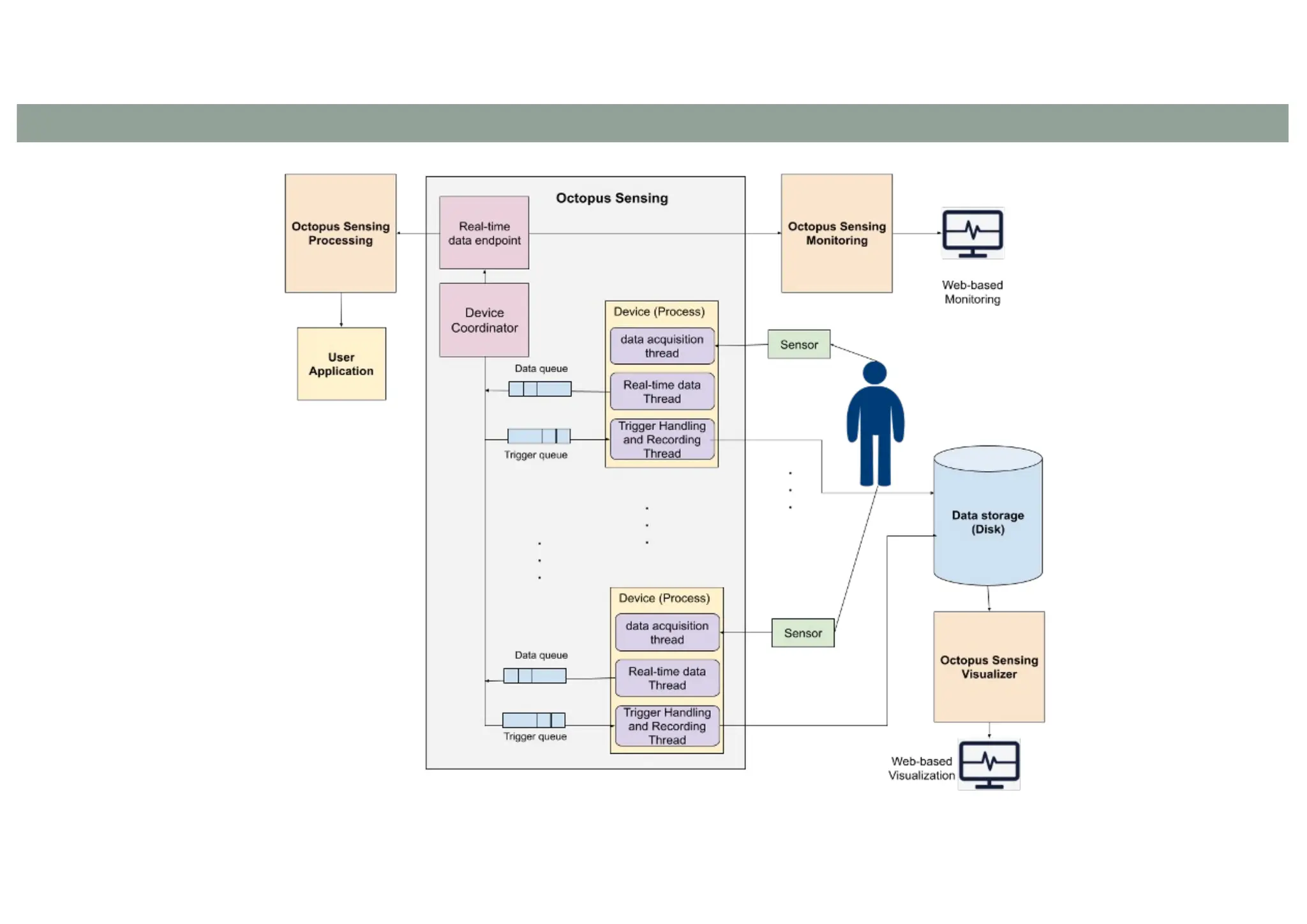

This keynote speech was given by Mark Billinghurst at the NeuroXR workshop at the ISMAR 2025 conference on October 12th, 2025. It describes current research and opportunities for the use of neurophysiological signals and affective computing in Extended Reality.