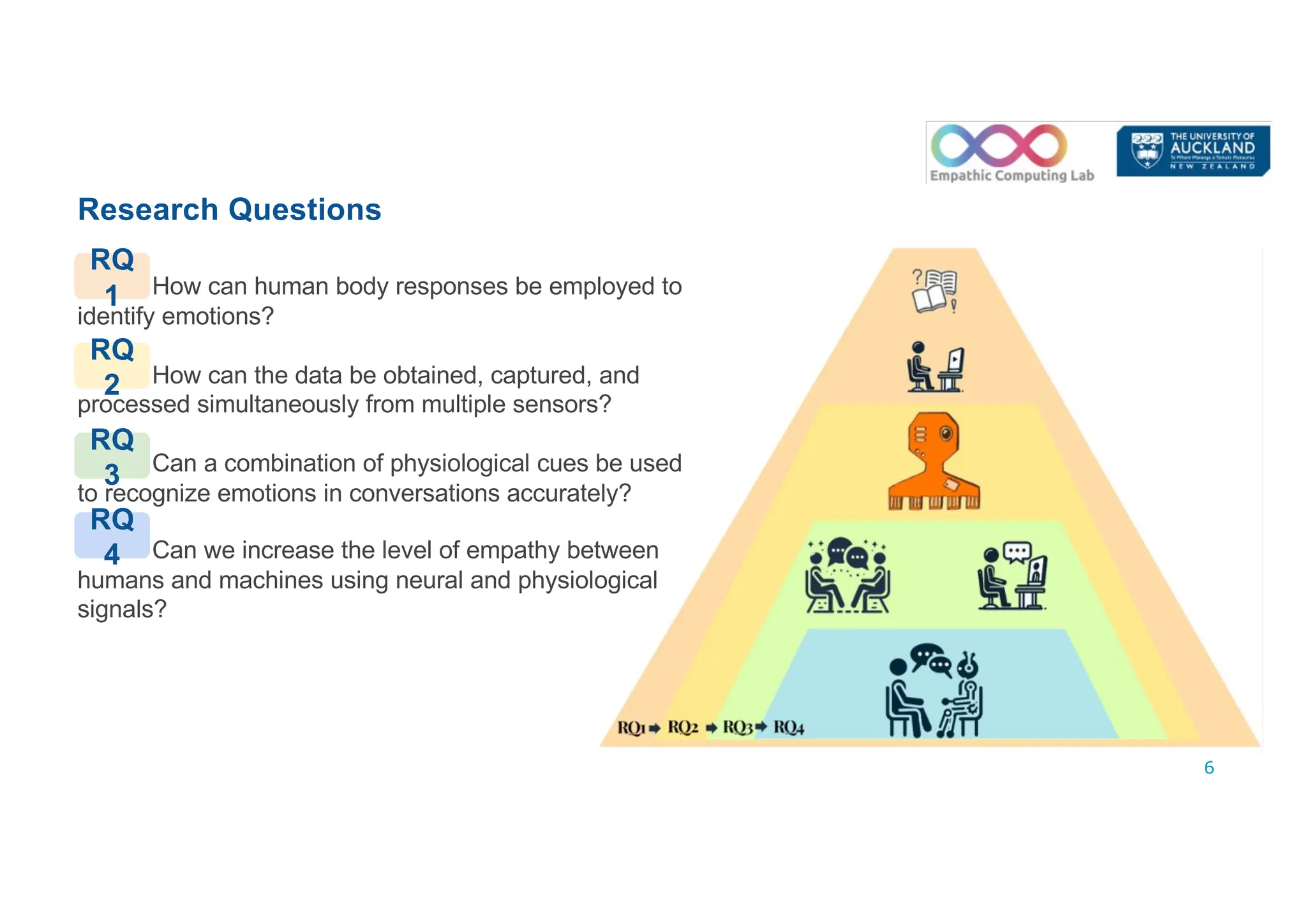

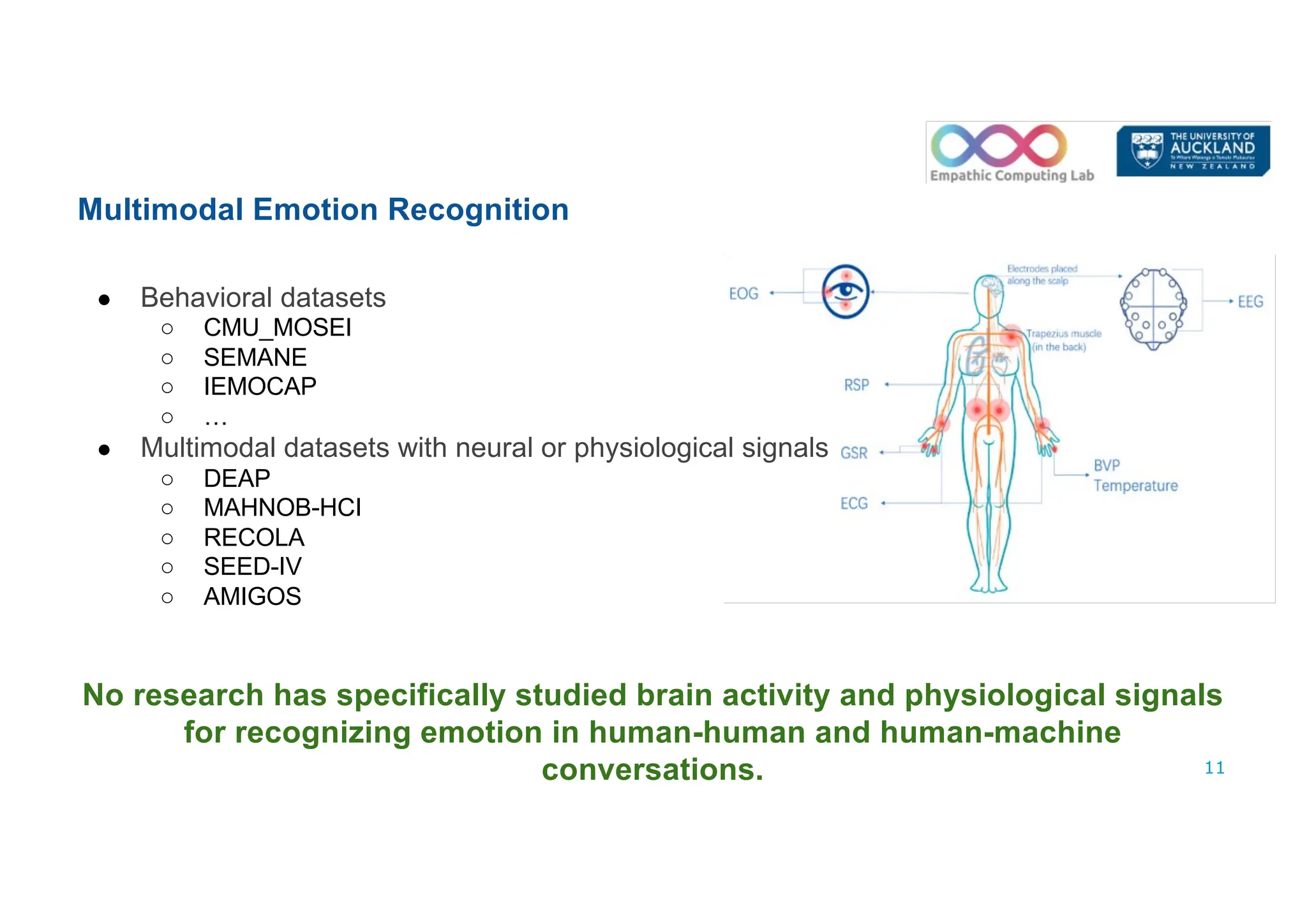

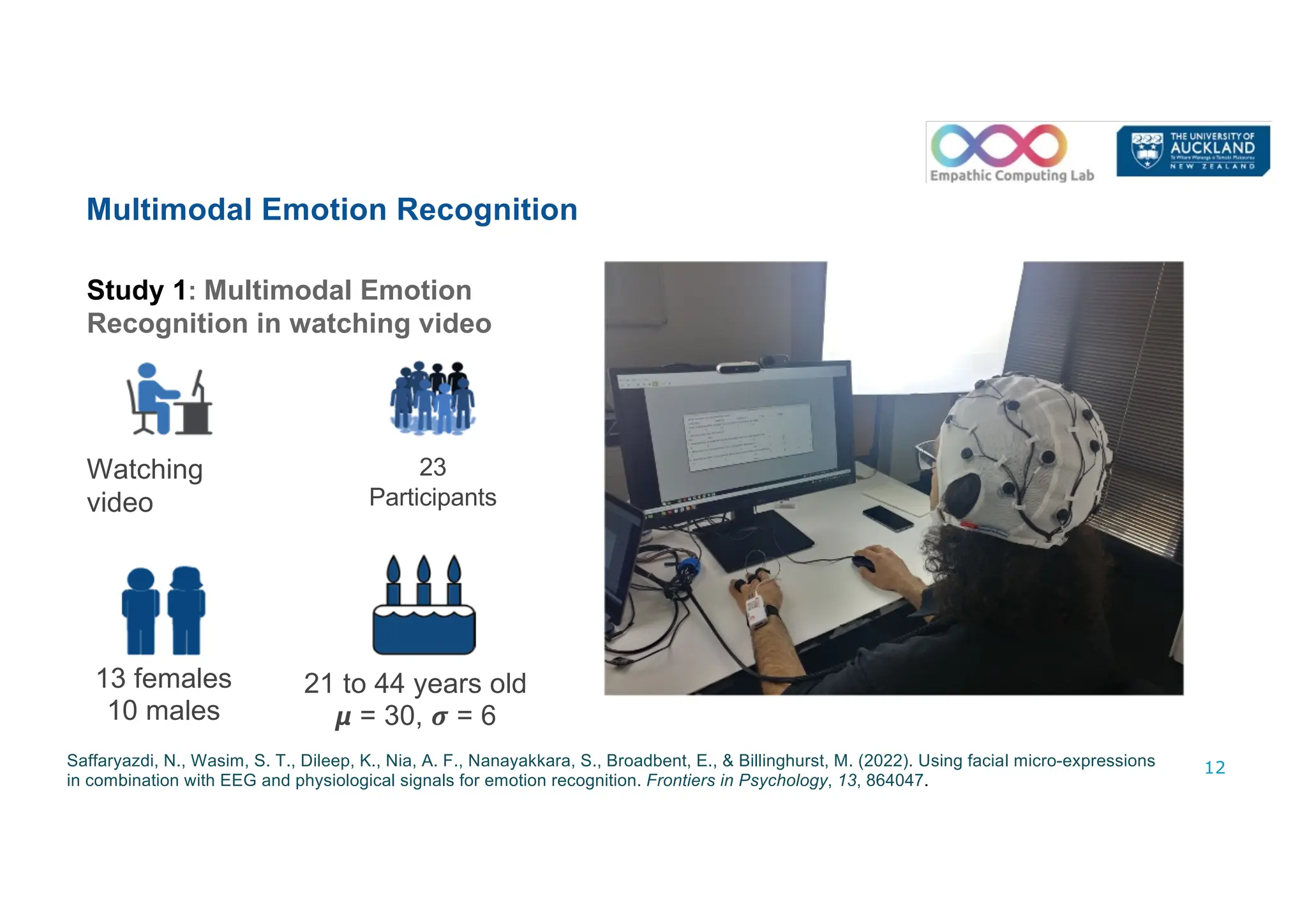

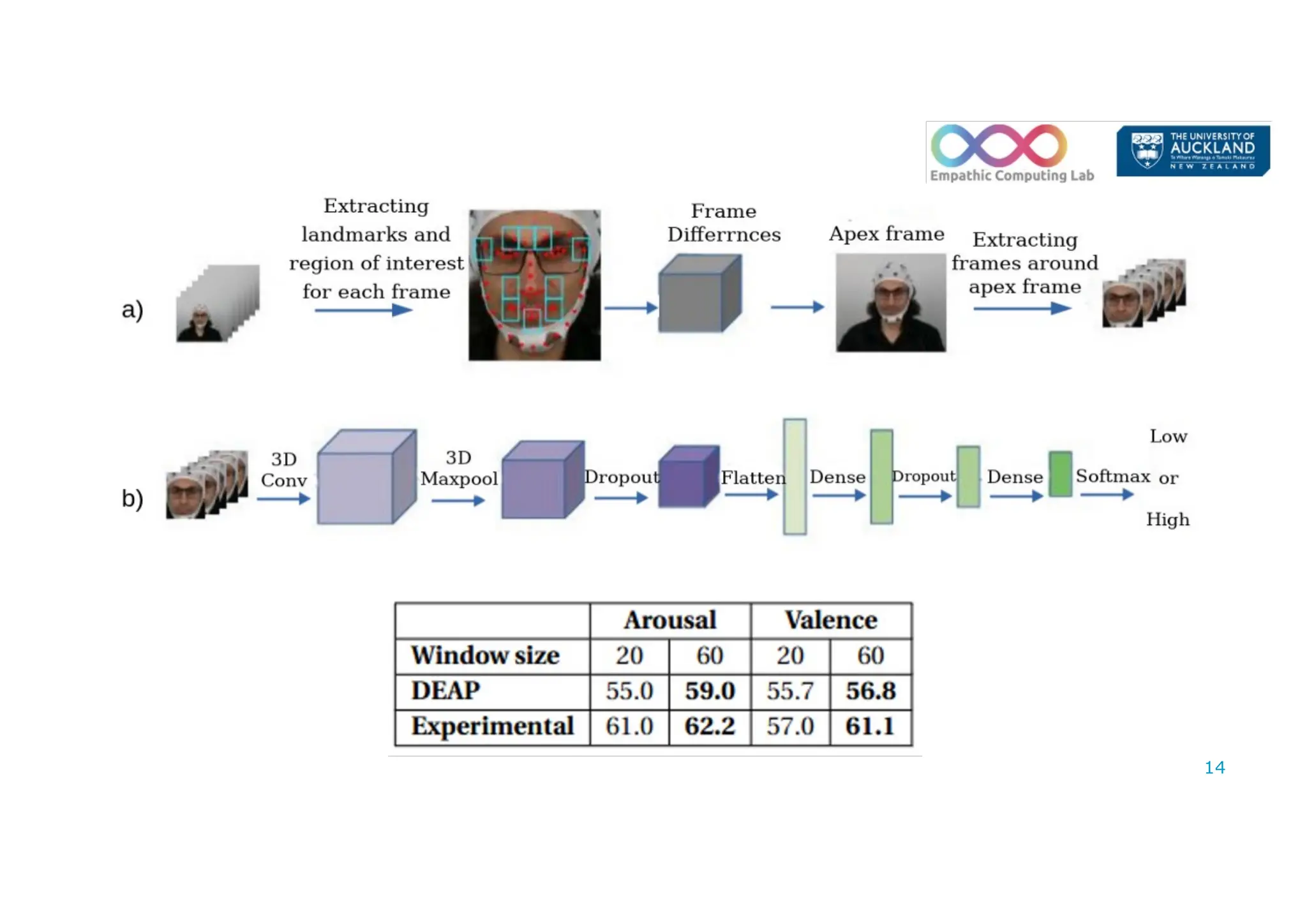

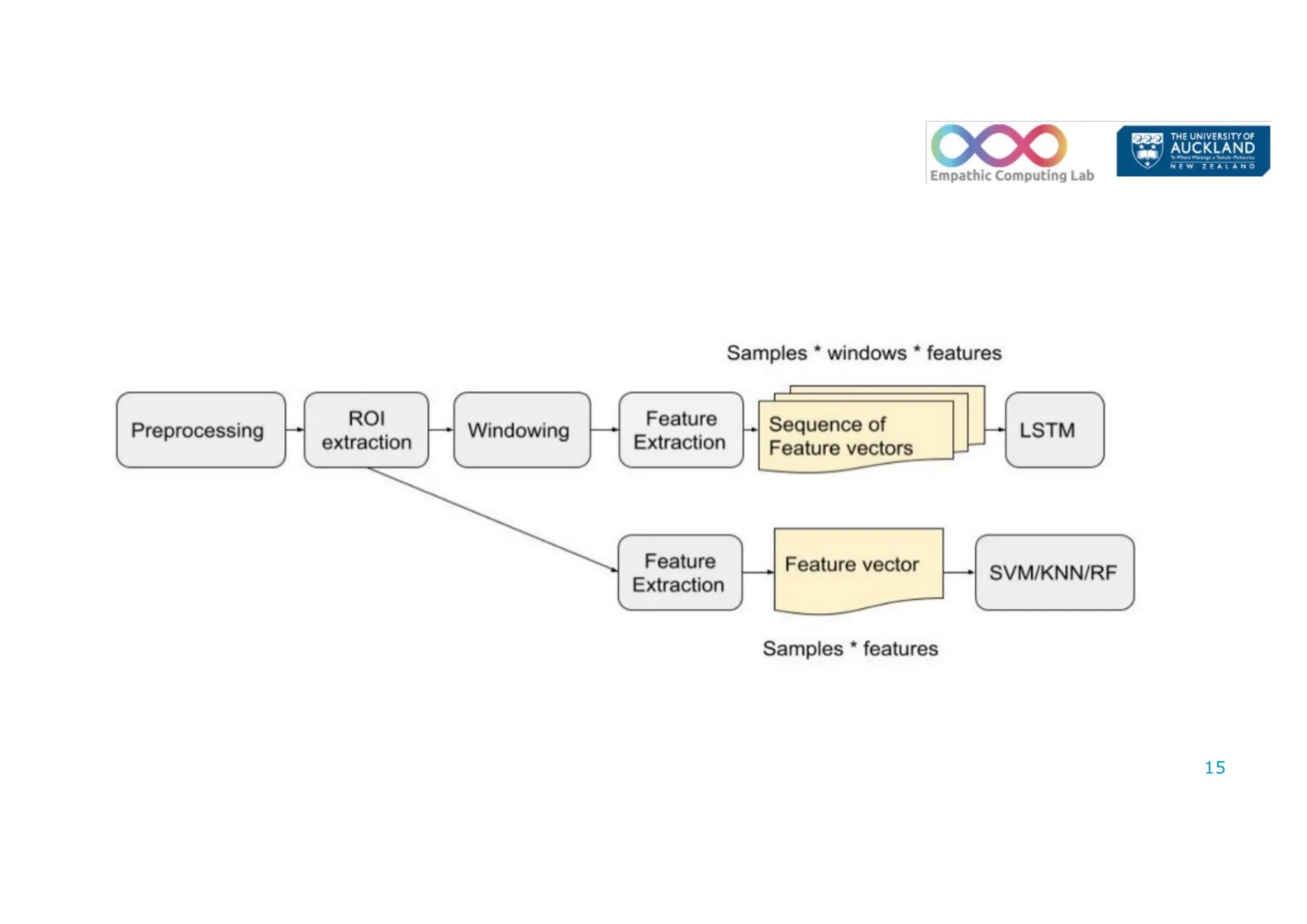

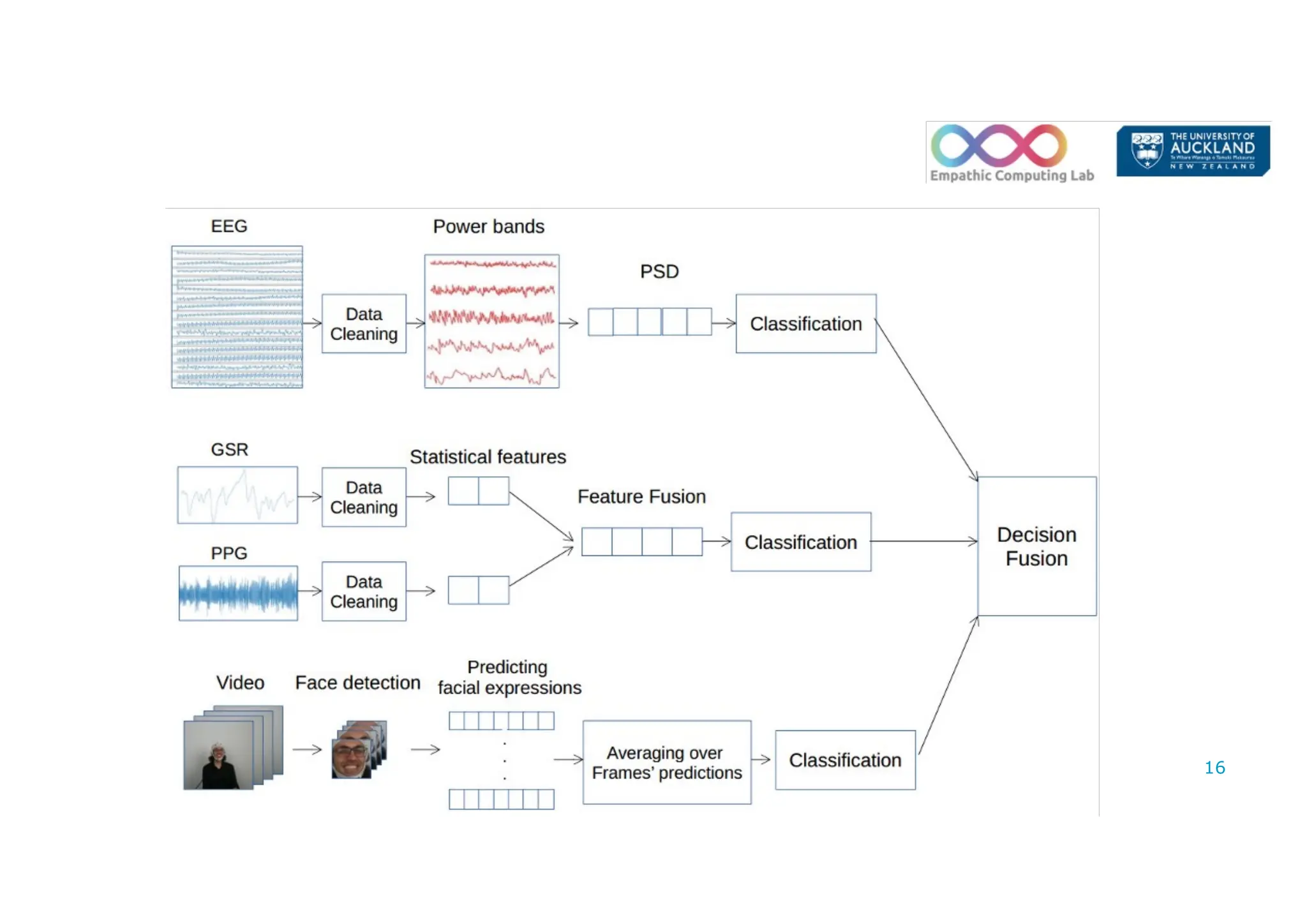

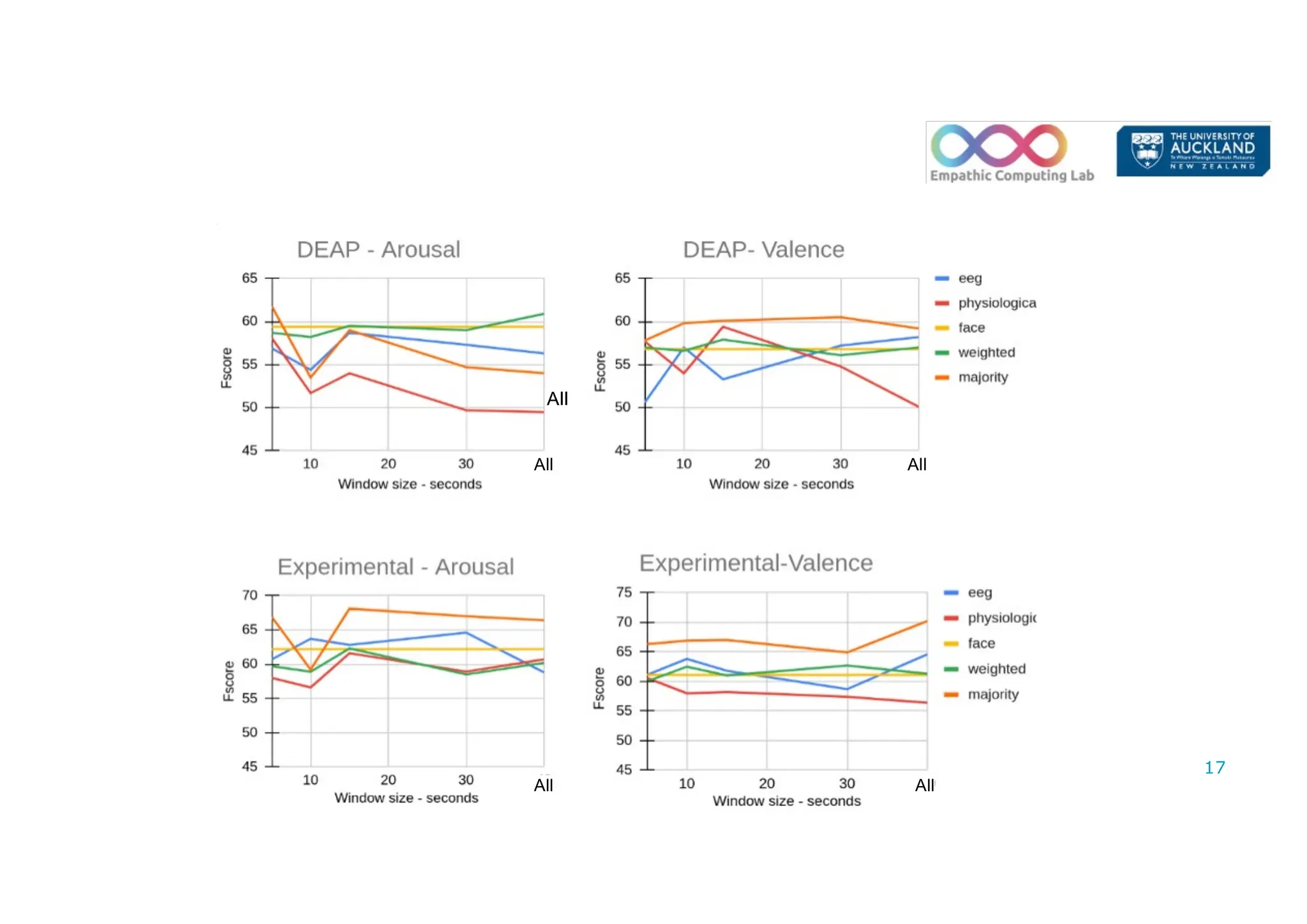

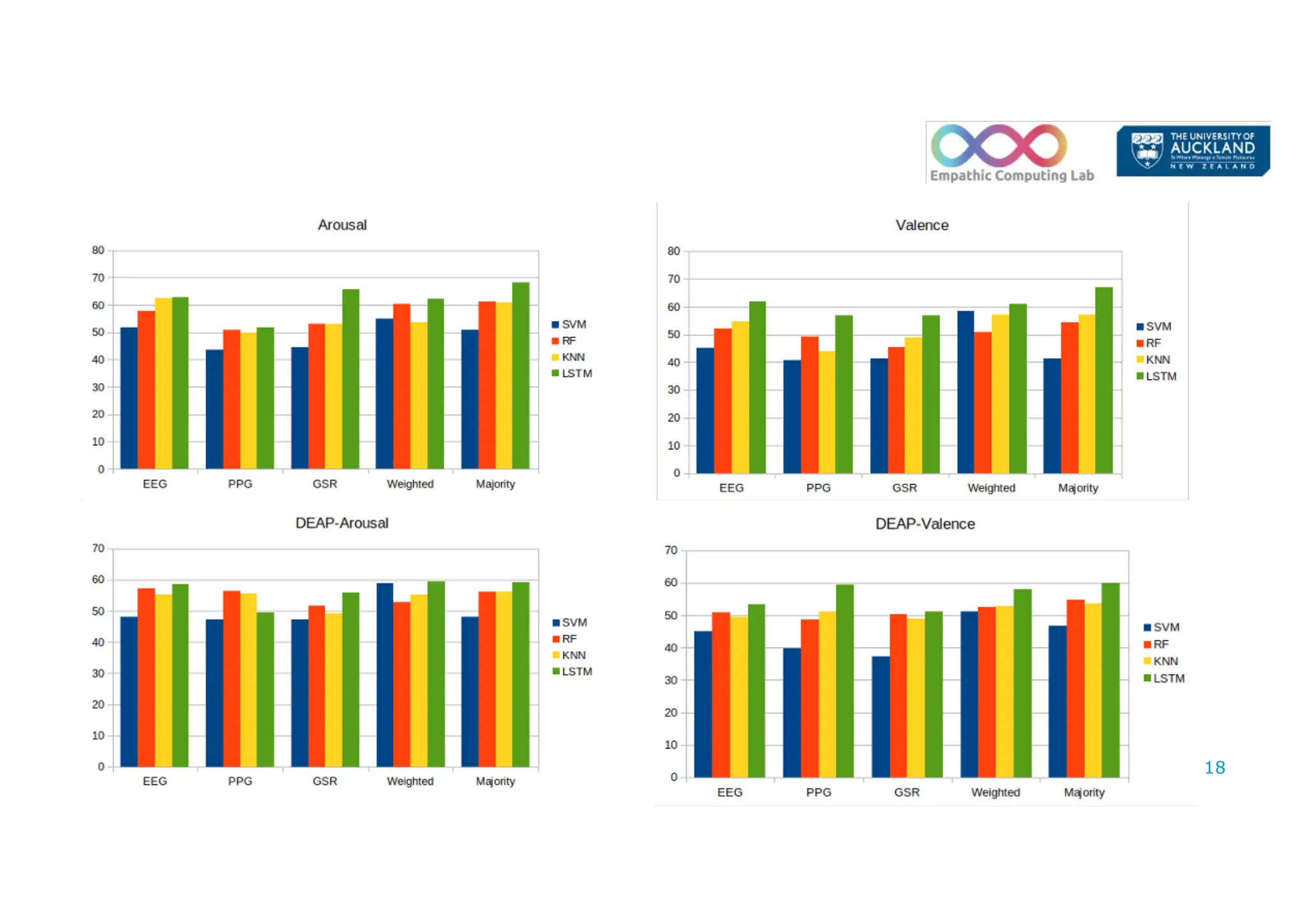

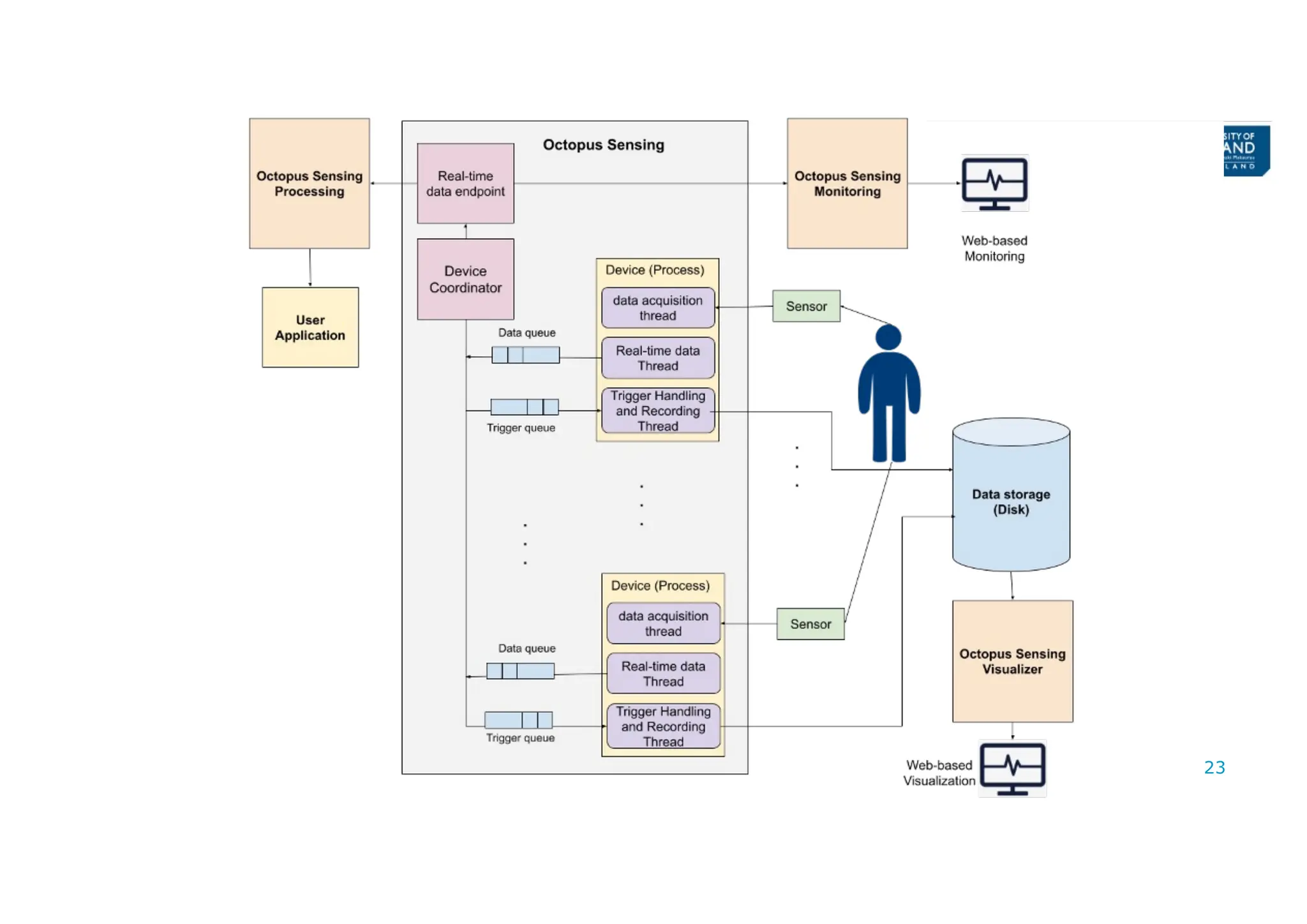

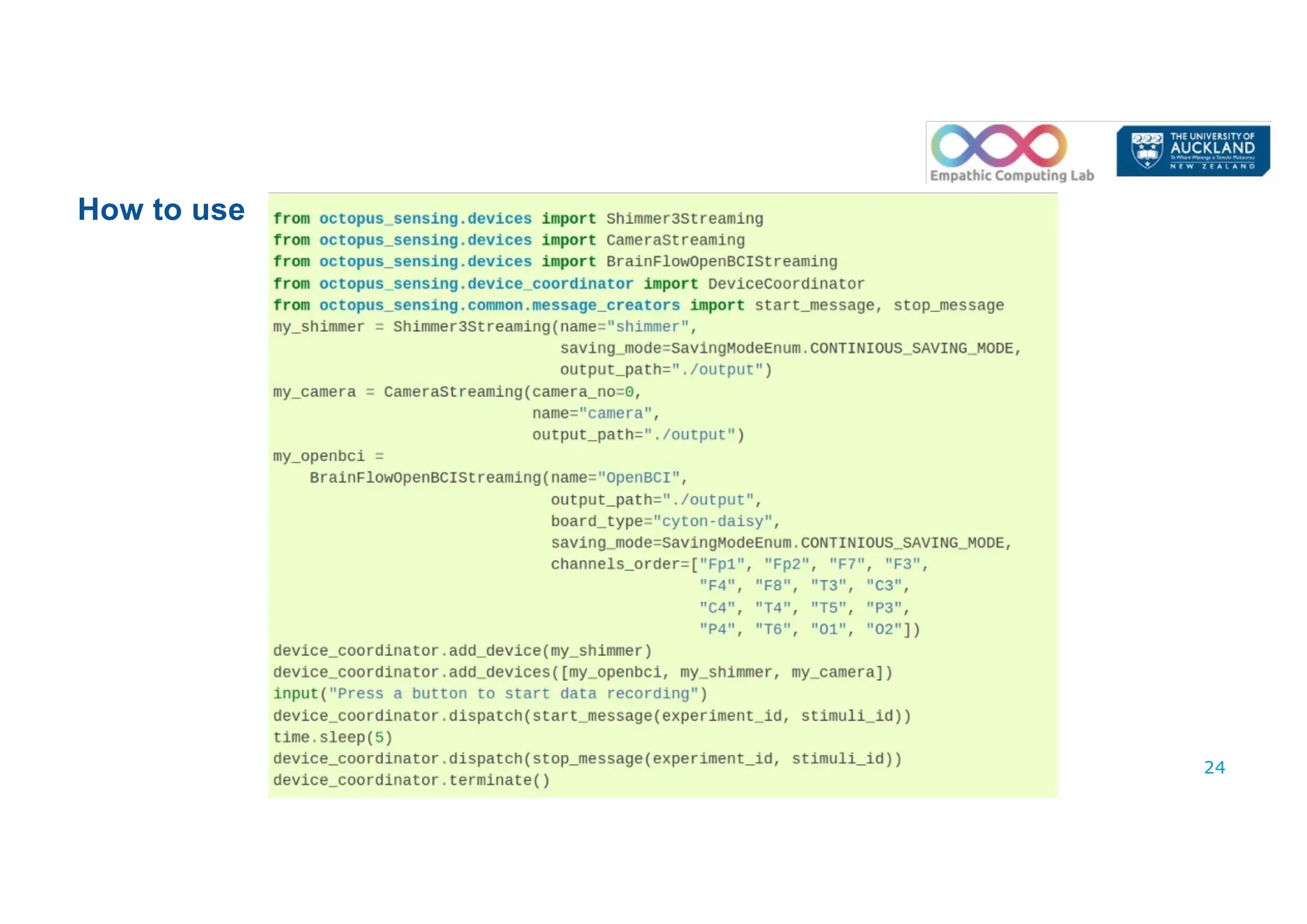

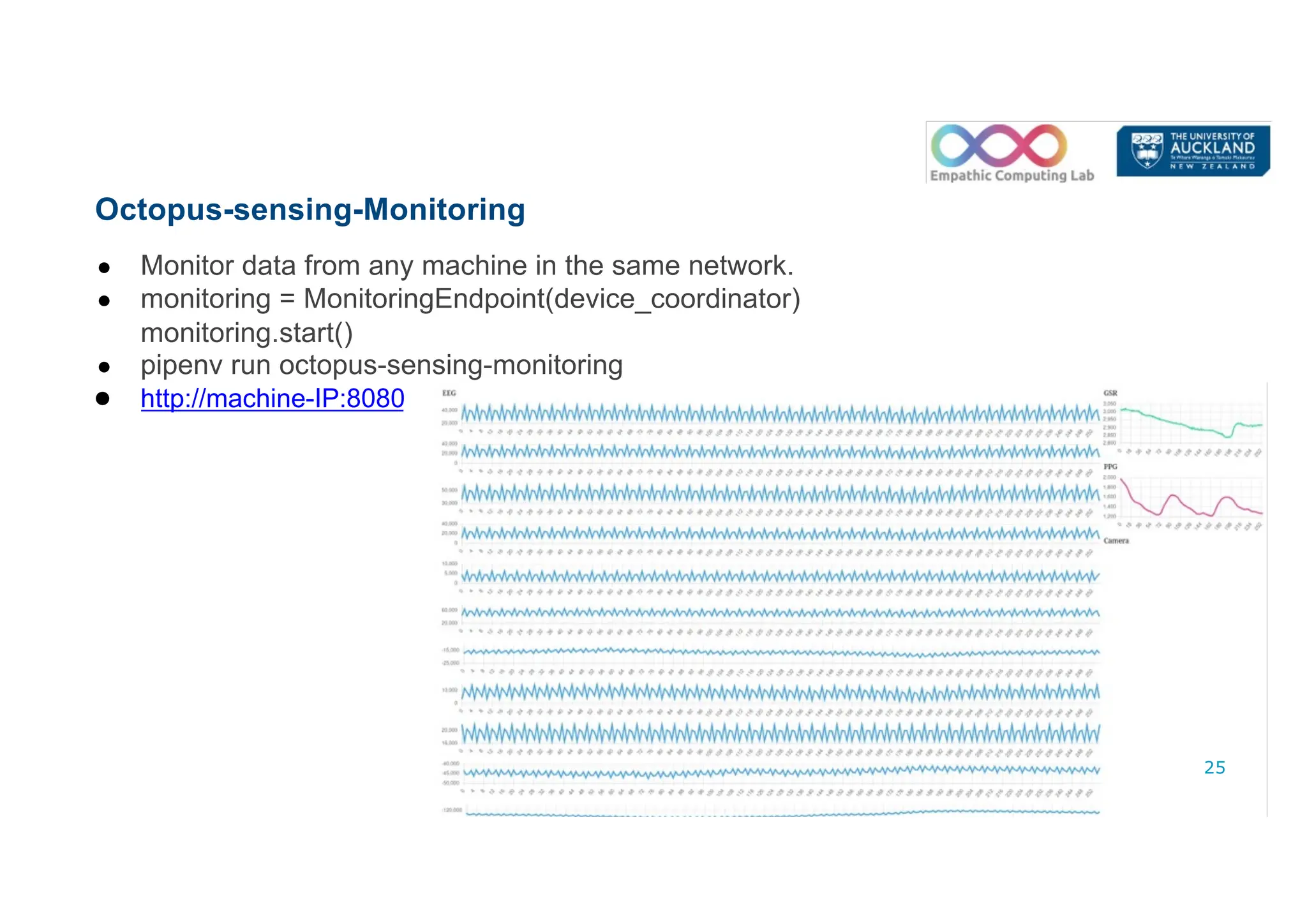

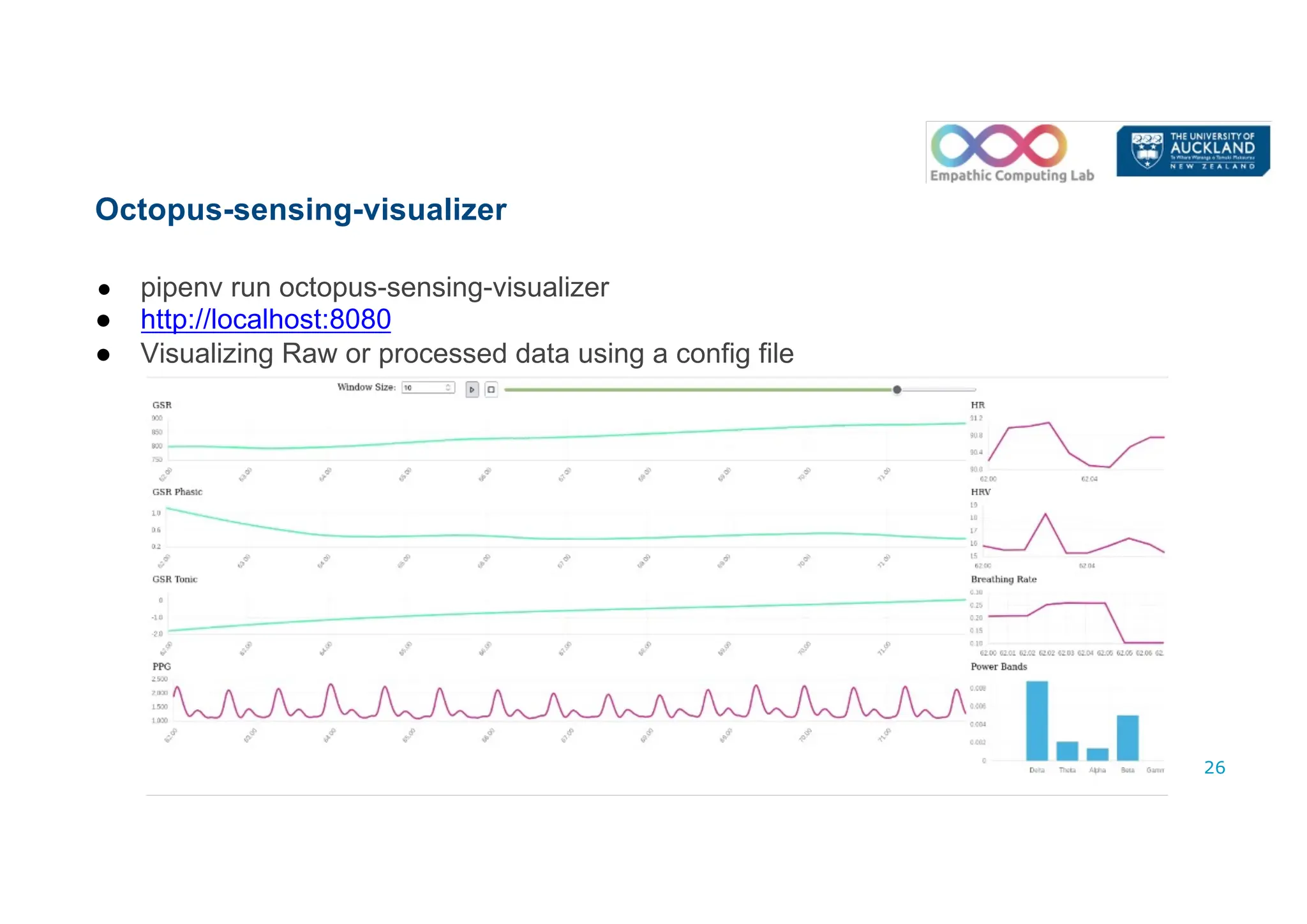

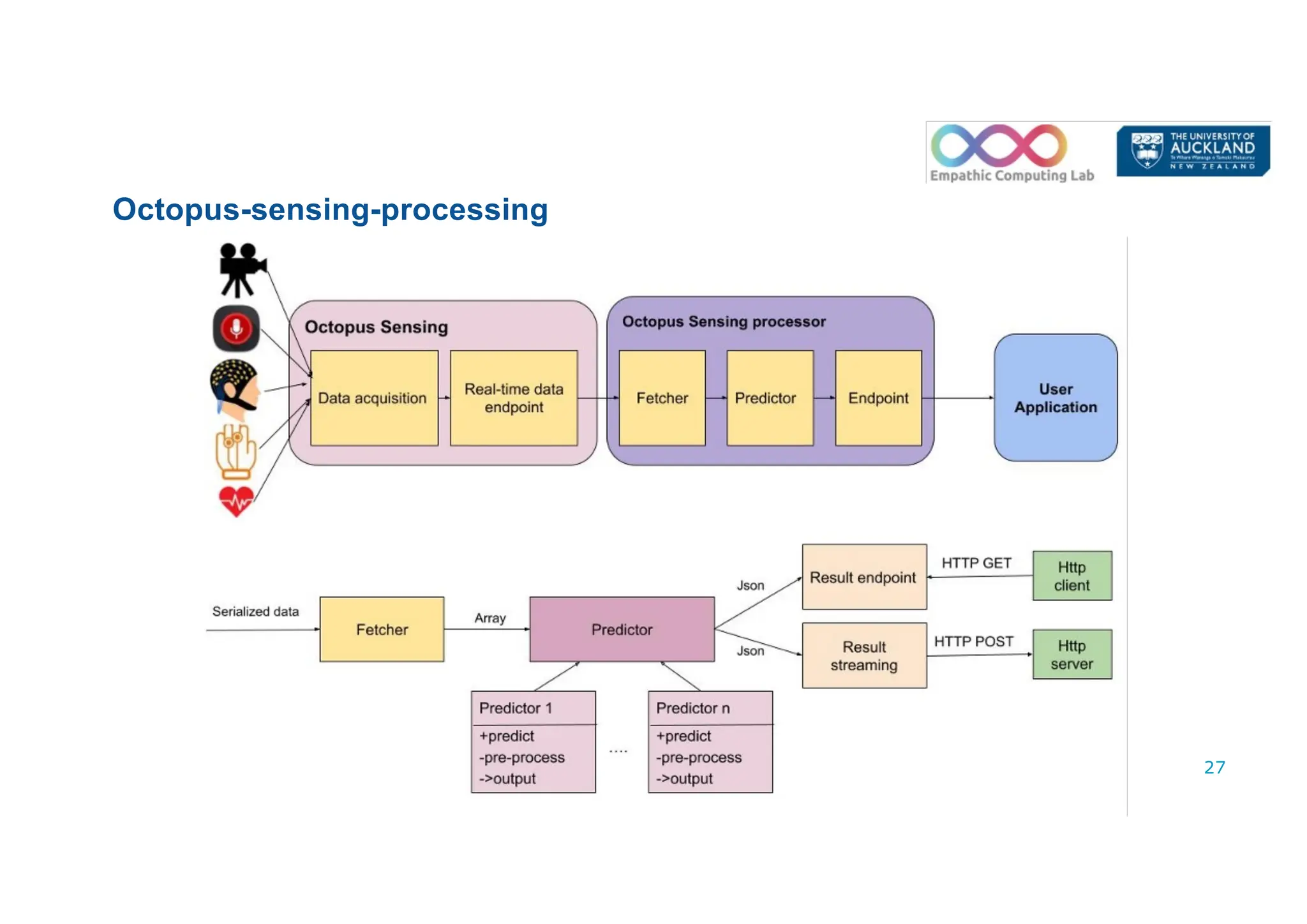

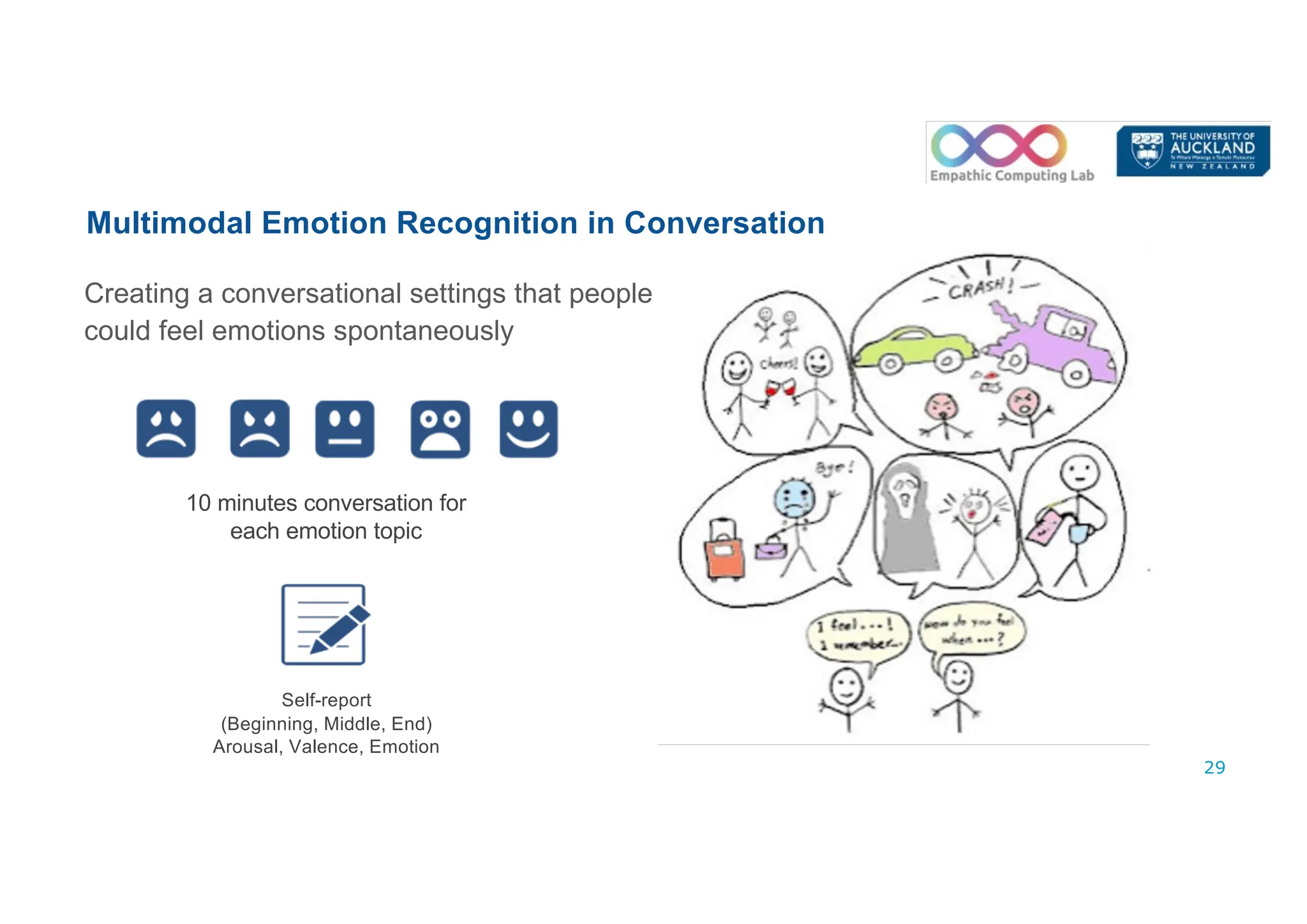

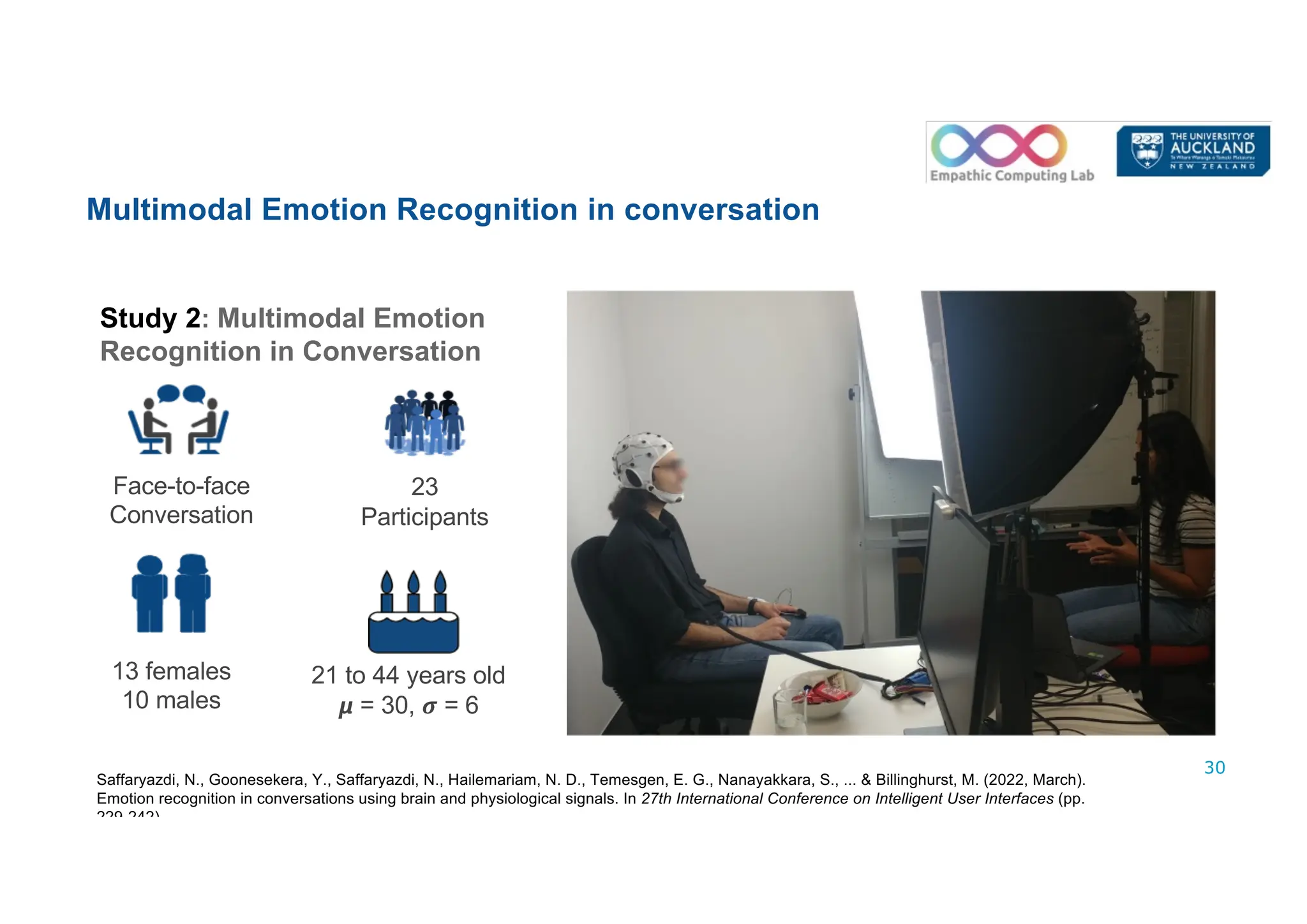

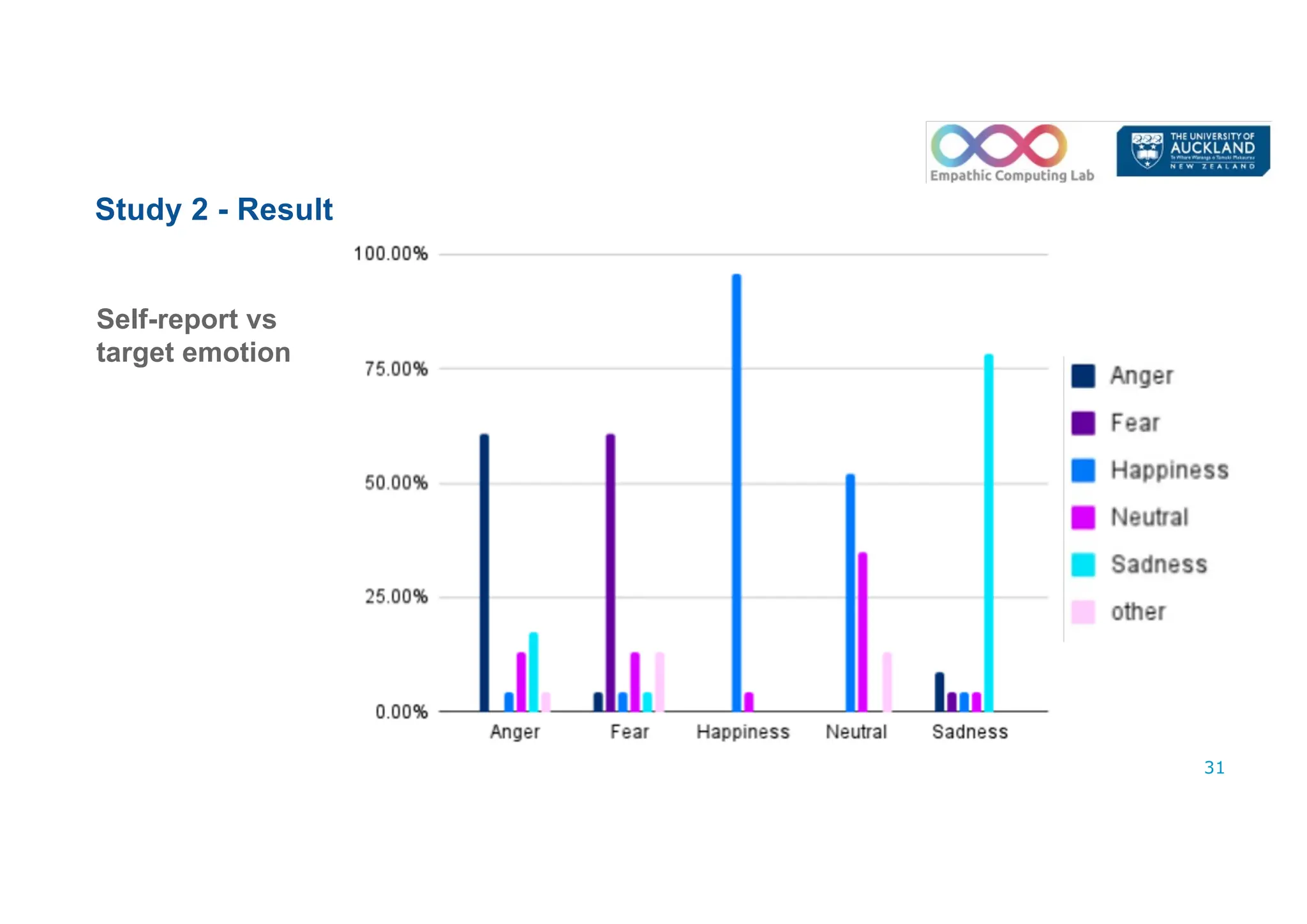

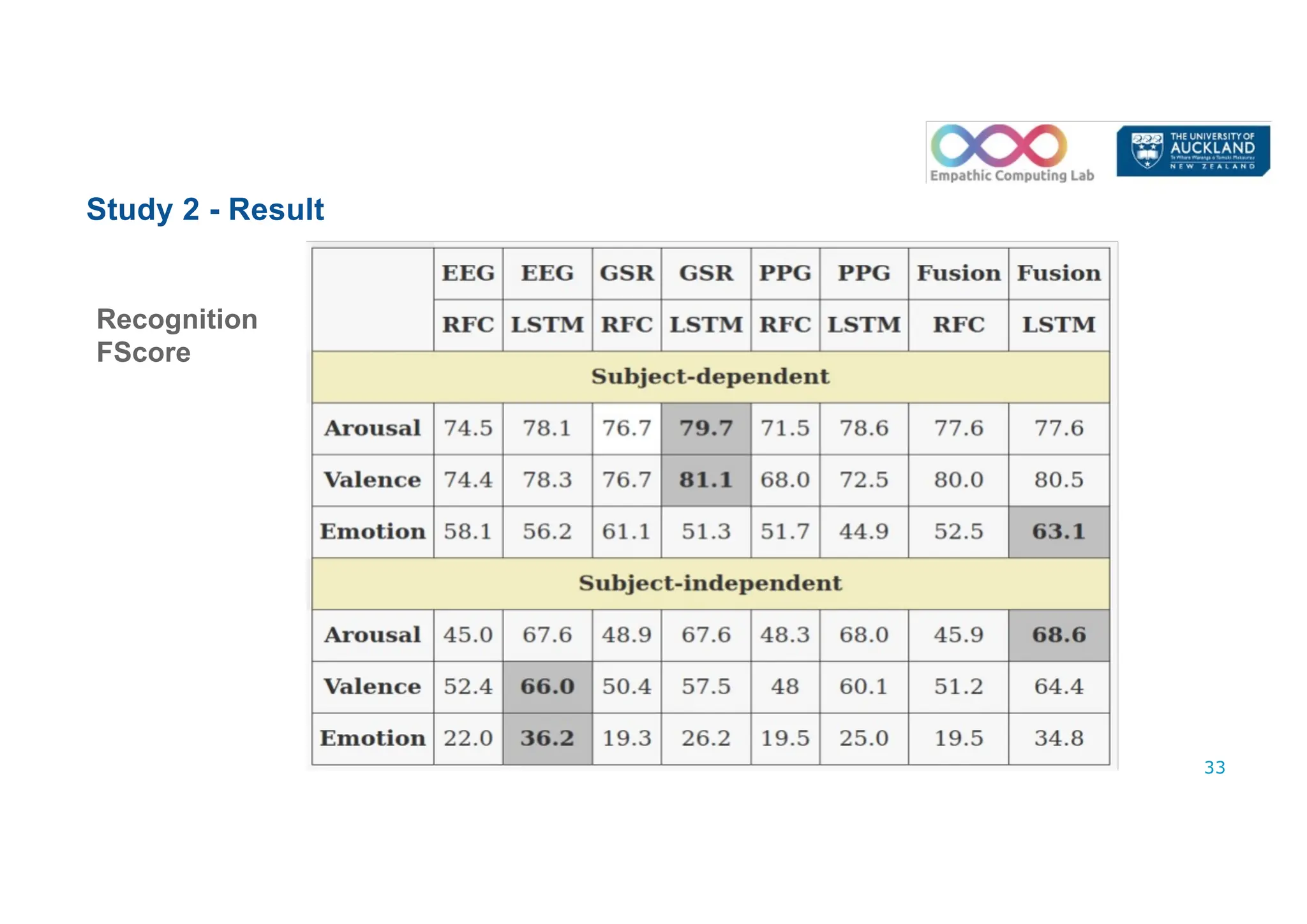

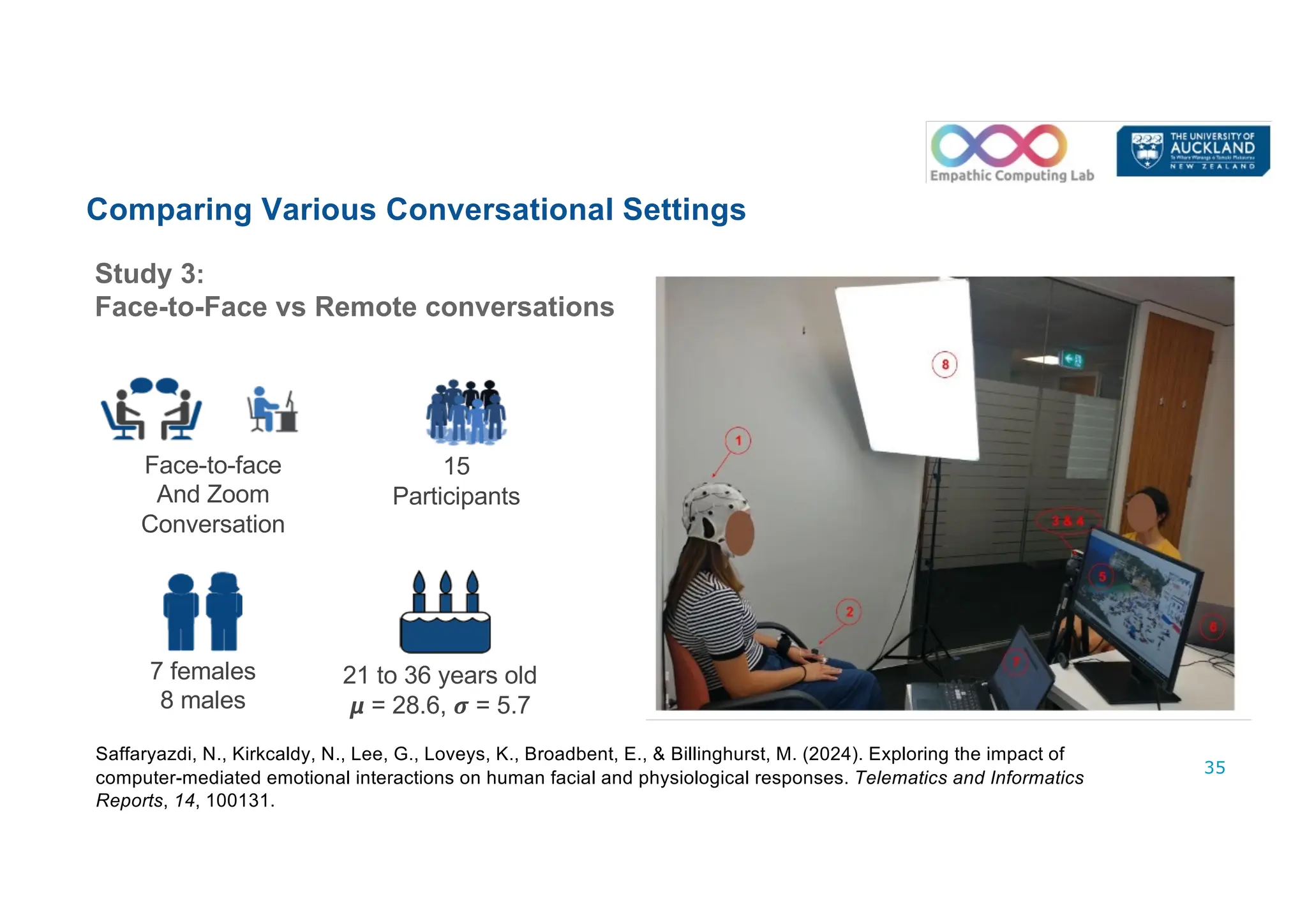

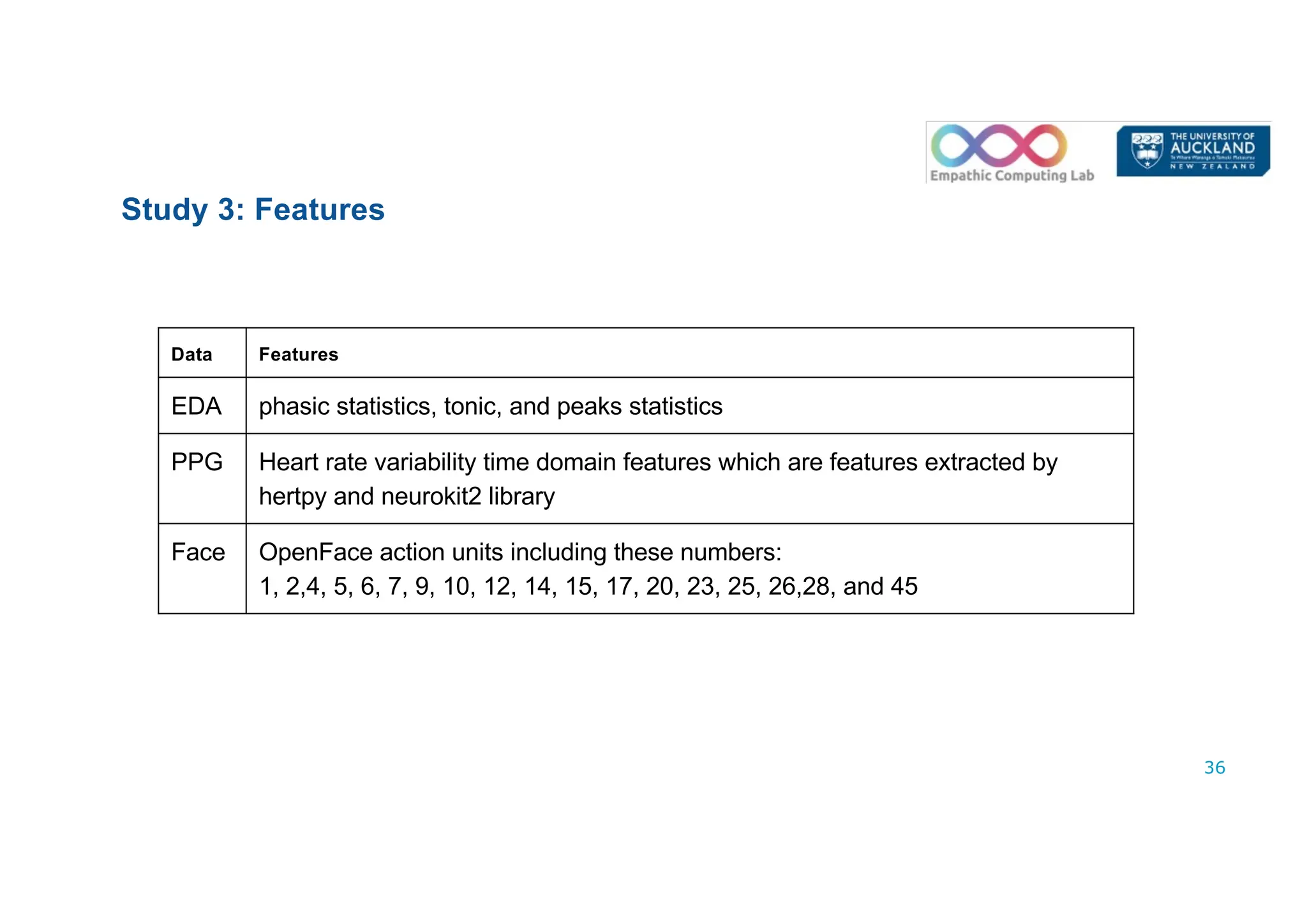

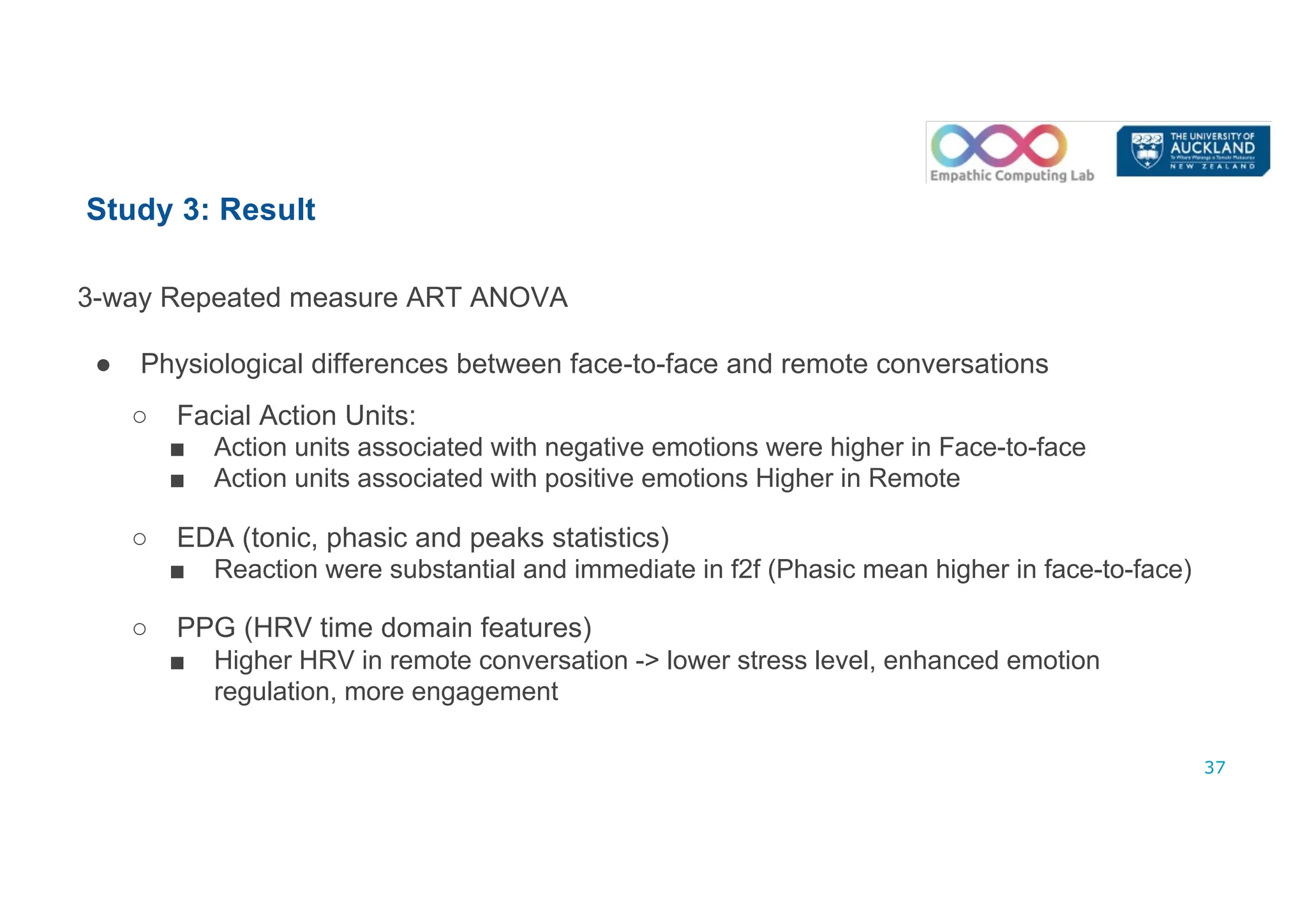

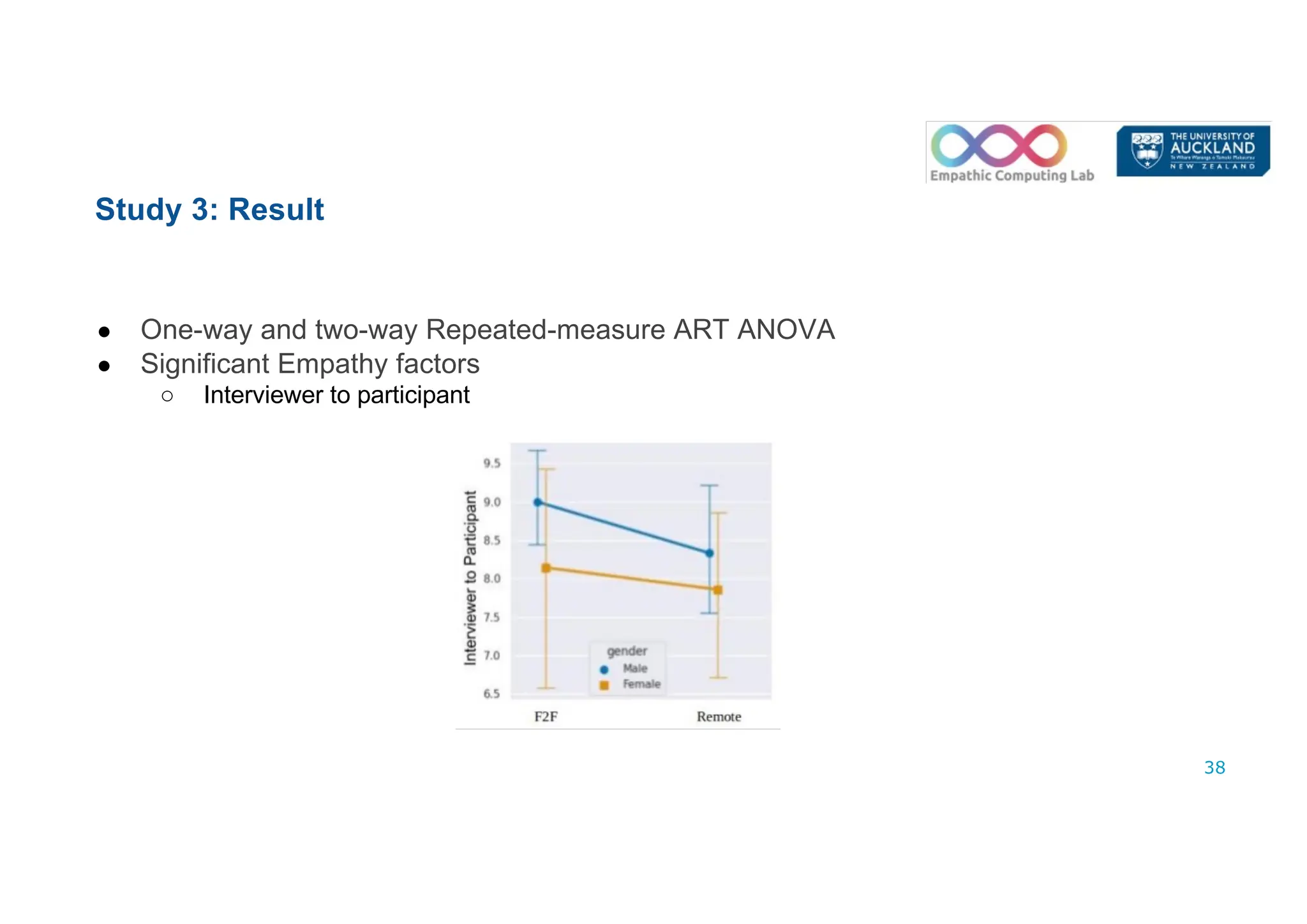

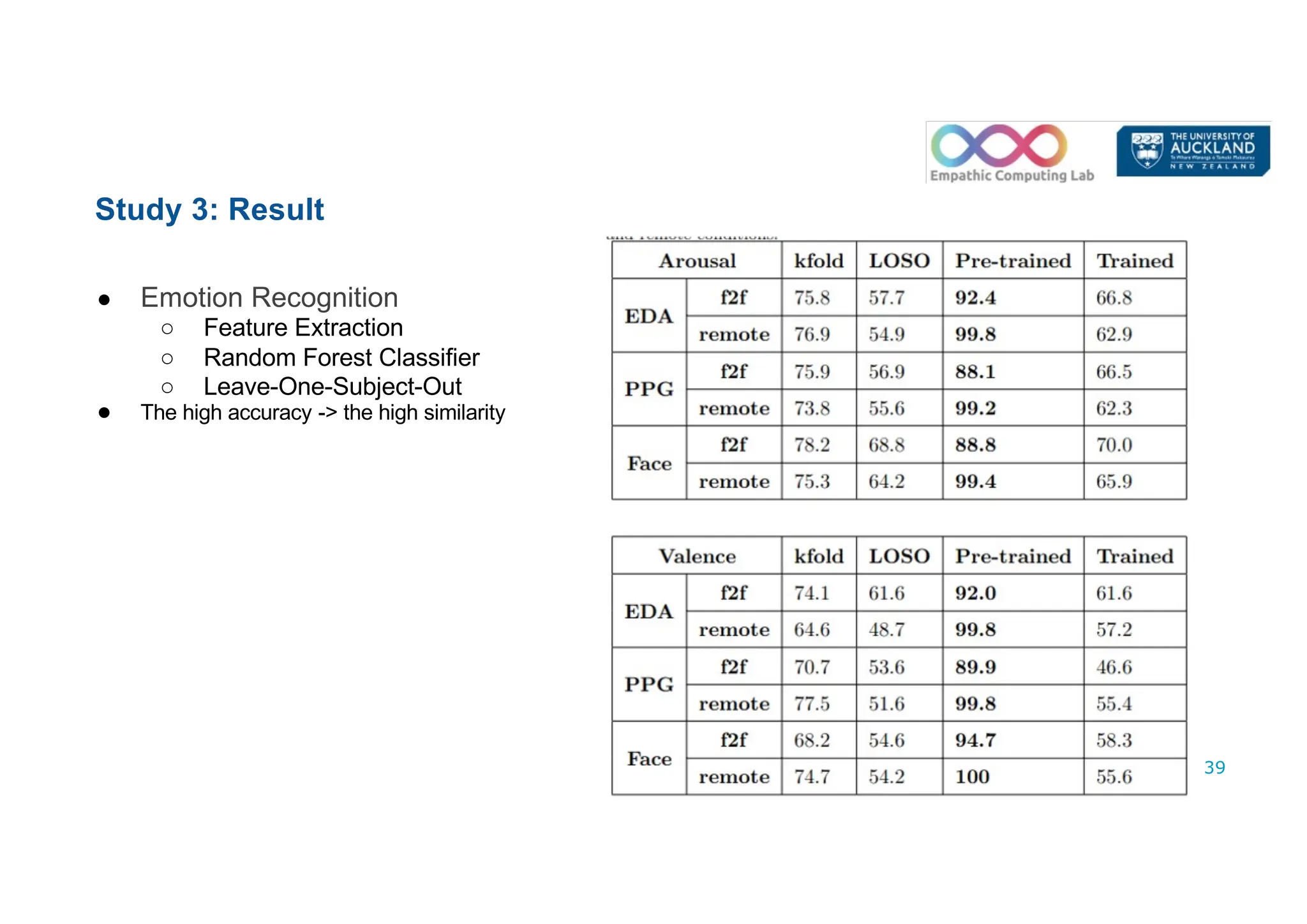

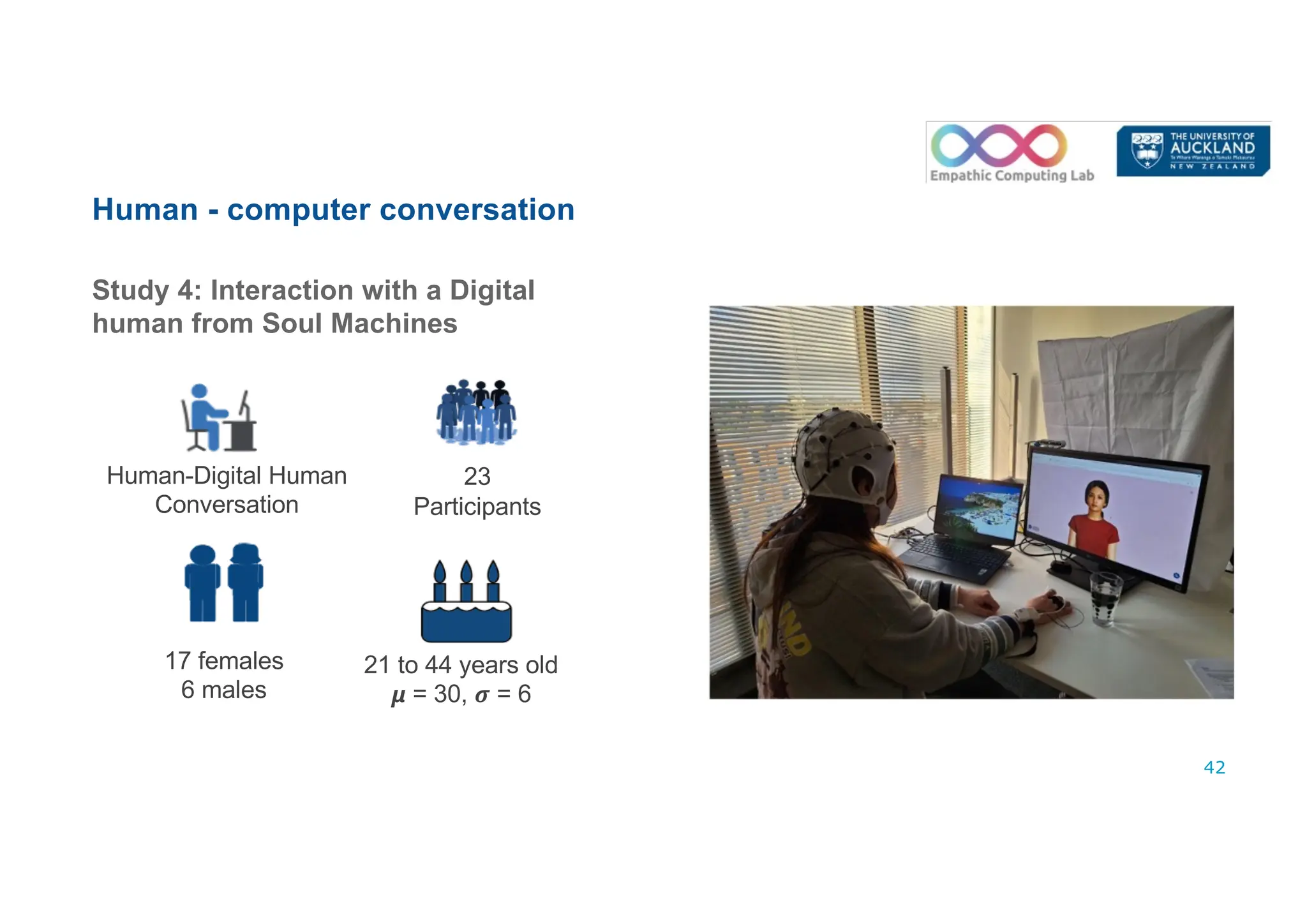

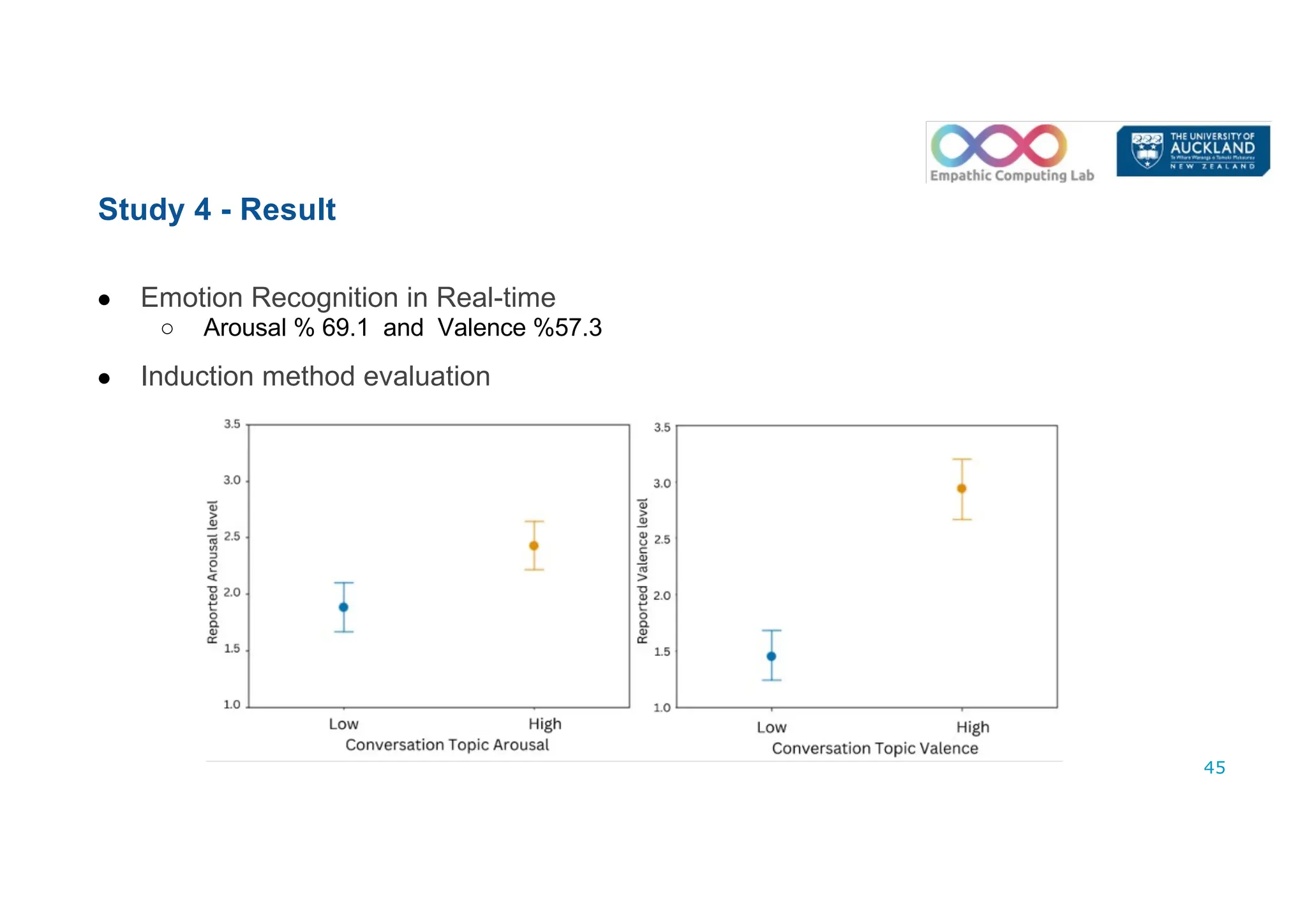

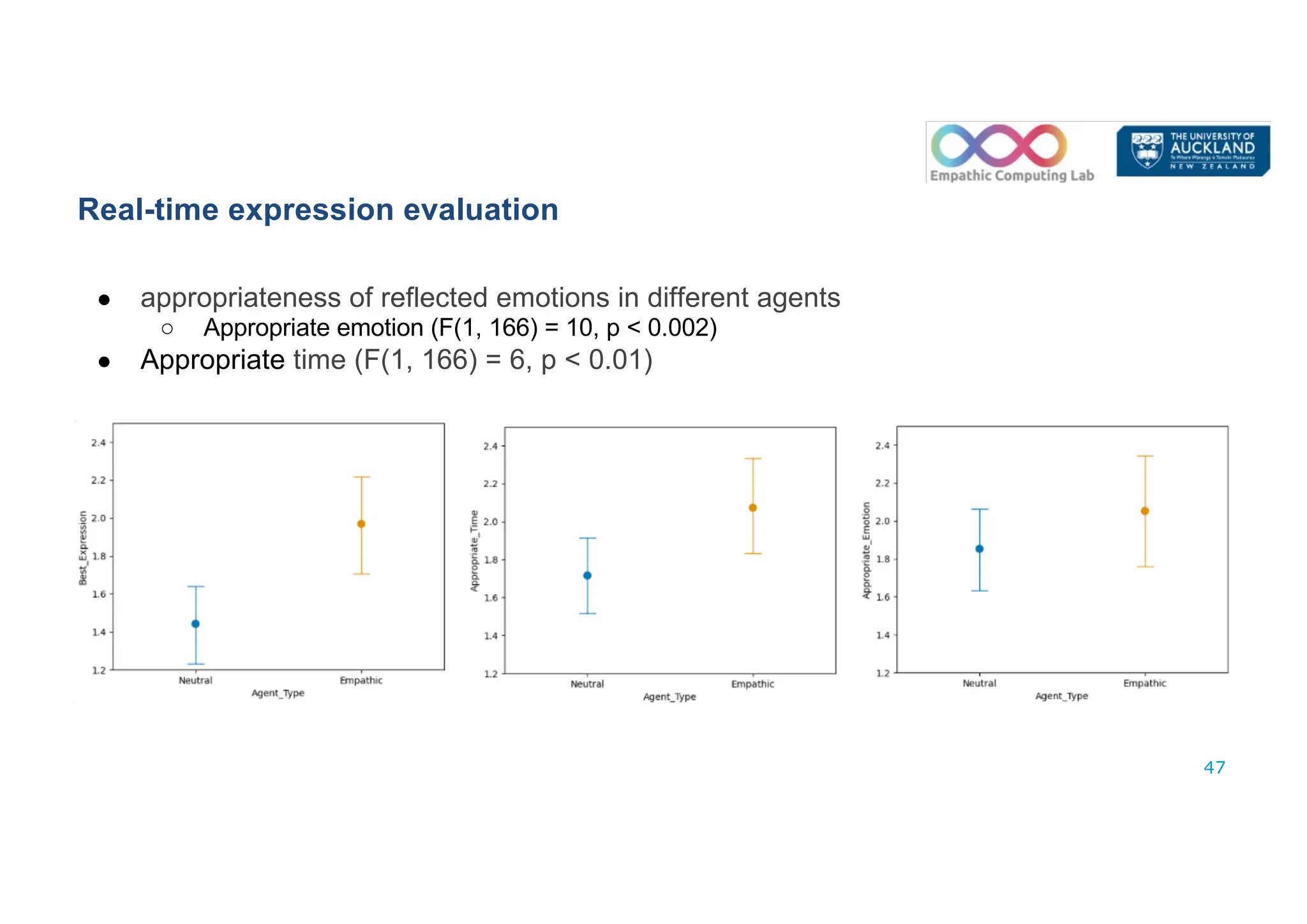

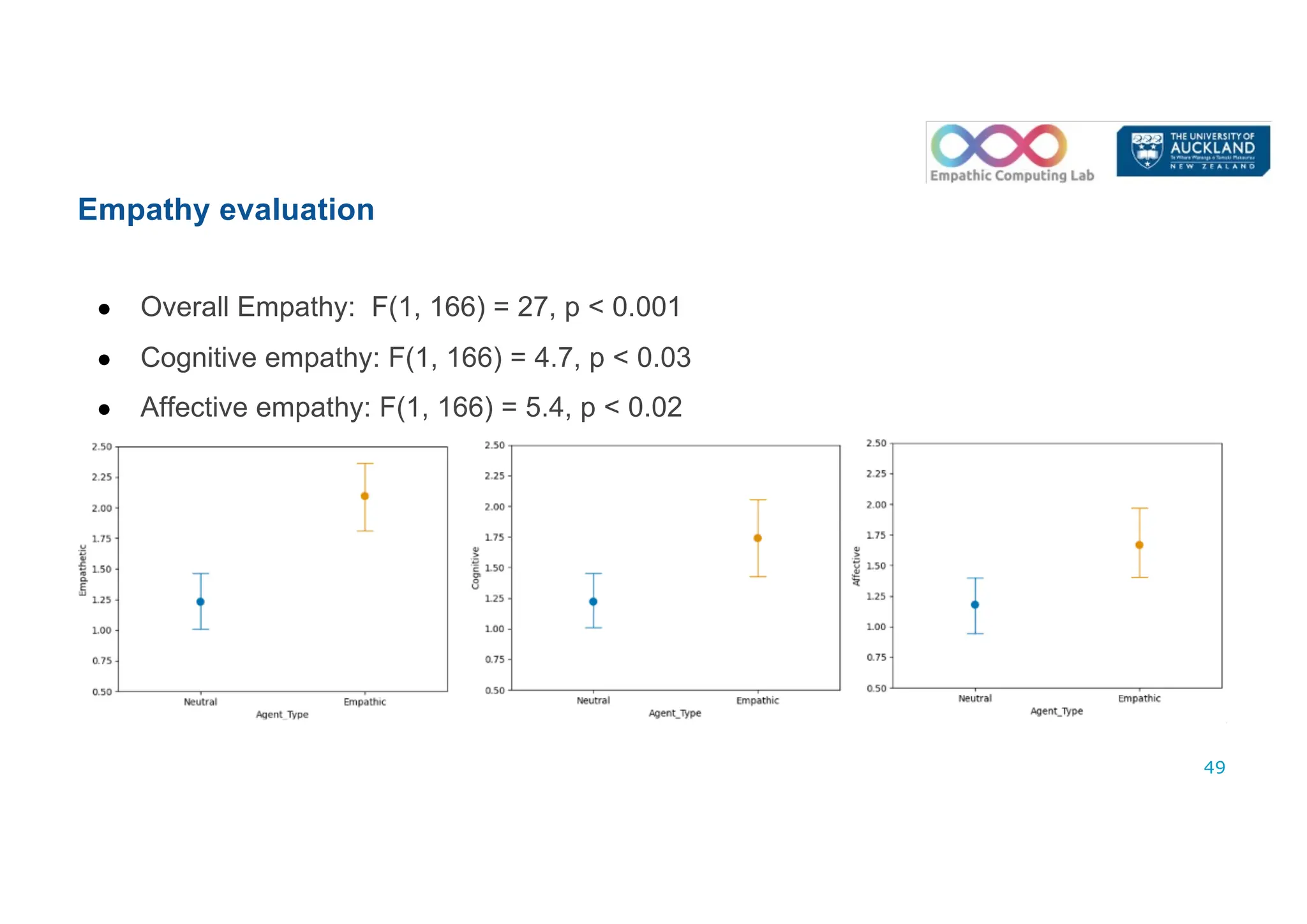

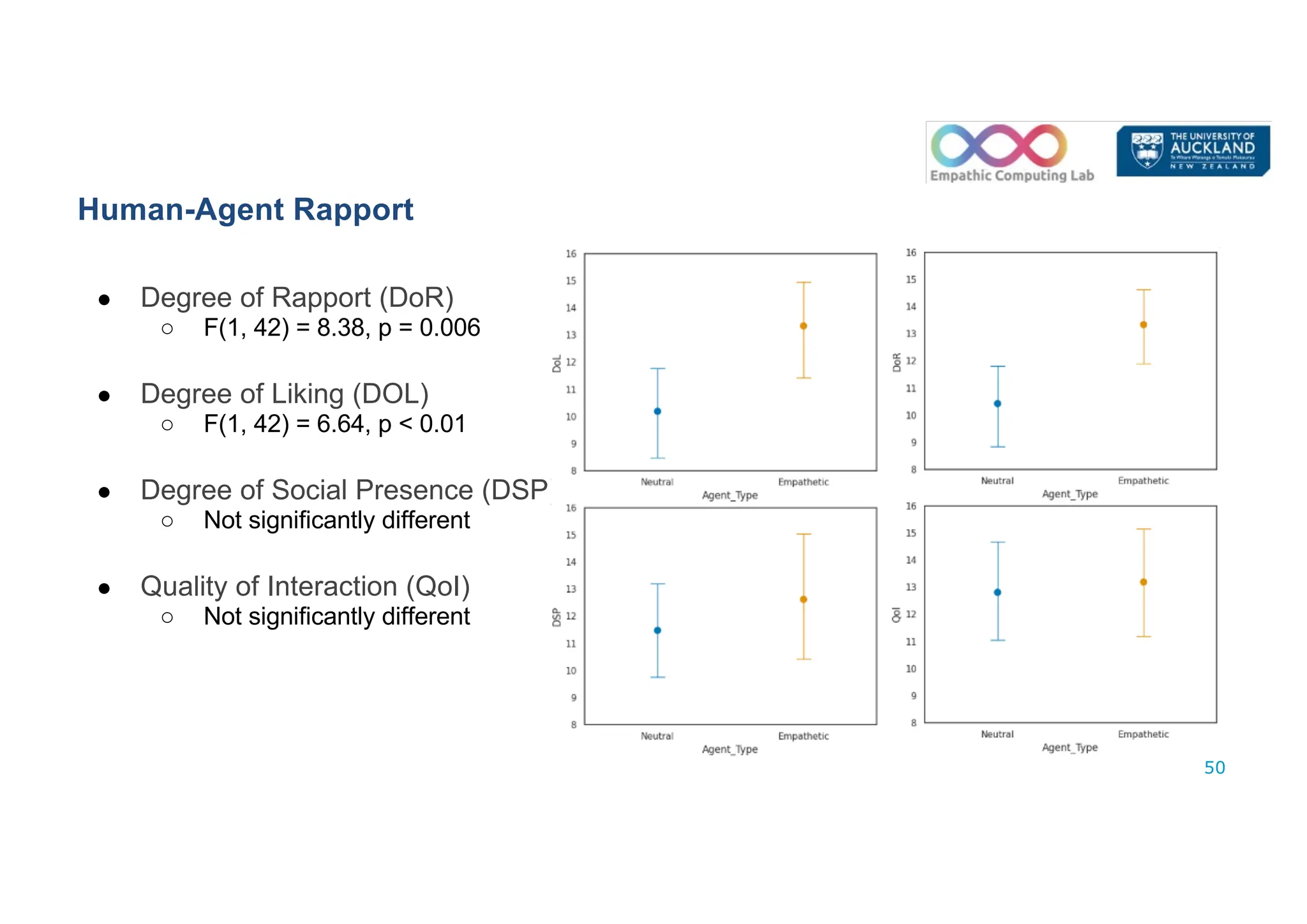

The document discusses multimodal emotion recognition in conversational settings, with an emphasis on integrating behavioral and physiological cues to enhance empathy in human-machine interaction. It addresses research questions about collecting data from multiple sensors and building systems for real-time emotion recognition. The findings suggest that physiological data can improve empathy and emotional understanding in conversations, and the studies show that responses differ between face-to-face and remote interactions.