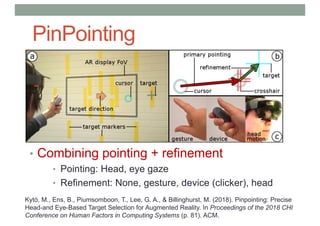

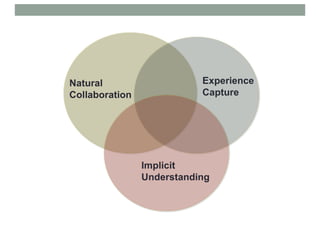

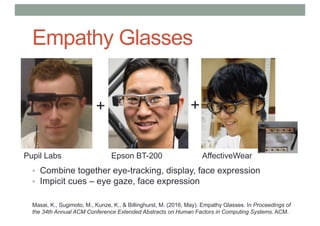

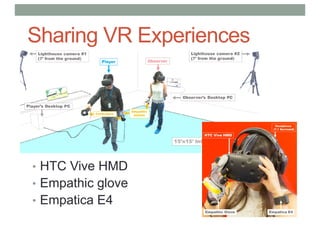

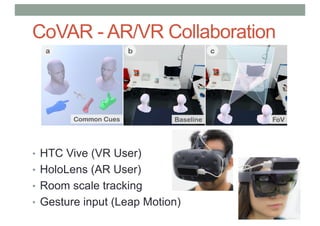

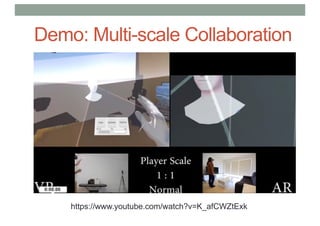

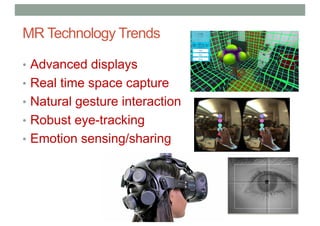

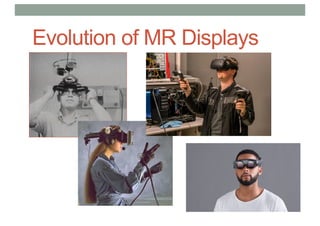

The document discusses the evolution and techniques of multimodal, multisensory interaction in mixed reality (MR), highlighting user-defined gestures, the integration of speech and gaze, and the importance of natural interaction modalities. It emphasizes improvements in hand and eye tracking technologies for user interfaces and explores applications in empathic computing and remote collaboration. The findings suggest that combining gestures, speech, and gaze enhances user experience in MR environments.

![What Gesture Do People Want to Use?

• Limitations of previous work in AR

• Limited range of gestures

• Gestures designed for optimal recognition

• Gestures studied as add-on to speech

• Solution – elicit desired gestures from users

• E.g., gestures for surface computing [Wobbrock]

• Previous work in unistroke gestures, mobile

gestures](https://image.slidesharecdn.com/markbillinghurstmultisensorymrnovideo-180922193851/85/Multimodal-Multi-sensory-Interaction-for-Mixed-Reality-18-320.jpg)