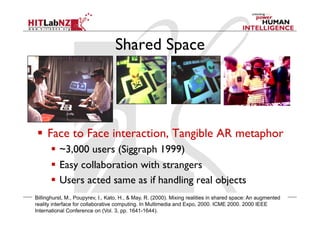

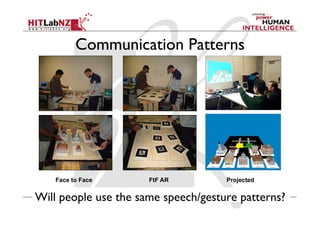

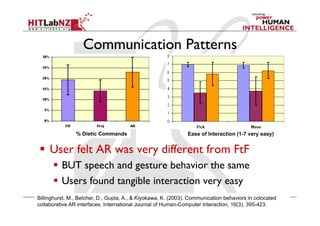

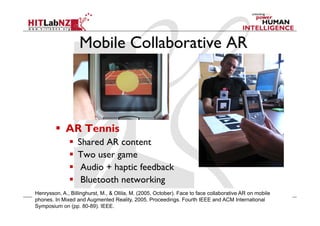

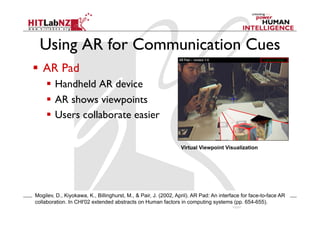

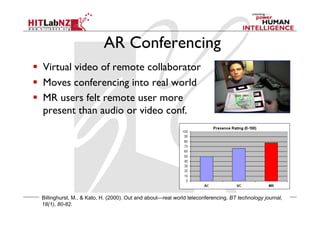

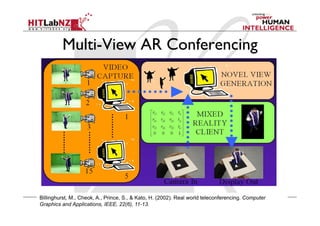

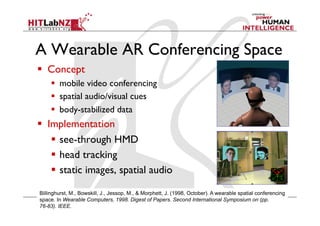

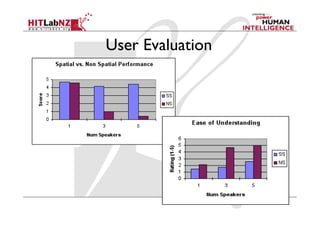

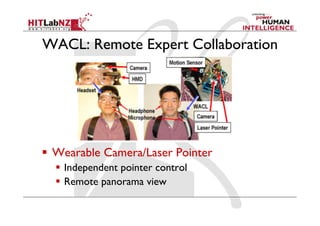

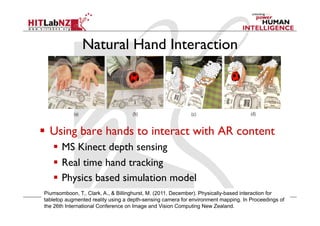

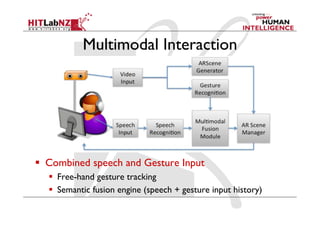

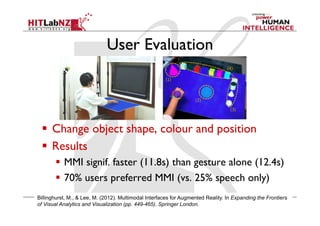

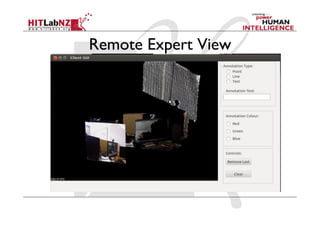

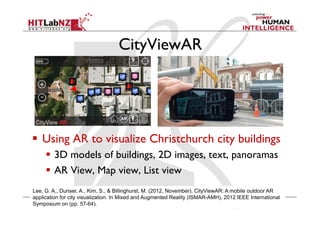

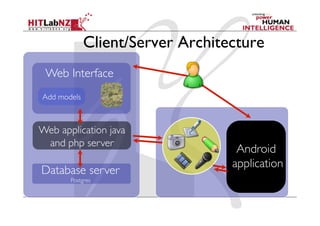

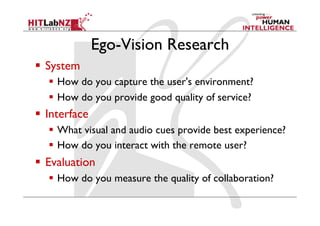

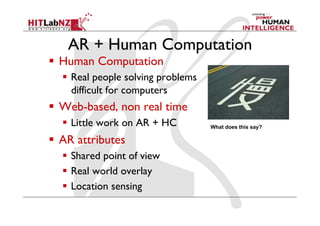

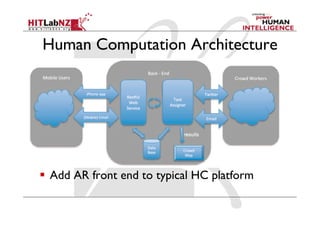

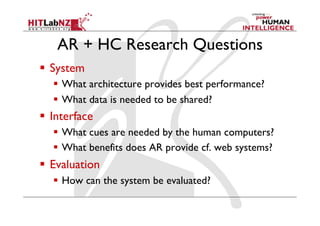

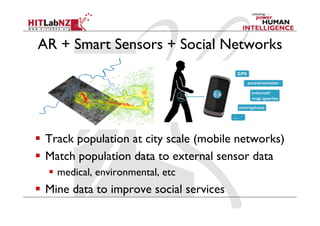

Mark Billinghurst has researched how augmented reality (AR) can enhance collaboration. His work shows that AR can provide spatial cues that give remote collaborators a stronger sense of presence. Although AR introduces seams between real and virtual spaces, studies have found that people use much the same speech and gestures in AR collaboration as they do face-to-face. Current areas of focus include natural hand interaction, capture of the real world on mobile devices, and lightweight asynchronous collaboration using handheld AR. Future opportunities lie in egocentric AR collaboration, combining AR with human computation, and scaling augmentation up to whole cities using sensors and social networks.