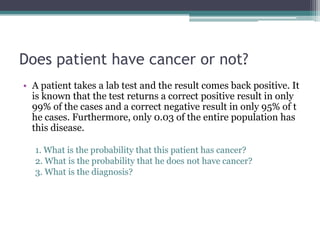

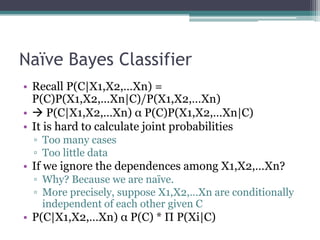

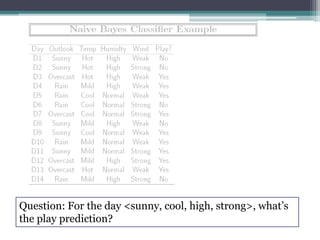

This document discusses naïve Bayes classifiers. It begins by introducing Bayesian probability and Bayes' theorem. It then explains that naïve Bayes classifiers assume the features are conditionally independent given the class. This assumption lets the joint class-conditional probability of the features be replaced with a product of simpler per-feature probabilities, which makes the model much easier to estimate; the classifier often still performs well even when the independence assumption is not strictly true. The document concludes by mentioning some extensions of the basic naïve Bayes approach.
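The factorization described in the summary can be illustrated with a minimal sketch of a categorical naïve Bayes classifier. Nothing here comes from the document itself: the function names `train` and `predict`, the toy weather data, and the use of Laplace (add-`alpha`) smoothing are all assumptions chosen for illustration.

```python
import math
from collections import Counter, defaultdict


def train(samples, labels, alpha=1.0):
    """Count class frequencies and per-feature value frequencies.

    alpha is a Laplace smoothing constant (an assumption of this sketch).
    """
    class_counts = Counter(labels)
    # feature_counts[(class, feature_index)][value] = number of occurrences
    feature_counts = defaultdict(Counter)
    # values_seen[feature_index] = distinct values observed for that feature
    values_seen = defaultdict(set)
    for x, y in zip(samples, labels):
        for i, v in enumerate(x):
            feature_counts[(y, i)][v] += 1
            values_seen[i].add(v)
    return class_counts, feature_counts, values_seen, alpha


def predict(model, x):
    """Return the class with the highest (unnormalized) log posterior."""
    class_counts, feature_counts, values_seen, alpha = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for c, n_c in class_counts.items():
        # log P(c) + sum_i log P(x_i | c): the conditional independence
        # assumption replaces the joint P(x_1, ..., x_n | c) with a
        # product of per-feature terms (a sum in log space).
        score = math.log(n_c / total)
        for i, v in enumerate(x):
            count = feature_counts[(c, i)][v]
            k = len(values_seen[i])
            score += math.log((count + alpha) / (n_c + alpha * k))
        if score > best_score:
            best_label, best_score = c, score
    return best_label


# Hypothetical toy data: (outlook, temperature) -> whether to play outside.
samples = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool")]
labels = ["no", "no", "yes", "yes"]
model = train(samples, labels)

print(predict(model, ("rainy", "cool")))  # yes
print(predict(model, ("sunny", "hot")))   # no
```

Working in log space avoids numerical underflow when many per-feature probabilities are multiplied, and the smoothing term keeps a feature value never seen with a given class from forcing that class's probability to zero.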