Embed presentation

Download as PDF, PPTX

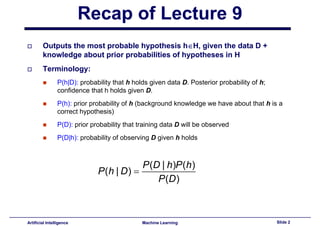

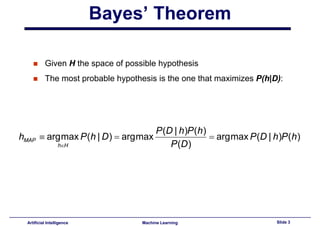

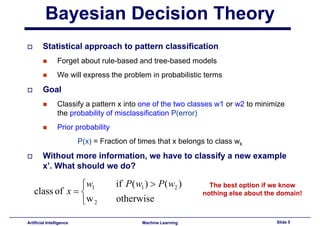

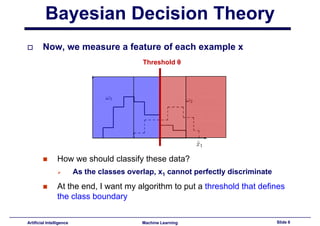

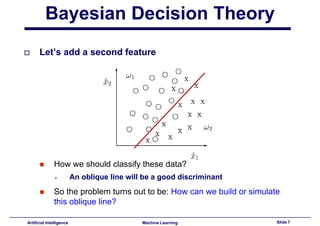

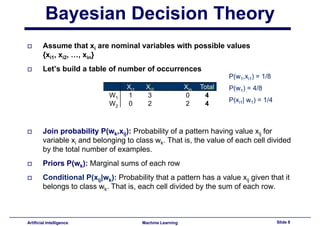

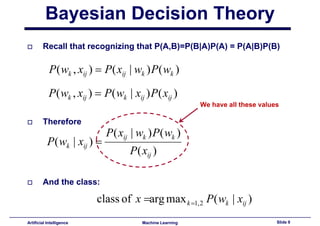

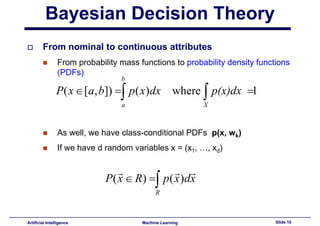

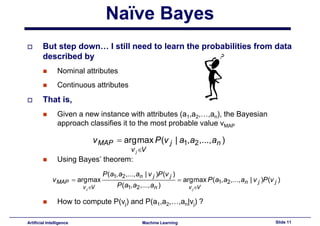

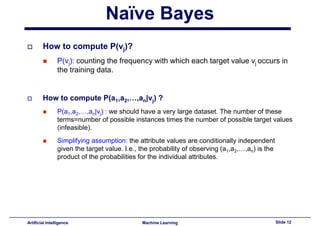

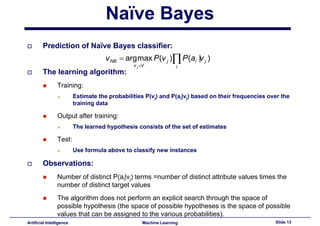

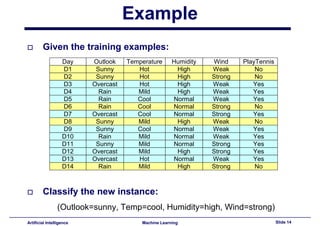

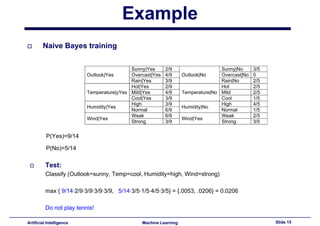

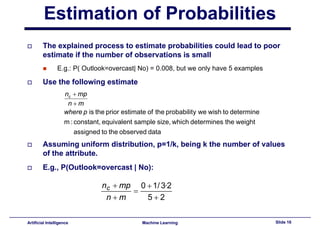

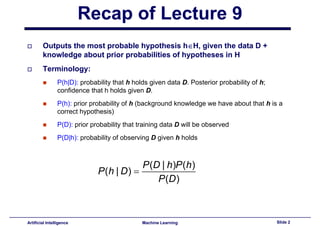

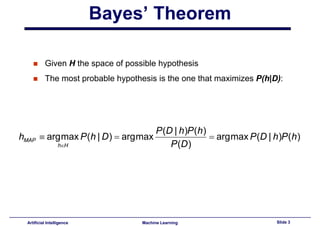

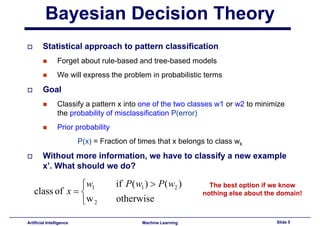

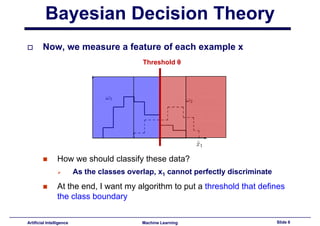

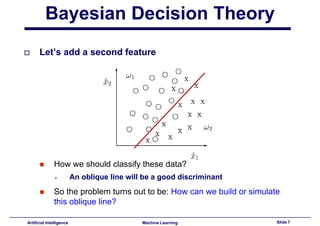

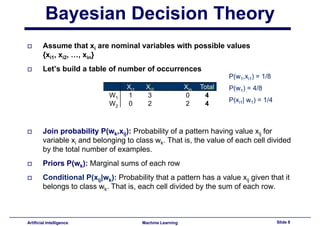

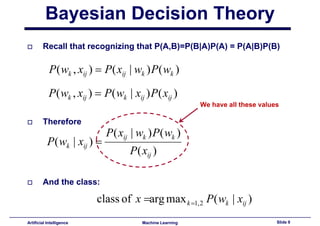

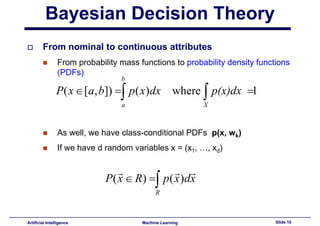

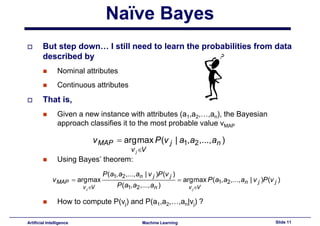

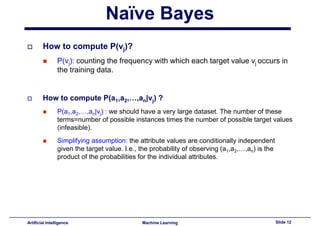

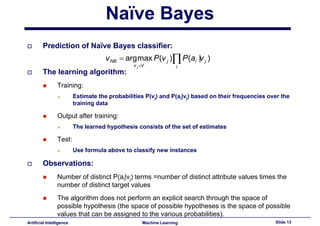

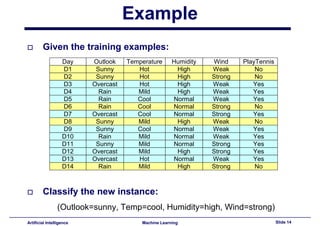

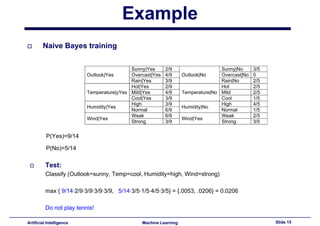

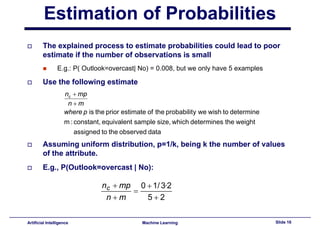

This document provides a summary of Lecture 10 on Bayesian decision theory and Naive Bayes machine learning algorithms. It begins with a recap of Lecture 9 on using probability to classify patterns into categories. It then discusses how to apply these probabilistic concepts to both nominal and continuous variables. A medical example is presented to illustrate Bayesian classification. The document concludes by explaining the Naive Bayes algorithm for classification tasks and providing a worked example of how it is trained and makes predictions.