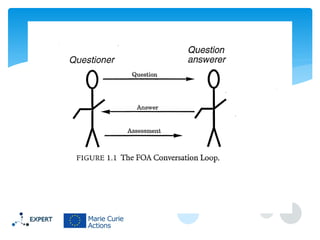

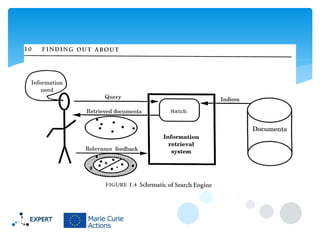

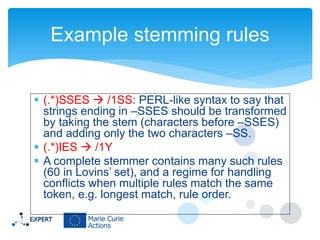

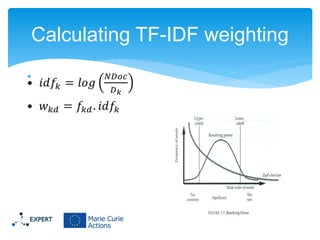

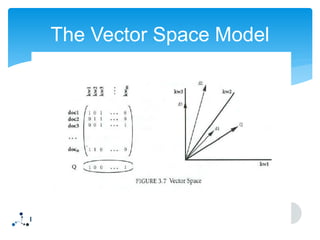

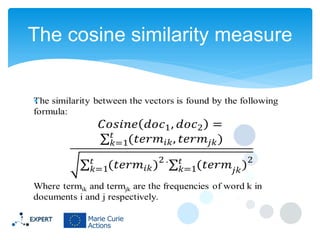

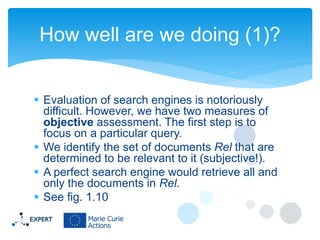

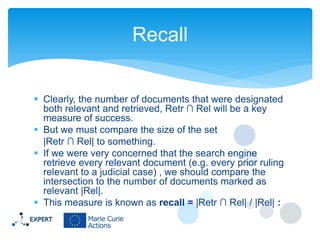

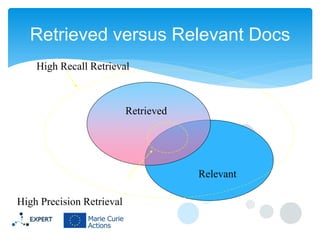

This document discusses information retrieval and describes its three main phases: (1) asking a question to define an information need, (2) constructing an answer by matching queries to documents, and (3) assessing the relevance of the retrieved answers. It also covers several core information retrieval concepts: keywords, document indexing, word stemming, TF-IDF weighting, and evaluating system performance with recall and precision.
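
To make the TF-IDF and recall/precision concepts concrete, here is a minimal Python sketch. The summary does not say which TF-IDF variant the document uses, so the code assumes one common form: raw term count multiplied by `log(N / df)`, where `N` is the number of documents and `df` is the number of documents containing the term. The function names `tf_idf` and `precision_recall` are illustrative and not taken from the document.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Compute TF-IDF weights for a list of tokenized documents.

    Assumed variant: weight = raw term count * log(N / df).
    Returns one {term: weight} dict per document.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)  # raw term counts within this document
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


def precision_recall(retrieved, relevant):
    """Precision = hits / retrieved, recall = hits / relevant."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


if __name__ == "__main__":
    docs = [["cat", "sat"], ["cat", "ran"]]
    print(tf_idf(docs))
    print(precision_recall([1, 2, 3, 4], [2, 4, 6]))
```

Under this weighting, a term that appears in every document (here `cat`) gets weight zero, because it does not help distinguish one document from another. Precision measures how much of what was retrieved is relevant, and recall measures how much of the relevant material was retrieved.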