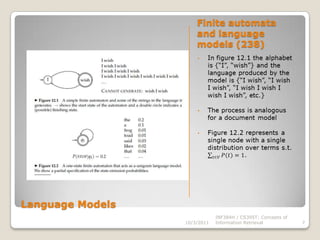

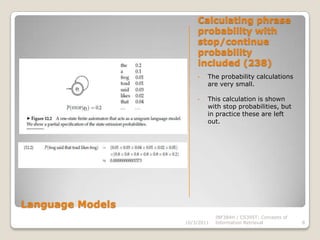

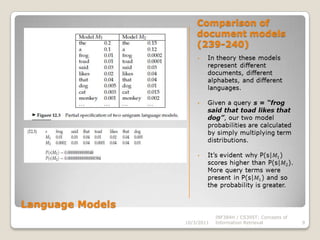

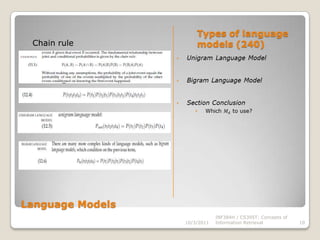

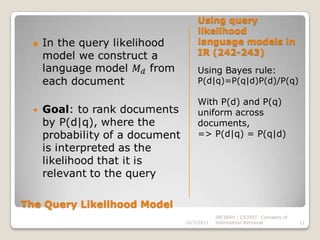

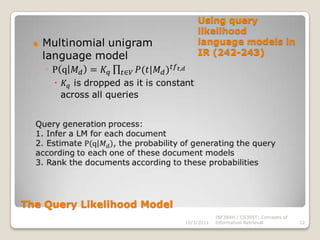

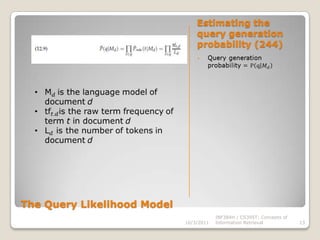

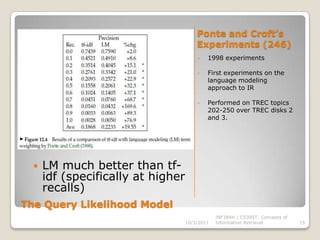

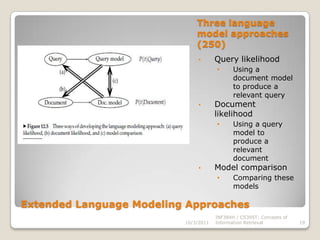

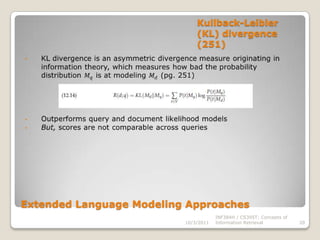

The document gives background on Christopher Manning, Prabhakar Raghavan, and Hinrich Schütze, the authors of the book "Introduction to Information Retrieval", which includes the chapter "Language models for information retrieval". It then outlines the presentation, which covers language models for information retrieval: query likelihood models, the estimation of query generation probabilities, and experiments comparing language modeling approaches with other IR techniques.
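To make the query likelihood idea concrete, here is a minimal Python sketch. It assumes a unigram model per document, smoothed by linear interpolation with a collection-wide model. The function names (`query_likelihood`, `rank`), the mixing weight `lam`, and the two toy documents are illustrative assumptions and are not taken from the slides.

```python
from collections import Counter


def query_likelihood(query, doc, collection, lam=0.5):
    """Estimate P(query | doc) with a smoothed unigram model.

    Each query term contributes lam * P(t | doc) + (1 - lam) * P(t | collection),
    so a term missing from the document does not zero out the whole score.
    """
    doc_counts = Counter(doc)
    coll_counts = Counter(collection)
    score = 1.0
    for term in query:
        p_doc = doc_counts[term] / len(doc) if doc else 0.0
        p_coll = coll_counts[term] / len(collection) if collection else 0.0
        score *= lam * p_doc + (1 - lam) * p_coll
    return score


def rank(query, docs, lam=0.5):
    """Rank documents (name -> token list) by descending query likelihood."""
    collection = [tok for tokens in docs.values() for tok in tokens]
    scores = {
        name: query_likelihood(query, tokens, collection, lam)
        for name, tokens in docs.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy data, purely illustrative.
docs = {
    "d1": "xerox reports a profit but revenue is down".split(),
    "d2": "lucent narrows quarter loss but revenue decreases further".split(),
}
ranking = rank("revenue down".split(), docs, lam=0.5)
```

With this toy collection, `d1` ranks above `d2` because it contains both query terms, while `d2` gets credit for "down" only through the collection model. Real systems typically sum log probabilities instead of multiplying raw ones to avoid underflow on long queries.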