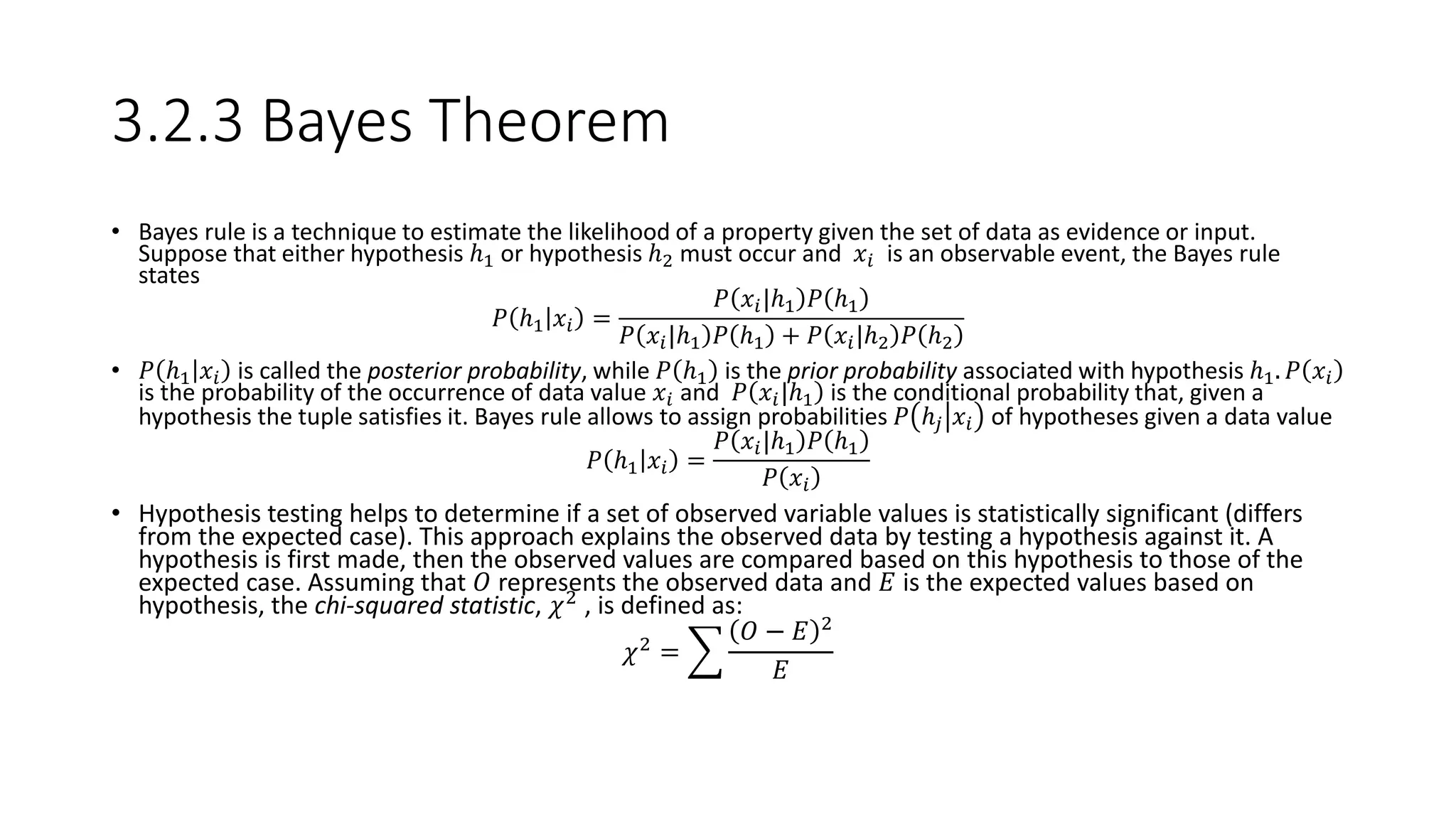

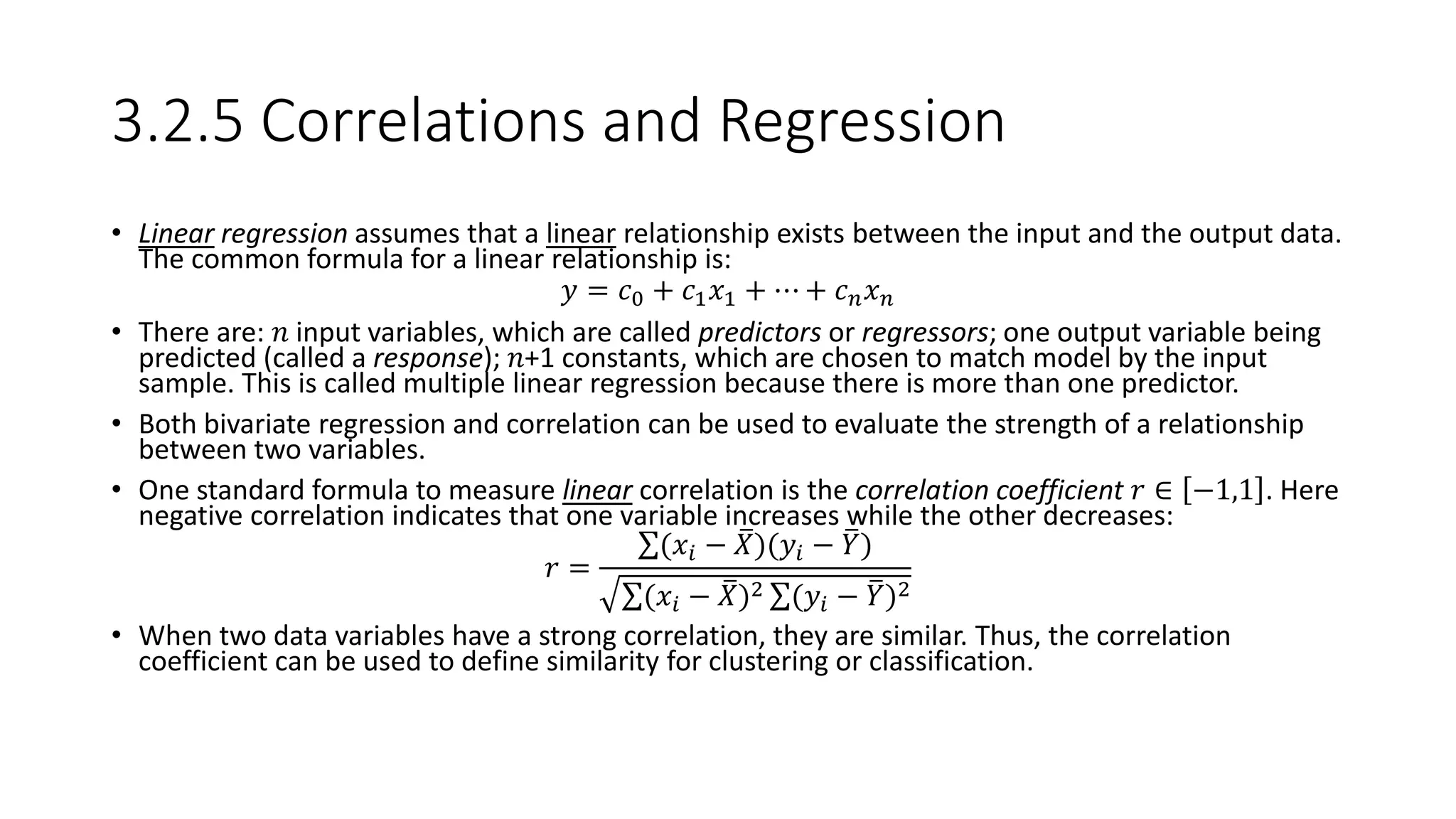

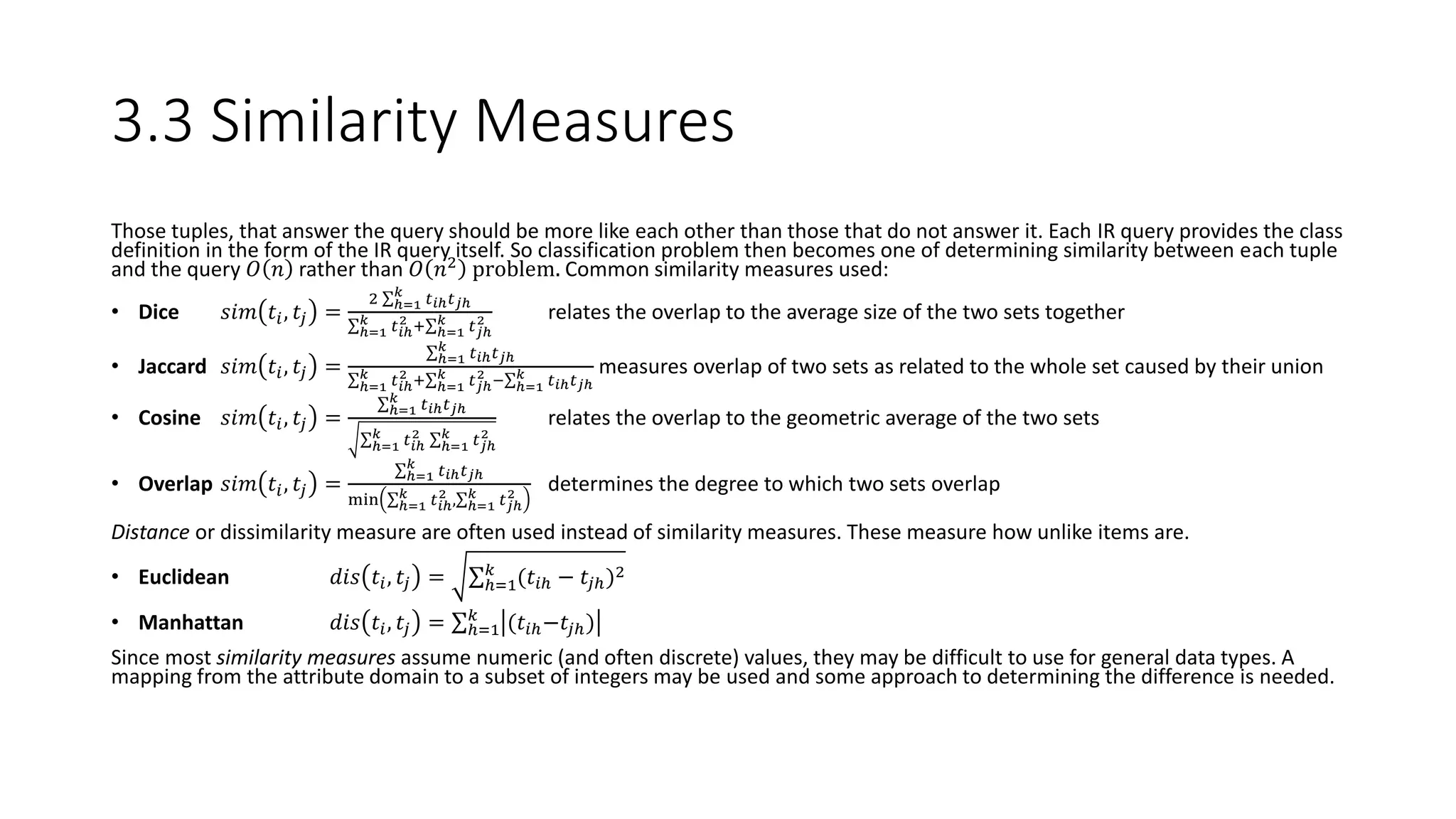

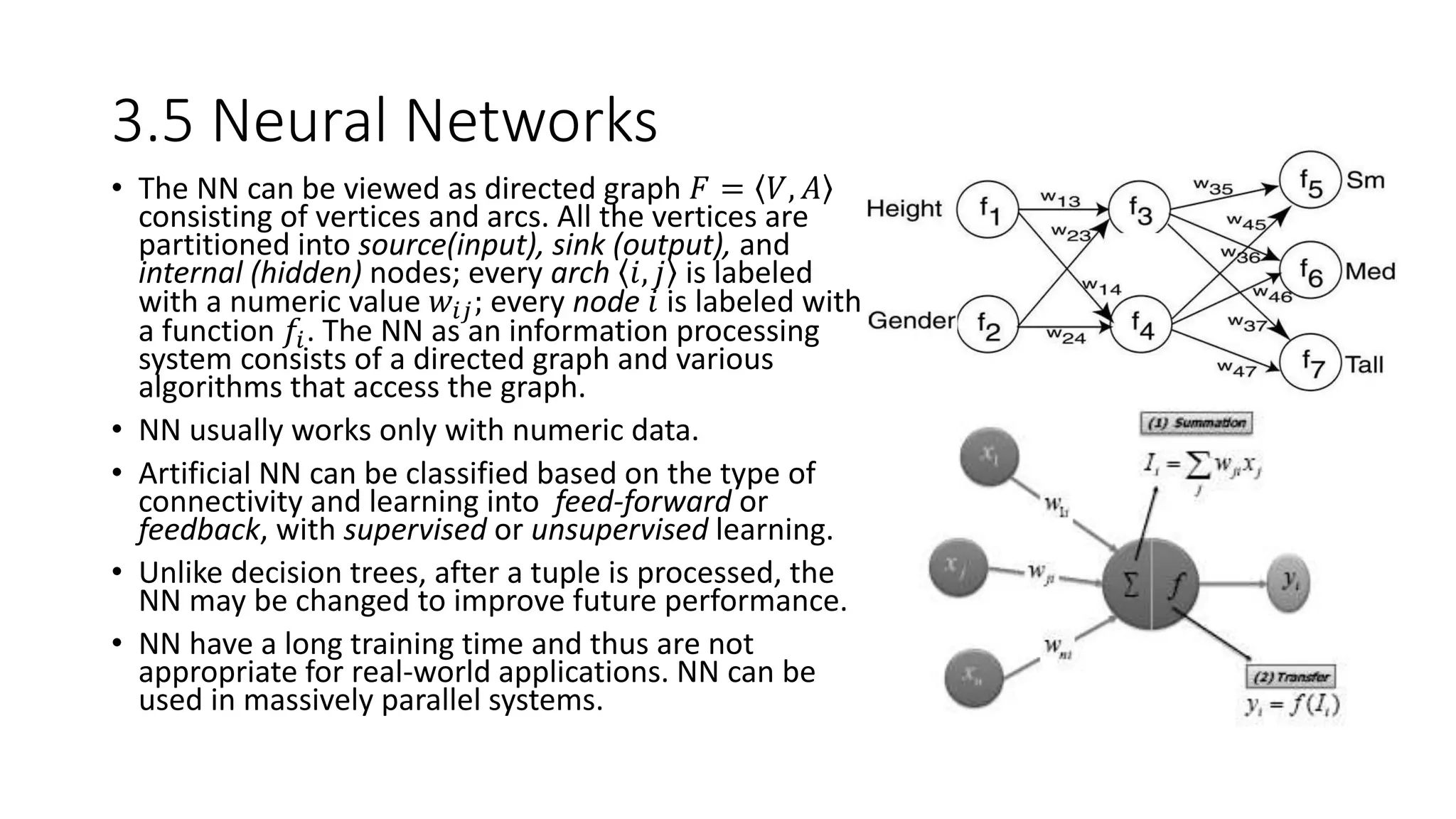

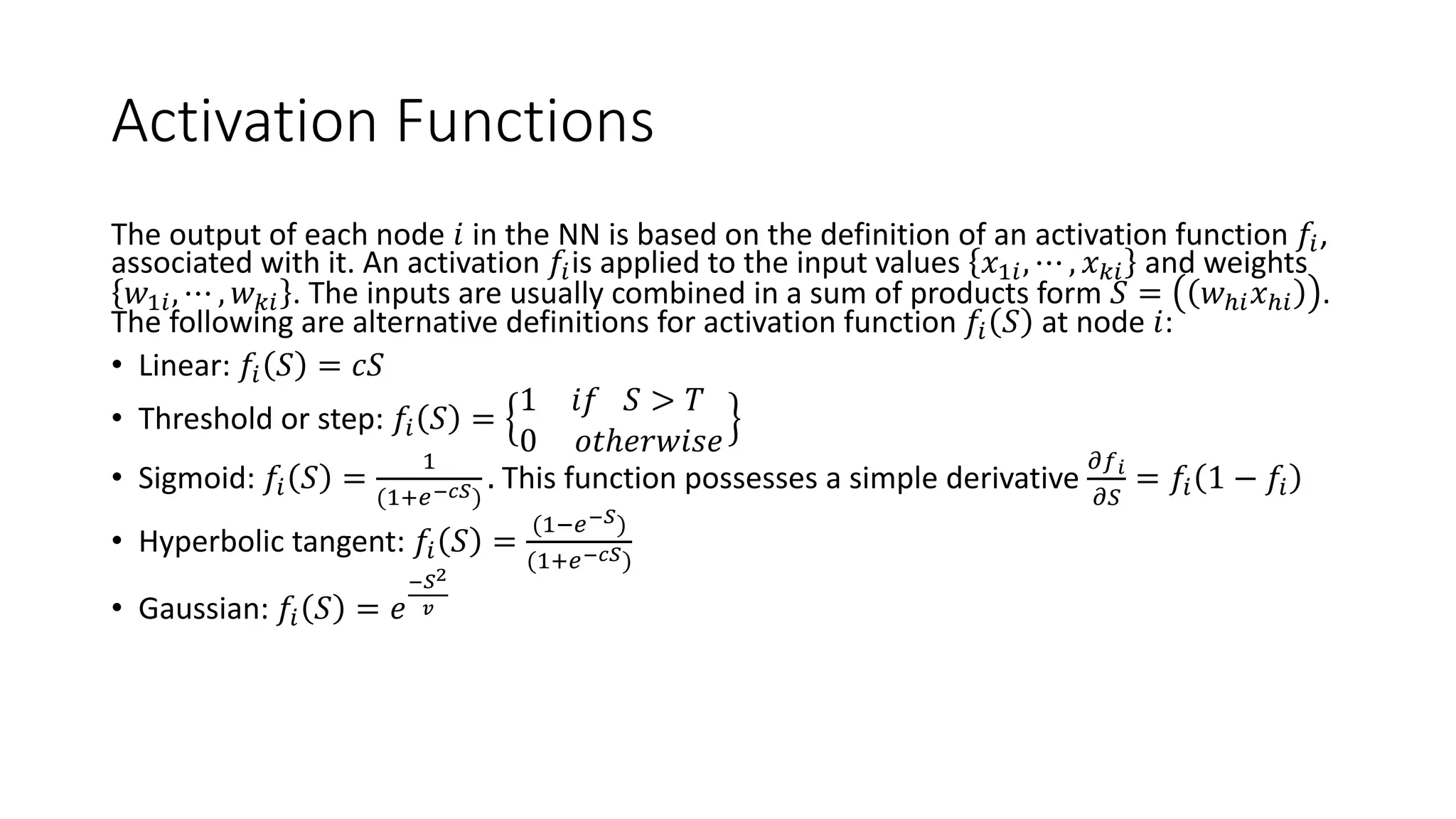

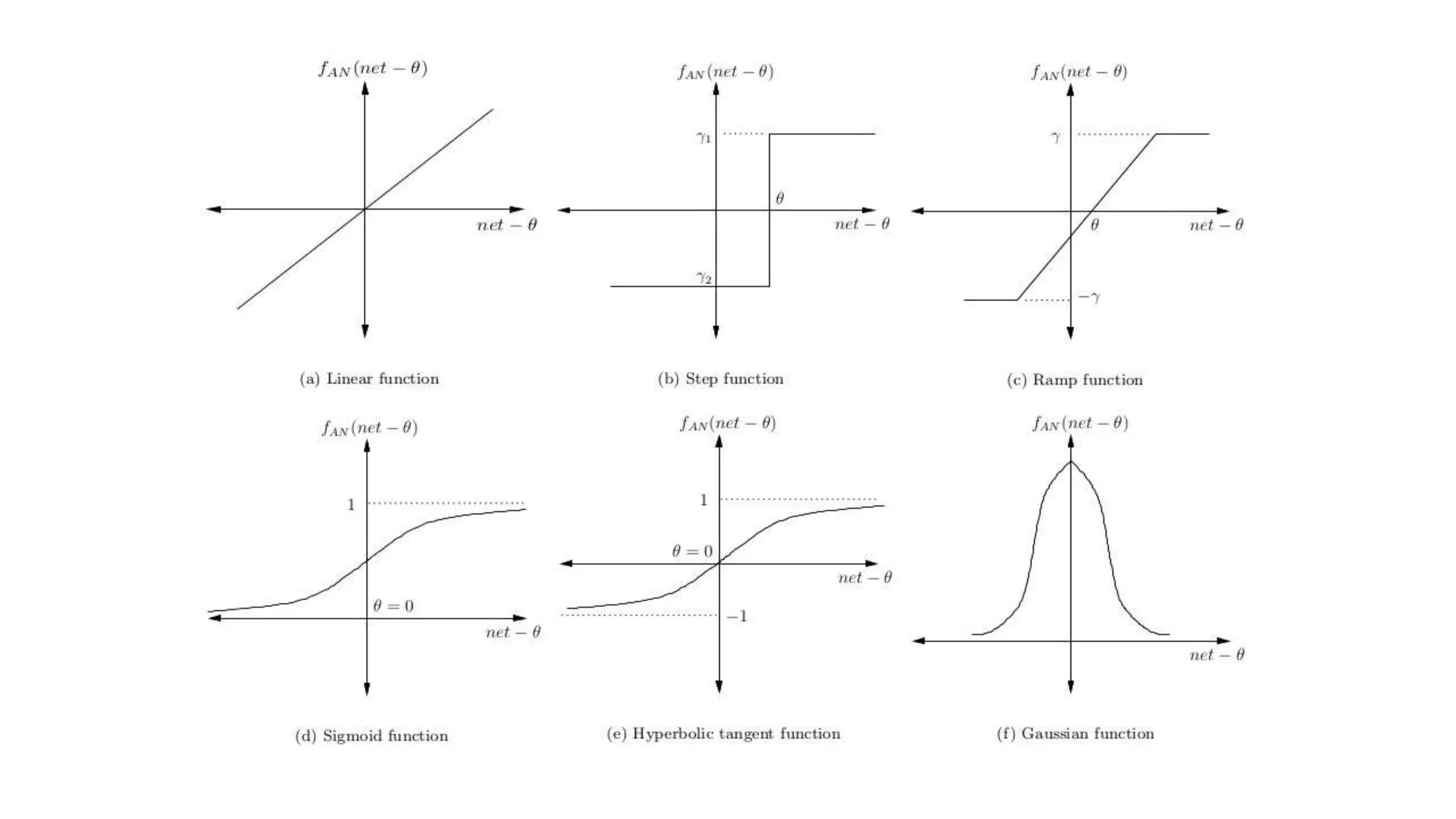

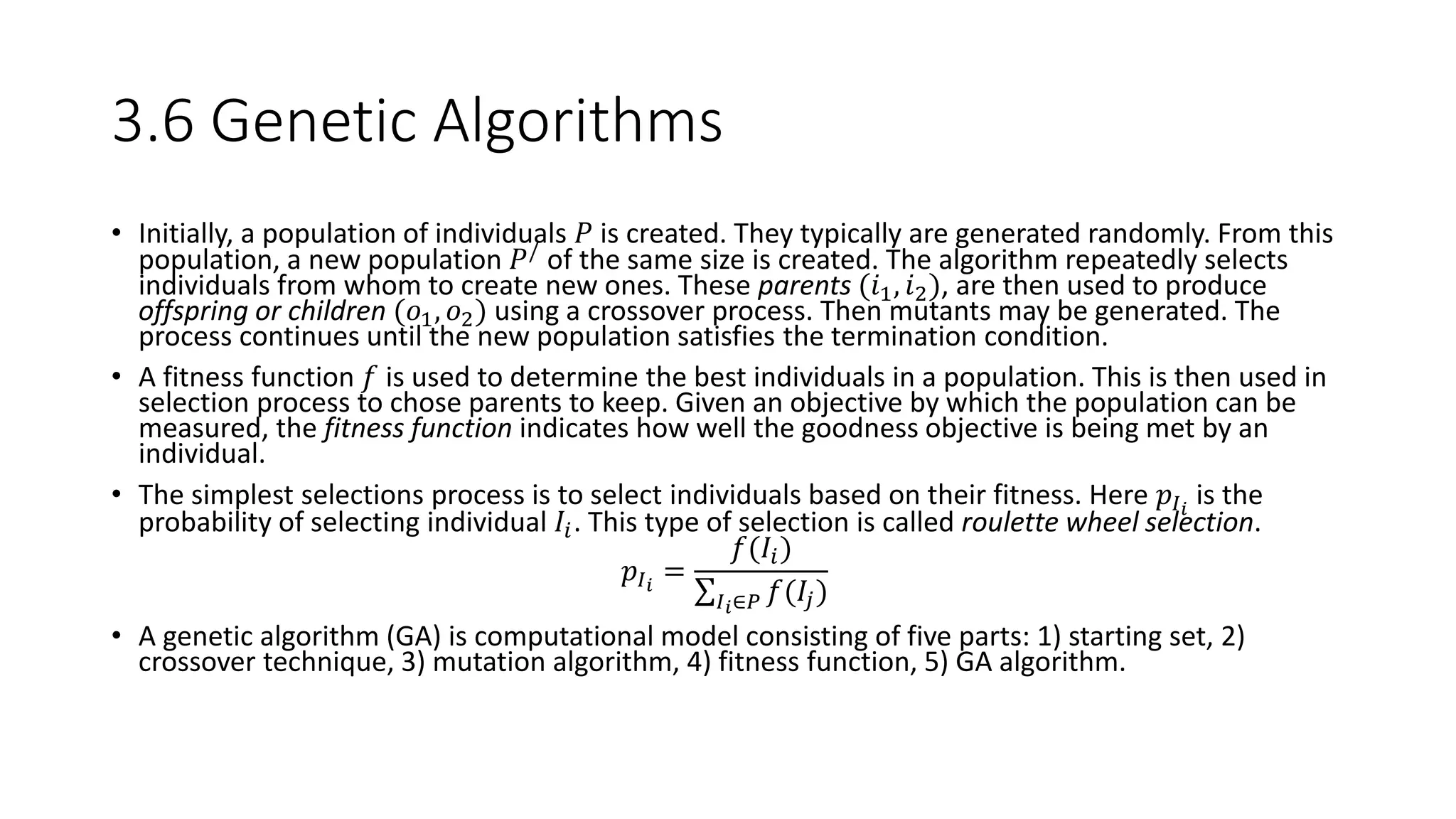

This document gives an overview of the data mining techniques discussed in Chapter 3: parametric and nonparametric models, statistical perspectives on point estimation and error measurement, Bayes' theorem, decision trees, neural networks, genetic algorithms, and similarity measures. Nonparametric techniques such as neural networks, decision trees, and genetic algorithms are particularly well suited to data mining applications that involve large, dynamically changing datasets.