Chapter 7 focuses on tensor visualization, primarily applied to diffusion tensor MRI (DT-MRI) data for exploring brain anatomy, with principal component analysis as the basic tool for extracting eigenvalues and eigenvectors from the tensors. Techniques discussed include visualizing tensor components, anisotropy metrics, tensor glyphs, fiber tracking, and hyperstreamlines for illustrating complex tensor data. The chapter emphasizes methods that simplify the data and place it in context, to improve understanding of neural pathways and structures.

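![certain confidence level. The hue of the vector coloring can indicate their direction by using the following colormap:

$$R = |\mathbf{e}_1 \cdot \mathbf{x}|, \qquad G = |\mathbf{e}_1 \cdot \mathbf{y}|, \qquad B = |\mathbf{e}_1 \cdot \mathbf{z}|,$$

where x, y, and z are the unit vectors of the coordinate axes.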

The luminance can indicate the measurement confidence level. A relatively popular technique in this class is to simply color-map the major eigenvector direction.
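Below is a minimal sketch of this colormap in Python with NumPy. It assumes that the tensor at a sample point is given as a symmetric 3 × 3 matrix. It also assumes that the confidence value (for example, an anisotropy metric) is already normalized to [0, 1]. The function name `major_eigenvector_color` is hypothetical, and the luminance is modulated here by simply scaling the RGB triple.

```python
import numpy as np

def major_eigenvector_color(tensor, confidence=1.0):
    """Map the major eigenvector e1 of a symmetric 3x3 tensor to RGB.

    R, G, B are |e1 . x|, |e1 . y|, |e1 . z|, so e1 and -e1 get the same
    color. `confidence` (assumed in [0, 1]) scales the luminance by
    multiplying all three channels.
    """
    t = np.asarray(tensor, dtype=float)
    # eigh returns eigenvalues in ascending order for symmetric matrices
    _, eigenvectors = np.linalg.eigh(t)
    e1 = eigenvectors[:, -1]           # eigenvector of the largest eigenvalue
    rgb = np.abs(e1)                   # absolute components along x, y, z
    return np.clip(confidence, 0.0, 1.0) * rgb

# Example: a tensor whose major eigenvector lies along (1, 1, 0) / sqrt(2)
u = np.array([1.0, 1.0, 0.0]) / np.sqrt(2.0)
color = major_eigenvector_color(3.0 * np.outer(u, u) + np.eye(3))
```

Because only absolute components are used, the colormap cannot distinguish directions that differ by a reflection across a coordinate plane. This is a known limitation of this encoding.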

Visualizing a single eigenvector or eigenvalue at a time may not be enough. In many cases the ratios of eigenvalues, rather than their absolute values, are of interest.
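One common family of such eigenvalue-ratio metrics is Westin's linear, planar, and spherical metrics cl, cp, and cs, which the text uses below. The sketch assumes normalization by the eigenvalue sum (the trace) and non-negative eigenvalues. Other normalizations, such as dividing by λ1, are also used, so treat the exact formulas as an assumption.

```python
import numpy as np

def westin_metrics(tensor):
    """Return (c_l, c_p, c_s) for a symmetric 3x3 tensor.

    Eigenvalues are sorted so that l1 >= l2 >= l3 and are assumed to be
    non-negative (as for a valid diffusion tensor). With normalization by
    the trace, the three metrics sum to 1.
    """
    eigenvalues = np.linalg.eigvalsh(np.asarray(tensor, dtype=float))
    l3, l2, l1 = eigenvalues            # eigvalsh returns ascending order
    trace = l1 + l2 + l3
    if trace <= 0.0:
        raise ValueError("tensor must have a positive trace")
    c_l = (l1 - l2) / trace             # linear: one dominant direction
    c_p = 2.0 * (l2 - l3) / trace       # planar: two dominant directions
    c_s = 3.0 * l3 / trace              # spherical: no preferred direction
    return c_l, c_p, c_s
```

For example, a tensor with eigenvalues (1, 1, 0) is purely planar, giving (cl, cp, cs) = (0, 1, 0).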

7.5 Tensor Glyphs

We sample the dataset domain with a number of representative sample points. For each sample point, we construct a tensor glyph that encodes the eigenvalues and eigenvectors of the tensor at that point. For a 2 × 2 tensor dataset, we construct a 2D ellipse whose half axes are oriented along the two eigenvectors and scaled by the absolute values of the eigenvalues. For a 3 × 3 tensor, we construct a 3D ellipsoid in a similar manner.
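For the 2D case, the glyph construction can be sketched as follows. The function name and the `scale` factor are illustrative assumptions. The 3D ellipsoid follows the same pattern, with a third half axis and a spherical parameterization.

```python
import numpy as np

def ellipse_glyph(tensor, center, n_points=64, scale=1.0):
    """Return an (n_points, 2) array outlining an ellipse tensor glyph.

    The half axes point along the eigenvectors of the symmetric 2x2 tensor
    and have lengths scale * |eigenvalue|.
    """
    eigenvalues, eigenvectors = np.linalg.eigh(np.asarray(tensor, dtype=float))
    half_axes = scale * np.abs(eigenvalues)       # one length per eigenvector
    t = np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False)
    # Coordinates in the eigenvector frame: (a0 cos t, a1 sin t)
    local = np.stack([half_axes[0] * np.cos(t), half_axes[1] * np.sin(t)], axis=1)
    # Rotate into world space (rows of eigenvectors.T are e0, e1), then translate
    return np.asarray(center, dtype=float) + local @ eigenvectors.T

outline = ellipse_glyph(np.diag([2.0, 1.0]), center=(1.0, 2.0))
```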

Besides ellipsoids, other glyph shapes can be used, such as parallelepipeds (cuboids) or cylinders. Smooth glyph shapes such as ellipsoids give a less distracting picture than shapes with sharp edges, such as cuboids and cylinders.

Superquadric shapes are parameterized as functions of the linear and planar certainty metrics cl and cp, respectively.
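The sketch below follows the superquadric tensor glyphs of Kindlmann as we recall them. When cl ≥ cp, the glyph is symmetric around the major eigenvector; otherwise it is symmetric around the minor eigenvector. The exponents α and β are derived from cl and cp through a sharpness parameter γ. The exact exponent formulas and the default γ = 3 are assumptions that should be verified against the original paper. The returned point still has to be scaled by the eigenvalues and rotated by the eigenvectors.

```python
import numpy as np

def signed_pow(x, p):
    """sign(x) * |x|**p, the 'signed power' used in superquadric formulas."""
    return np.sign(x) * np.abs(x) ** p

def superquadric_point(theta, phi, c_l, c_p, gamma=3.0):
    """Point on a unit superquadric whose shape depends on c_l and c_p.

    theta in [0, 2*pi), phi in [0, pi]. The point is expressed in the
    eigenvector frame (x = e1, y = e2, z = e3).
    """
    if c_l >= c_p:
        # Linear case: symmetric around the x axis (major eigenvector)
        alpha = (1.0 - c_p) ** gamma
        beta = (1.0 - c_l) ** gamma
        return np.array([
            signed_pow(np.cos(phi), beta),
            -signed_pow(np.sin(theta), alpha) * signed_pow(np.sin(phi), beta),
            signed_pow(np.cos(theta), alpha) * signed_pow(np.sin(phi), beta),
        ])
    # Planar case: symmetric around the z axis (minor eigenvector)
    alpha = (1.0 - c_l) ** gamma
    beta = (1.0 - c_p) ** gamma
    return np.array([
        signed_pow(np.cos(theta), alpha) * signed_pow(np.sin(phi), beta),
        signed_pow(np.sin(theta), alpha) * signed_pow(np.sin(phi), beta),
        signed_pow(np.cos(phi), beta),
    ])
```

For an isotropic tensor (cl = cp = 0), both exponents equal 1 and the glyph is a sphere. For cl = 1, it becomes a cylinder along e1 with sharp caps.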

Another tensor glyph is an axes system, formed by three vector glyphs that separately encode the three eigenvectors scaled by their corresponding eigenvalues. This glyph is easy to interpret for 2D datasets. In 3D, however, the axes of neighboring glyphs overlap spatially and create too much visual clutter.

Eigenvalues can have a large range, so directly scaling the tensor ellipsoids by their values can easily lead to overlapping and/or very thin or very flat glyphs. We can solve this problem as we did for vector glyphs, by imposing a minimal and maximal glyph size, either by clamping or by using a nonlinear value-to-size mapping function.
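Here is a minimal sketch of both options. It assumes that the largest absolute eigenvalue in the whole dataset (`global_max`, a hypothetical parameter) is known in advance, so glyph sizes remain comparable across sample points. The logarithmic curve is one possible nonlinear mapping, not the only one.

```python
import numpy as np

def glyph_half_axes(eigenvalues, global_max, min_size=0.1, max_size=1.0, mode="clamp"):
    """Map eigenvalues to glyph half-axis lengths in [min_size, max_size].

    global_max: largest |eigenvalue| over the dataset (must be > 0).
    mode="clamp": linear mapping of [0, global_max] to [0, max_size], then clamp.
    mode="log":   log(1 + |lambda|) mapping, which compresses large values
                  and enlarges small ones before clamping.
    """
    values = np.abs(np.asarray(eigenvalues, dtype=float))
    if mode == "clamp":
        sizes = max_size * values / global_max
    elif mode == "log":
        sizes = max_size * np.log1p(values) / np.log1p(global_max)
    else:
        raise ValueError(f"unknown mode: {mode}")
    # Enforce the minimal and maximal glyph size
    return np.clip(sizes, min_size, max_size)
```

With eigenvalues (10, 1, 0) and `global_max = 10`, clamping yields half axes (1.0, 0.1, 0.1). The logarithmic mode keeps the middle axis visibly larger than the minimum size.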

7.6 Fiber Tracking

In the case of a DT-MRI tensor dataset, regions of high anisotropy in general, and of high values of the linear certainty metric cl in particular, correspond to neural fibers aligned with the major eigenvector e1. To visualize the location and direction of such fibers, it is natural to track the direction of this eigenvector over regions of high anisotropy by using the streamline technique. First, a seed region is identified. This is a region that the fibers should pass through, so it can be detected by thresholding one of the anisotropy metrics presented in Section 7.3. Second, streamlines are densely seeded in this region and traced (integrated) both forward and backward in the major eigenvector field e1 until a desired stop criterion is reached (e.g., the anisotropy drops below a minimal value, or a maximal distance from other tracked fibers is reached).
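The tracking step can be sketched with simple Euler integration, under several assumptions:

- `tensor_at` is a hypothetical callback that returns the (interpolated) 3 × 3 tensor at a position.
- The stop criterion is only the cl threshold plus a step limit; the distance-to-other-fibers criterion is omitted.
- Production tools typically use higher-order integrators, such as Runge-Kutta.

Because an eigenvector has no intrinsic sign, each step flips e1 if needed to keep it aligned with the previous direction.

```python
import numpy as np

def track_fiber(tensor_at, seed, step=0.5, min_cl=0.2, max_steps=1000):
    """Trace one fiber from `seed` forward and backward along e1.

    Tracing in each direction stops when c_l drops below min_cl or after
    max_steps Euler steps. Returns an (M, 3) array of points along the fiber.
    """
    def major_and_cl(p):
        vals, vecs = np.linalg.eigh(tensor_at(p))
        l3, l2, l1 = vals                          # ascending order from eigh
        trace = l1 + l2 + l3
        c_l = (l1 - l2) / trace if trace > 0 else 0.0
        return vecs[:, -1], c_l

    def trace_one_way(sign):
        p = np.asarray(seed, dtype=float)
        prev_dir = sign * major_and_cl(p)[0]
        points = []
        for _ in range(max_steps):
            e1, c_l = major_and_cl(p)
            if c_l < min_cl:                       # stop criterion: low anisotropy
                break
            if np.dot(e1, prev_dir) < 0:           # resolve the sign ambiguity of e1
                e1 = -e1
            p = p + step * e1                      # explicit Euler step
            points.append(p)
            prev_dir = e1
        return points

    backward = trace_one_way(-1.0)[::-1]
    forward = trace_one_way(+1.0)
    return np.array(backward + [np.asarray(seed, dtype=float)] + forward)

# A constant, strongly linear tensor field yields a straight fiber along x
fiber = track_fiber(lambda p: np.diag([3.0, 1.0, 1.0]), seed=[0.0, 0.0, 0.0], max_steps=10)
```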

After the fibers are tracked, they can be visualized using the stream tubes technique. The constructed tubes can be colored to show the value of a relevant scalar field, such as the major eigenvalue, an anisotropy metric, or some other quantity scanned along with the tensor data.

Focus and context. Fiber tracks are most useful when shown in the context of the anatomy of the brain structure being explored.

Fiber clustering. Given two fibers a = a(t) and b = b(t) with t ∈ [0, 1], we first define their distance

$$d(a, b) = \frac{1}{2N} \sum_{i=1}^{N} \left( \|a(i/N), b\| + \|b(i/N), a\| \right),$$

as the symmetric mean distance of N sample points on one fiber to the closest points on the other fiber, where ‖p, b‖ denotes the distance from a point p to the closest point on fiber b. The directional similarity of two fibers is defined as the inverse of the distance. Using the distance, the tracked fibers are next clustered in order of increasing distance, i.e., from the most to the least similar, until the desired

](https://image.slidesharecdn.com/7-170117191335/75/07-Tensor-Visualization-3-2048.jpg)
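The fiber distance d(a, b) can be sketched as follows, with fibers given as polylines: arrays of 3D points with at least two vertices. There are two assumptions:

- The parameter t is taken proportional to the vertex index when sampling a(i/N).
- ‖p, b‖ is computed as the exact distance from p to the closest point on the polyline segments of b.

The function names are hypothetical.

```python
import numpy as np

def resample(fiber, n):
    """Sample a polyline at the n + 1 parameter values t = i/n, i = 0..n.

    Assumption: t is proportional to the vertex index (arc-length
    parameterization is another common choice).
    """
    fiber = np.asarray(fiber, dtype=float)
    t_vertices = np.linspace(0.0, 1.0, len(fiber))
    t = np.linspace(0.0, 1.0, n + 1)
    return np.stack([np.interp(t, t_vertices, fiber[:, k])
                     for k in range(fiber.shape[1])], axis=1)

def point_to_polyline(points, polyline):
    """Distance from each point to the closest point on a polyline."""
    p = np.asarray(points, dtype=float)[:, None, :]       # (P, 1, D)
    q = np.asarray(polyline, dtype=float)
    s0, seg = q[:-1][None], (q[1:] - q[:-1])[None]        # (1, S, D)
    seg_len2 = np.maximum((seg ** 2).sum(axis=2), 1e-12)  # avoid division by zero
    # Orthogonal projection parameter, clamped to stay on each segment
    u = np.clip(((p - s0) * seg).sum(axis=2) / seg_len2, 0.0, 1.0)
    closest = s0 + u[..., None] * seg                     # (P, S, D)
    return np.linalg.norm(p - closest, axis=2).min(axis=1)

def fiber_distance(a, b, n=20):
    """Symmetric mean closest-point distance d(a, b) with N = n samples."""
    a_samples = resample(a, n)[1:]   # a(i/N) for i = 1..N
    b_samples = resample(b, n)[1:]   # b(i/N) for i = 1..N
    total = point_to_polyline(a_samples, b).sum() + point_to_polyline(b_samples, a).sum()
    return total / (2 * n)
```

Two parallel straight fibers one unit apart have d = 1. Following the text, their similarity would be taken as 1 / d.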

![What have you learned in this chapter?

The chapter provides an overview of a number of methods for visualizing tensor data. It explains principal component analysis as a technique used to process a tensor matrix and extract from it information that can be used directly in its visualization. Principal component analysis forms a fundamental part of many tensor data processing and visualization algorithms. Section 7.4 shows how its results can be visualized using simple color-mapping techniques. The following parts of the chapter explain how the same data can be visualized using tensor glyphs and streamline-like visualization techniques.

In contrast to Slicer, which is a more general framework for analyzing and visualizing 3D slice-based data volumes, the Diffusion Toolkit focuses on DT-MRI datasets and thus offers more extensive and easier-to-use options for fiber tracking.

What surprised you the most?

• New rendering techniques, such as volume rendering with data-driven opacity transfer functions, are also being developed to better convey complex structures emerging from the tracking process.

• Fiber tracking in DT-MRI datasets is an active area of research.

• Fiber bundling is a promising direction for generating simplified structural visualizations of fiber tracts in DTI fields.

What applications not mentioned in the book could you imagine for the techniques explained in this chapter?

A hologram 3D glyph can be used in rendering (i.e., semitransparent hyperstreamlines) to mask discontinuities caused by regular tensor glyphs.

Anisotropic bundled visualization of the fiber dataset. We can render the bundled fibers with a translucent sprite texture using alpha blending, while using a kernel of small radius to estimate the fiber density ρ. Instead of using an isotropic spherical kernel to estimate the fiber density, we use an ellipsoidal kernel whose axes are oriented along the eigenvectors of the DTI tensor field and scaled by the reciprocals of the eigenvalues of the same field. In linear anisotropy regions, fibers will strongly bundle towards the local density center but barely shift in their tangent directions. In planar anisotropy regions, fibers will strongly bundle towards the implicit fiber plane but barely shift within this plane. We use the values of cl and cp to render these two fiber types differently. For fiber points located in linear anisotropy regions (cl large), we render point sprites using sphere textures. For fiber points located in planar anisotropy regions (cp large), we render translucent 2D quads oriented perpendicular to the eigenvector corresponding to the smallest eigenvalue.
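Below is a sketch of the ellipsoidal density estimate described above. The Gaussian kernel profile, the `radius` parameter, and the function name are assumptions; the text only fixes the axis directions and the reciprocal-eigenvalue scaling. The tensor must be positive definite so that all reciprocals are finite.

```python
import numpy as np

def anisotropic_density(x, fiber_points, tensor, radius=1.0):
    """Estimate fiber density at x with an ellipsoidal Gaussian kernel.

    Kernel axes follow the eigenvectors of `tensor` (the DTI tensor near x),
    with lengths radius / eigenvalue: narrow along the major eigenvector,
    wide across it.
    """
    vals, vecs = np.linalg.eigh(np.asarray(tensor, dtype=float))
    axes = radius / vals                        # reciprocal eigenvalues
    d = np.asarray(fiber_points, dtype=float) - np.asarray(x, dtype=float)
    local = d @ vecs                            # offsets in the eigenvector frame
    r2 = ((local / axes) ** 2).sum(axis=1)      # sum_k (d . e_k / axis_k)^2
    return np.exp(-0.5 * r2).sum()

# A sample offset along e1 contributes less than one offset across e1
T = np.diag([4.0, 1.0, 1.0])
along = anisotropic_density([0, 0, 0], [[0.5, 0, 0]], T)
across = anisotropic_density([0, 0, 0], [[0, 0.5, 0]], T)
```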

1. EXERCISE 1

In data visualization, tensor attributes are some of the most challenging data types, due to their high dimensionality and abstract nature. In Chapter 7 (and also in Section 3.6), we introduced tensor fields by giving a simple example: the curvature tensor for a 3D surface. Give another example of a tensor field defined on 2D or 3D domains. For your example:

• Explain why the quantity you are defining is a tensor.

• Explain how the quantity you are defining varies both as a function of position and as a function of direction.

• Explain the intuitive meanings of the minimal and maximal values of your quantity in the directions of the respective eigenvectors of your tensor field.

Stress in a material, such as a construction beam in a bridge, is an example of a tensor field. Stress is a tensor because it relates two directions at once: the orientation of the surface on which a force acts (its normal) and the direction of the force acting on that surface.

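More precisely, stress is described by the Cauchy stress tensor T, which takes a direction v as input and outputs the stress T(v) acting on the surface normal to this vector:

$$\sigma = \left[ T(\mathbf{e}_1),\ T(\mathbf{e}_2),\ T(\mathbf{e}_3) \right] = \begin{bmatrix} \sigma_{11} & \sigma_{12} & \sigma_{13} \\ \sigma_{21} & \sigma_{22} & \sigma_{23} \\ \sigma_{31} & \sigma_{32} & \sigma_{33} \end{bmatrix}.$$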

](https://image.slidesharecdn.com/7-170117191335/75/07-Tensor-Visualization-6-2048.jpg)
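For the stress example, the traction vector and the principal stresses (the eigenvalues of σ) can be computed as in the sketch below. The Cauchy stress tensor is symmetric, so σn and σᵀn coincide. As a result, the row-versus-column convention of [T(e1), T(e2), T(e3)] does not matter here. The eigenvectors are the directions on whose normal planes the traction is purely normal (no shear). The largest and smallest eigenvalues are the maximal and minimal normal stresses, which relates to the third bullet of the exercise. The function names are illustrative.

```python
import numpy as np

def traction(sigma, normal):
    """Traction vector T(n) = sigma @ n on the plane with normal n."""
    n = np.asarray(normal, dtype=float)
    return np.asarray(sigma, dtype=float) @ (n / np.linalg.norm(n))

def principal_stresses(sigma):
    """Principal stresses (descending) and principal directions (columns)."""
    vals, vecs = np.linalg.eigh(np.asarray(sigma, dtype=float))
    return vals[::-1], vecs[:, ::-1]

sigma = np.array([[10.0, 2.0, 0.0],
                  [2.0, 4.0, 0.0],
                  [0.0, 0.0, -3.0]])
vals, vecs = principal_stresses(sigma)
```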

![extension of IBFV that would be used to visualize 2D tensor fields. The idea is to use the major eigenvector field to construct the IBFV animation and, additionally, encode the minor eigenvector and/or eigenvalue in other attributes of the resulting visualization, such as color, luminance, or shading. How would you modify IBFV to encode such additional attributes?

Hints: Take care that modifying luminance may adversely affect the result of IBFV, e.g., destroy the apparent flow patterns that convey the direction of the major eigenvector field.

We can use the same noise texture, advected in the direction of the major eigenvector field. After obtaining the resulting texture N, we can color it based on the orientation of the minor eigenvector.
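Below is a per-pixel sketch of this coloring. It assumes the advected noise intensity N is in [0, 1] and the minor eigenvector is given as a 2D vector. To respect the hint, the HSV value channel is set to N so that the IBFV streak pattern survives, and only hue and saturation carry the extra attribute. HSV value is only a rough proxy for perceived luminance. The function name and the fixed saturation are illustrative choices.

```python
import colorsys
import math

def color_ibfv_pixel(noise_value, minor_eigenvector, saturation=0.8):
    """Color one IBFV pixel by the orientation of the minor eigenvector.

    The hue encodes the eigenvector angle modulo pi, so v and -v get the
    same hue. The HSV value channel is the advected noise N itself.
    """
    vx, vy = minor_eigenvector
    angle = math.atan2(vy, vx) % math.pi    # orientation in [0, pi)
    hue = angle / math.pi                   # map to [0, 1)
    return colorsys.hsv_to_rgb(hue, saturation, noise_value)
```

Applied to every pixel of N, this produces a colored texture whose streaks still follow the major eigenvector field.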

13. EXERCISE 13

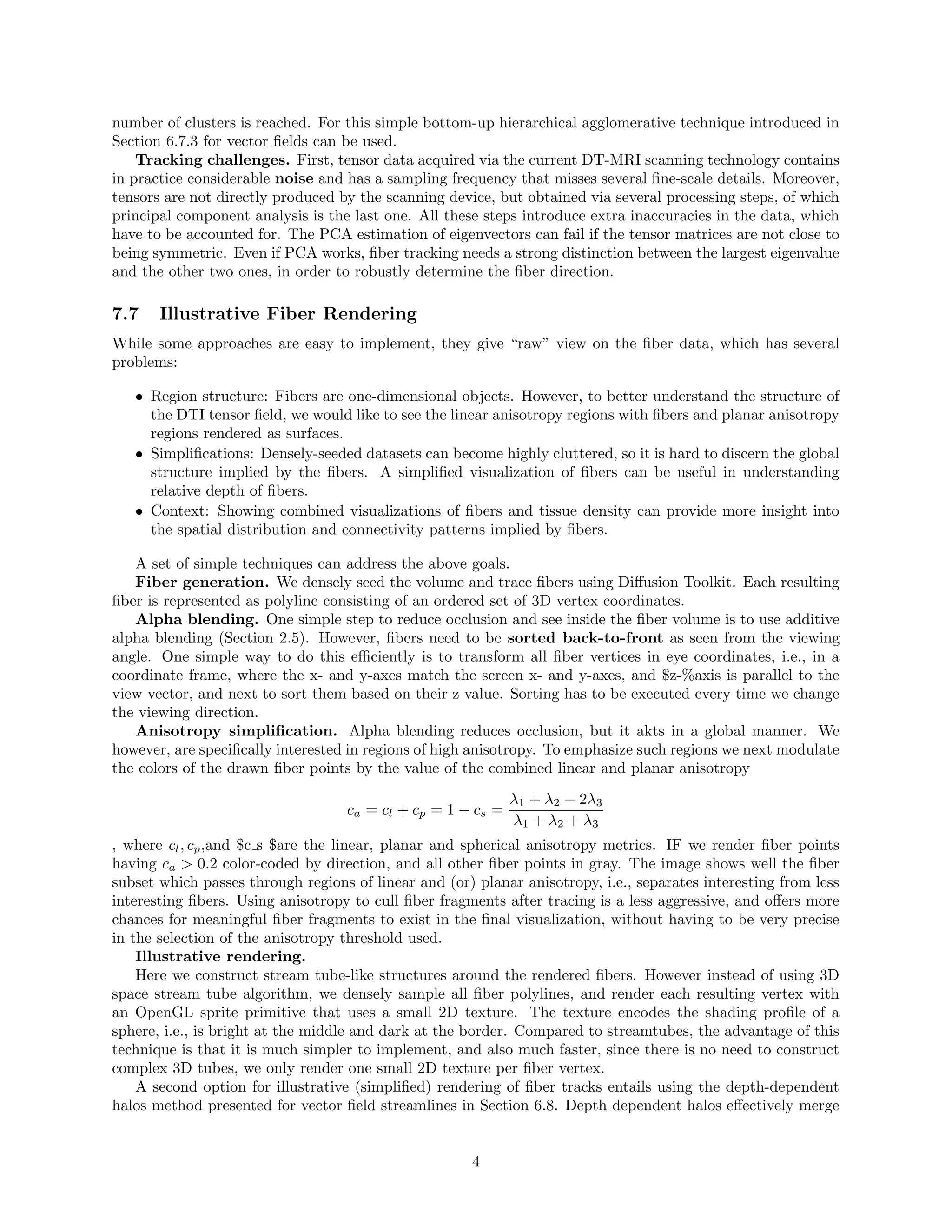

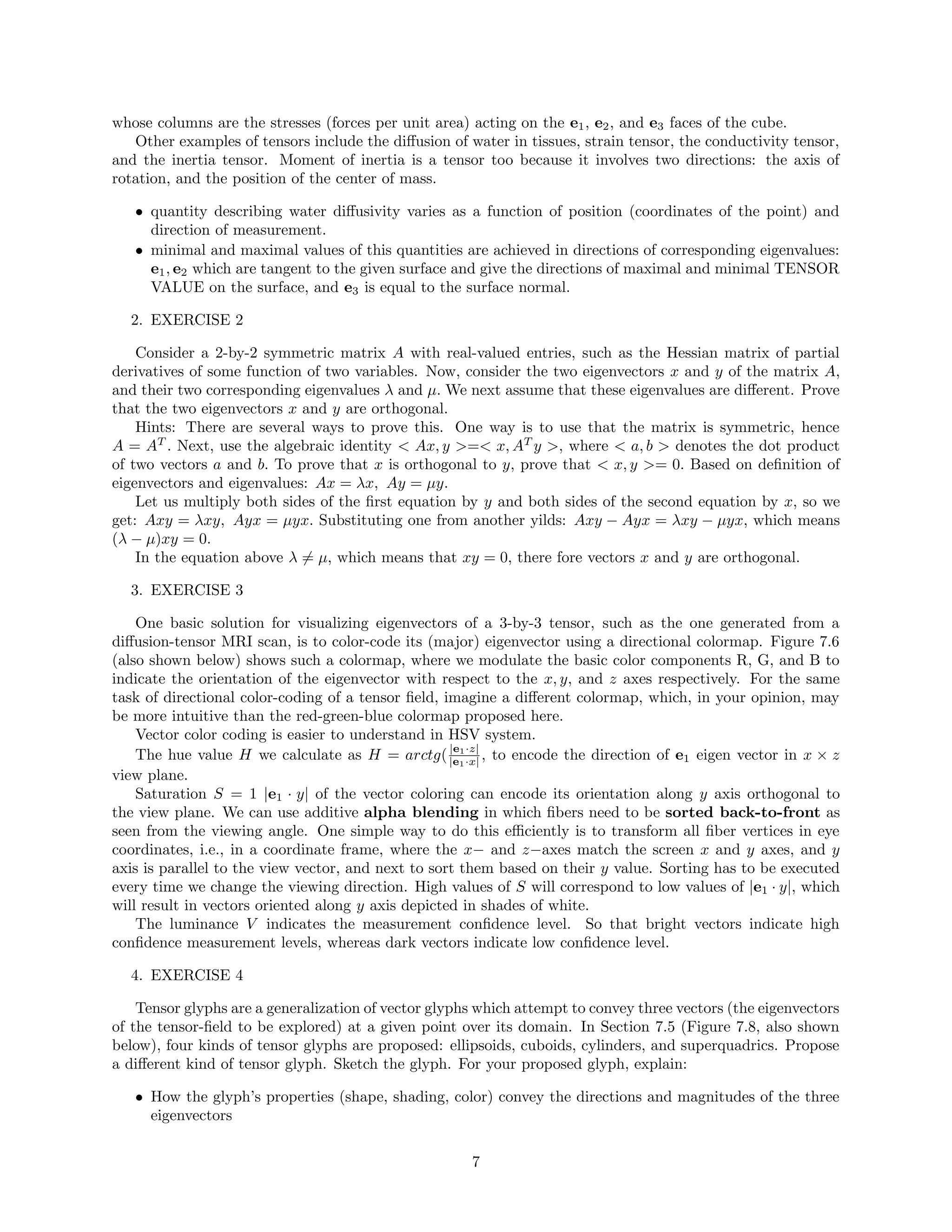

Consider a point cloud that densely samples a part of the surface of a sphere of radius R, defined in polar coordinates θ, φ by the ranges [θmin, θmax] and [φmin, φmax]. The 'patch' created by this sampling is shown in the figure below. Given the three points a, b, c indicated in the same figure, describe the three eigenvectors of the principal component analysis (PCA) applied to the points' covariance matrix for small neighborhoods of each of these three points. The neighborhood sizes are indicated by the circles in the figure. For this, indicate the directions of these eigenvectors and, if possible from the provided information, their relative magnitudes.

Figure 3: Point cloud sampling a sphere patch with three points of interest.

For the sphere, λ1 = λ2 > 0. In this case we can only determine the minor eigenvector s3; vectors s1 and s2 can be any two orthogonal vectors in the tangent plane that are also orthogonal to s3.

Principal vector s3 is perpendicular to the tangent plane, and its magnitude equals λ3. When scaled by its corresponding eigenvalue, it coincides with the normal to the abc plane at the neighborhood centroid:

$$\mathbf{n} = \frac{1}{\lambda_3} \mathbf{s}_3.$$

Let us define the covariance matrix C of a neighborhood and its eigenvectors as follows:

$$C = \frac{1}{k} \sum_{i=1}^{k} (\mathbf{p}_i - \bar{\mathbf{p}})(\mathbf{p}_i - \bar{\mathbf{p}})^T, \qquad C \cdot \mathbf{s}_j = \lambda_j \cdot \mathbf{s}_j, \quad j \in \{1, 2, 3\},$$

where k = 3 is the number of point neighbors considered in the neighborhood of pi, p̄ is the 3D centroid of these nearest neighbors, λj is the j-th eigenvalue of the covariance matrix, and sj is the j-th eigenvector.

](https://image.slidesharecdn.com/7-170117191335/75/07-Tensor-Visualization-12-2048.jpg)
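Below is a sketch of the neighborhood PCA on a synthetic sphere patch. It uses k = 49 neighbors instead of k = 3. Three points are always coplanar, so with k = 3 the smallest eigenvalue is exactly zero and s3 is simply the normal of the triangle they form. The grid resolution, radius, and angular ranges are arbitrary choices for illustration, and the function names are hypothetical.

```python
import numpy as np

def local_pca(points, center_index, k):
    """PCA of the k nearest neighbors of points[center_index].

    Returns eigenvalues (descending) and eigenvectors (columns) of
    C = 1/k * sum_i (p_i - p_bar)(p_i - p_bar)^T.
    """
    pts = np.asarray(points, dtype=float)
    dist = np.linalg.norm(pts - pts[center_index], axis=1)
    neighbors = pts[np.argsort(dist)[:k]]        # includes the point itself
    p_bar = neighbors.mean(axis=0)               # centroid of the neighborhood
    diffs = neighbors - p_bar
    cov = diffs.T @ diffs / k
    vals, vecs = np.linalg.eigh(cov)
    return vals[::-1], vecs[:, ::-1]

def sphere_patch(radius, theta_range, phi_range, n=40):
    """Regular (theta, phi) grid of n x n points on a sphere patch."""
    theta, phi = np.meshgrid(np.linspace(*theta_range, n), np.linspace(*phi_range, n))
    pts = radius * np.stack([np.cos(theta) * np.sin(phi),
                             np.sin(theta) * np.sin(phi),
                             np.cos(phi)], axis=-1)
    return pts.reshape(-1, 3)

R = 2.0
points = sphere_patch(R, (0.0, 1.0), (0.8, 1.6))
center = (40 // 2) * 40 + 40 // 2                # a point in the middle of the patch
vals, vecs = local_pca(points, center, k=49)
normal = points[center] / R                      # true outward normal of the sphere
alignment = abs(vecs[:, 2] @ normal)             # expected to be close to 1
```

For an interior point like this one, s3 follows the sphere normal and λ3 is much smaller than λ1 and λ2.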