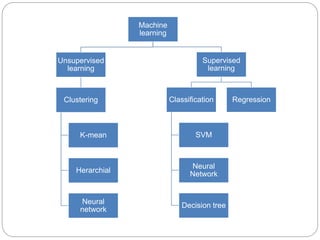

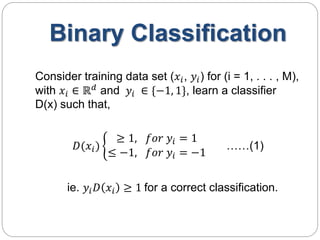

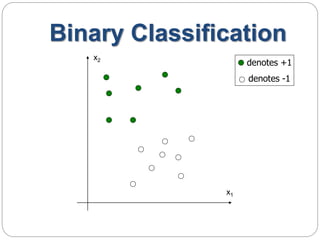

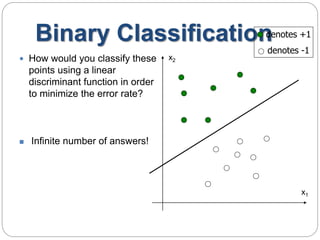

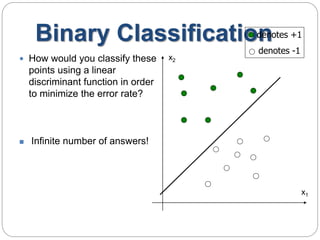

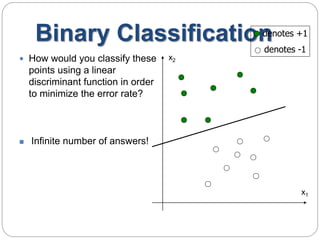

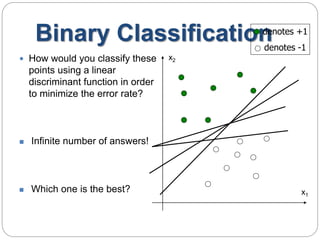

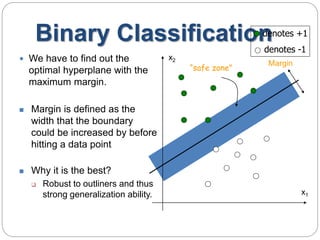

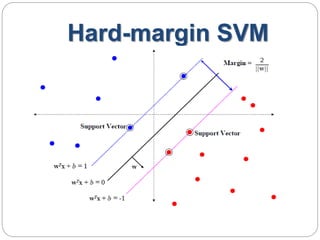

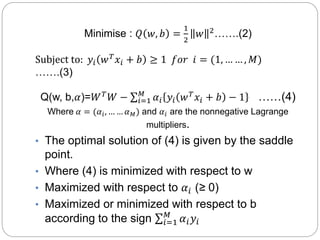

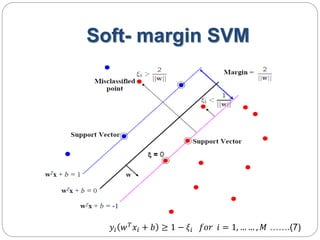

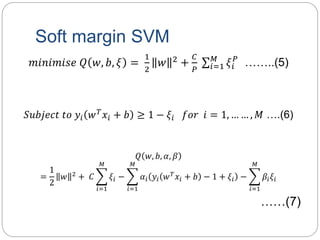

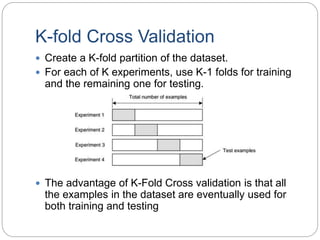

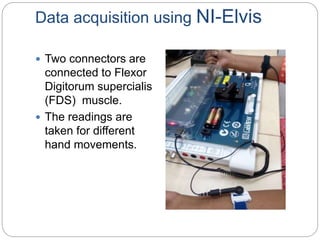

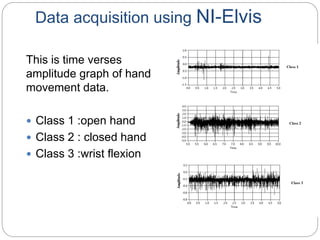

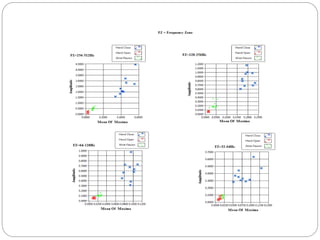

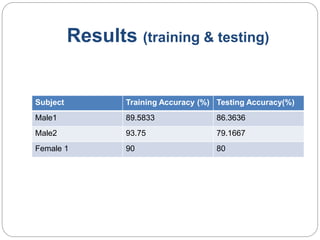

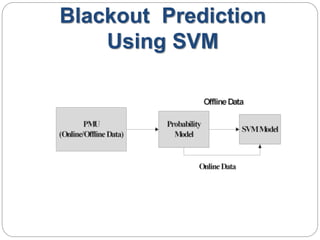

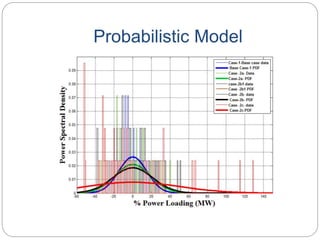

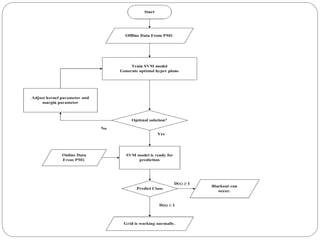

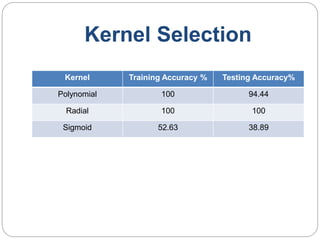

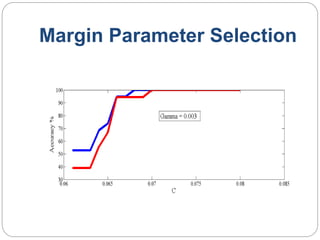

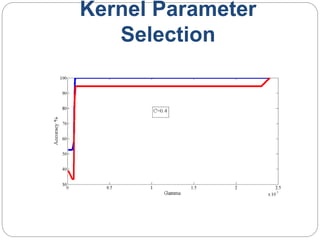

This document provides an overview of event classification and prediction using support vector machines (SVMs). It begins with an introduction to classification, machine learning, and SVMs. It then covers binary classification with SVMs, including the hard-margin and soft-margin formulations, kernels, and multiclass classification. Two case studies follow: classifying hand movements from electromyography (EMG) data, and predicting power grid blackouts. The document concludes that SVMs are effective for both tasks and that their predictions can be used to trigger prevention mechanisms for the predicted events.
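The summary mentions soft-margin SVMs and multiclass classification without showing how either works. As a minimal sketch, assuming small dense feature vectors and binary labels in {+1, -1}, the code below trains a linear soft-margin SVM by stochastic subgradient descent on the primal objective ½‖w‖² + C Σ max(0, 1 − yᵢ(w·xᵢ + b)), then wraps it in a one-vs-rest scheme for more than two classes. The helper names, the learning rate, the epoch count, and the toy two-feature "EMG" data are hypothetical and are not taken from the case studies. Kernels are not covered: this sketch only learns a linear decision boundary.

```python
import random


def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X, y, C=1.0, epochs=500, lr=0.01, seed=0):
    """Hypothetical helper: fit a linear soft-margin SVM.

    Minimizes 0.5*||w||^2 + C * sum_i max(0, 1 - y_i*(w.x_i + b))
    by stochastic subgradient descent. Labels y must be +1 or -1.
    """
    n = len(X)
    w = [0.0] * len(X[0])
    b = 0.0
    rng = random.Random(seed)
    order = list(range(n))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            xi, yi = X[i], y[i]
            margin = yi * (dot(w, xi) + b)
            # Gradient of the regularizer 0.5*||w||^2, spread evenly over n samples.
            grad_w = [wj / n for wj in w]
            grad_b = 0.0
            if margin < 1:
                # Hinge loss is active: the point is inside the margin or misclassified.
                grad_w = [gj - C * yi * xj for gj, xj in zip(grad_w, xi)]
                grad_b = -C * yi
            w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
            b -= lr * grad_b
    return w, b


def decision(model, x):
    """Signed score w.x + b; its sign gives the predicted binary label."""
    w, b = model
    return dot(w, x) + b


def train_one_vs_rest(X, labels, **svm_kwargs):
    """Hypothetical helper: one binary SVM per class (that class vs. all others)."""
    models = {}
    for cls in sorted(set(labels)):
        y = [1 if lab == cls else -1 for lab in labels]
        models[cls] = train_linear_svm(X, y, **svm_kwargs)
    return models


def predict(models, x):
    """Pick the class whose binary SVM returns the largest score."""
    return max(models, key=lambda cls: decision(models[cls], x))


# Toy, made-up data: two features per sample (e.g. amplitudes from two
# hypothetical EMG channels) for three hypothetical hand movements.
X = [
    (0.2, 0.1), (0.4, 0.3), (0.1, 0.5), (0.3, 0.2),  # rest
    (5.0, 0.2), (5.4, 0.5), (4.8, 0.1), (5.2, 0.4),  # fist
    (0.3, 5.1), (0.1, 4.9), (0.5, 5.3), (0.2, 5.0),  # open
]
labels = ["rest"] * 4 + ["fist"] * 4 + ["open"] * 4

models = train_one_vs_rest(X, labels)
accuracy = sum(predict(models, x) == lab for x, lab in zip(X, labels)) / len(X)
print("training accuracy:", accuracy)
print("prediction for (5.1, 0.3):", predict(models, (5.1, 0.3)))
```

One-vs-rest trains one binary classifier per class and predicts the class with the largest decision value; one-vs-one, which trains a classifier for every pair of classes and takes a majority vote, is a common alternative. Replacing the dot product with a kernel function would require switching to the dual (or a kernelized) formulation, which this sketch does not attempt.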