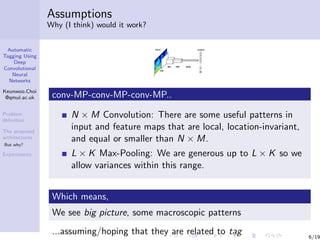

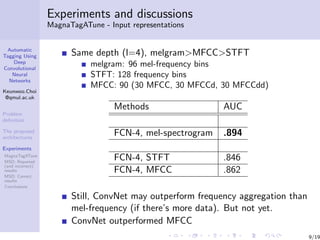

This document summarizes research on using deep convolutional neural networks for automatic music tagging. It describes the automatic tagging problem, the proposed architectures built from convolutional and max-pooling layers, and experiments on two datasets, MagnaTagATune and MSD. On MagnaTagATune, a 4-layer network with mel-spectrogram input achieved the best AUC among the proposed variants (.894), and a deeper 5-layer model did not improve on it. Re-running the MSD experiments with proper hyperparameter tuning yielded better results than those originally reported.

![Automatic

Tagging Using

Deep

Convolutional

Neural

Networks

Keunwoo.Choi

@qmul.ac.uk

Problem

definition

The proposed

architectures

Experiments

MagnaTagATune

MSD: Reported

(and incorrect)

results

MSD: Correct

results

Conclusions

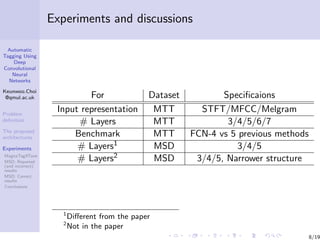

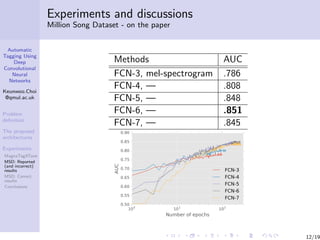

Experiments and discussions

MagnaTagATune - Number of layers

Methods AUC

FCN-3, mel-spectrogram .852

FCN-4, mel-spectrogram .894

FCN-5, mel-spectrogram .890

FCN-4, STFT .846

FCN-4, MFCC .862

FCN-4>FCN-3: Depth worked!

FCN-4>FCN-5 by .004

Deeper model might make it equal after ages of training

Deeper models requires more data

Deeper models take more time (deep residual network[4])

4 layers are enough vs. matter of size(data)?

10/19](https://image.slidesharecdn.com/slide-ismir-2016-160818032005/85/Automatic-Tagging-using-Deep-Convolutional-Neural-Networks-ISMIR-2016-10-320.jpg)
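The AUC column above is the area under the ROC curve. As a rough illustration of how such a score can be computed for a multi-label tagger, the sketch below uses the pairwise (Mann-Whitney) formulation and averages over tags. The function names, the per-tag averaging, and the toy data are assumptions made for this example. The slides do not state how the reported numbers were aggregated.

```python
def roc_auc(labels, scores):
    """ROC AUC for a single tag: the fraction of (positive, negative)
    pairs in which the positive clip gets the higher score."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correctly ordered pair
    return wins / (len(pos) * len(neg))


def mean_tag_auc(label_matrix, score_matrix):
    """Average of per-tag AUCs (assumed aggregation); rows are clips, columns are tags."""
    n_tags = len(label_matrix[0])
    aucs = []
    for t in range(n_tags):
        labels = [row[t] for row in label_matrix]
        scores = [row[t] for row in score_matrix]
        aucs.append(roc_auc(labels, scores))
    return sum(aucs) / n_tags


# Toy data, made up for illustration: 4 clips, 2 tags.
toy_labels = [[1, 0], [0, 1], [1, 1], [0, 0]]
toy_scores = [[0.9, 0.2], [0.4, 0.7], [0.6, 0.15], [0.5, 0.1]]
print(mean_tag_auc(toy_labels, toy_scores))
```

The quadratic pair loop is chosen for readability. For datasets of realistic size, a sort-based rank computation or a library routine would be the usual choice.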

![Automatic

Tagging Using

Deep

Convolutional

Neural

Networks

Keunwoo.Choi

@qmul.ac.uk

Problem

definition

The proposed

architectures

Experiments

MagnaTagATune

MSD: Reported

(and incorrect)

results

MSD: Correct

results

Conclusions

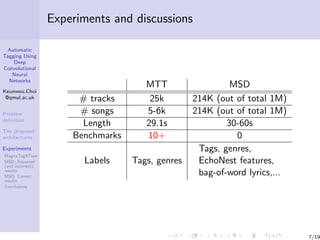

Experiments and discussions

MagnaTagATune

Methods AUC

The proposed system, FCN-4 .894

2015, Bag of features and RBM [5] .888

2014, 1-D convolutions[2] .882

2014, Transferred learning [6] .88

2012, Multi-scale approach [1] .898

2011, Pooling MFCC [3] .861

All deep and NN approaches are around .88-.89

Are we touching the glass ceiling?

Perhaps due to the noise of MTT, but tricky to prove it

26K tracks are not enough for millions of parameters

11/19](https://image.slidesharecdn.com/slide-ismir-2016-160818032005/85/Automatic-Tagging-using-Deep-Convolutional-Neural-Networks-ISMIR-2016-11-320.jpg)

![Automatic

Tagging Using

Deep

Convolutional

Neural

Networks

Keunwoo.Choi

@qmul.ac.uk

Problem

definition

The proposed

architectures

Experiments

MagnaTagATune

MSD: Reported

(and incorrect)

results

MSD: Correct

results

Conclusions

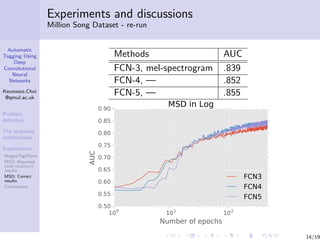

Smaller (narrower) convnet

No. of feature maps: [128@1 – 2048@5] → [32@1 – 256@5],

i.e.narrower network, because there’s no difference between

FCN-4 and FCN-5.

100 101 102

Number of epochs

0.50

0.55

0.60

0.65

0.70

0.75

0.80

0.85

0.90

AUC MSD, small convnet, log

FCN3_small

FCN4_small

FCN5_small

15/19](https://image.slidesharecdn.com/slide-ismir-2016-160818032005/85/Automatic-Tagging-using-Deep-Convolutional-Neural-Networks-ISMIR-2016-15-320.jpg)
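To get a feel for how much narrowing the feature maps shrinks a network, the sketch below counts the weights and biases in a plain stack of 2-D convolutional layers. It rests on several assumptions that are not on the slide: 3x3 kernels, a single-channel input, and the three intermediate layer widths, which are hypothetical placeholders. Only the first-layer and fifth-layer widths (128 and 2048, then 32 and 256) come from the slide. The helper name `conv_stack_params` is also invented for this example.

```python
def conv_stack_params(widths, kernel=3, in_channels=1):
    """Count trainable parameters in a stack of 2-D conv layers.

    widths: number of output feature maps per layer.
    kernel: square kernel size (assumed; not stated on the slide).
    in_channels: channels of the input spectrogram (assumed to be 1).
    """
    total = 0
    prev = in_channels
    for out in widths:
        total += kernel * kernel * prev * out + out  # weights + biases
        prev = out
    return total


# First and last widths are from the slide; the middle three values are
# hypothetical placeholders used only to make the example runnable.
wide = [128, 256, 512, 1024, 2048]
narrow = [32, 64, 128, 192, 256]

wide_params = conv_stack_params(wide)
narrow_params = conv_stack_params(narrow)
print(wide_params, narrow_params, round(wide_params / narrow_params, 1))
```

Because each layer's weight count scales with the product of its input and output widths, reducing every width shrinks the total far more than linearly. This fits the slide's motivation for trying a narrower network, though the exact ratio depends on the assumed values.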