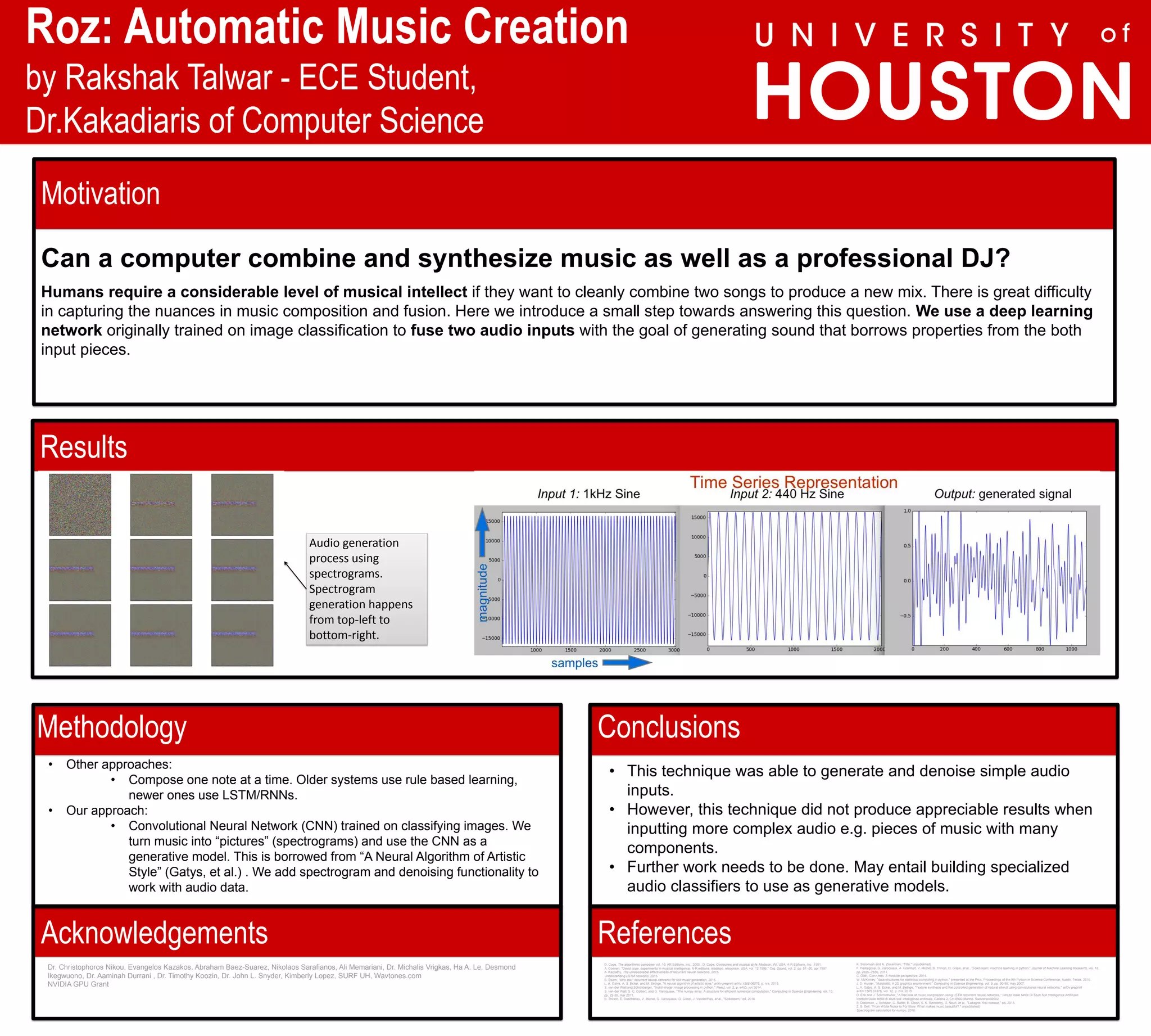

This document describes an approach to automatic music generation using convolutional neural networks. The approach uses spectrograms to represent music as images that can be input to a CNN trained for image classification. The CNN is then used as a generative model to fuse properties from two audio inputs into a new output. Initial results showed this technique could generate and denoise simple audio but had difficulty with more complex music. Further work is needed, such as building specialized audio classifiers, to improve the approach.