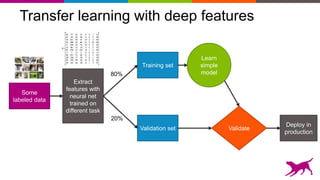

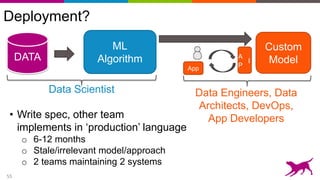

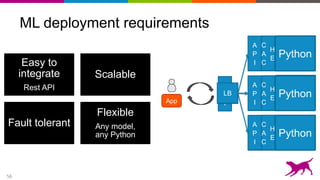

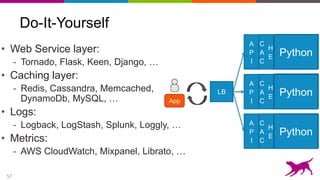

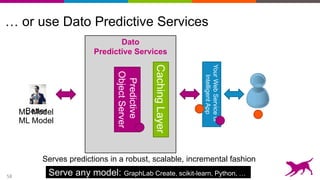

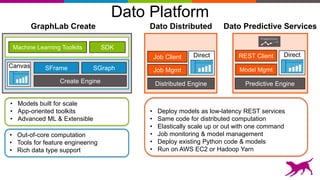

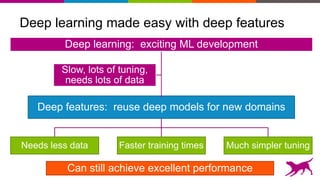

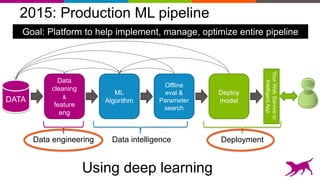

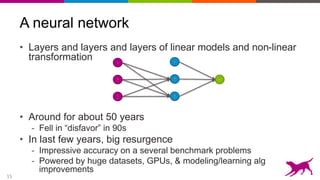

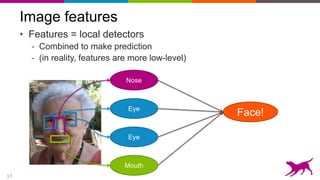

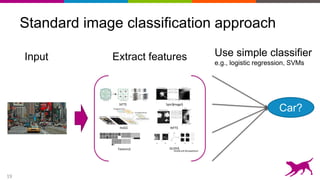

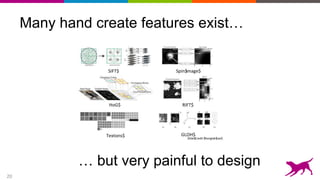

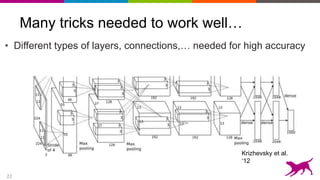

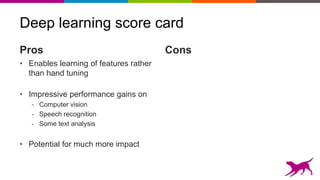

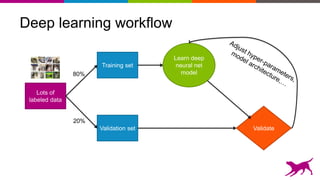

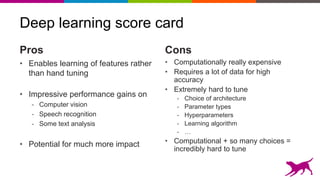

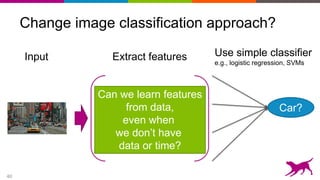

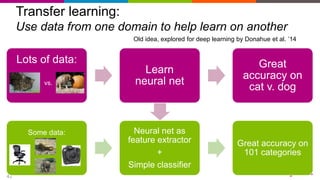

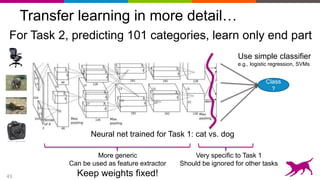

Deep learning techniques can be used to learn features from data rather than relying on hand-crafted features. This allows neural networks to be applied to problems in computer vision, natural language processing, and other domains. Transfer learning techniques take advantage of features learned from one task and apply them to another related task, even when limited data is available for the second task. Deploying machine learning models in production requires techniques for serving predictions through scalable APIs and caching layers to meet performance requirements.
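As a rough illustration of the caching idea mentioned above, the sketch below wraps a prediction function in an in-memory cache. Everything here is an assumption made for the example: `expensive_predict`, its made-up weights, `handle_request`, and the payload shape are hypothetical stand-ins, not part of any particular serving system.

```python
from functools import lru_cache

# Counts how often the (hypothetical) model is actually evaluated.
CALLS = {"count": 0}

def expensive_predict(features: tuple) -> float:
    """Stand-in for a costly model call; a real service would run inference here."""
    CALLS["count"] += 1
    weights = (0.5, -0.25, 1.0)  # made-up weights, for illustration only
    return sum(w * x for w, x in zip(weights, features))

@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    """Return a prediction, reusing the stored result for repeated inputs."""
    return expensive_predict(features)

def handle_request(payload: dict) -> dict:
    """Shape of an API handler: parse the input, predict, return a JSON-able dict."""
    # Tuples are hashable, which lru_cache requires for its keys.
    features = tuple(float(x) for x in payload["features"])
    return {"prediction": cached_predict(features)}
```

Calling `handle_request` twice with the same features evaluates the model once; the second call is answered from the cache. A production setup would more likely use a shared cache across processes, which this sketch does not attempt.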

![Slide 21: Use neural network to learn features](https://image.slidesharecdn.com/strata-london-deeplearning-05-2015-150507163508-lva1-app6891/85/Strata-London-Deep-Learning-05-2015-21-320.jpg)

Use a neural network to learn features. Each layer learns features at a different level of abstraction.

Deep Learning = Learning Hierarchical Representations (Y LeCun, MA Ranzato). It's deep if it has more than one stage of non-linear feature transformation.

Low-Level Feature → Mid-Level Feature → High-Level Feature → Trainable Classifier

Feature visualization of a convolutional net trained on ImageNet, from [Zeiler & Fergus 2013].
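The "more than one stage of non-linear feature transformation" idea can be sketched as a composition of small functions. The weights below are hand-picked for illustration rather than learned, and the XOR task is an assumption chosen because it cannot be solved by a linear classifier on the raw inputs; none of this comes from the slides.

```python
def relu(v):
    """Element-wise rectifier: the non-linearity applied in each stage."""
    return [max(0.0, x) for x in v]

def linear(weights, bias, v):
    """Affine map: one output per (row of weights, bias) pair."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def stage(weights, bias):
    """One non-linear feature transformation: affine map followed by ReLU."""
    return lambda v: relu(linear(weights, bias, v))

# Hand-picked (not learned) weights, for illustration only.
low_level = stage([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0])  # [x1+x2, max(0, x1+x2-1)]
mid_level = stage([[1.0, -2.0]], [0.0])                   # max(0, h1 - 2*h2)

def classifier(features):
    """Trivial threshold classifier on the top-level feature."""
    return 1 if features[0] > 0.5 else 0

def predict(x):
    """Compose the stages: input -> low-level -> mid-level -> classifier."""
    return classifier(mid_level(low_level(x)))
```

With these weights, `predict` returns 1 when exactly one input is 1 and 0 otherwise. In a trained network the weights of every stage would be fitted from data instead of written by hand.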

![Slide 34: Application to scene parsing](https://image.slidesharecdn.com/strata-london-deeplearning-05-2015-150507163508-lva1-app6891/85/Strata-London-Deep-Learning-05-2015-28-320.jpg)

Application to scene parsing (©Carlos Guestrin 2005-2014; Y LeCun, MA Ranzato)

Semantic labeling: labeling every pixel with the object it belongs to [Farabet et al. ICML 2012, PAMI 2013].

Would help identify obstacles, targets, landing sites, dangerous areas.

Would help line up depth map with edge maps.
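"Labeling every pixel with the object it belongs to" comes down, at the final step, to choosing a class per pixel. The sketch below shows only that last decision, assuming per-class score maps are already available; it does not reproduce the method of the cited papers. The class names and scores are made up for the example.

```python
def label_pixels(score_maps, class_names):
    """Assign each pixel the class with the highest score at that location.

    score_maps[c][y][x] is the score of class c at pixel (y, x).
    """
    height = len(score_maps[0])
    width = len(score_maps[0][0])
    labels = []
    for y in range(height):
        row = []
        for x in range(width):
            # Index of the best-scoring class at this pixel.
            best = max(range(len(score_maps)), key=lambda c: score_maps[c][y][x])
            row.append(class_names[best])
        labels.append(row)
    return labels

# Made-up 2x3 score maps for three hypothetical classes.
CLASS_NAMES = ["sky", "road", "car"]
SCORES = [
    [[0.9, 0.8, 0.7], [0.1, 0.2, 0.1]],    # sky
    [[0.05, 0.1, 0.2], [0.8, 0.3, 0.7]],   # road
    [[0.05, 0.1, 0.1], [0.1, 0.5, 0.2]],   # car
]
LABELS = label_pixels(SCORES, CLASS_NAMES)
```

Here the top row is labeled entirely as sky, while the bottom row mixes road and car pixels. In a real system the score maps would come from a network evaluated over the image.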

![Slide 44: Careful where you cut…](https://image.slidesharecdn.com/strata-london-deeplearning-05-2015-150507163508-lva1-app6891/85/Strata-London-Deep-Learning-05-2015-38-320.jpg)

Careful where you cut… The last few layers tend to be too specific.

Deep Learning = Learning Hierarchical Representations (Y LeCun, MA Ranzato). It's deep if it has more than one stage of non-linear feature transformation.

Low-Level Feature → Mid-Level Feature → High-Level Feature → Trainable Classifier

Feature visualization of a convolutional net trained on ImageNet, from [Zeiler & Fergus 2013].

Annotations on the diagram: "Too specific for car detection" and "Use these!"
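The choice of where to cut can be illustrated with a toy stack of layers. In the sketch below, the "pretrained" weights are hand-picked and the nearest-centroid classifier is a stand-in chosen for brevity; both are assumptions for the example, not the setup used in the talk. The last layer deliberately discards information the new task needs, mimicking features that are too specific to the original task.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def dense(weights):
    """Build a layer: matrix-vector product followed by ReLU."""
    return lambda v: relu([sum(w * x for w, x in zip(row, v)) for row in weights])

# Hypothetical "pretrained" stack with hand-picked weights; in practice these
# layers would come from a network trained on a large source dataset.
PRETRAINED = [
    dense([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]),  # low-level
    dense([[1.0, -1.0, 0.0], [-1.0, 1.0, 0.0]]),  # mid-level: which input is larger
    dense([[1.0, 1.0]]),                          # high-level: keeps only |x1 - x2|
]

def extract_features(x, cut):
    """Run only the first `cut` layers and return their output as features."""
    for layer in PRETRAINED[:cut]:
        x = layer(x)
    return x

def fit_centroids(samples, labels, cut):
    """Train a tiny nearest-centroid classifier on the frozen features."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        f = extract_features(x, cut)
        acc = sums.setdefault(y, [0.0] * len(f))
        sums[y] = [a + b for a, b in zip(acc, f)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict(x, centroids, cut):
    """Label x with the class whose centroid is closest in feature space."""
    f = extract_features(x, cut)
    dist = lambda c: sum((a - b) ** 2 for a, b in zip(f, c))
    return min(centroids, key=lambda y: dist(centroids[y]))

# New task with only four labeled examples: is the first input the larger one?
SAMPLES = [[2.0, 1.0], [3.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
LABELS = ["left", "left", "right", "right"]
mid = fit_centroids(SAMPLES, LABELS, cut=2)  # cut after the mid-level layer
top = fit_centroids(SAMPLES, LABELS, cut=3)  # cut after the last layer
```

Cutting after the mid-level layer keeps the two classes apart, so `predict([5.0, 2.0], mid, 2)` gives `"left"`. Cutting after the last layer leaves both classes with the same centroid, so no classifier built on those features can distinguish them.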