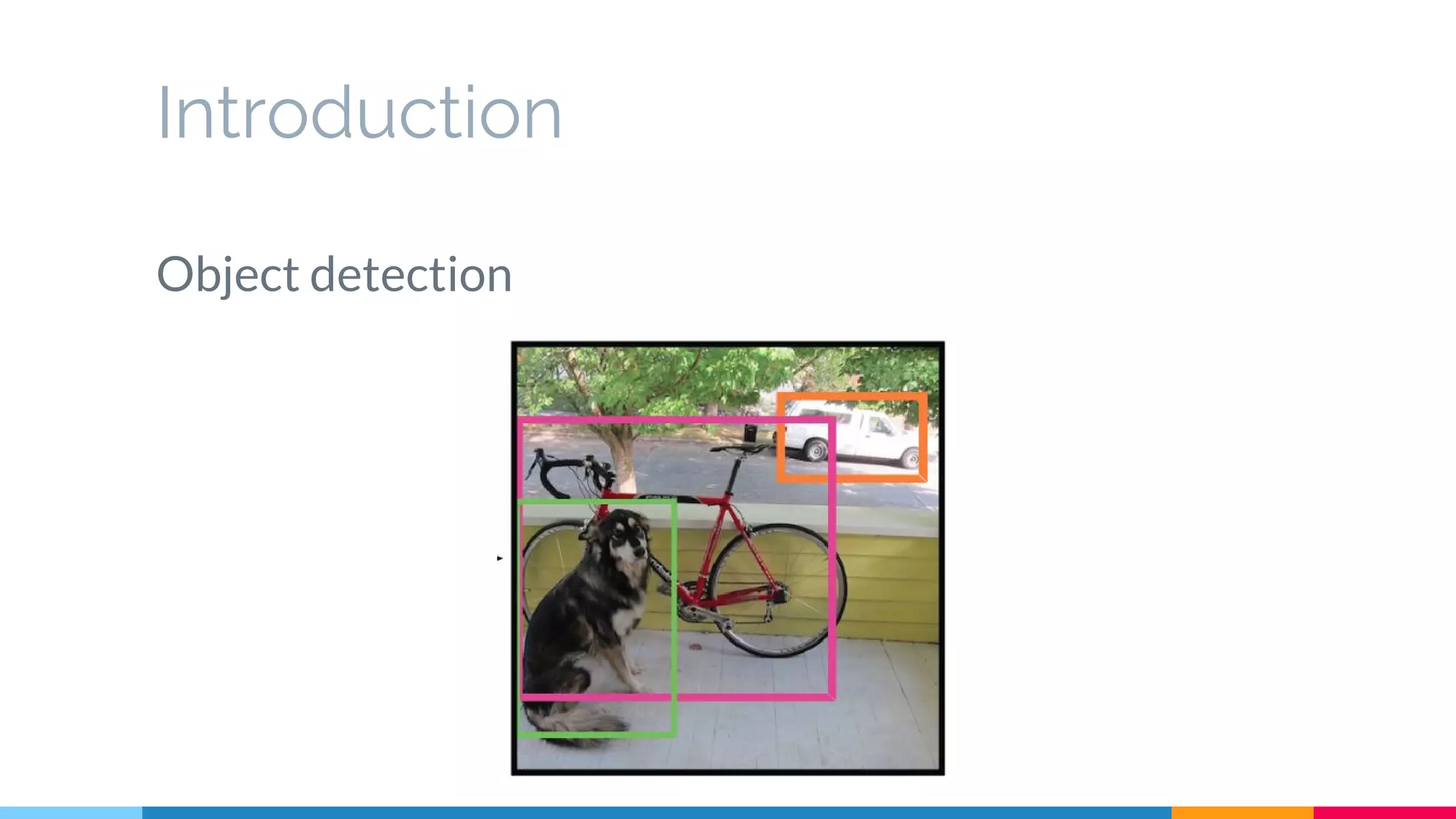

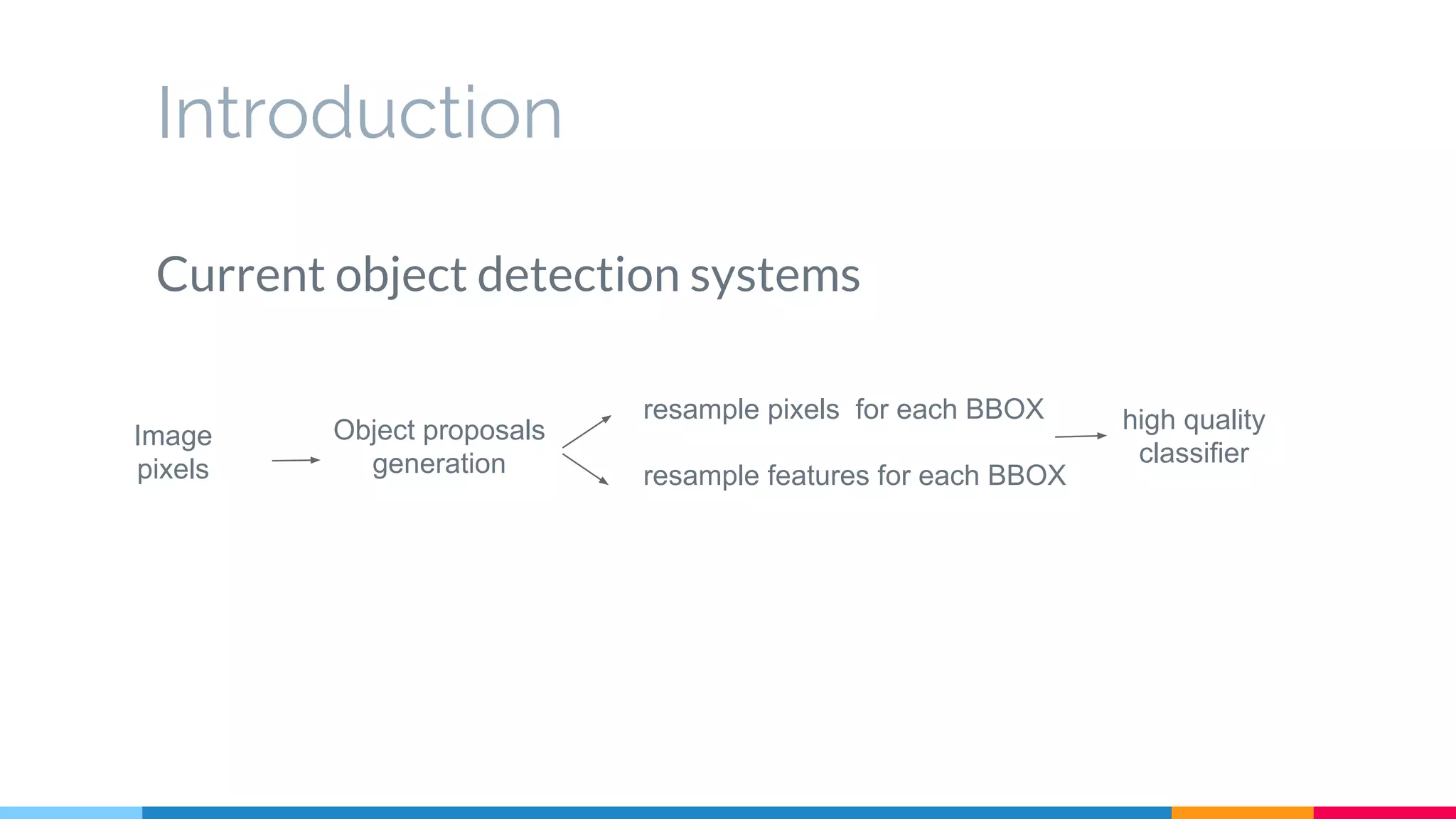

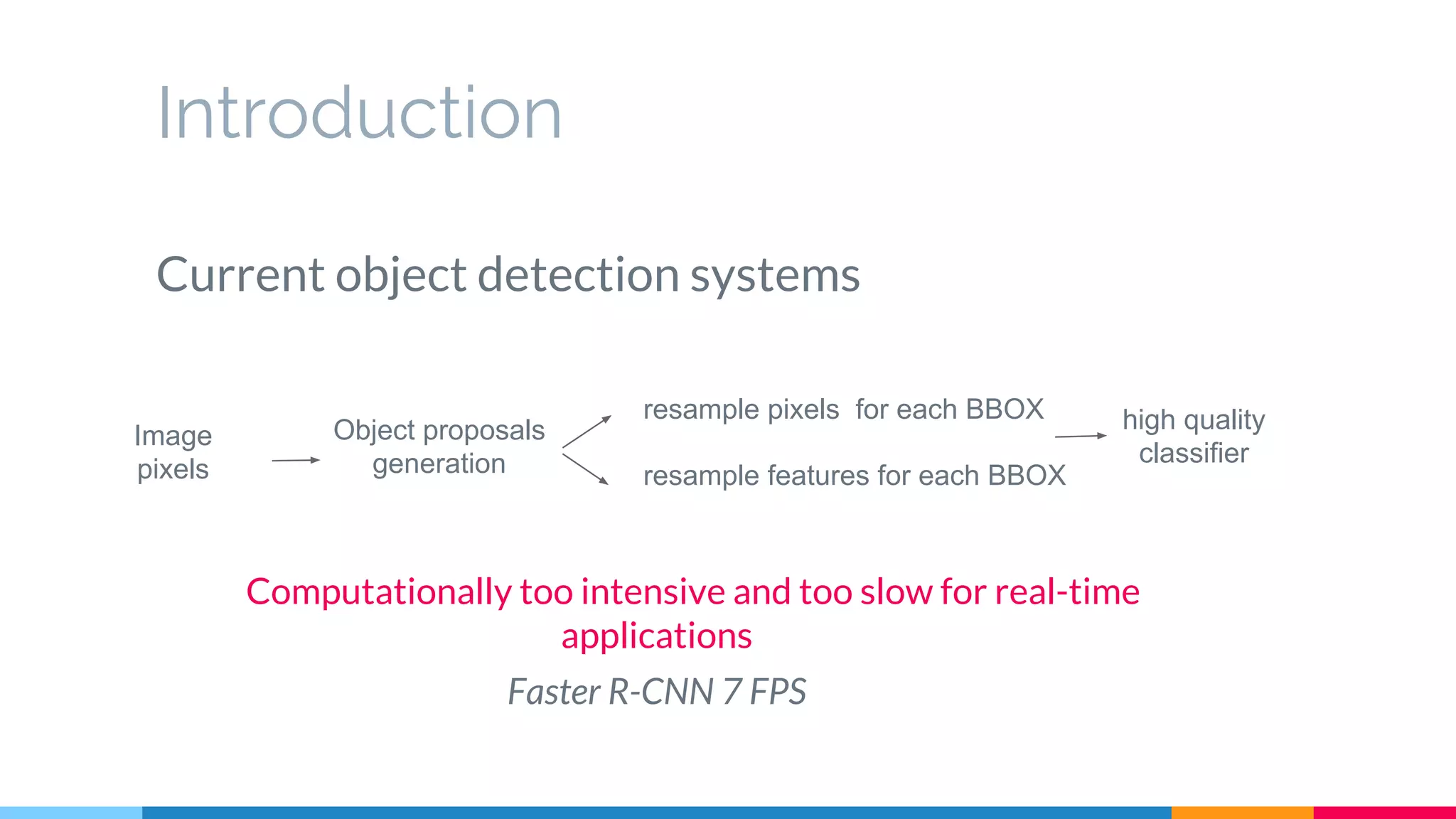

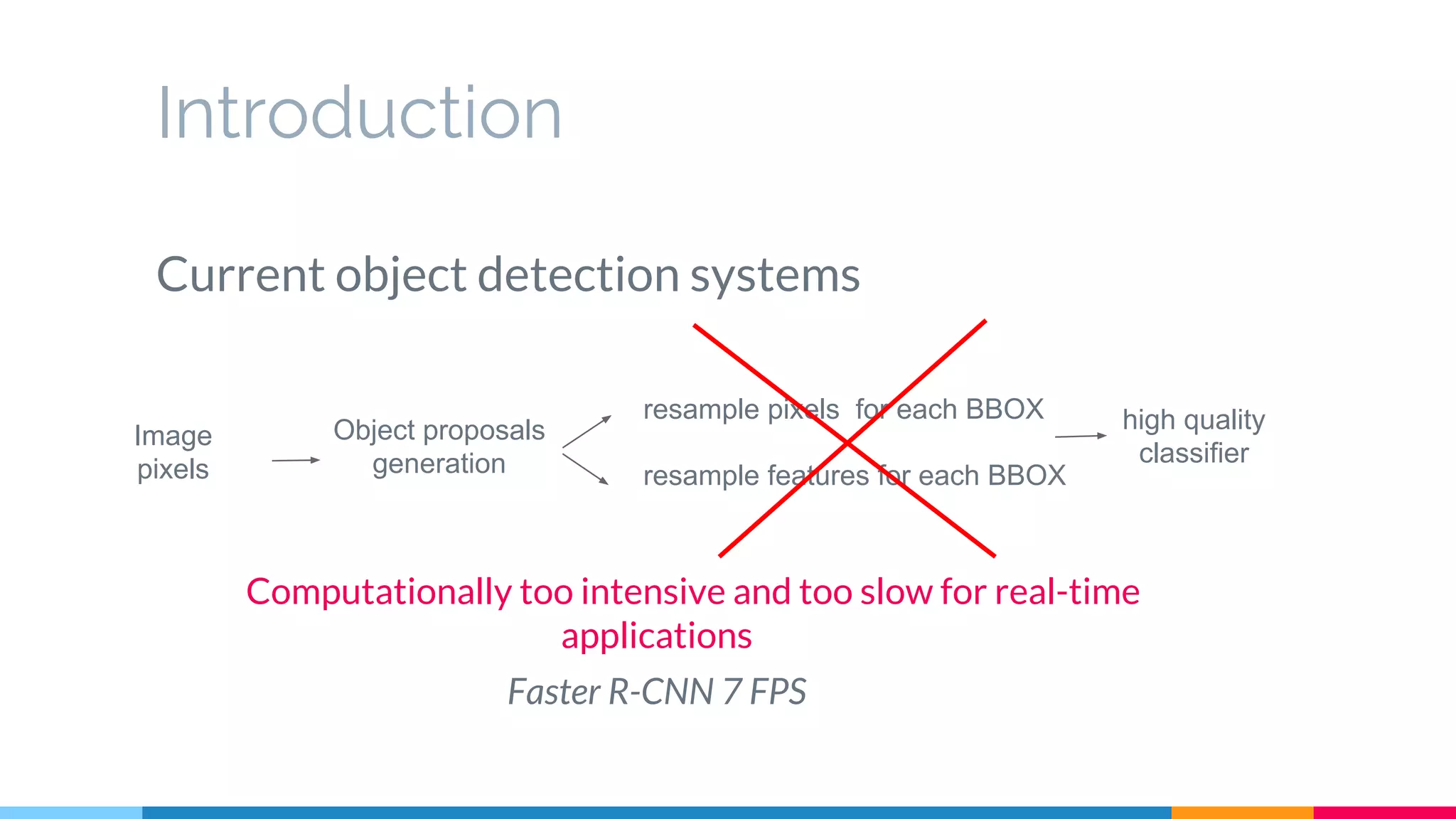

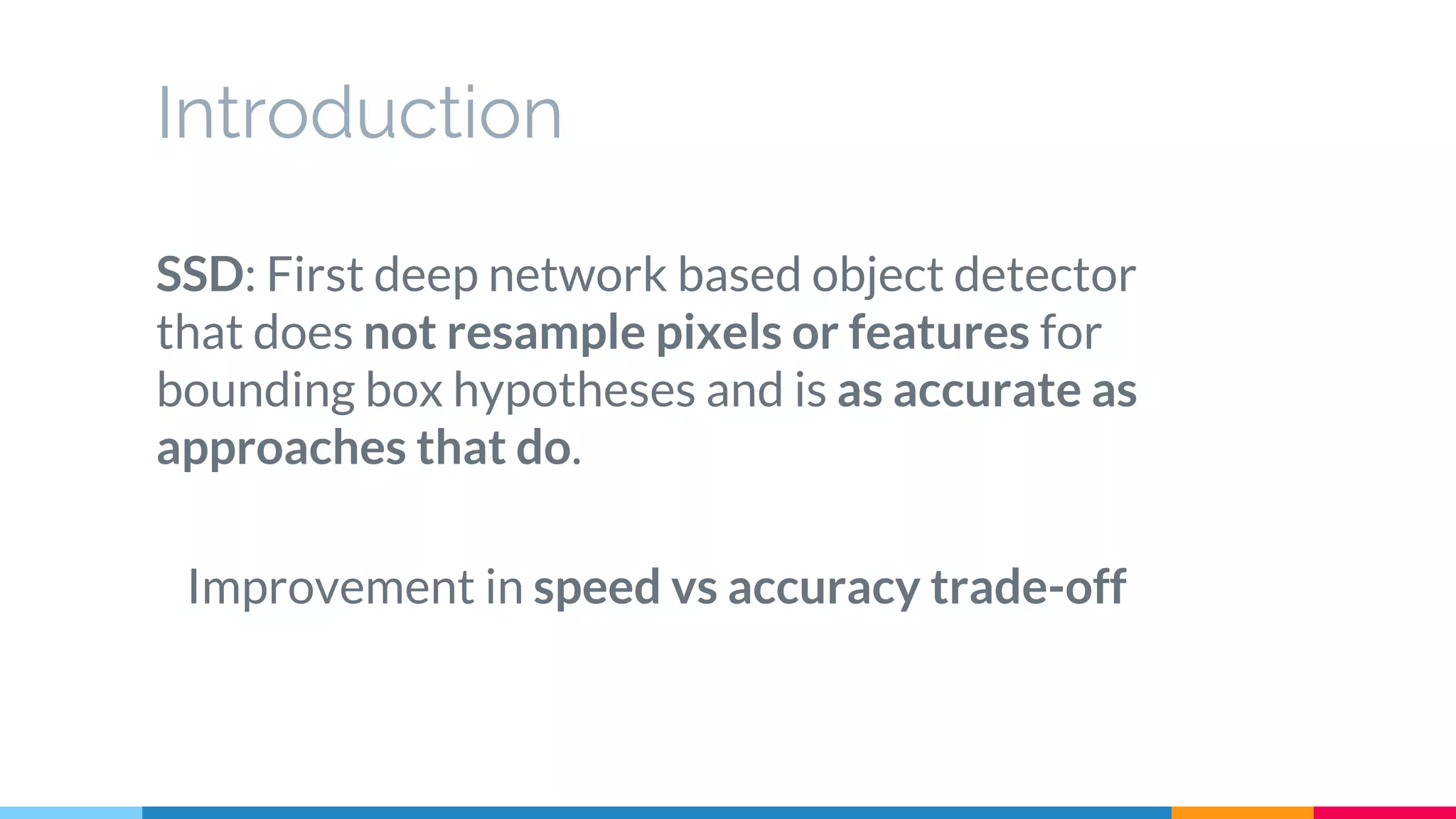

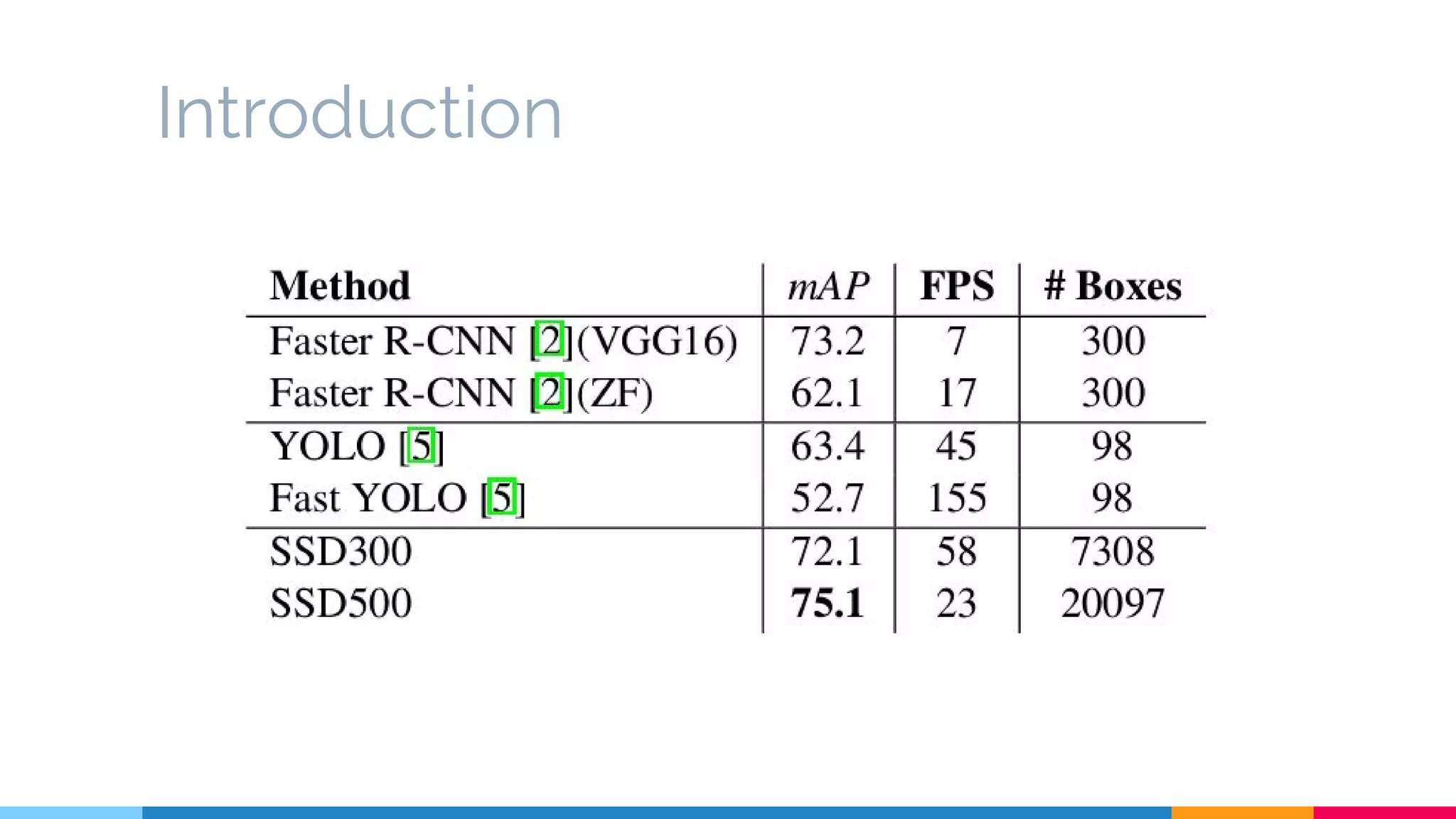

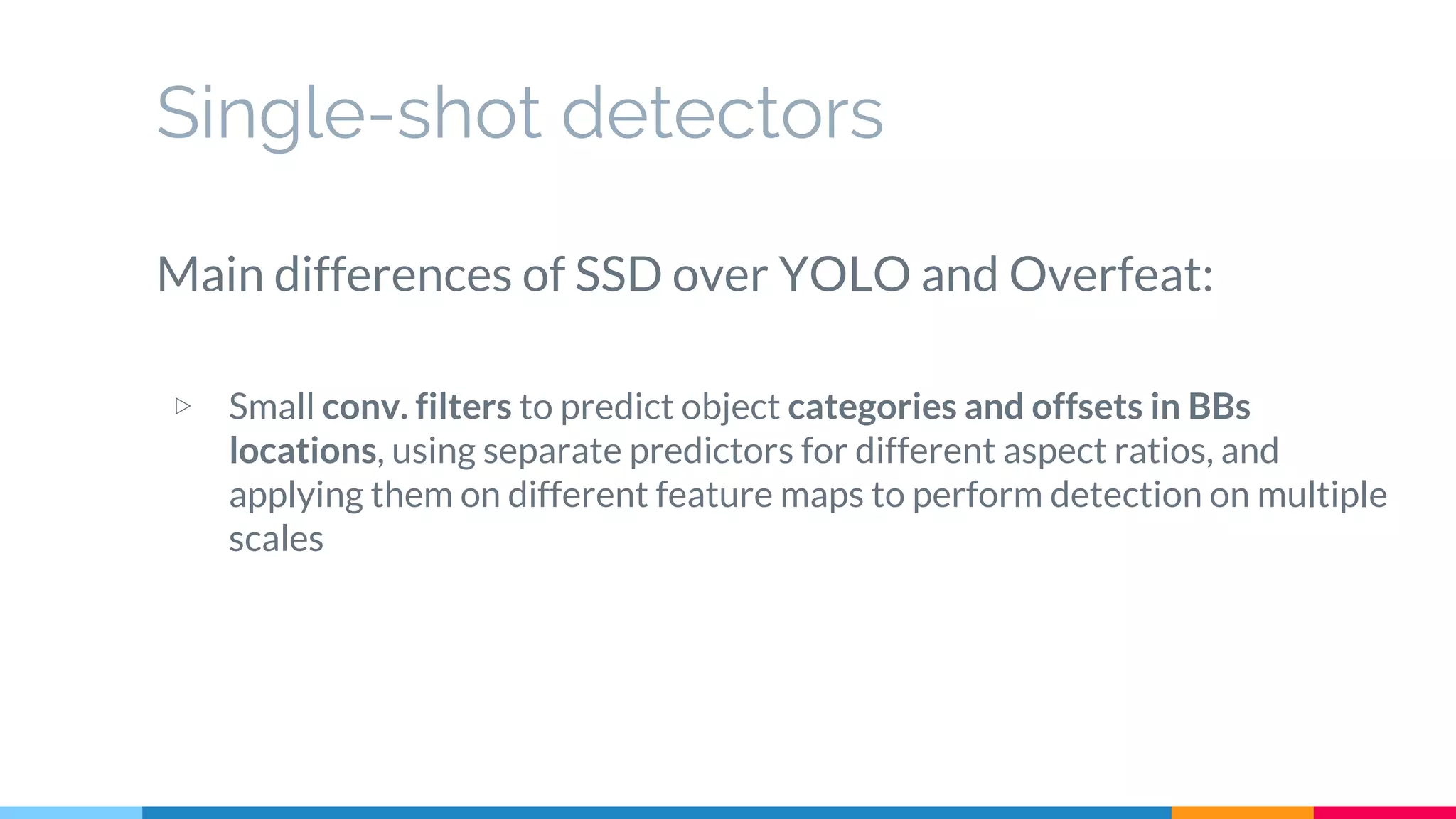

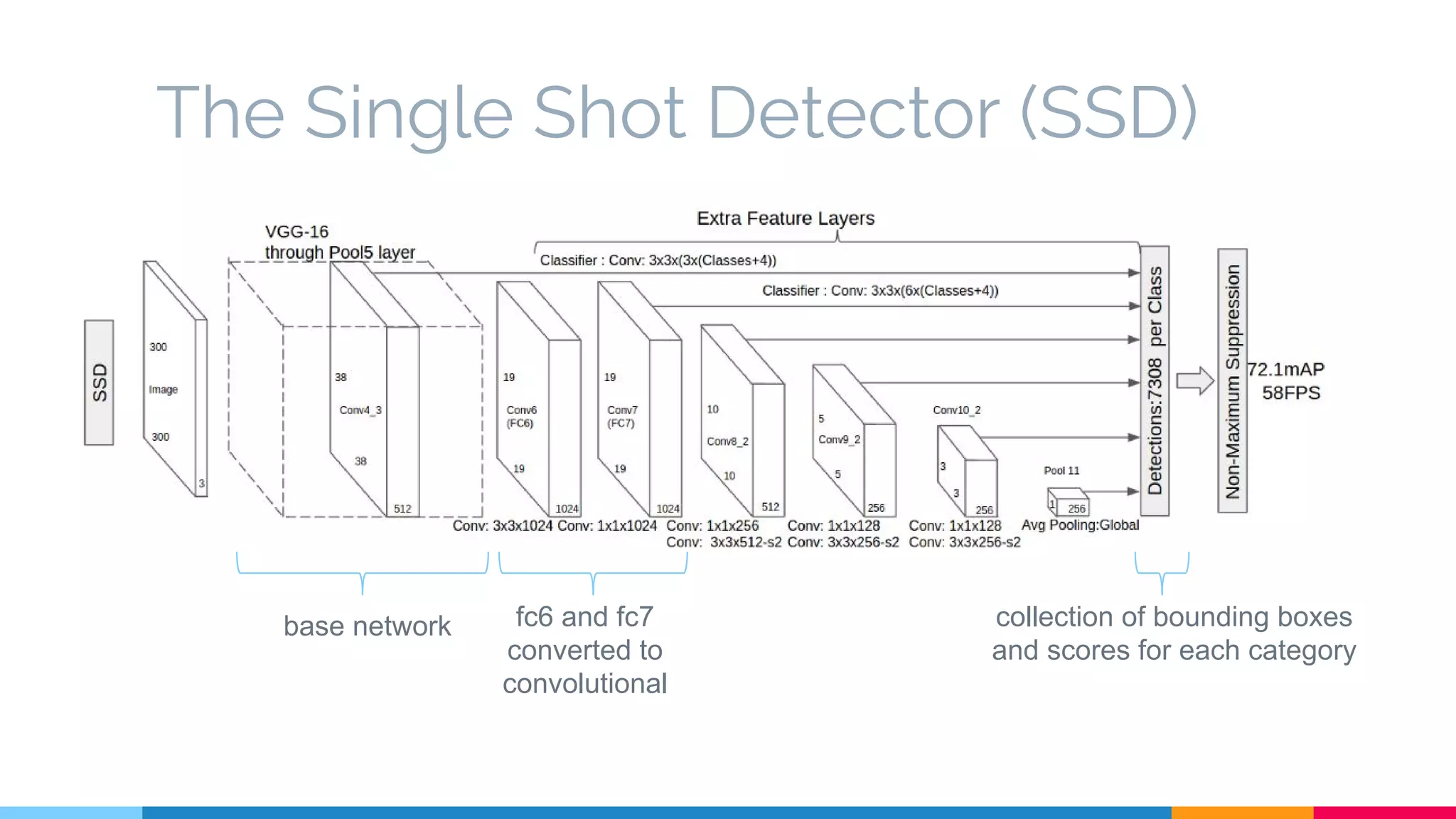

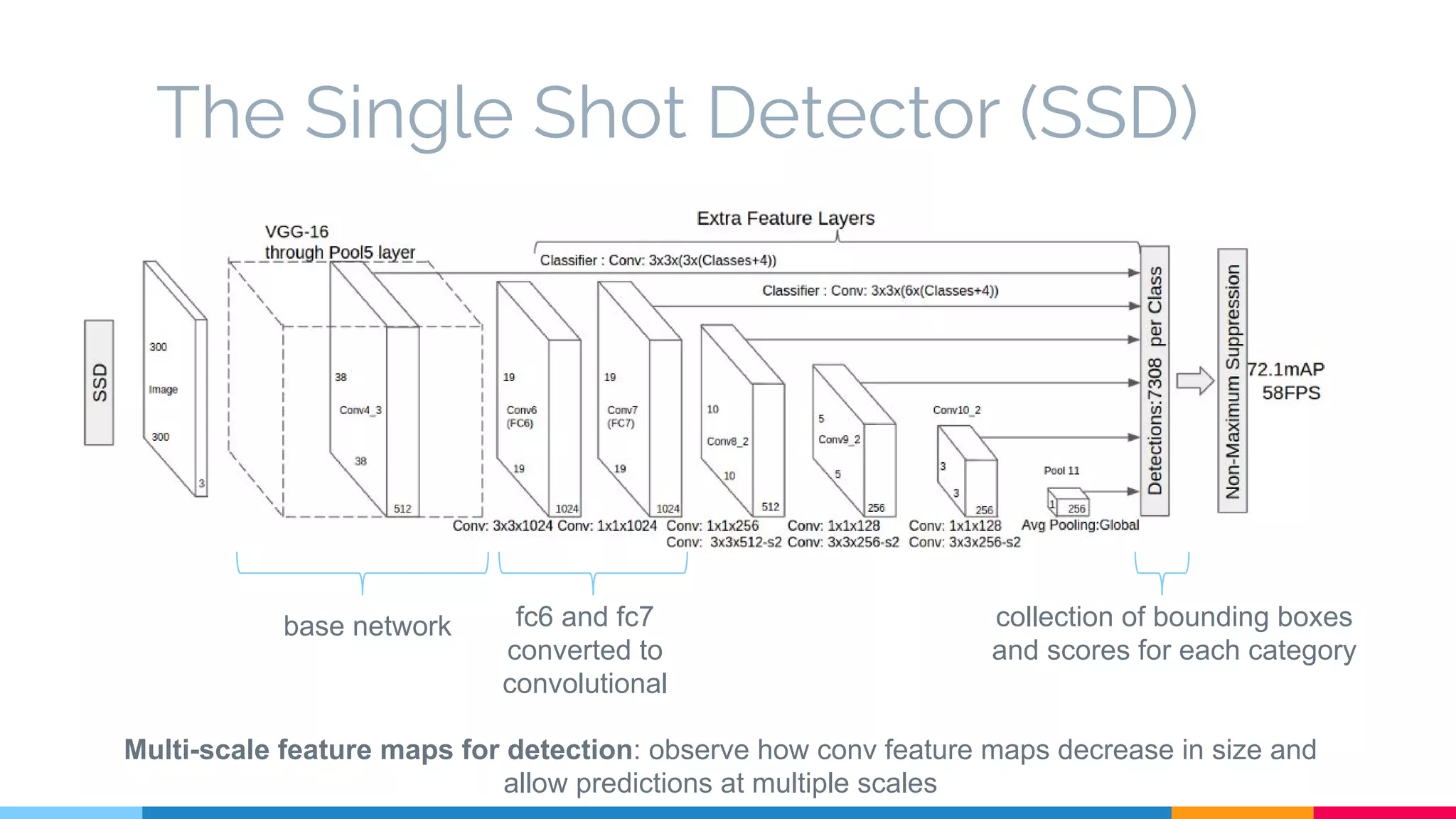

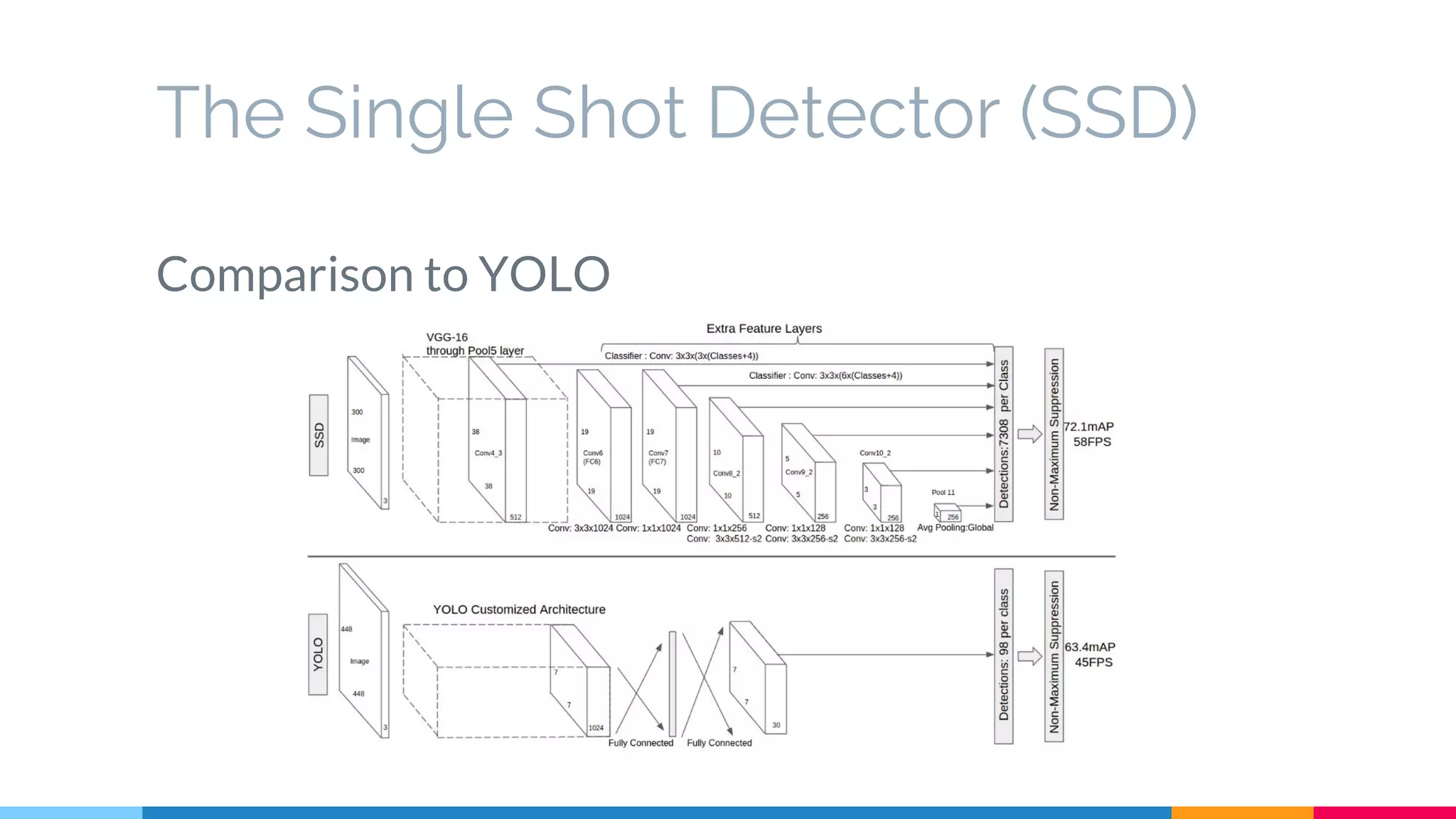

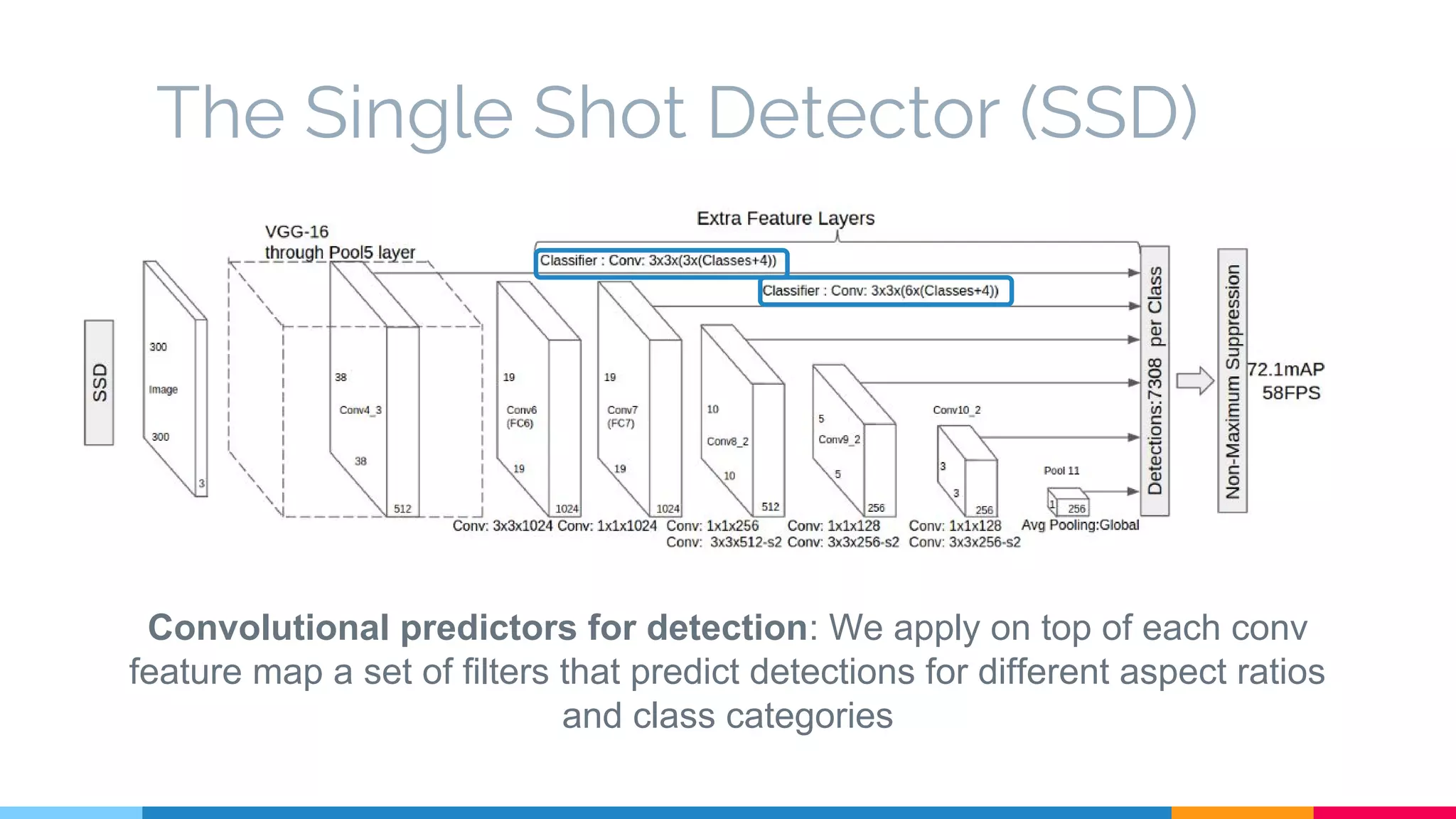

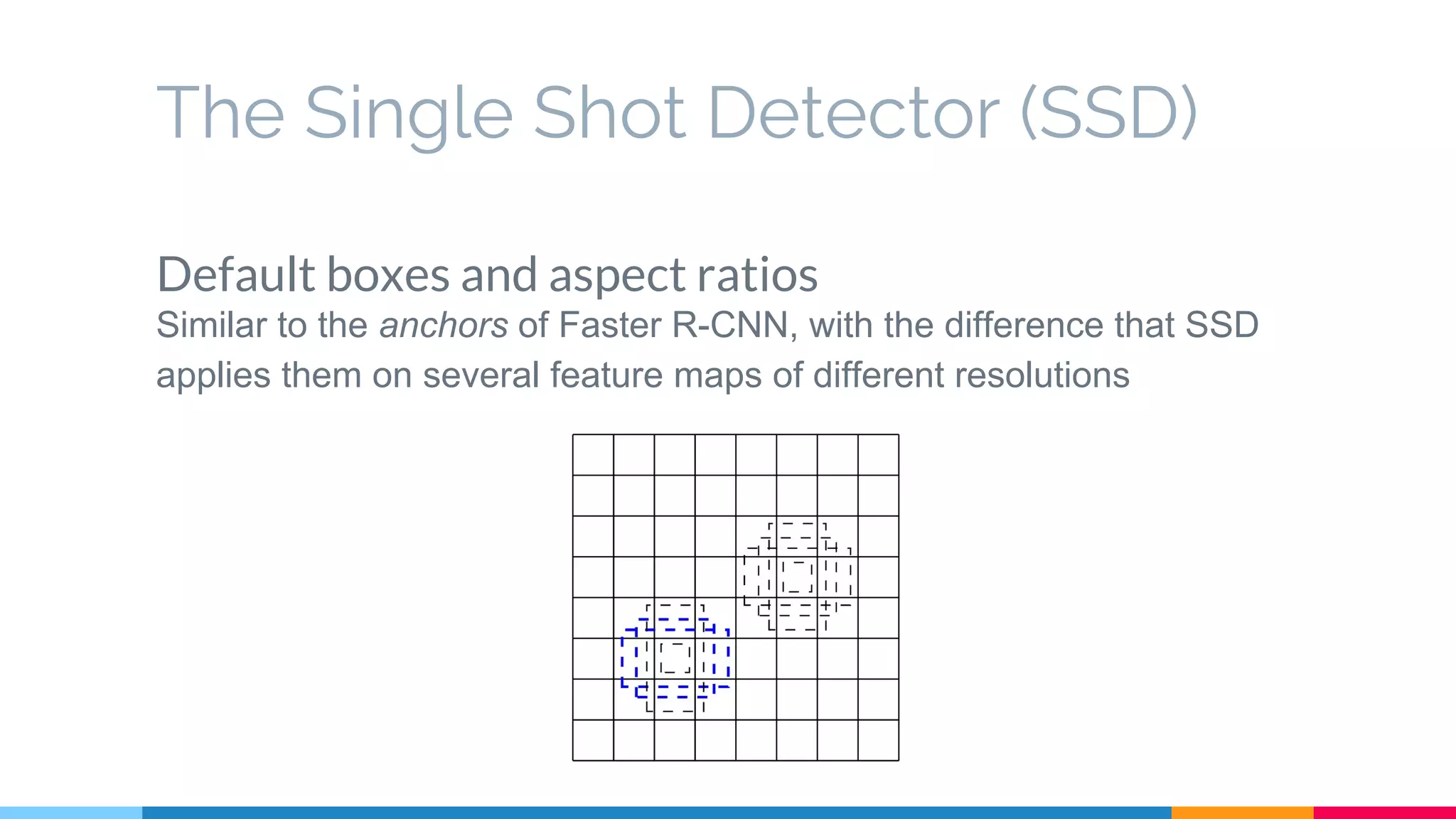

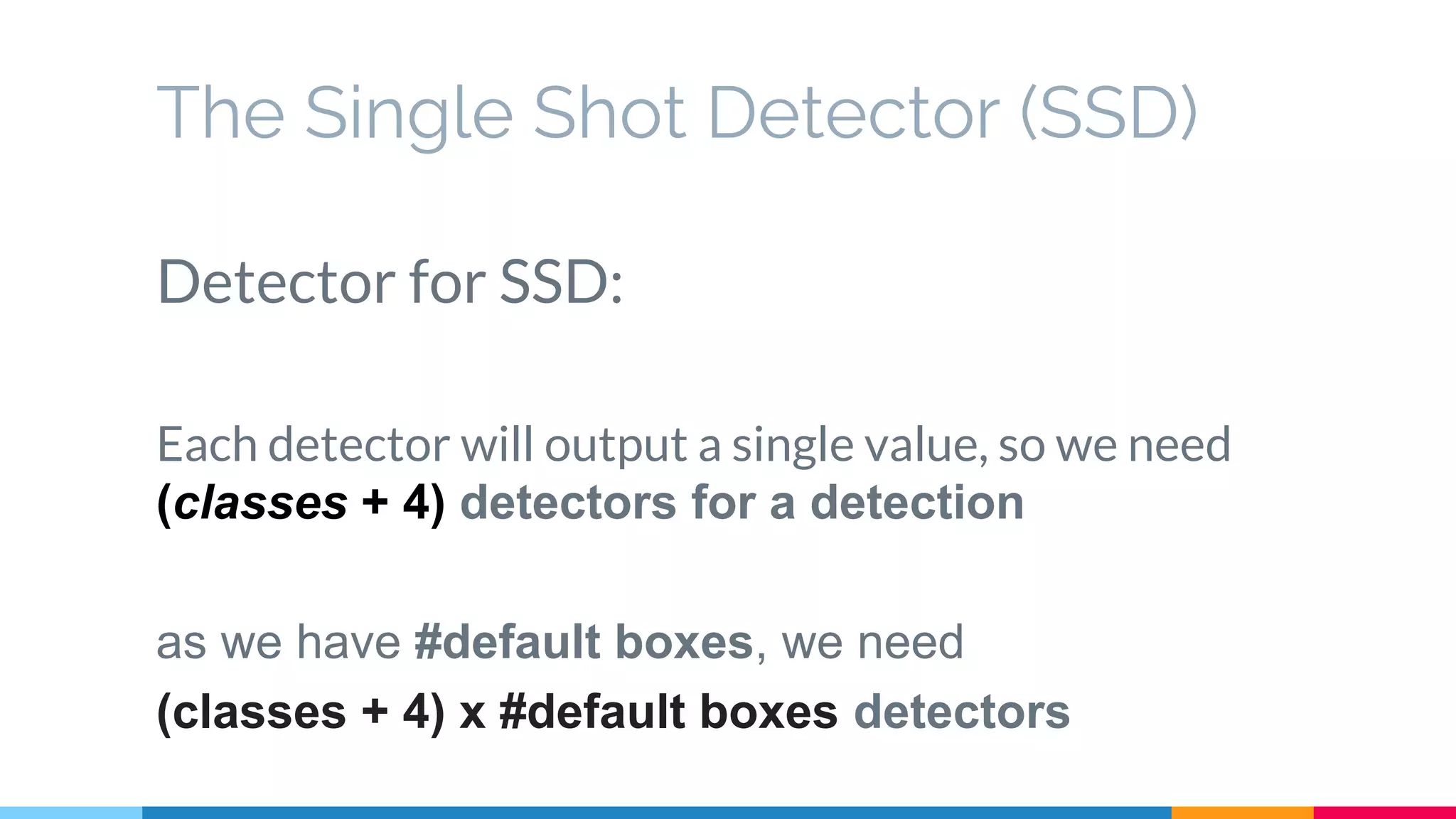

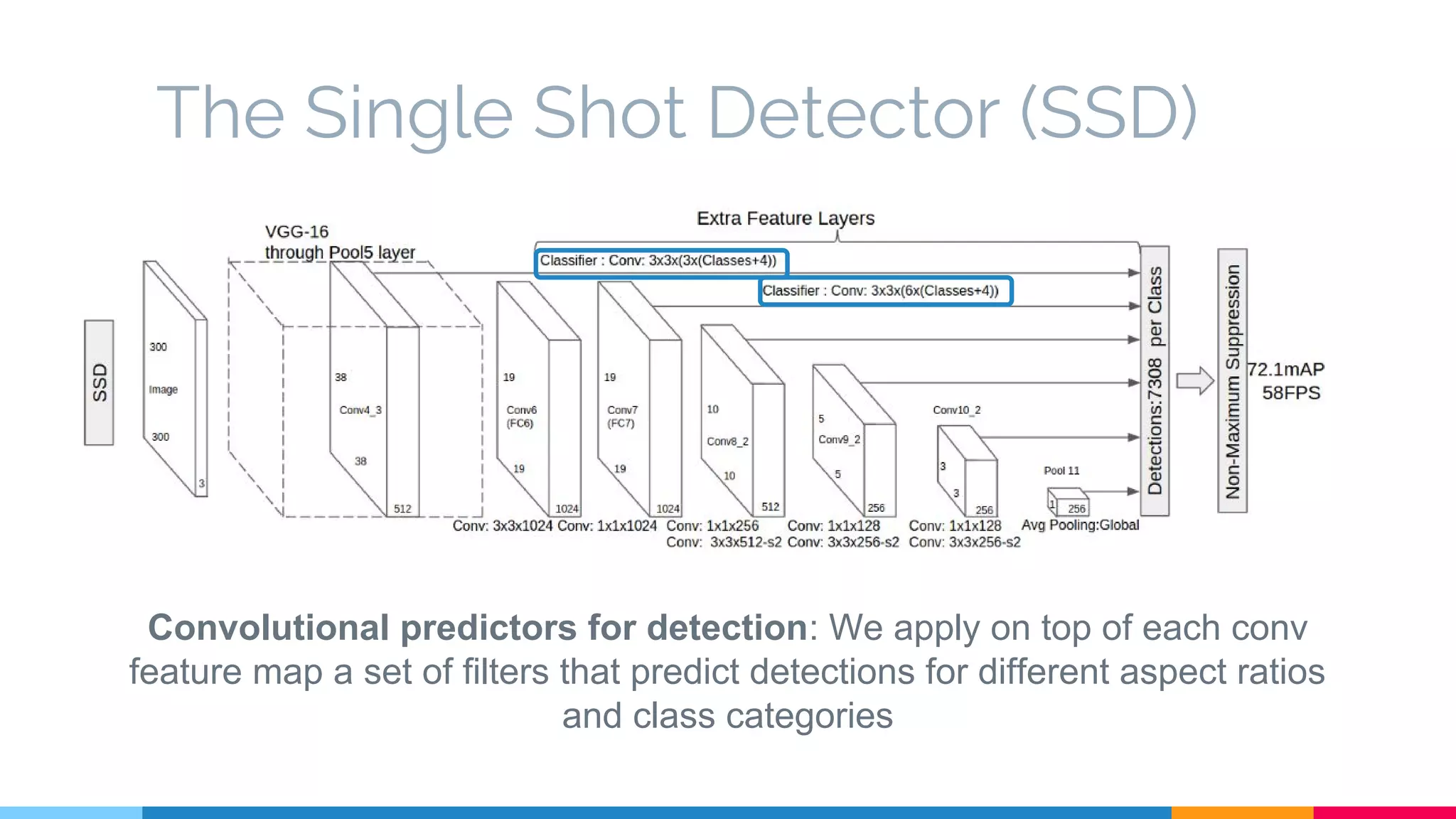

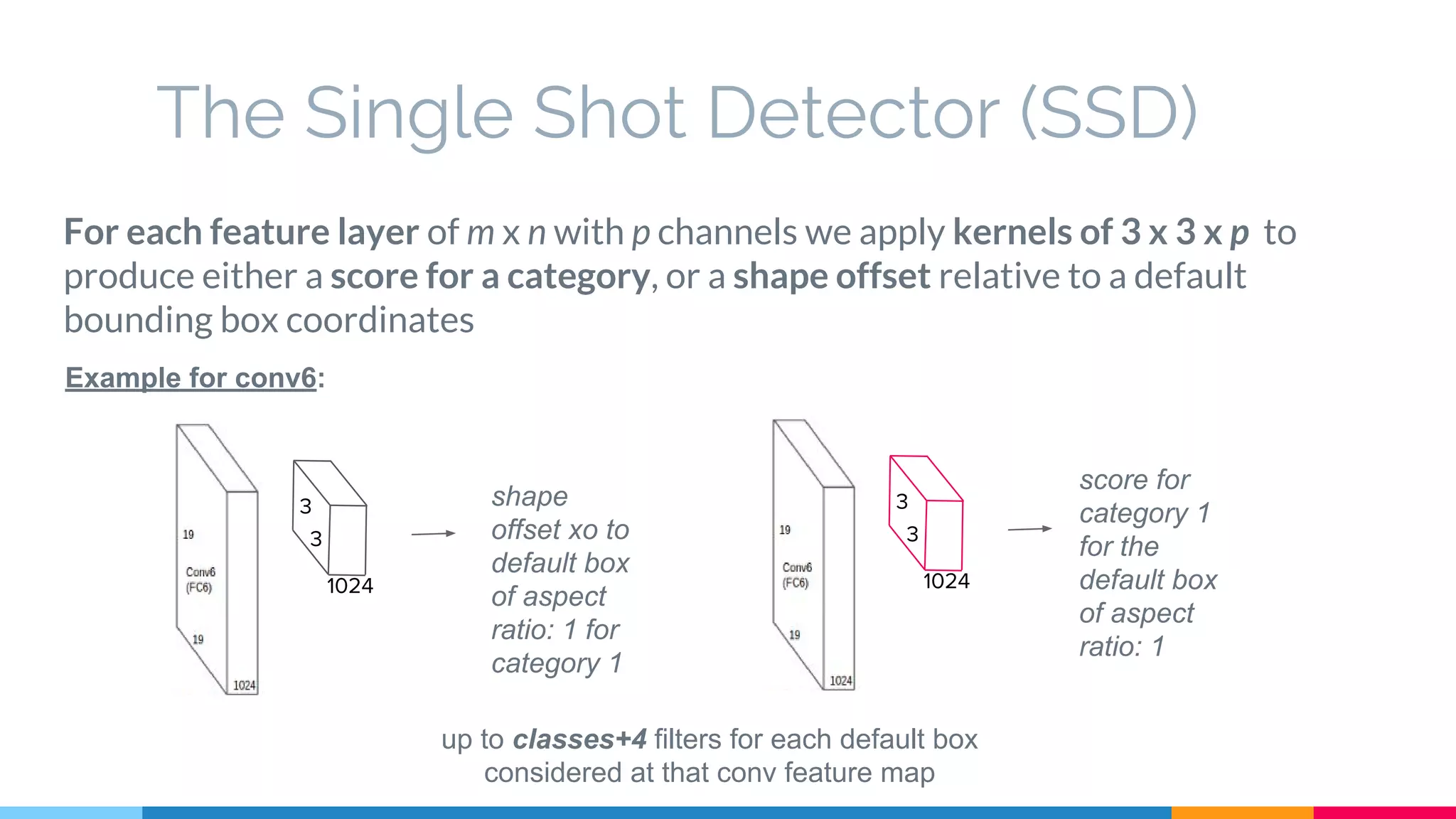

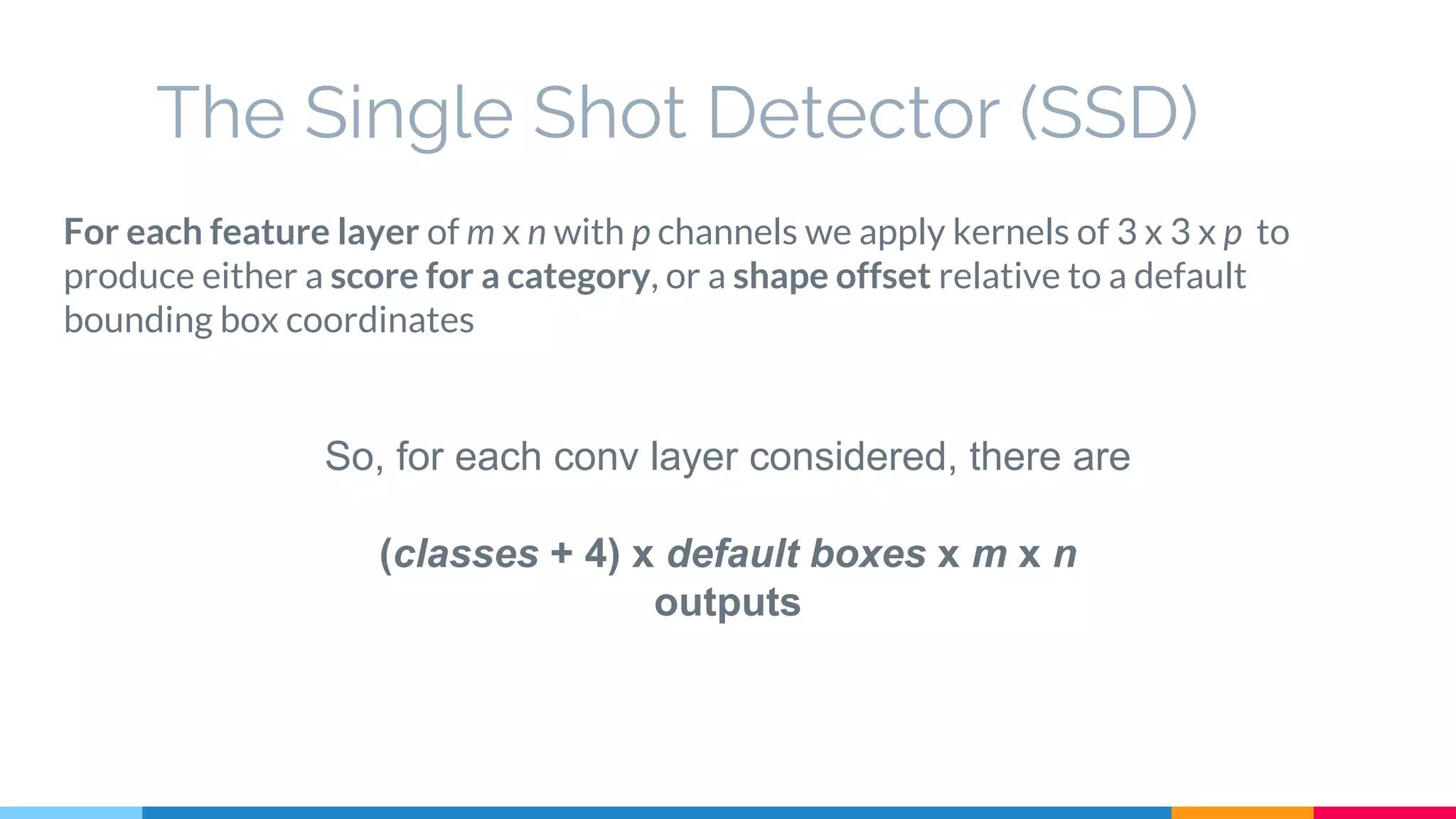

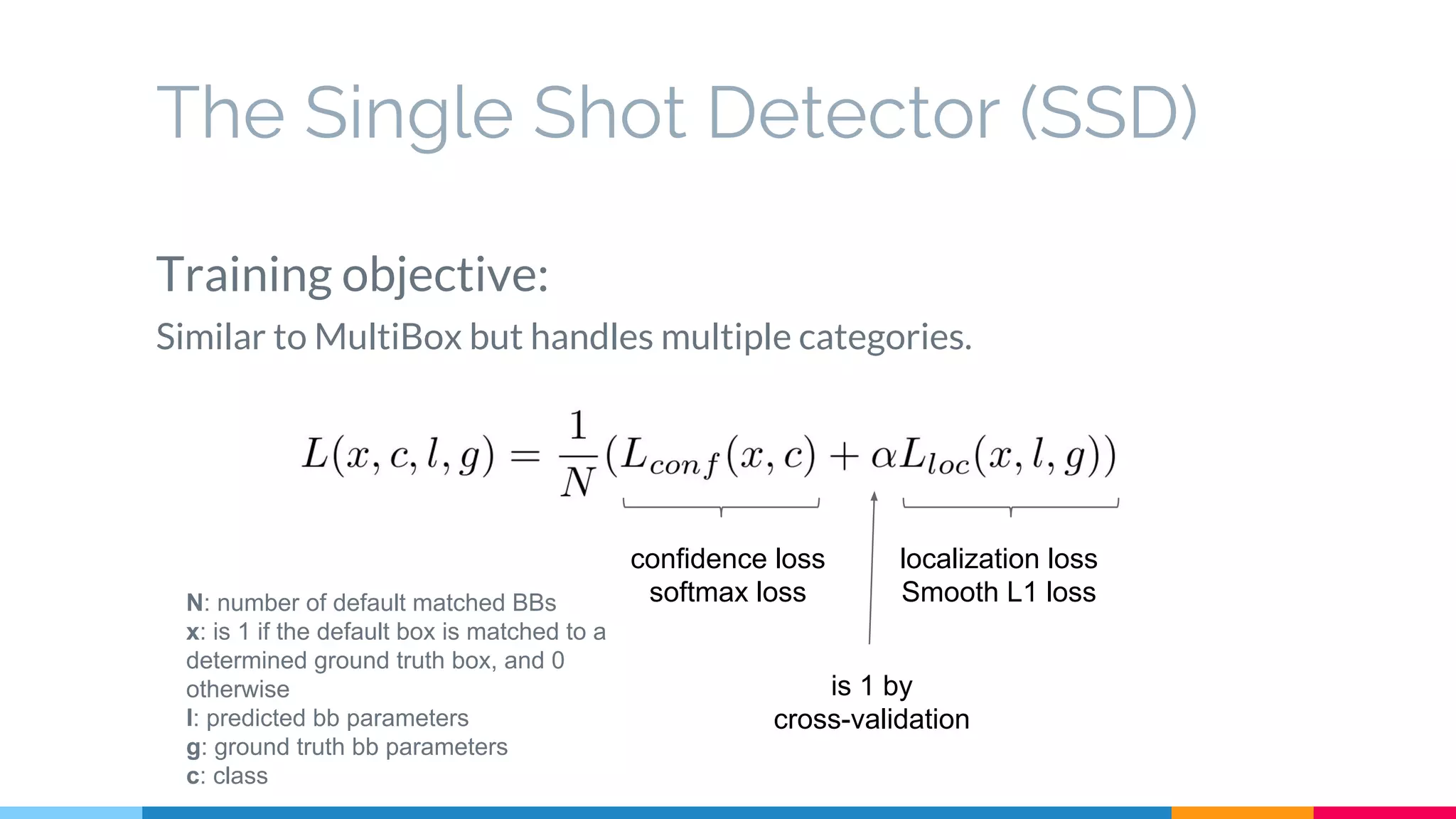

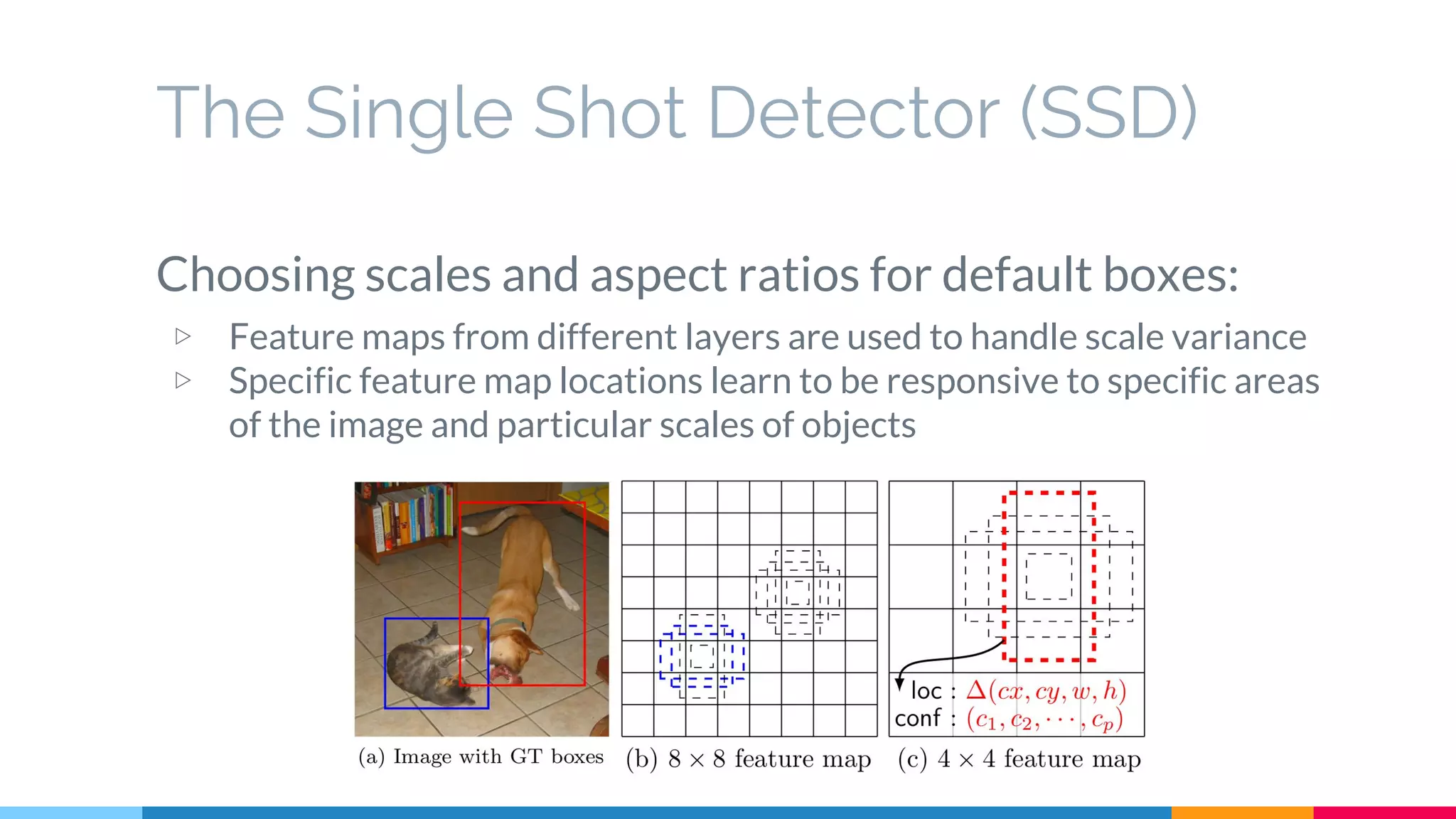

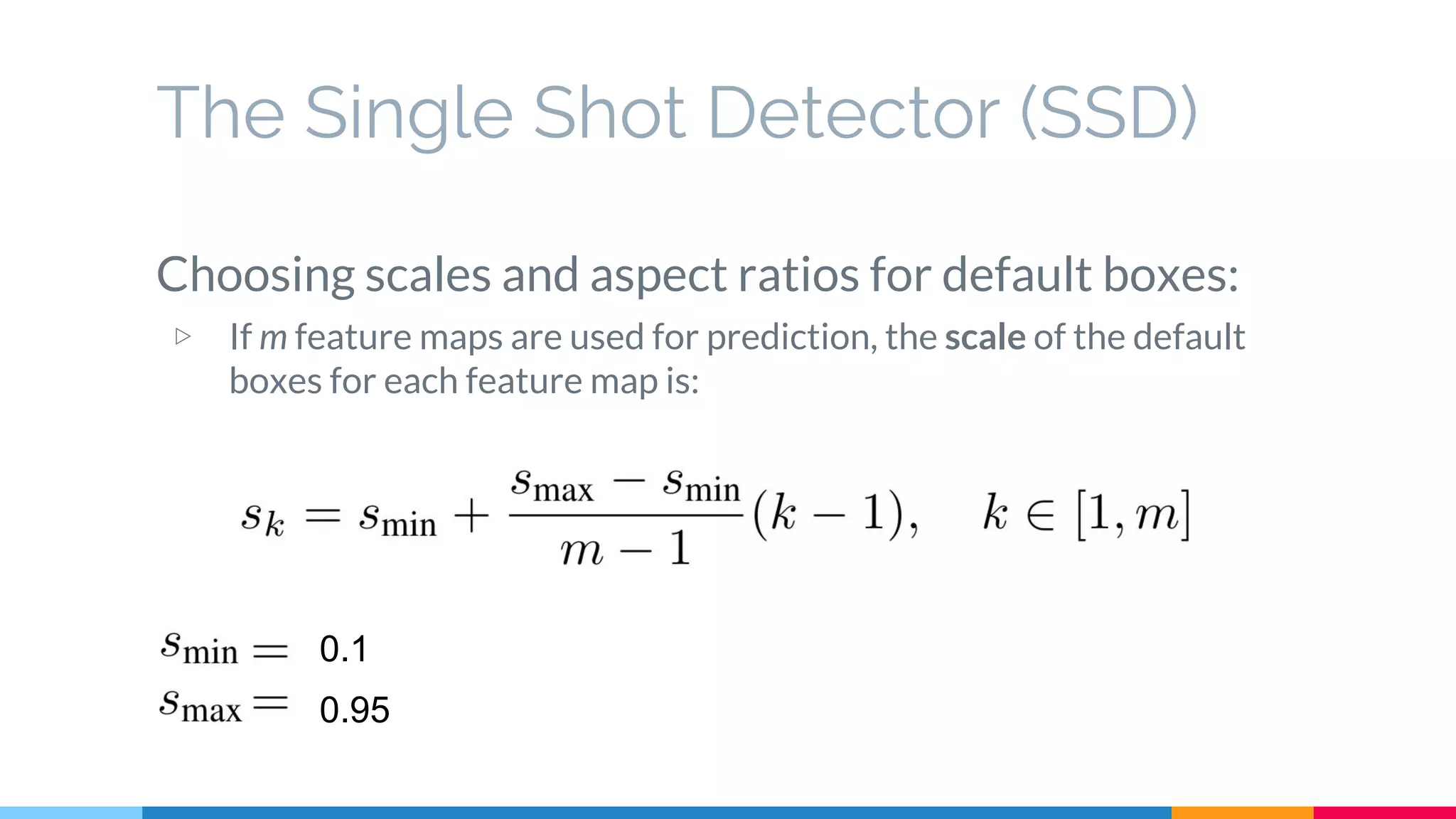

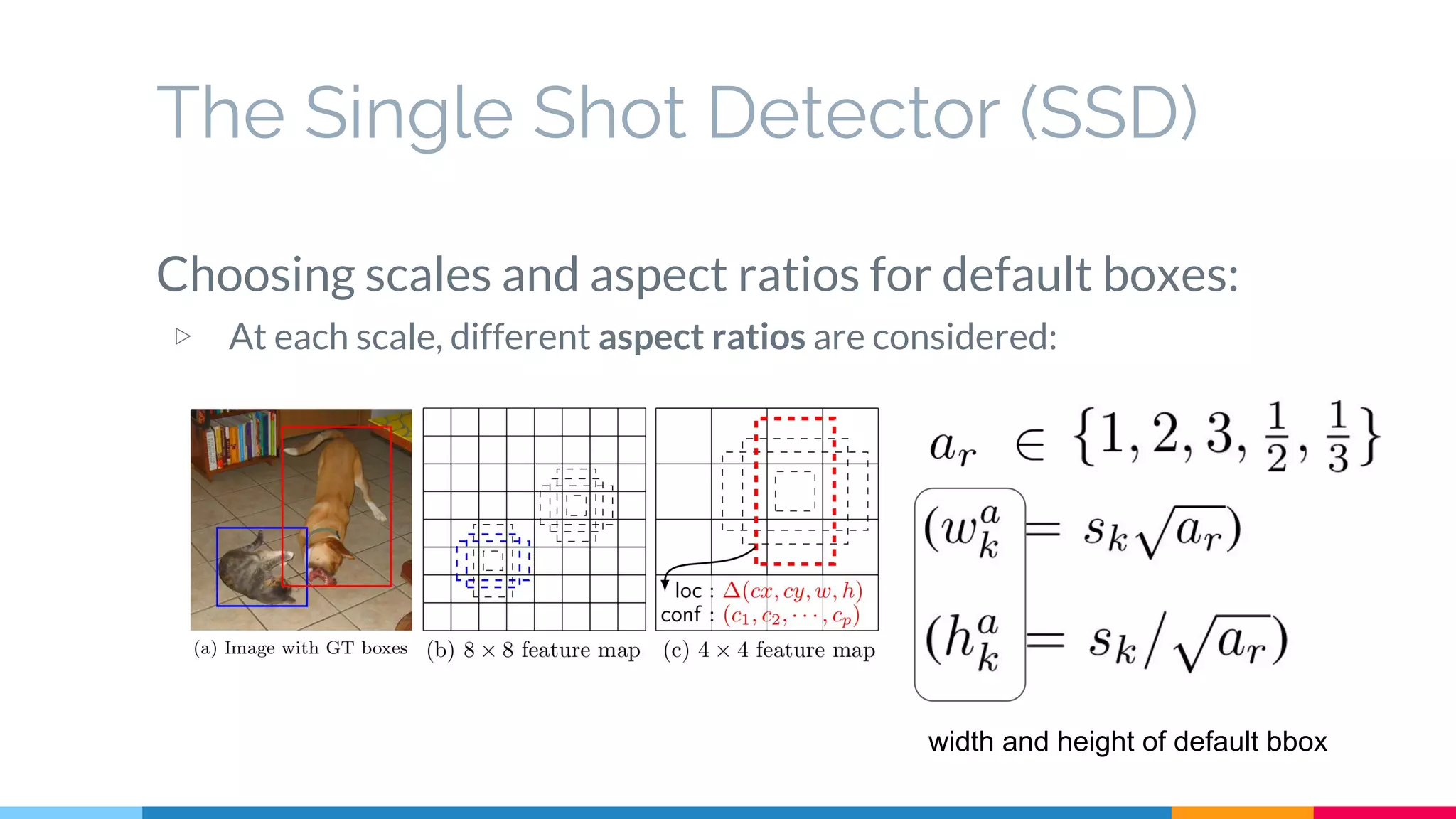

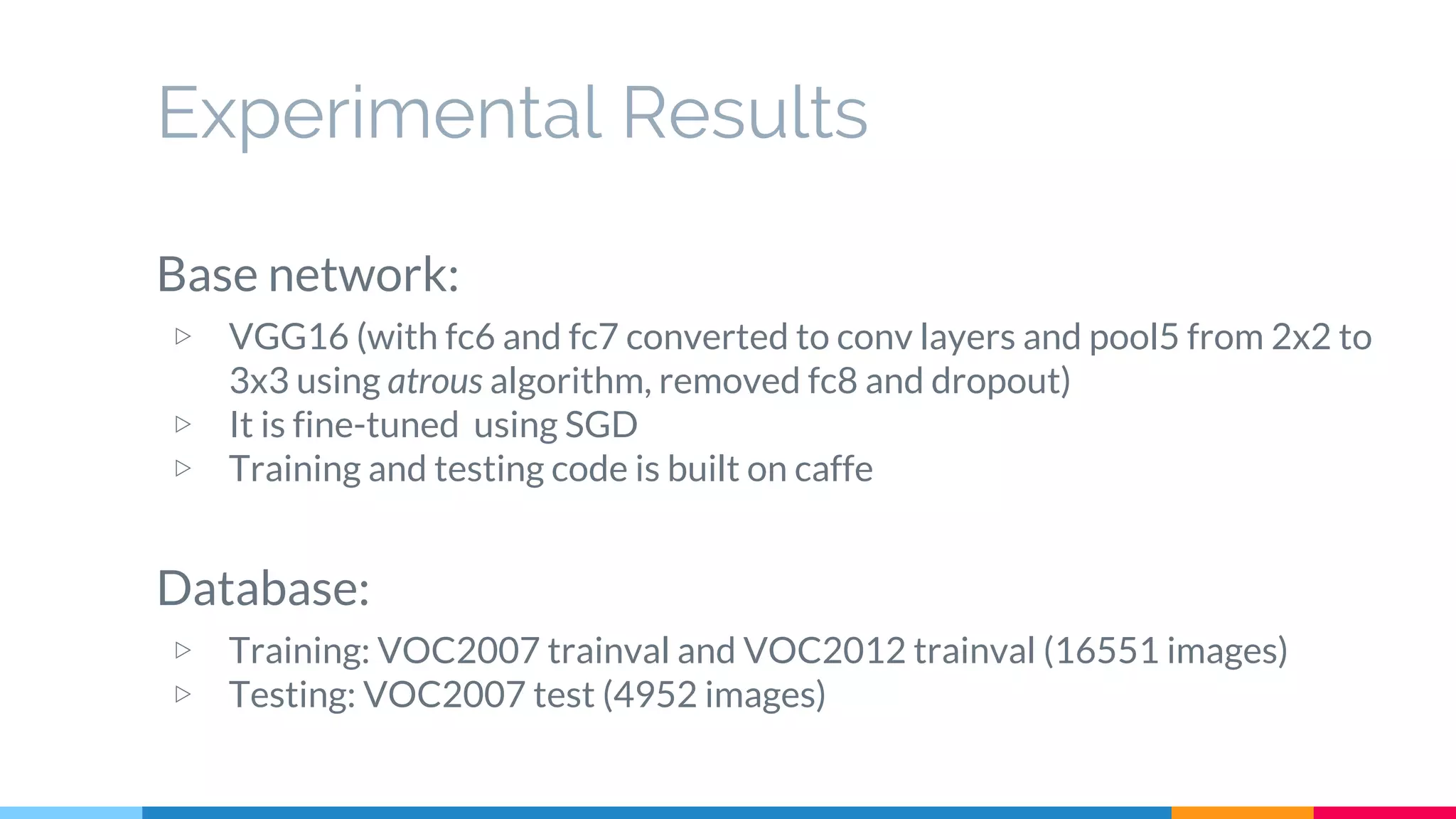

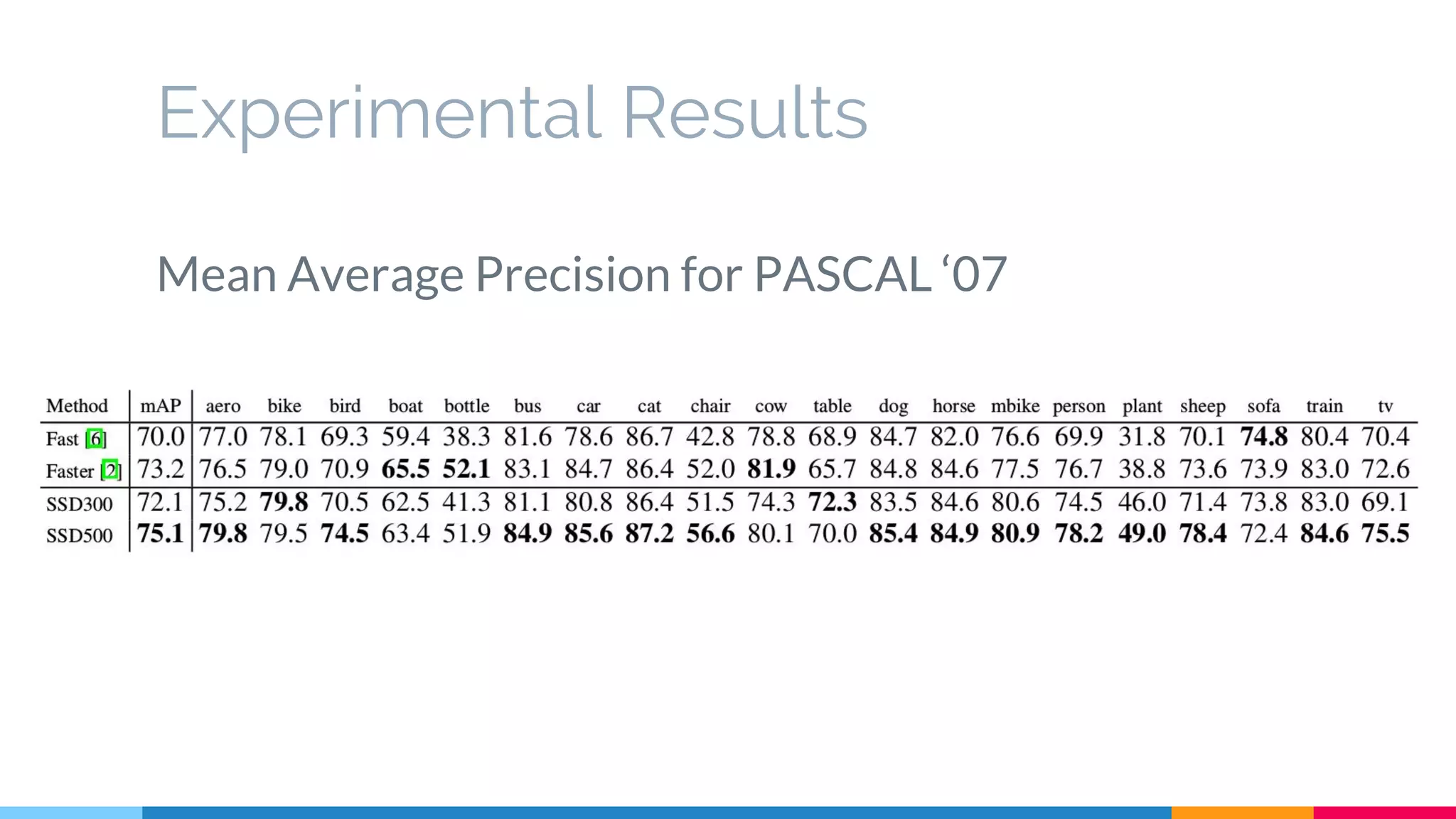

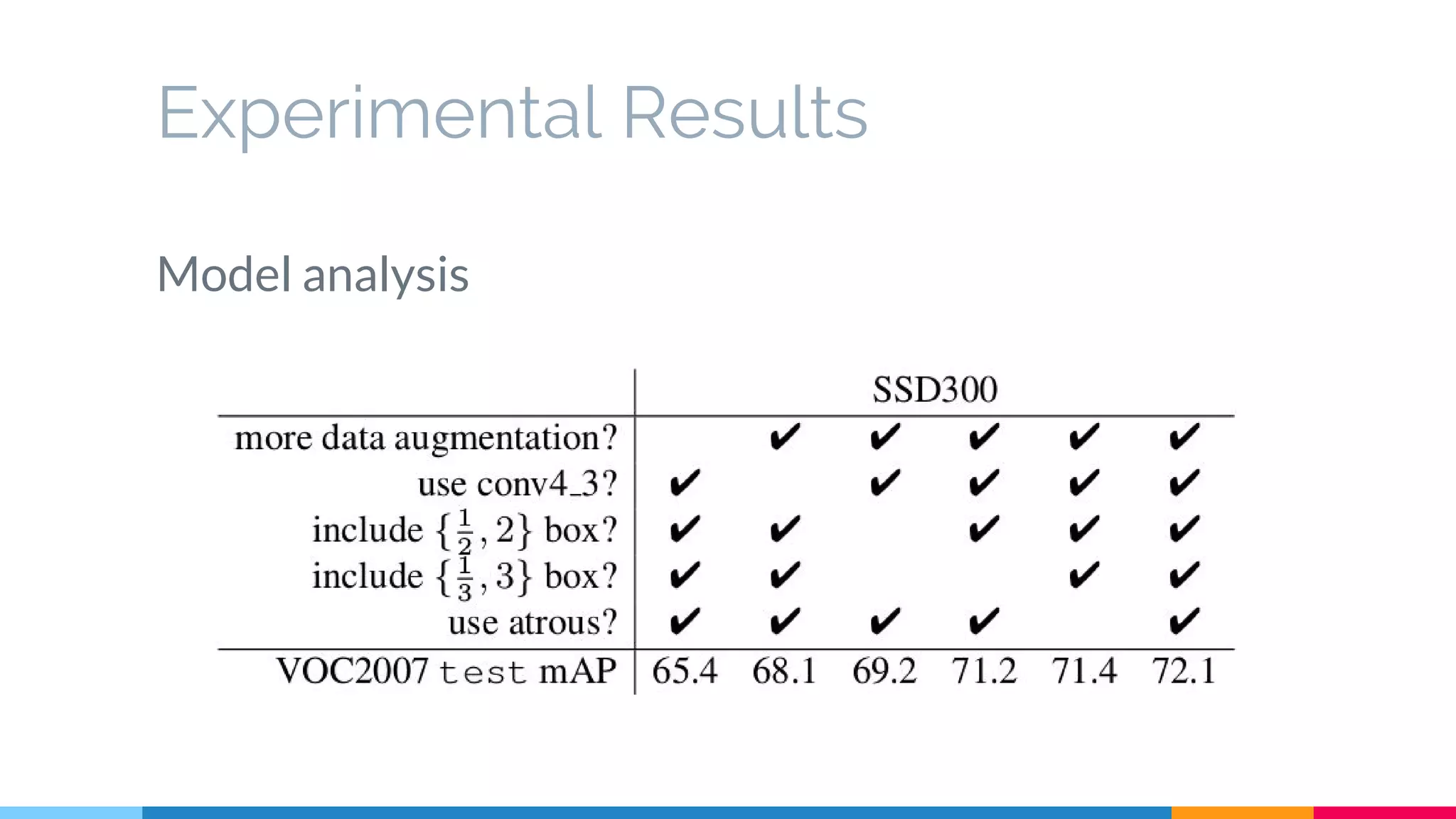

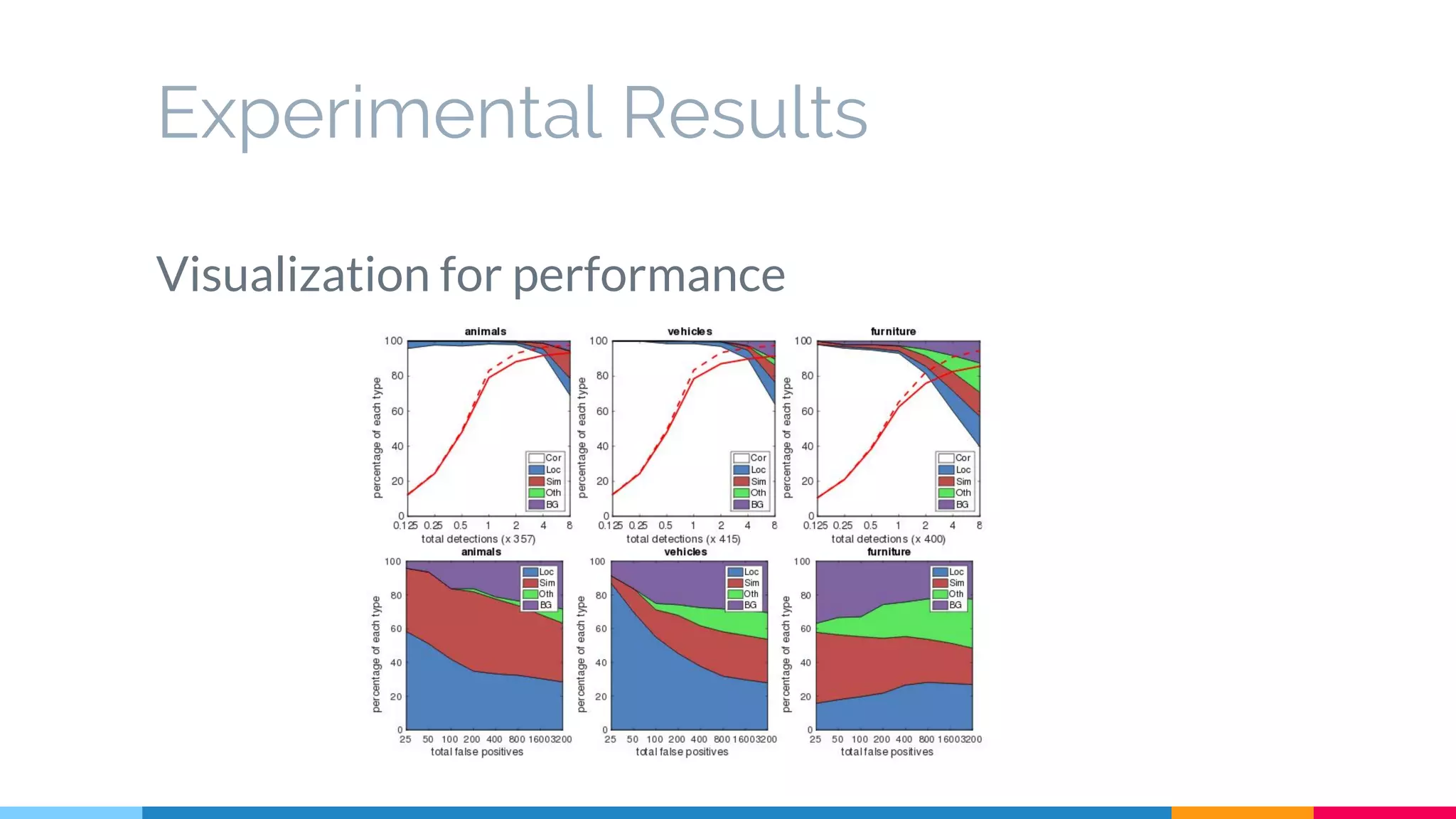

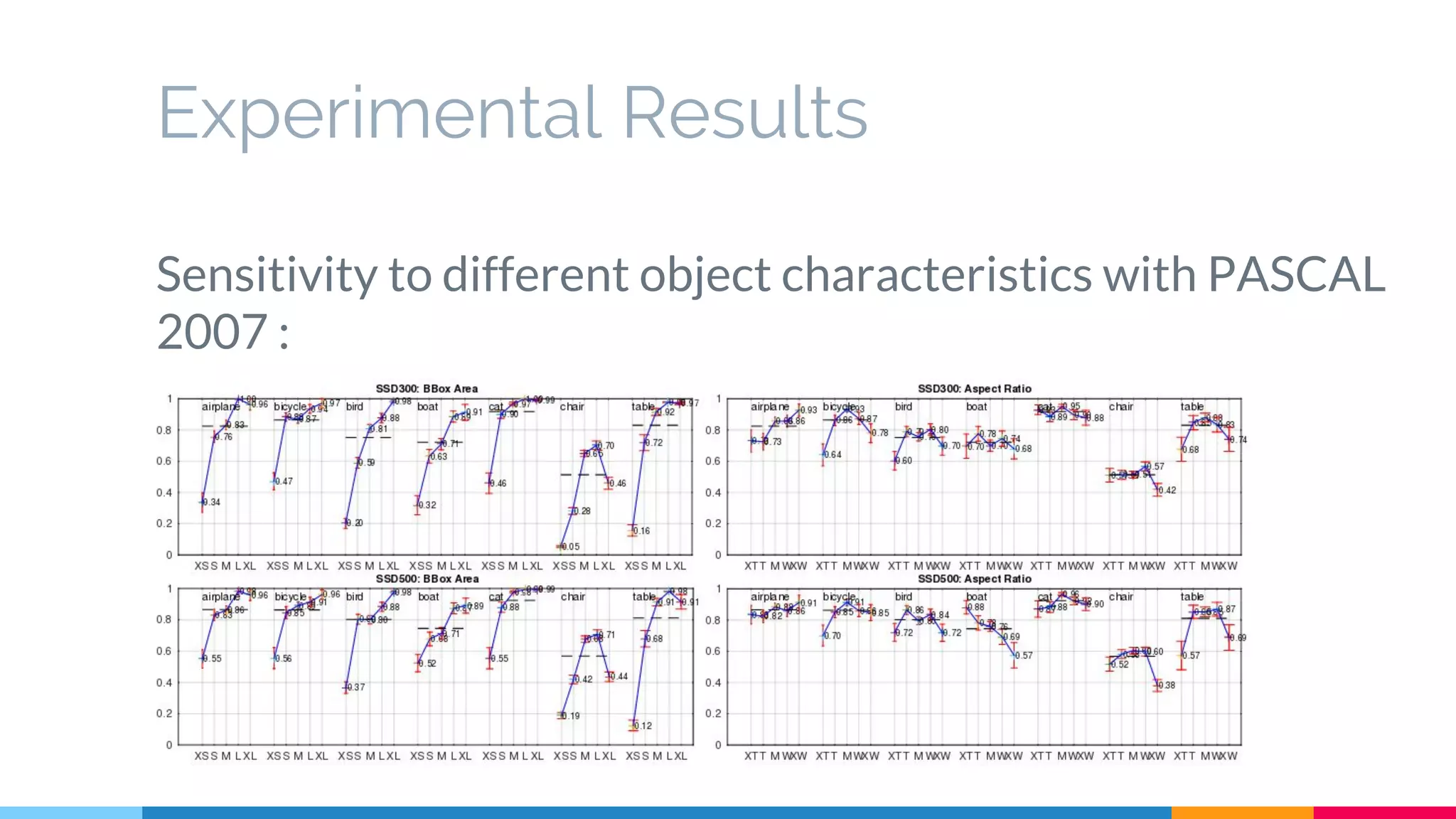

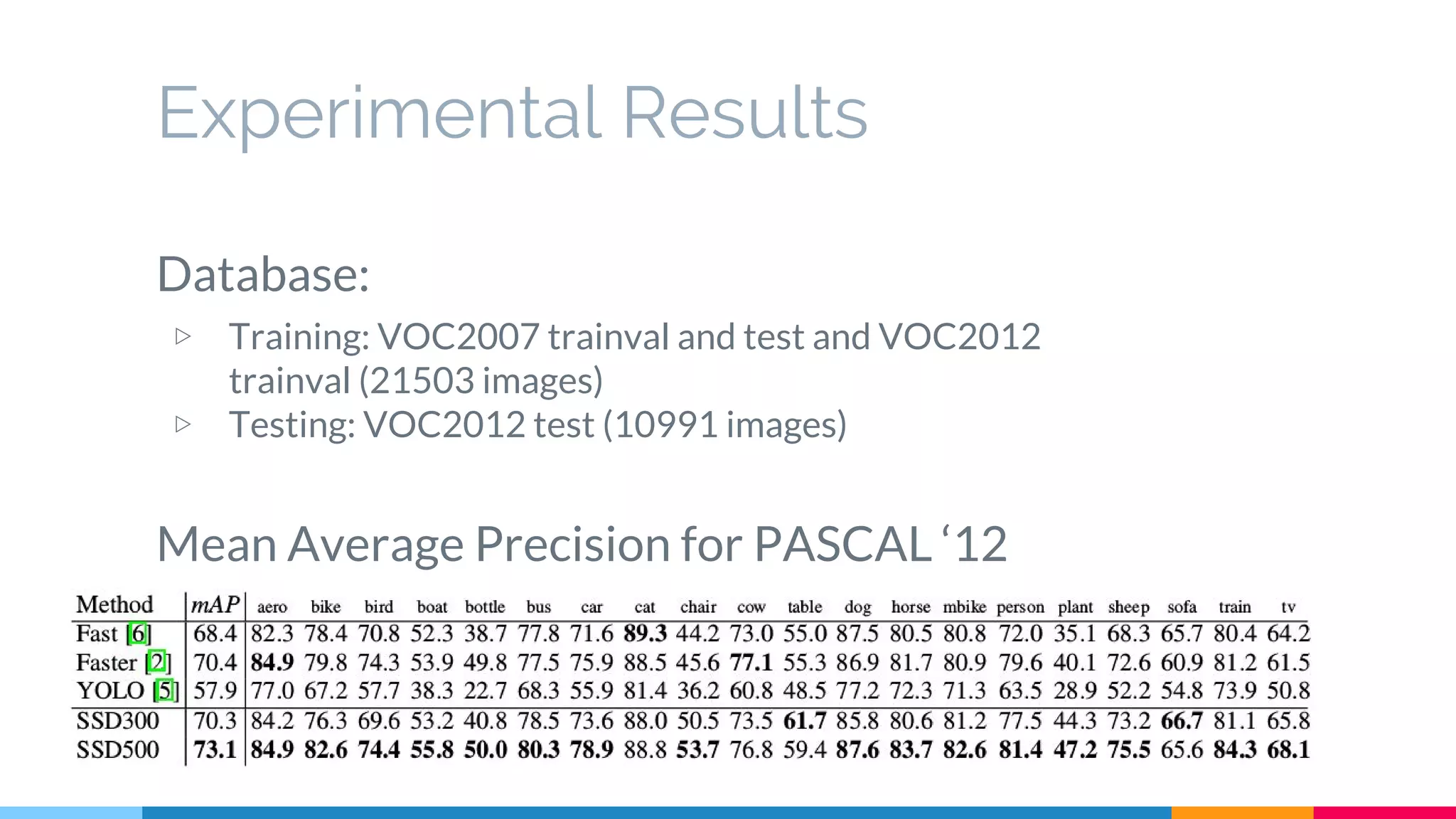

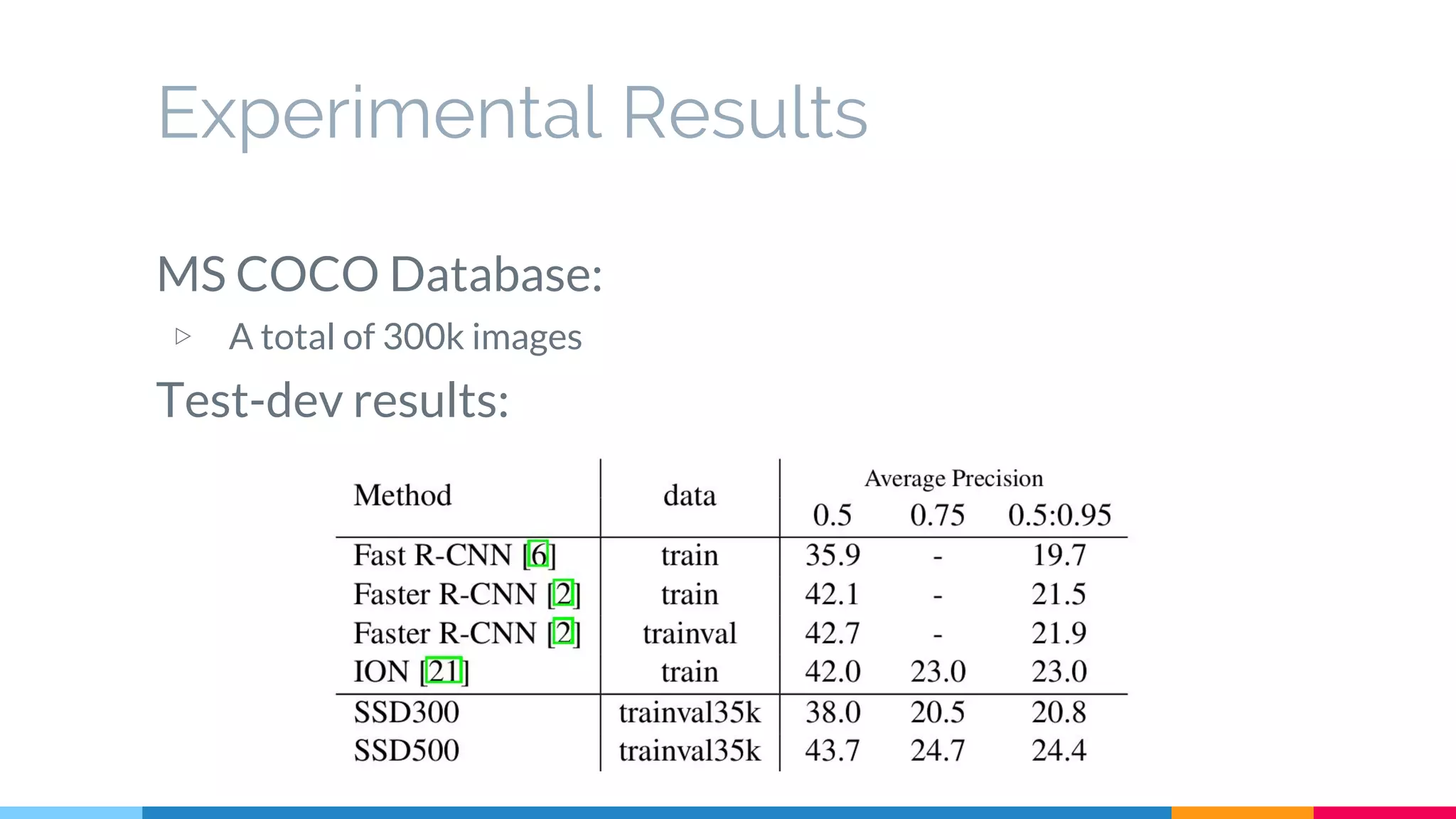

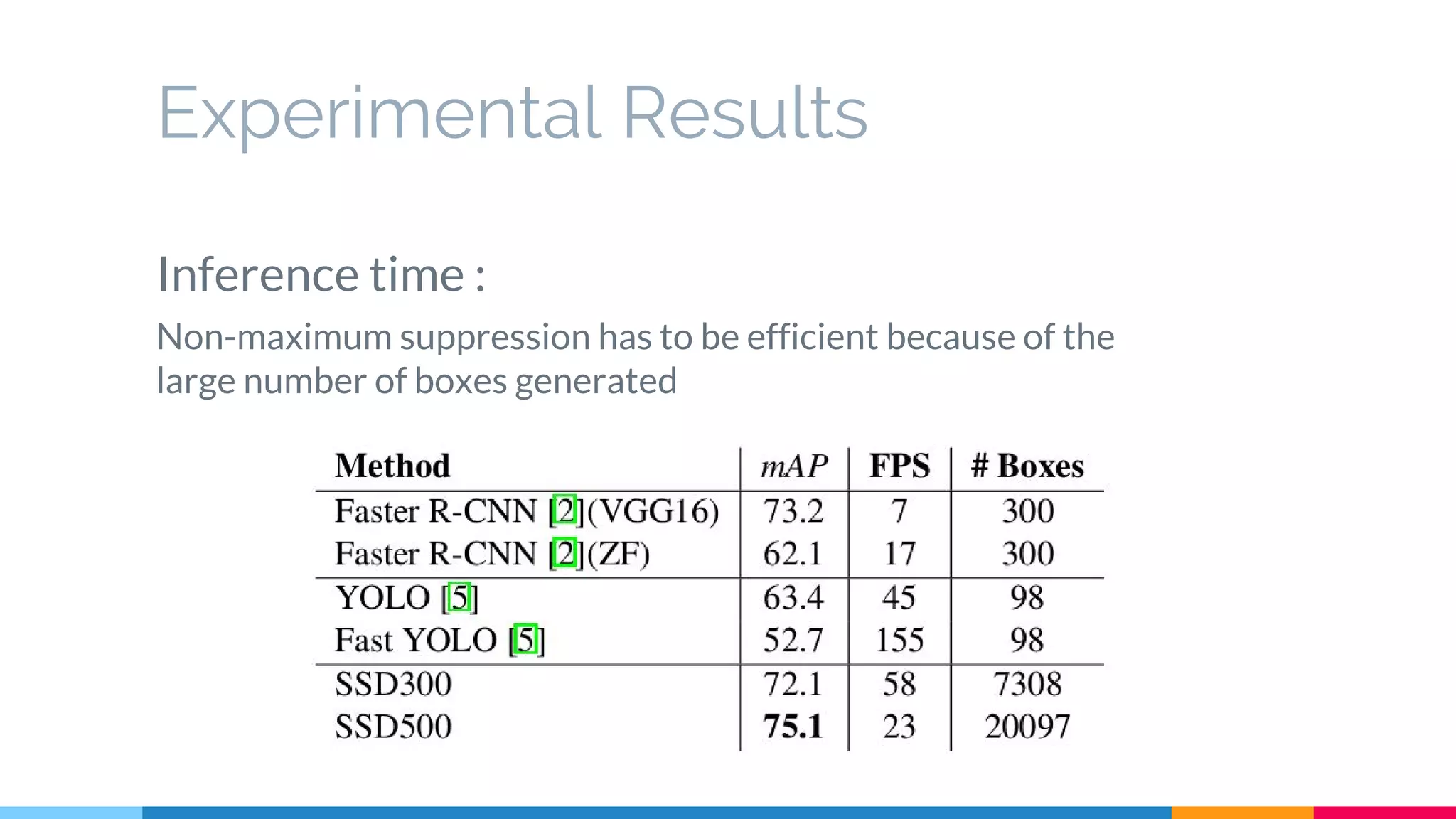

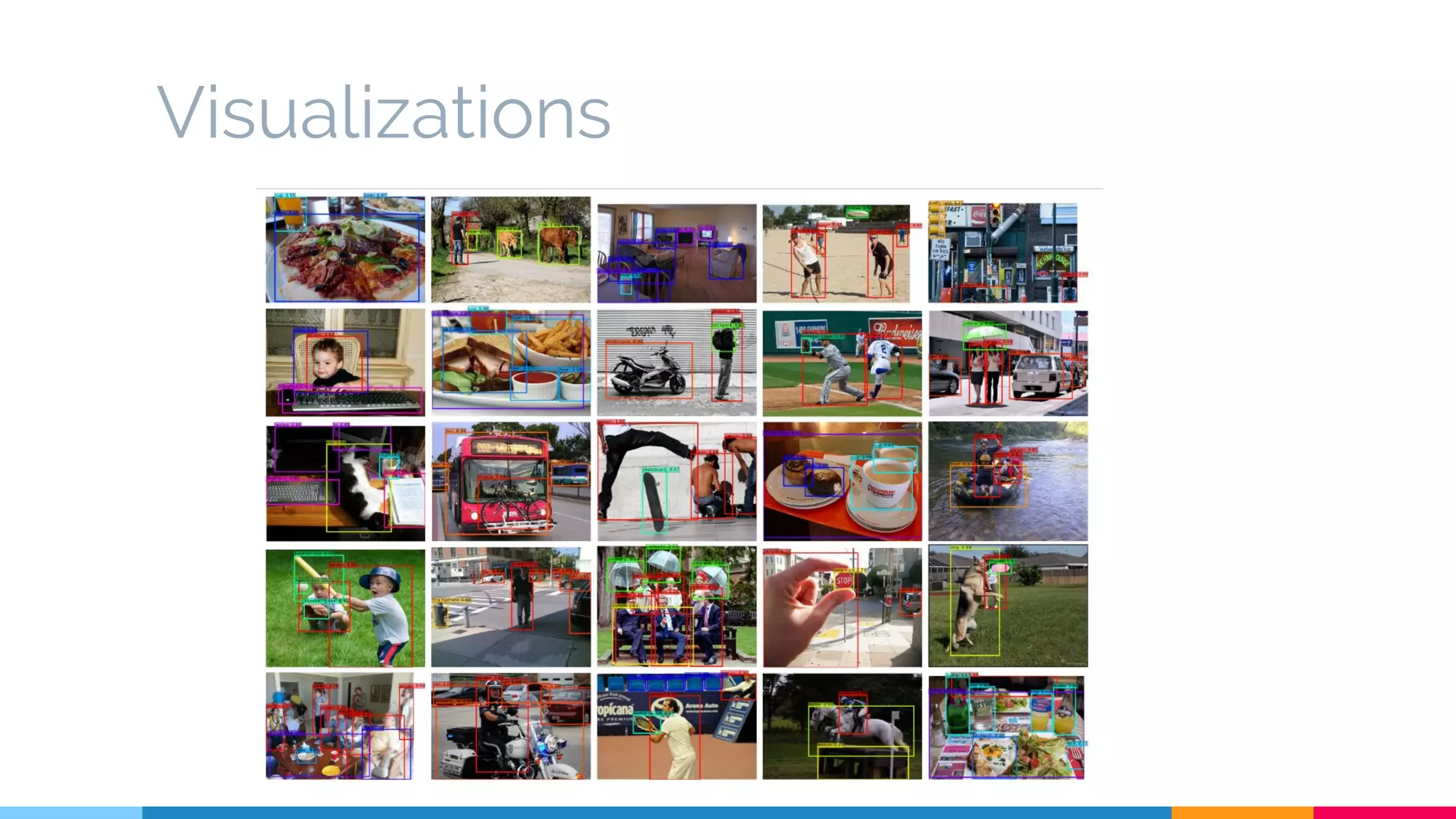

SSD (Single Shot MultiBox Detector) is a deep learning-based object detection framework that predicts bounding boxes and category scores in a single forward pass of the network, with no separate region-proposal stage. This makes it substantially faster than two-stage detectors such as Faster R-CNN while retaining comparable accuracy, and more accurate than the original YOLO. It applies small convolutional filters to feature maps at several scales, using a fixed set of default boxes with different aspect ratios at each location, so that objects of different sizes and shapes can be detected. The reported experiments support both the speed and the accuracy claims, making SSD a strong candidate for real-time object detection.
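To make the multi-scale idea concrete, here is a minimal sketch of how a fixed grid of boxes could be laid out over several feature maps. The summary above does not give these numbers: the feature map sizes, aspect ratios, and scale range are assumed from the SSD300 configuration described in the SSD paper, and the function name `default_boxes` is hypothetical. Released implementations differ in some details (for example, the scale used on the first layer).

```python
import math

def default_boxes(feature_map_sizes, aspect_ratios_per_map, s_min=0.2, s_max=0.9):
    """Return (cx, cy, w, h) boxes in normalized [0, 1] image coordinates.

    Hypothetical helper: s_min and s_max are the scale range assumed
    from the SSD paper, not values stated in the summary.
    """
    m = len(feature_map_sizes)
    boxes = []
    for k, (fsize, ratios) in enumerate(zip(feature_map_sizes, aspect_ratios_per_map)):
        # Scale grows linearly from s_min (finest map) to s_max (coarsest map).
        s_k = s_min + (s_max - s_min) * k / (m - 1)
        s_next = s_min + (s_max - s_min) * (k + 1) / (m - 1)
        for i in range(fsize):
            for j in range(fsize):
                # Box centers sit at the center of each feature map cell.
                cx = (j + 0.5) / fsize
                cy = (i + 0.5) / fsize
                for ar in ratios:
                    boxes.append((cx, cy, s_k * math.sqrt(ar), s_k / math.sqrt(ar)))
                # One extra square box at an intermediate scale.
                s_extra = math.sqrt(s_k * s_next)
                boxes.append((cx, cy, s_extra, s_extra))
    return boxes

# Assumed SSD300-style layout: six feature maps, from 38x38 down to 1x1.
SIZES = [38, 19, 10, 5, 3, 1]
RATIOS = [
    [1, 2, 1 / 2],
    [1, 2, 1 / 2, 3, 1 / 3],
    [1, 2, 1 / 2, 3, 1 / 3],
    [1, 2, 1 / 2, 3, 1 / 3],
    [1, 2, 1 / 2],
    [1, 2, 1 / 2],
]

boxes = default_boxes(SIZES, RATIOS)
print(len(boxes))  # 8732
```

Under these assumptions the network scores every one of the 8732 boxes in one pass: for each box, a small convolutional filter bank outputs one score per category plus four box offsets. Fine feature maps contribute many small boxes, and coarse maps contribute a few large ones.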

![SSD: Single Shot MultiBox Detector. Wei Liu, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, Alexander C. Berg. arXiv, demo, code (Mar 2016). Slides by Míriam Bellver, Computer Vision Reading Group, UPC, 28th October 2016](https://image.slidesharecdn.com/05-singleshotmultiboxdetector-161028144820/75/SSD-Single-Shot-MultiBox-Detector-UPC-Reading-Group-1-2048.jpg)