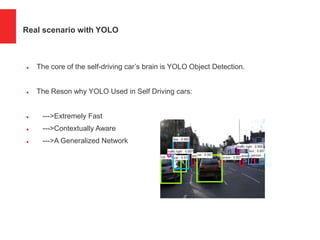

This document discusses the YOLO (You Only Look Once) object detection algorithm and its application to real-time object detection. YOLO frames object detection as a single regression problem, predicting bounding boxes and class probabilities directly from the full image in one forward pass. It can process images at 30 FPS. The document compares YOLO versions 1 through 3 and the improvements each made in small-object detection, input resolution, and generalization. It also describes implementing YOLO with OpenCV and its use in self-driving cars, where its speed and use of whole-image context are valuable.
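
To make the "detection as regression" idea concrete, the following is a minimal sketch of how one grid cell's raw output could be decoded into a box and a class score. It assumes the encoding used by the first YOLO version (center offsets relative to the cell, width and height relative to the image, score as confidence times class probability) and simplifies to one box per cell. The names `Detection` and `decode_cell` and the vector layout are illustrative assumptions, not taken from the summarized document or from OpenCV.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    class_id: int
    score: float


def decode_cell(pred, row, col, grid_size, img_w, img_h):
    """Decode one grid cell's prediction into an absolute box (hypothetical helper).

    Assumed layout: pred = [x, y, w, h, confidence, p_class0, p_class1, ...]
    x, y are offsets inside the cell in [0, 1]; w, h are fractions of the image.
    """
    x, y, w, h, conf = pred[:5]
    class_probs = pred[5:]

    # Box center: cell index plus in-cell offset, scaled to pixels.
    cx = (col + x) / grid_size * img_w
    cy = (row + y) / grid_size * img_h

    # Box size is regressed relative to the whole image.
    bw = w * img_w
    bh = h * img_h

    # Class-specific score: box confidence times the best class probability.
    class_id = max(range(len(class_probs)), key=class_probs.__getitem__)
    score = conf * class_probs[class_id]

    return Detection(cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2,
                     class_id, score)
```

Under these assumptions, decoding `[0.5, 0.5, 0.25, 0.5, 0.8, 0.1, 0.9]` for the center cell (row 3, column 3) of a 7x7 grid on a 448x448 image gives a box spanning (168, 112) to (280, 336) assigned to class 1. A full pipeline would apply this to every cell and then filter overlapping boxes, which this sketch omits.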