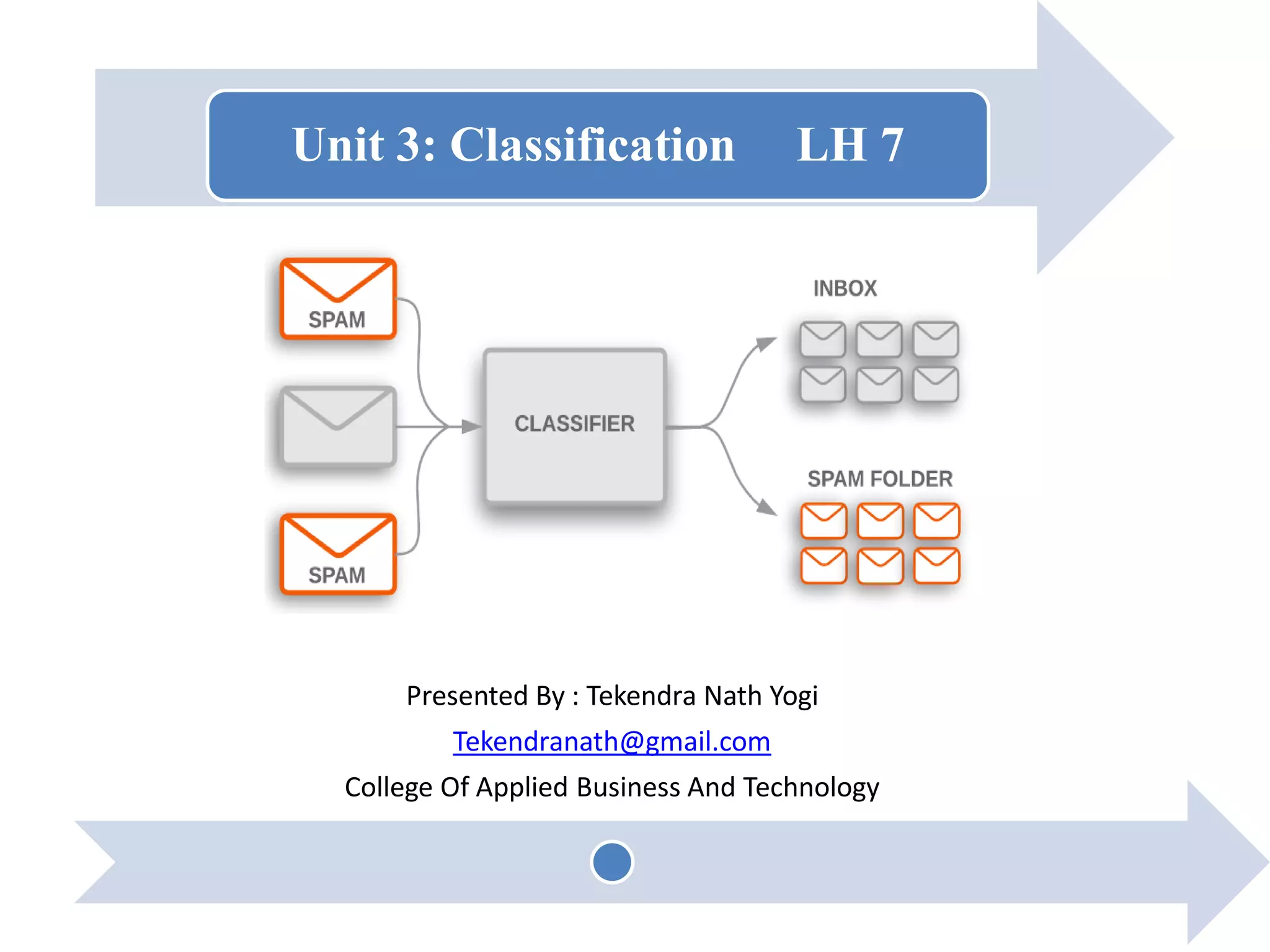

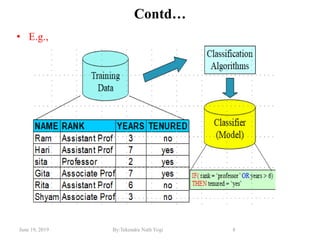

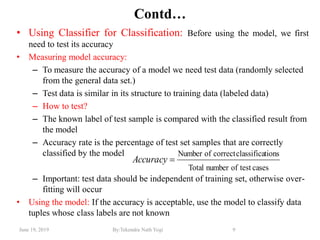

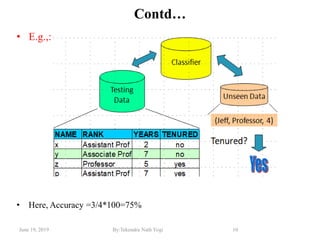

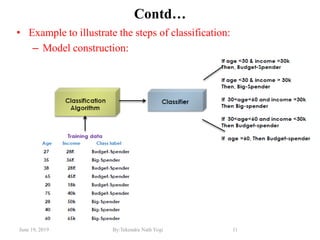

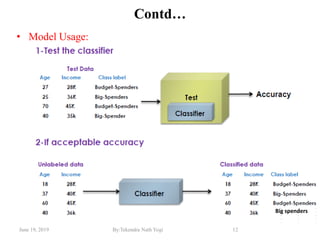

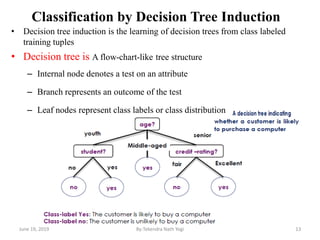

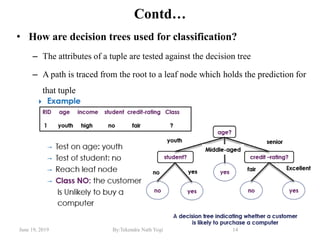

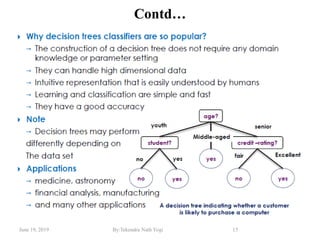

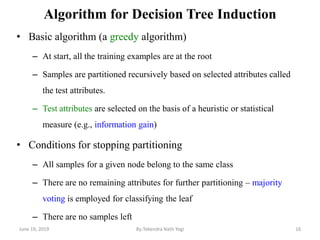

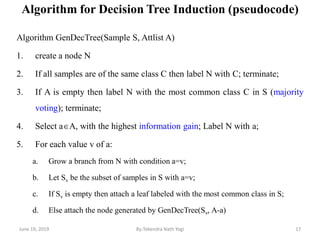

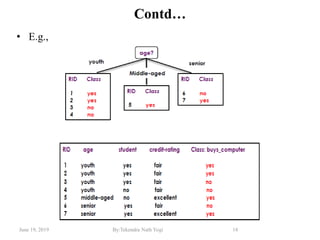

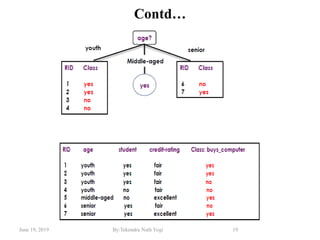

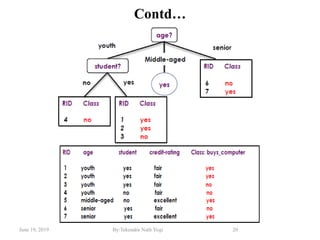

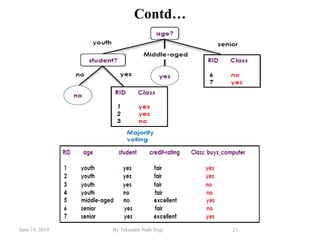

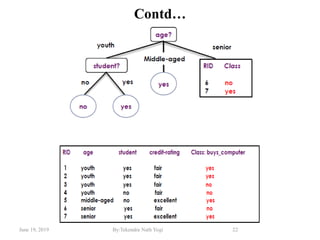

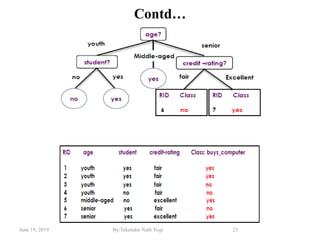

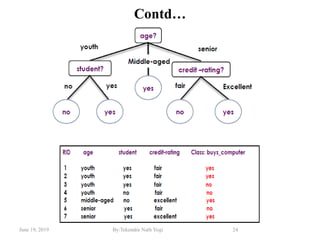

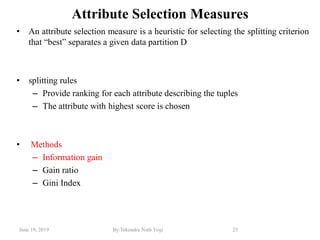

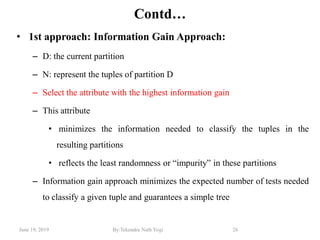

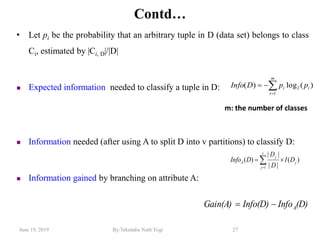

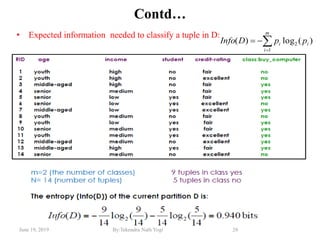

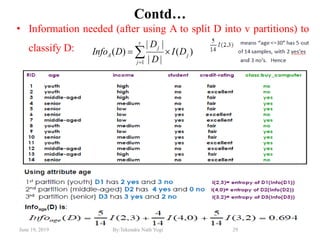

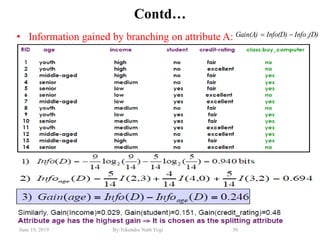

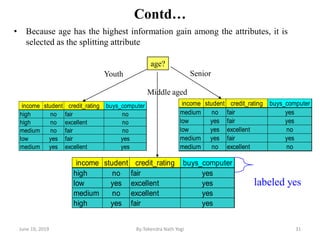

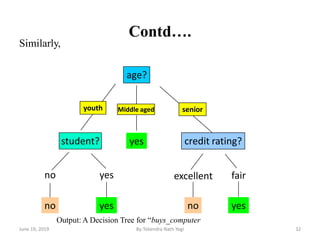

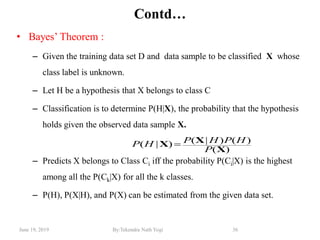

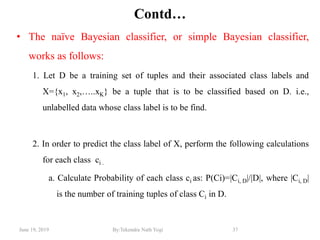

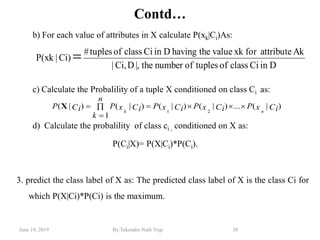

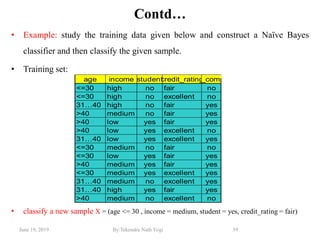

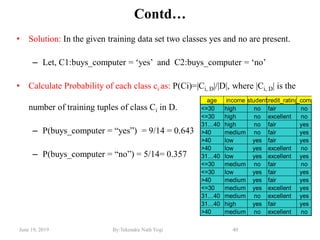

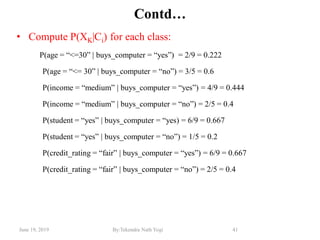

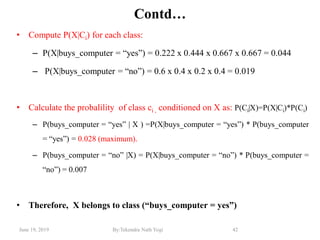

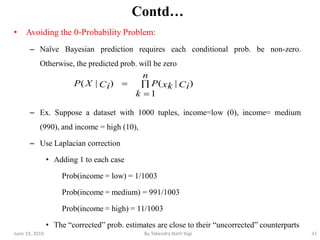

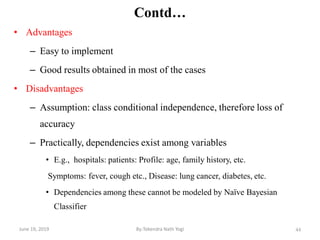

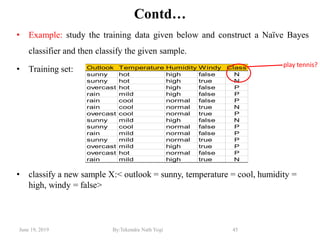

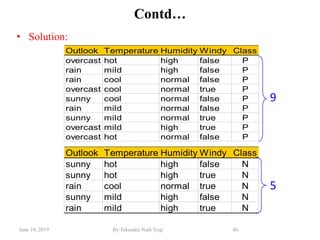

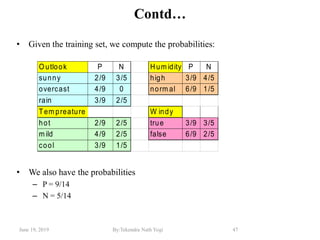

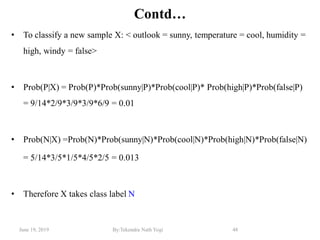

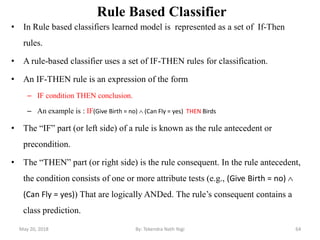

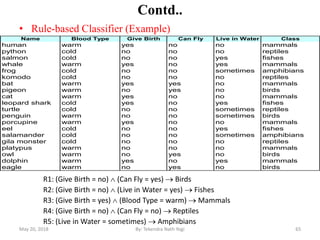

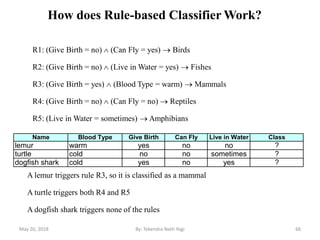

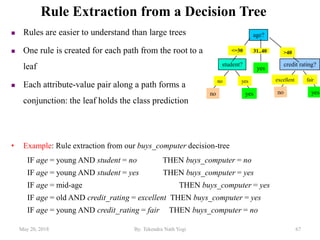

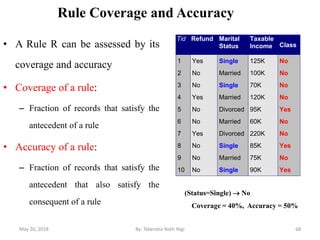

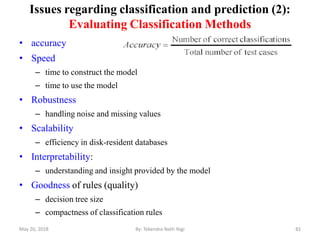

The document covers classification techniques used in data analysis: decision tree classifiers, rule-based classifiers, and Bayesian classifiers. It explains when to use classification rather than prediction, how a classifier is built, and why model accuracy should be tested to guard against overfitting. It also weighs the advantages and disadvantages of decision tree methods and introduces the basic concepts of naive Bayes classification.
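
As a rough illustration of two ideas named in the summary, naive Bayes classification and checking accuracy on data held out from training, the sketch below may help. It is not taken from the slides: the function names (`train_naive_bayes`, `predict`, `accuracy`), the toy weather-style rows, and the use of add-one (Laplace) smoothing are all assumptions made for this example.

```python
from collections import Counter, defaultdict
import math


def train_naive_bayes(rows, labels):
    """Count class frequencies and per-class attribute-value frequencies."""
    class_counts = Counter(labels)
    # value_counts[(attribute_index, class_label)][value] -> count
    value_counts = defaultdict(Counter)
    # Distinct values seen per attribute, needed for add-one smoothing.
    domains = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            domains[i].add(value)
    return class_counts, value_counts, domains


def predict(model, row):
    """Return the class with the highest log posterior under the naive
    (conditional independence) assumption."""
    class_counts, value_counts, domains = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in class_counts.items():
        score = math.log(n / total)  # log prior P(class)
        for i, value in enumerate(row):
            count = value_counts[(i, label)][value]
            # Add-one smoothing (an assumption of this sketch) avoids log(0).
            score += math.log((count + 1) / (n + len(domains[i])))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def accuracy(model, rows, labels):
    """Fraction of rows whose predicted label matches the true label."""
    correct = sum(predict(model, r) == y for r, y in zip(rows, labels))
    return correct / len(labels)


# Invented toy data: (outlook, windy) -> play. Not from the source.
train_rows = [("sunny", "no"), ("sunny", "yes"), ("rainy", "no"), ("rainy", "yes")]
train_labels = ["yes", "yes", "no", "no"]
test_rows = [("sunny", "yes"), ("rainy", "no")]
test_labels = ["yes", "no"]

model = train_naive_bayes(train_rows, train_labels)
print("training accuracy:", accuracy(model, train_rows, train_labels))
print("held-out accuracy:", accuracy(model, test_rows, test_labels))
```

Comparing the two printed figures is the point of the held-out split: a model that scores much higher on its training rows than on unseen rows is likely overfitting. With data this small the comparison is only illustrative.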