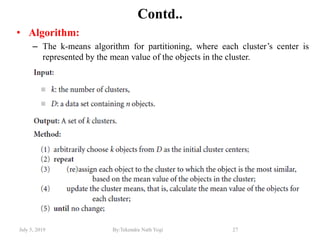

This document summarizes a presentation on cluster analysis given by Tekendra Nath Yogi. It defines cluster analysis and describes several clustering methods and algorithms, including k-means clustering. It also discusses applications of cluster analysis in fields such as business intelligence, image recognition, web search, and biology, and outlines the requirements that an effective clustering algorithm should meet.
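Because k-means is the one algorithm named explicitly, a small sketch may help readers who have not seen it. The code below is a minimal, illustrative version of the standard Lloyd iteration with Euclidean distance, not the presenter's own formulation. It alternates two steps until the cluster assignments stop changing: assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. Two details are assumptions: the first `k` points serve as the initial centroids (real implementations usually use random or k-means++ seeding), and an empty cluster simply keeps its previous centroid. The names `kmeans`, `squared_distance`, and `mean` are hypothetical helpers written for this example.

```python
from typing import List, Tuple

Point = Tuple[float, ...]


def squared_distance(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Point]) -> Point:
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points: List[Point], k: int, max_iter: int = 100) -> Tuple[List[Point], List[int]]:
    """Minimal Lloyd-style k-means sketch; returns (centroids, labels)."""
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")

    # Assumption: use the first k points as initial centroids (deterministic, not k-means++).
    centroids: List[Point] = [points[i] for i in range(k)]
    labels: List[int] = []

    for _ in range(max_iter):
        # Assignment step: label each point with the index of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the algorithm has converged
        labels = new_labels

        # Update step: move each centroid to the mean of its members.
        new_centroids: List[Point] = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            # Assumption: an empty cluster keeps its previous centroid.
            new_centroids.append(mean(members) if members else centroids[c])
        centroids = new_centroids

    return centroids, labels
```

On two well-separated groups, such as three points near the origin and three near (8.5, 8.5), this sketch places each group in its own cluster. Because the result depends on the starting centroids, practical libraries typically run k-means several times from different initializations and keep the solution with the lowest within-cluster sum of squared distances.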