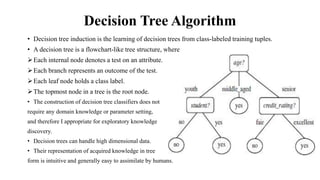

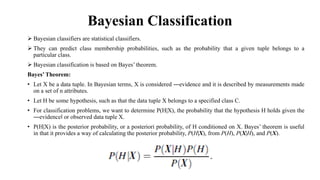

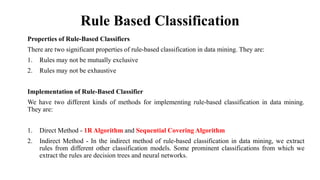

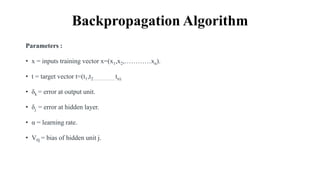

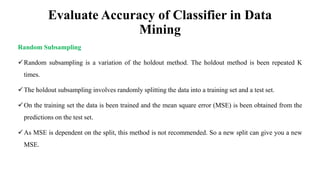

This document provides an overview of classification and prediction methods in data mining, detailing various techniques such as decision trees and Bayesian classification. Key issues addressed include data preprocessing, evaluation metrics, and various algorithms for implementing these methods. The document emphasizes the importance of accuracy, speed, robustness, and scalability in the classification and prediction processes.