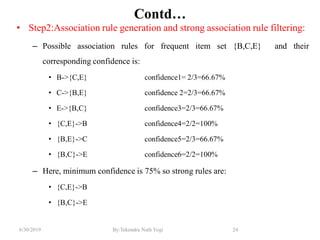

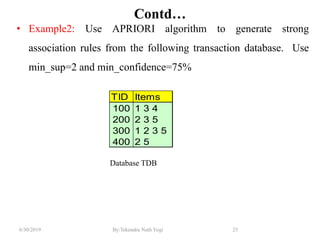

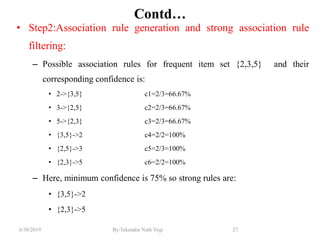

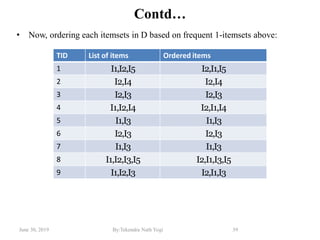

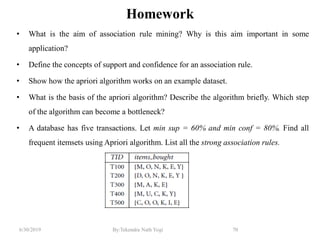

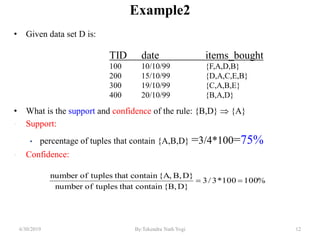

This document outlines association rule mining with the Apriori algorithm. It begins by defining key terms such as frequent itemset, support, and confidence. It then explains how Apriori shrinks the search space using the Apriori property (every subset of a frequent itemset must itself be frequent), so that only candidates that could still be frequent are counted. Finally, it works through examples that apply Apriori to transaction databases to generate strong association rules, meaning rules that meet both the minimum support and minimum confidence thresholds.
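As a rough illustration of the level-wise search and pruning summarized above, the following Python sketch generates frequent itemsets. The function name `apriori_frequent_itemsets`, the `min_support_count` parameter, and the five toy transactions are assumptions made for this example; they are not taken from the slides.

```python
from itertools import combinations


def apriori_frequent_itemsets(transactions, min_support_count):
    """Return {itemset: support_count} for every frequent itemset.

    Hypothetical helper: support is an absolute count here, not a fraction.
    """
    transactions = [frozenset(t) for t in transactions]

    # Level 1: count single items and keep the frequent ones.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_support_count}
    frequent = dict(current)

    k = 2
    while current:
        candidates = set()
        # Join step: merge pairs of frequent (k-1)-itemsets into k-itemsets.
        for a, b in combinations(list(current), 2):
            union = a | b
            if len(union) != k:
                continue
            # Prune step (Apriori property): every (k-1)-subset must be frequent.
            if all(frozenset(sub) in current for sub in combinations(union, k - 1)):
                candidates.add(union)
        # Count step: scan the transactions once per level.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        current = {s: c for s, c in counts.items() if c >= min_support_count}
        frequent.update(current)
        k += 1
    return frequent


# Toy data, invented for illustration only.
toy_transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

frequent = apriori_frequent_itemsets(toy_transactions, min_support_count=3)
for itemset, count in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), count)
```

On this toy data, {bread, diapers, beer} and {milk, diapers, beer} are never counted, because each contains an infrequent pair. This is the saving the Apriori property provides: candidates that cannot be frequent are discarded before the database is scanned.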

![Association Rule Mining Task (slide 13, 6/30/2019, by Tekendra Nath Yogi)](https://image.slidesharecdn.com/unit4-190708021811/85/BIM-Data-Mining-Unit4-by-Tekendra-Nath-Yogi-13-320.jpg)

Association Rule Mining Task

- Given a set of transactions T, the goal of association rule mining is to find all rules having
  - support ≥ minsup threshold
  - confidence ≥ minconf threshold
- If a rule A => B [support, confidence] satisfies both the minsup and minconf thresholds, it is a strong rule.
- So the goal of association rule mining is to find all strong rules.
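The strong-rule test can be sketched in a few lines of Python. This is a minimal illustration under the standard definitions, where support of A => B is the fraction of transactions containing both A and B, and confidence is that count divided by the count of transactions containing A. The function names, the thresholds, and the toy transactions are assumptions for this example.

```python
def count_containing(itemset, transactions):
    """Number of transactions that contain every item in `itemset`."""
    itemset = frozenset(itemset)
    return sum(1 for t in transactions if itemset <= frozenset(t))


def rule_support(antecedent, consequent, transactions):
    """Fraction of all transactions that contain A and B together."""
    both = set(antecedent) | set(consequent)
    return count_containing(both, transactions) / len(transactions)


def rule_confidence(antecedent, consequent, transactions):
    """Among transactions containing A, the fraction that also contain B."""
    n_a = count_containing(antecedent, transactions)
    if n_a == 0:
        return 0.0  # A never occurs, so the rule has no evidence
    both = set(antecedent) | set(consequent)
    return count_containing(both, transactions) / n_a


def is_strong(antecedent, consequent, transactions, minsup, minconf):
    """A rule is strong when it meets both thresholds."""
    return (rule_support(antecedent, consequent, transactions) >= minsup
            and rule_confidence(antecedent, consequent, transactions) >= minconf)


# Toy data and thresholds, invented for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

print(rule_support({"diapers"}, {"beer"}, transactions))        # 0.6
print(rule_confidence({"diapers"}, {"beer"}, transactions))     # 0.75
print(is_strong({"diapers"}, {"beer"}, transactions, 0.5, 0.7))  # True
print(is_strong({"bread"}, {"beer"}, transactions, 0.5, 0.7))    # False
```

Here {diapers} => {beer} is strong because 3 of 5 transactions contain both items (support 0.6) and 3 of the 4 transactions with diapers also contain beer (confidence 0.75). {bread} => {beer} fails on support alone, since only 2 of 5 transactions contain both.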