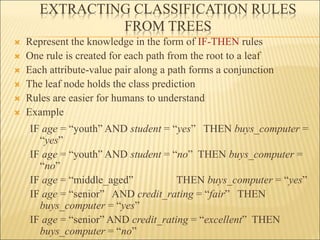

This document covers classification and prediction techniques used in machine learning, including decision trees, naive Bayes classification, and k-nearest neighbors. It gives examples of how each technique works and contrasts supervised with unsupervised learning. It also addresses data preparation and how to compare different classification and prediction methods.
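
As a rough illustration of one of the techniques named above, the following is a minimal sketch of k-nearest neighbors classification. It is not taken from the document: the function name `knn_predict`, the toy training points, and the choice of Euclidean distance with a majority vote are all assumptions made for this example.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (features, label) pairs; `features` and `query`
    are equal-length tuples of numbers. (Hypothetical helper for illustration.)
    """
    # Sort training points by Euclidean distance to the query and keep the k closest.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Count the labels among those neighbors and return the most common one.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Toy labeled data (made up for this sketch): two well-separated clusters.
train = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((5.0, 5.0), "B"), ((5.2, 4.9), "B"), ((4.8, 5.1), "B"),
]

print(knn_predict(train, (1.1, 1.0)))
print(knn_predict(train, (5.0, 5.1)))
```

Because the training points carry labels, this is a supervised method; an unsupervised approach would have to group the same points without the `"A"`/`"B"` labels.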