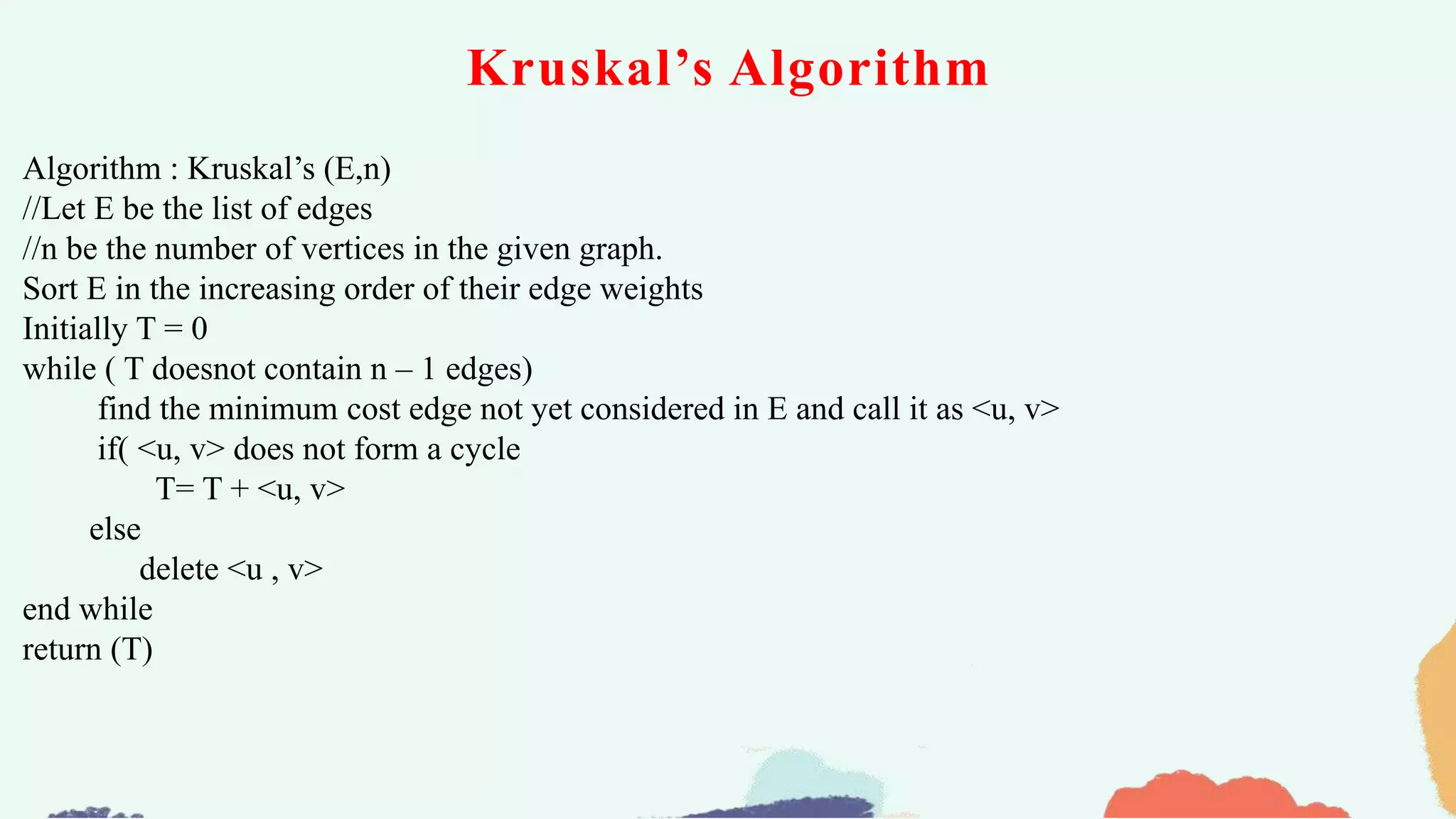

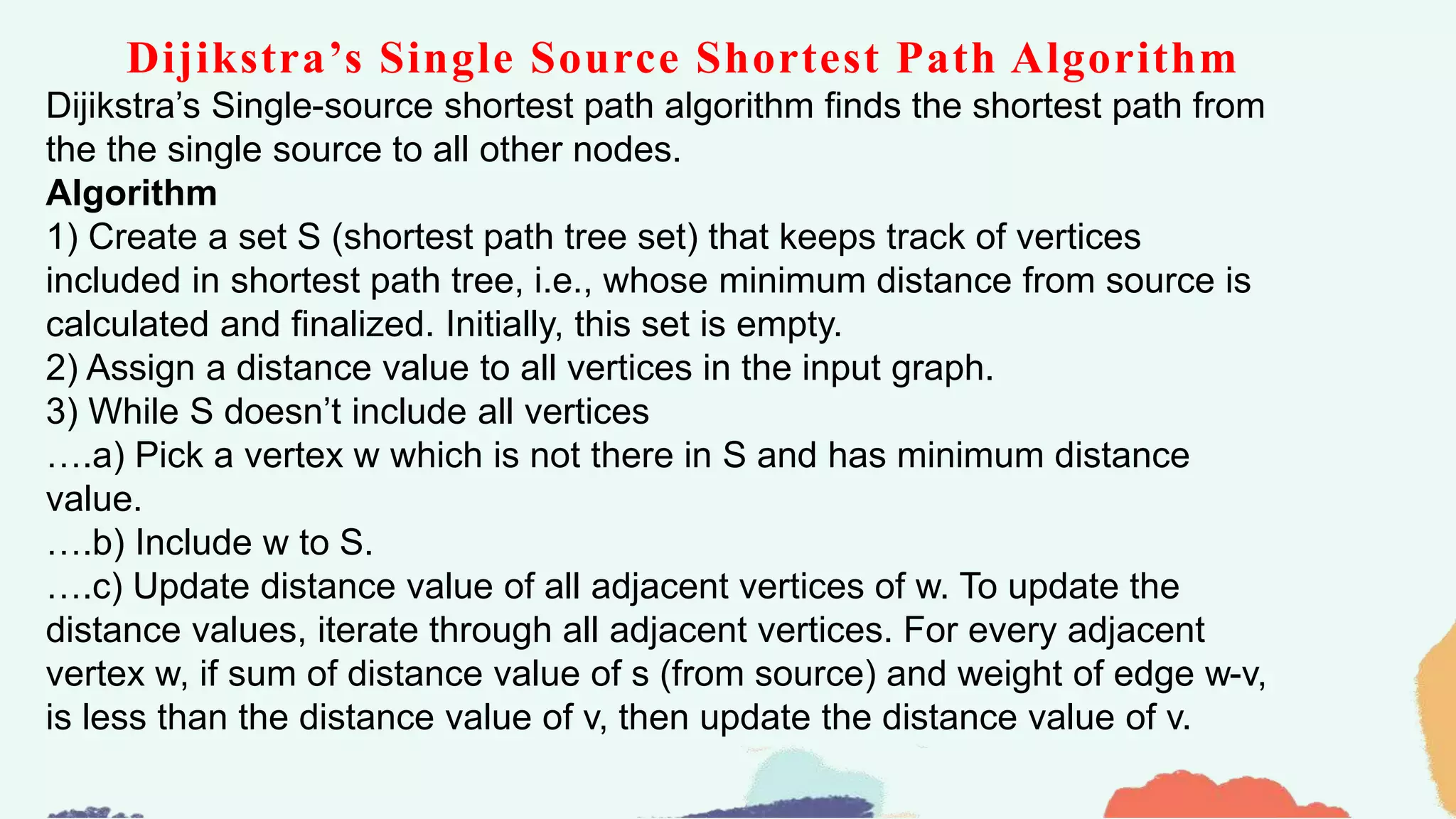

This document covers greedy algorithms for knapsack problems, minimum spanning trees, single-source shortest paths, and job sequencing. It describes the greedy method, works through examples, and gives pseudocode for fractional knapsack, Prim's, Kruskal's, Dijkstra's, and job sequencing. The common idea is to make the best available choice at each step and build up the solution incrementally from those greedy choices.

![Control Abstraction of Greedy method

Control Abstraction of Greedy method

Algorithm Greedy(a,n)

{

a[n] //array a with n inputs

solution := ∅ //initialize the solution to the empty set

for i:=1 to n do

{

x := select (a); //choose the next input from a

if Feasible (solution, x) then

solution:=union(solution, x);

}

return solution;

}](https://image.slidesharecdn.com/unit3-greedymethod-230522172031-e2a00f92/75/Unit-3-Greedy-Method-pptx-6-2048.jpg)
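
The control abstraction above can be sketched in Python as a small higher-order function. This is a minimal illustration, not the slides' own code: the parameter names `select` and `feasible` mirror the pseudocode, the solution is held in a list rather than a set, and the usage example (picking numbers whose total stays within 10) is an assumption made up for demonstration.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def greedy(
    candidates: List[T],
    select: Callable[[List[T]], T],
    feasible: Callable[[List[T], T], bool],
) -> List[T]:
    """Generic greedy loop: select an input, test feasibility, keep or discard."""
    remaining = list(candidates)  # work on a copy so the caller's list is untouched
    solution: List[T] = []        # start from the empty solution
    while remaining:
        x = select(remaining)     # choose the best remaining input
        remaining.remove(x)       # each input is considered exactly once
        if feasible(solution, x):
            solution.append(x)    # plays the role of union(solution, x)
    return solution


# Hypothetical usage: take numbers, largest first, while the total stays <= 10.
picked = greedy(
    [7, 5, 4, 2, 1],
    select=max,
    feasible=lambda sol, x: sum(sol) + x <= 10,
)
print(picked)  # [7, 2, 1]
```

Passing `select` and `feasible` as functions keeps the loop itself problem-independent, which is the point of the abstraction.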

![Knapsack Fraction-Algorithm

Algorithm: Greedyknapsack fraction(M, n, w, P, x)

Assume the objects are arranged in decreasing order of P[i] / w[i]. Let M be the knapsack capacity, n the number of objects, w[1 : n] the array of weights, P[1 : n] the array of profits, and x the solution vector.

Step 1: Remaining capacity RC = M

Step 2: Repeat for i=1 to n do

x[i] = 0

end for

Step 3: Repeat for i=1 to n do

if (w[ i ] <= RC)

x[ i ]=1

RC=RC-w[ i ]

Step 4: else

x[ i ]=RC/w[ i ]

break

End for

Step 5: sum=0

For i=1 to n do

sum=sum+P[ i ]*x[ i ]

End for](https://image.slidesharecdn.com/unit3-greedymethod-230522172031-e2a00f92/75/Unit-3-Greedy-Method-pptx-14-2048.jpg)
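
The fractional knapsack steps above can be sketched in Python as follows. The function name `fractional_knapsack` and the sample data are illustrative assumptions, not taken from the slides. The sketch sorts by profit/weight ratio itself instead of assuming pre-sorted input, uses 0-based indices, and assumes all weights are positive.

```python
from typing import List, Tuple


def fractional_knapsack(
    capacity: float, weights: List[float], profits: List[float]
) -> Tuple[List[float], float]:
    """Return (x, total): the fraction taken of each object and the total profit."""
    n = len(weights)
    # Visit objects in decreasing order of profit/weight ratio.
    order = sorted(range(n), key=lambda i: profits[i] / weights[i], reverse=True)
    x = [0.0] * n
    remaining = capacity
    for i in order:
        if weights[i] <= remaining:
            x[i] = 1.0                        # the whole object fits
            remaining -= weights[i]
        else:
            x[i] = remaining / weights[i]     # take only the fraction that fits
            break                             # the knapsack is now full
    total = sum(profits[i] * x[i] for i in range(n))
    return x, total


# Assumed example: capacity 20, three objects.
x, total = fractional_knapsack(20, [18, 15, 10], [25, 24, 15])
print(x, total)  # [0.0, 1.0, 0.5] 31.5
```

Here the second object has the best ratio (1.6) and is taken whole, then half of the third object fills the remaining capacity of 5.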

![Knapsack 0/1-Algorithm

Algorithm: Greedyknapsack0/1(M, n, w, P, x)

Assume the objects are arranged in decreasing order of P[i] / w[i]. Let M be the knapsack capacity, n the number of objects, w[1 : n] the array of weights, P[1 : n] the array of profits, and x the solution vector.

Step 1: Remaining capacity RC = M

Step 2: Repeat for i=1 to n do

x[i] = 0

end for

Step 3: Repeat for i=1 to n do

if (w[ i ] <= RC)

x[ i ]=1

RC=RC- w[ i ]

Step 4: else

x[ i ]= 0 // the object does not fit; skip it and try the next one

End for

Step 5: sum=0

For i=1 to n do

sum=sum+P[ i ]*x[ i ]

End for](https://image.slidesharecdn.com/unit3-greedymethod-230522172031-e2a00f92/75/Unit-3-Greedy-Method-pptx-15-2048.jpg)
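
A minimal Python sketch of the greedy 0/1 variant follows. The function name `greedy_knapsack_01` and the sample data are assumptions for illustration. The sketch skips any object that does not fit and continues scanning the rest. Note that for the 0/1 problem the ratio-based greedy rule is only a heuristic: in the example below it returns a profit of 160, while choosing the second and third objects would give 220.

```python
from typing import List, Tuple


def greedy_knapsack_01(
    capacity: float, weights: List[float], profits: List[float]
) -> Tuple[List[int], float]:
    """Greedy heuristic for 0/1 knapsack: each object is taken whole or not at all."""
    n = len(weights)
    # Visit objects in decreasing order of profit/weight ratio (weights assumed > 0).
    order = sorted(range(n), key=lambda i: profits[i] / weights[i], reverse=True)
    x = [0] * n
    remaining = capacity
    for i in order:
        if weights[i] <= remaining:
            x[i] = 1                   # take the whole object
            remaining -= weights[i]
        # otherwise x[i] stays 0 and the next object is tried
    total = sum(profits[i] * x[i] for i in range(n))
    return x, total


# Assumed example: capacity 50; the greedy answer is feasible but not optimal.
x01, total01 = greedy_knapsack_01(50, [10, 20, 30], [60, 100, 120])
print(x01, total01)  # [1, 1, 0] 160
```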

![Prim’s algorithm

Algorithm: Prim’s(n, C)

//Assume G is connected, undirected and weighted graph.

//Input : The cost adjacency matrix C and number of vertices n.

//Output: Minimum weight spanning tree.

for i=1 to n do

visited [ i ]=0

T=∅ // the spanning tree starts with no edges

u=1 // start from vertex 1

visited [ u ] = 1

while( there is still an unchosen vertex ) do

Let <u, v> be the lightest edge between any chosen vertex u and unchosen vertex v

visited [ v ] =1

T=union (T, <u,v>)

end while

return T](https://image.slidesharecdn.com/unit3-greedymethod-230522172031-e2a00f92/75/Unit-3-Greedy-Method-pptx-20-2048.jpg)
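
The pseudocode above translates fairly directly into Python. The sketch below is a straightforward transcription under stated assumptions: vertices are numbered from 0 rather than 1, a missing edge is encoded as `math.inf` in the cost matrix, and the 4-vertex graph is made up for illustration. It scans all chosen/unchosen pairs on every step, so it runs in O(n³) time; faster variants exist but are not shown.

```python
import math
from typing import List, Tuple

INF = math.inf  # marks "no edge" in the cost adjacency matrix


def prim(cost: List[List[float]]) -> Tuple[List[Tuple[int, int]], float]:
    """Return (tree_edges, total_weight) of a minimum spanning tree."""
    n = len(cost)
    visited = [False] * n
    visited[0] = True                       # start from vertex 0
    tree: List[Tuple[int, int]] = []
    total = 0.0
    for _ in range(n - 1):                  # a spanning tree has n - 1 edges
        best = (INF, -1, -1)                # (weight, chosen u, unchosen v)
        for u in range(n):
            if not visited[u]:
                continue
            for v in range(n):
                if not visited[v] and cost[u][v] < best[0]:
                    best = (cost[u][v], u, v)
        w, u, v = best
        if v == -1:
            raise ValueError("graph is not connected")
        visited[v] = True
        tree.append((u, v))                 # T = union(T, <u, v>)
        total += w
    return tree, total


# Assumed example graph with 4 vertices.
cost = [
    [INF, 1, 4, INF],
    [1, INF, 2, 6],
    [4, 2, INF, 3],
    [INF, 6, 3, INF],
]
tree, total_weight = prim(cost)
print(tree, total_weight)  # [(0, 1), (1, 2), (2, 3)] 6.0
```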

![Algorithm-Dijkstra’s Single Source Shortest Path

dist[s] ← 0 // The distance to the source vertex 's' is set to 0

Π[s] ← NIL // The predecessor of the source vertex is set as NIL

for all v ∈ V - {s} // For all other vertices

do dist[v] ← ∞ // All other distances are set to ∞

Π[v] ← NIL // The predecessor of all other vertices is set as NIL

S ← ∅ // The set of visited vertices 'S' is initially empty

Q ← V // The queue 'Q' initially contains all the vertices

while Q ≠ ∅ // While loop executes till the queue is not empty

do u ← mindistance (Q, dist) // A vertex from Q with the least distance is selected

Q ← Q - {u} // Vertex 'u' is removed from the queue so that the loop terminates

S ← S ∪ {u} // Vertex 'u' is added to 'S', the set of vertices that have been visited

for all v ∈ neighbors[u] // For all the neighboring vertices of vertex 'u'

do if dist[v] > dist[u] + w(u,v) // if any new shortest path is discovered

then dist[v] ← dist[u] + w(u,v) // The new value of the shortest path is selected

Π[v] ← u // Vertex 'u' is recorded as the predecessor of 'v'

return dist](https://image.slidesharecdn.com/unit3-greedymethod-230522172031-e2a00f92/75/Unit-3-Greedy-Method-pptx-24-2048.jpg)
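
The same procedure can be sketched in Python. One substitution is made deliberately: instead of scanning the queue for the minimum-distance vertex, this version uses a binary heap from the standard `heapq` module and skips stale heap entries. The adjacency-list format, the function name `dijkstra`, and the sample graph are assumptions for illustration; every vertex must appear as a key of the graph, and edge weights are assumed to be non-negative.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # vertex -> list of (neighbor, weight)


def dijkstra(
    graph: Graph, source: str
) -> Tuple[Dict[str, float], Dict[str, Optional[str]]]:
    """Return (dist, pred): shortest distances from source and predecessors."""
    dist: Dict[str, float] = {v: math.inf for v in graph}
    pred: Dict[str, Optional[str]] = {v: None for v in graph}
    dist[source] = 0
    visited = set()                      # the set S of finished vertices
    heap = [(0, source)]                 # entries are (distance, vertex)
    while heap:
        d, u = heapq.heappop(heap)       # vertex with the least tentative distance
        if u in visited:
            continue                     # stale entry: u was already finished
        visited.add(u)
        for v, w in graph[u]:
            if dist[v] > d + w:          # a shorter path to v through u
                dist[v] = d + w
                pred[v] = u
                heapq.heappush(heap, (dist[v], v))
    return dist, pred


# Assumed example graph.
graph = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}
dist, pred = dijkstra(graph, "A")
print(dist)  # {'A': 0, 'B': 3, 'C': 1, 'D': 4}
print(pred)  # {'A': None, 'B': 'C', 'C': 'A', 'D': 'B'}
```

Following `pred` backwards from a vertex recovers the path itself, for example D ← B ← C ← A.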