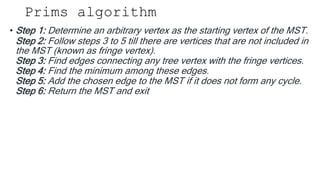

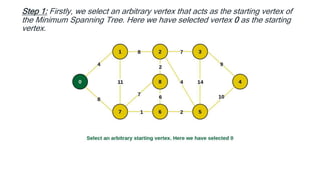

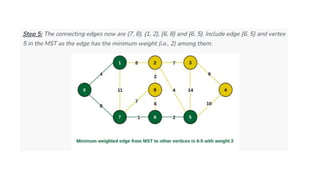

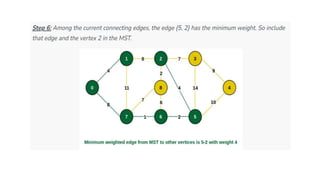

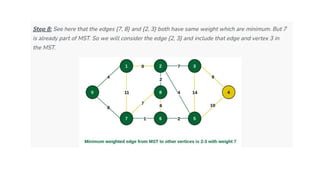

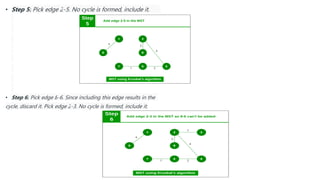

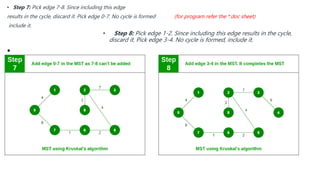

The document discusses greedy algorithms and their application to optimization problems such as minimum spanning trees and the knapsack problem. It outlines the general structure of a greedy algorithm and its key characteristics, namely the greedy choice property and optimal substructure, and works through examples including Prim's and Kruskal's algorithms. It also explores applications of greedy algorithms in network design, machine learning, image processing, and financial optimization.
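The greedy pattern summarized above can be sketched in a few lines of Python. This is a minimal illustration, not code taken from the slides: the function names `kruskal_mst`, `prim_mst`, and `fractional_knapsack` are hypothetical. Each function makes one locally optimal choice per step (cheapest safe edge, cheapest frontier edge, best value-to-weight ratio). The knapsack variant shown is assumed to be the *fractional* one, where items can be split. Greedy-by-ratio is optimal there but not for the 0/1 knapsack. `prim_mst` also assumes a connected graph.

```python
import heapq
from typing import Dict, List, Tuple

# An undirected weighted edge: (weight, u, v). Vertices are 0..n-1.
Edge = Tuple[float, int, int]


def kruskal_mst(n: int, edges: List[Edge]) -> Tuple[float, List[Edge]]:
    """Kruskal: repeatedly take the cheapest edge that does not form a cycle."""
    parent = list(range(n))

    def find(x: int) -> int:
        # Union-find lookup with path halving to keep trees shallow.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    total, chosen = 0.0, []
    for w, u, v in sorted(edges):  # greedy choice: cheapest edge first
        ru, rv = find(u), find(v)
        if ru != rv:  # endpoints in different components, so no cycle
            parent[ru] = rv
            total += w
            chosen.append((w, u, v))
    return total, chosen


def prim_mst(n: int, edges: List[Edge], start: int = 0) -> float:
    """Prim: grow one tree, always adding the cheapest edge leaving it.

    Assumes a connected graph; otherwise only start's component is spanned.
    """
    adj: Dict[int, List[Tuple[float, int]]] = {i: [] for i in range(n)}
    for w, u, v in edges:
        adj[u].append((w, v))
        adj[v].append((w, u))
    visited = [False] * n
    heap: List[Tuple[float, int]] = [(0.0, start)]
    total = 0.0
    while heap:
        w, u = heapq.heappop(heap)  # greedy choice: cheapest frontier edge
        if visited[u]:
            continue  # stale entry; u was already reached more cheaply
        visited[u] = True
        total += w
        for wv, v in adj[u]:
            if not visited[v]:
                heapq.heappush(heap, (wv, v))
    return total


def fractional_knapsack(items: List[Tuple[float, float]], capacity: float) -> float:
    """Greedy by value/weight ratio; optimal only when items may be split.

    items is a list of (value, weight) pairs with positive weights.
    """
    total = 0.0
    for value, weight in sorted(items, key=lambda it: it[0] / it[1], reverse=True):
        if capacity <= 0:
            break
        take = min(weight, capacity)  # whole item, or the fraction that fits
        total += value * take / weight
        capacity -= take
    return total


if __name__ == "__main__":
    edges = [(1, 0, 1), (4, 0, 2), (3, 1, 2), (2, 1, 3), (5, 2, 3)]
    print(kruskal_mst(4, edges)[0])  # 6.0
    print(prim_mst(4, edges))        # 6.0
    print(fractional_knapsack([(60, 10), (100, 20), (120, 30)], 50))  # 240.0
```

Both MST routines reach the same total weight on the sample graph. They differ in how they apply the greedy choice: Kruskal scans edges globally in sorted order, while Prim grows a single tree from a start vertex using a priority queue.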