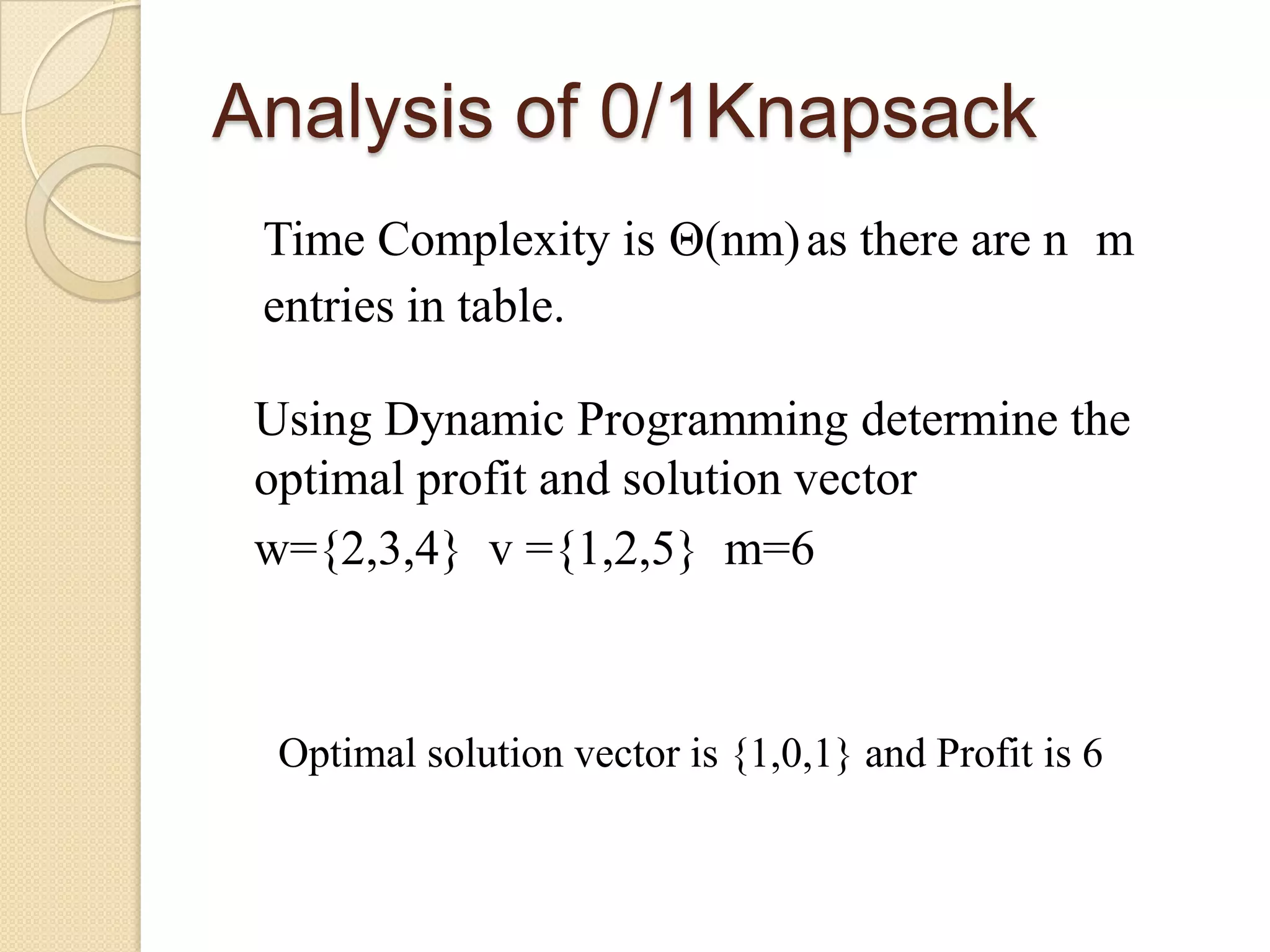

Dynamic programming solves optimization problems by breaking them into overlapping subproblems. It solves each subproblem only once and stores the result in a table, so that when the subproblem recurs its solution is looked up instead of recomputed. The key is identifying the problem's optimal substructure: an optimal solution contains optimal solutions to its subproblems. The method has three steps: characterize the optimal solution recursively, compute the values bottom-up in a table, and trace back through the table to recover the optimal solution itself. An example is the 0/1 knapsack problem: choose a subset of items, each taken whole or not at all, that maximizes total profit without exceeding the knapsack's limited capacity.
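As a minimal sketch of "solve each subproblem once and look it up when it recurs", the Python below evaluates the 0/1 knapsack recurrence top-down and lets `functools.lru_cache` serve as the table. The function name `best` is hypothetical, and the item data are taken from the example used later in this section (weights 1, 2, 5, 6, 7; values 1, 6, 18, 22, 28; capacity 11). `cache_info().hits` counts how often a stored subproblem result was reused instead of recomputed.

```python
from functools import lru_cache

# Example data (objects are numbered 1..n in the text, 0-indexed here).
WEIGHTS = (1, 2, 5, 6, 7)
VALUES = (1, 6, 18, 22, 28)
CAPACITY = 11


@lru_cache(maxsize=None)
def best(i: int, j: int) -> int:
    """Maximum value using objects 1..i with knapsack capacity j."""
    if i == 0 or j == 0:
        return 0
    w, v = WEIGHTS[i - 1], VALUES[i - 1]
    skip = best(i - 1, j)                    # leave object i out
    if w > j:                                # object i does not fit
        return skip
    return max(skip, v + best(i - 1, j - w))  # or put object i in


print(best(len(WEIGHTS), CAPACITY))  # → 40
print(best.cache_info())             # hits > 0: some subproblems recurred
```

Each distinct pair (i, j) is computed once; every later request for it is a cache hit, which is exactly the saving described above.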

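![DAA Dynamic Programming, slide 8](https://image.slidesharecdn.com/daa-120721003138-phpapp02/75/Daa-Dynamic-Programing-8-2048.jpg)

Let $C[i,j]$ be the maximum value obtainable from objects $1, \dots, i$ with knapsack capacity $j$, where object $i$ has weight $w_i$ and value $v_i$:

$$
C[i,j] =
\begin{cases}
-\infty & \text{if } j < 0 \\
0 & \text{if } j \ge 0 \text{ and } (i = 0 \text{ or } j = 0) \\
C[i-1,\,j] & \text{if } i > 0 \text{ and } 0 < j < w_i \\
\max\{\, v_i + C[i-1,\,j-w_i],\; C[i-1,\,j] \,\} & \text{if } i > 0 \text{ and } j \ge w_i
\end{cases}
$$

The $-\infty$ case makes the third line a special case of the fourth: when $j < w_i$, the term $v_i + C[i-1,\,j-w_i]$ is $-\infty$, so the maximum is $C[i-1,\,j]$.

Consider the example: $w = \{1, 2, 5, 6, 7\}$, $v = \{1, 6, 18, 22, 28\}$, $m = 11$.

How to calculate the entries of the table $C$:

$$C[1,1] = \max\{C[1-1,\,1-1] + 1,\; C[1-1,\,1]\} = \max\{1, 0\} = 1$$

$$C[1,2] = \max\{C[1-1,\,2-1] + 1,\; C[1-1,\,2]\} = \max\{1, 0\} = 1$$

$$C[3,3] = \max\{C[3-1,\,3-5] + 18,\; C[3-1,\,3]\} = \max\{-\infty,\; C[2,3]\} = C[2,3]$$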
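The three entries above follow one rule: look up the row above, treating any column $j < 0$ as $-\infty$ so that an object that does not fit can never be chosen. The Python sketch below applies that convention to fill the whole table for the example; the helper names `lookup` and `cost_table` are hypothetical. It prints one row per object count $i = 0, \dots, 5$.

```python
import math

w = [1, 2, 5, 6, 7]      # weights of objects 1..5
v = [1, 6, 18, 22, 28]   # values of objects 1..5
m = 11                   # knapsack capacity


def cost_table(w, v, m):
    n = len(w)
    C = [[0] * (m + 1) for _ in range(n + 1)]  # row 0 and column 0 stay 0

    def lookup(i, j):
        # A negative capacity is infeasible: -inf can never win the max.
        return -math.inf if j < 0 else C[i][j]

    for i in range(1, n + 1):
        wi, vi = w[i - 1], v[i - 1]
        for j in range(1, m + 1):
            C[i][j] = max(vi + lookup(i - 1, j - wi), lookup(i - 1, j))
    return C


C = cost_table(w, v, m)
for i, row in enumerate(C):
    print(i, row)
```

The last row ends in 40, which is $C[5,11]$, the optimal value for capacity 11.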

![DAA Dynamic Programming, slide 10](https://image.slidesharecdn.com/daa-120721003138-phpapp02/75/Daa-Dynamic-Programing-10-2048.jpg)

![DAA Dynamic Programming, slide 11](https://image.slidesharecdn.com/daa-120721003138-phpapp02/75/Daa-Dynamic-Programing-11-2048.jpg)

    Dknap(w, v, n, m)
    {
        // w := [w1, w2, …, wn]   array of weights
        // v := [v1, v2, …, vn]   array of values
        // 1, 2, …, i, …, n are objects; 0, 1, …, j, …, m are capacities of knapsack
        C := array[0..n, 0..m];          // knapsack table (2-D)
        for j := 0 to m do
            C[0, j] := 0;                // no objects: value 0
        for i := 1 to n do
            C[i, 0] := 0;                // knapsack with capacity 0
        for i := 1 to n do
        {
            for j := 1 to m do
            {
                if (j < w[i]) then       // object i does not fit
                    C[i, j] := C[i-1, j];
                else if (C[i-1, j] > C[i-1, j-w[i]] + v[i]) then
                    C[i, j] := C[i-1, j];              // leave object i out
                else
                    C[i, j] := C[i-1, j-w[i]] + v[i];  // put object i in
            }
        }
        Traceback(C);
    }
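A direct Python translation of Dknap, offered as a sketch: lists are 0-indexed, so object i's weight is `w[i - 1]`, and this sketch keeps a row 0 (no objects, value 0) so that every row, including i = 1, is filled by the same rule. The function name `dknap` and its argument order are assumptions; it returns the table instead of calling Traceback.

```python
def dknap(w, v, m):
    """Fill the 0/1 knapsack table C[0..n][0..m] bottom-up, as in Dknap."""
    n = len(w)
    C = [[0] * (m + 1) for _ in range(n + 1)]  # C[0][j] = C[i][0] = 0
    for i in range(1, n + 1):
        wi, vi = w[i - 1], v[i - 1]
        for j in range(1, m + 1):
            if j < wi:                           # object i does not fit
                C[i][j] = C[i - 1][j]
            elif C[i - 1][j] > C[i - 1][j - wi] + vi:
                C[i][j] = C[i - 1][j]            # better to leave it out
            else:
                C[i][j] = C[i - 1][j - wi] + vi  # better to put it in
    return C


table = dknap([1, 2, 5, 6, 7], [1, 6, 18, 22, 28], 11)
print(table[-1][-1])  # → 40
```

Filling the table takes O(nm) time and space, since each of the n·m entries is computed once from at most two entries in the row above.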

![DAA Dynamic Programming, slide 12](https://image.slidesharecdn.com/daa-120721003138-phpapp02/75/Daa-Dynamic-Programing-12-2048.jpg)

    Traceback(C)
    {   // opt[1..n] stores the optimal solution vector; w, n, m are as in Dknap
        i := n; j := m;
        while (i ≥ 1) do
        {
            if (C[i, j] = C[i-1, j]) then
                opt[i] := 0;             // object i is not in the knapsack
            else
            {
                opt[i] := 1;             // object i is in the knapsack
                j := j - w[i];           // subtract w[i] before i changes
            }
            i := i - 1;
        }
    }
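The traceback can also be sketched in Python. It walks from C[n][m] back to row 0: if an entry equals the one directly above it, object i was left out; otherwise object i was taken, and its weight is subtracted from j before moving to row i - 1. The table is rebuilt inline with a compact form of the recurrence so the block runs on its own; `fill_table` is a hypothetical helper, `traceback` and `opt` mirror the pseudocode names, and the 0-based list indices are a choice of this sketch.

```python
def fill_table(w, v, m):
    # Compact bottom-up fill: C[i][j] = best value of objects 1..i, capacity j.
    n = len(w)
    C = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            C[i][j] = C[i - 1][j]
            if w[i - 1] <= j:
                C[i][j] = max(C[i][j], C[i - 1][j - w[i - 1]] + v[i - 1])
    return C


def traceback(C, w):
    """Return opt, where opt[i - 1] = 1 if object i is in the optimal knapsack."""
    n, j = len(C) - 1, len(C[0]) - 1
    opt = [0] * n
    for i in range(n, 0, -1):
        if C[i][j] != C[i - 1][j]:  # value changed, so object i was taken
            opt[i - 1] = 1
            j -= w[i - 1]           # use object i's weight before moving up
    return opt


w = [1, 2, 5, 6, 7]
v = [1, 6, 18, 22, 28]
C = fill_table(w, v, 11)
opt = traceback(C, w)
print(opt)                                          # → [0, 0, 1, 1, 0]
print(sum(vi for vi, take in zip(v, opt) if take))  # → 40
```

For the example this selects objects 3 and 4 (weights 5 + 6 = 11, values 18 + 22 = 40). When an entry ties with the one above, the sketch leaves the object out, which still yields an optimal solution.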