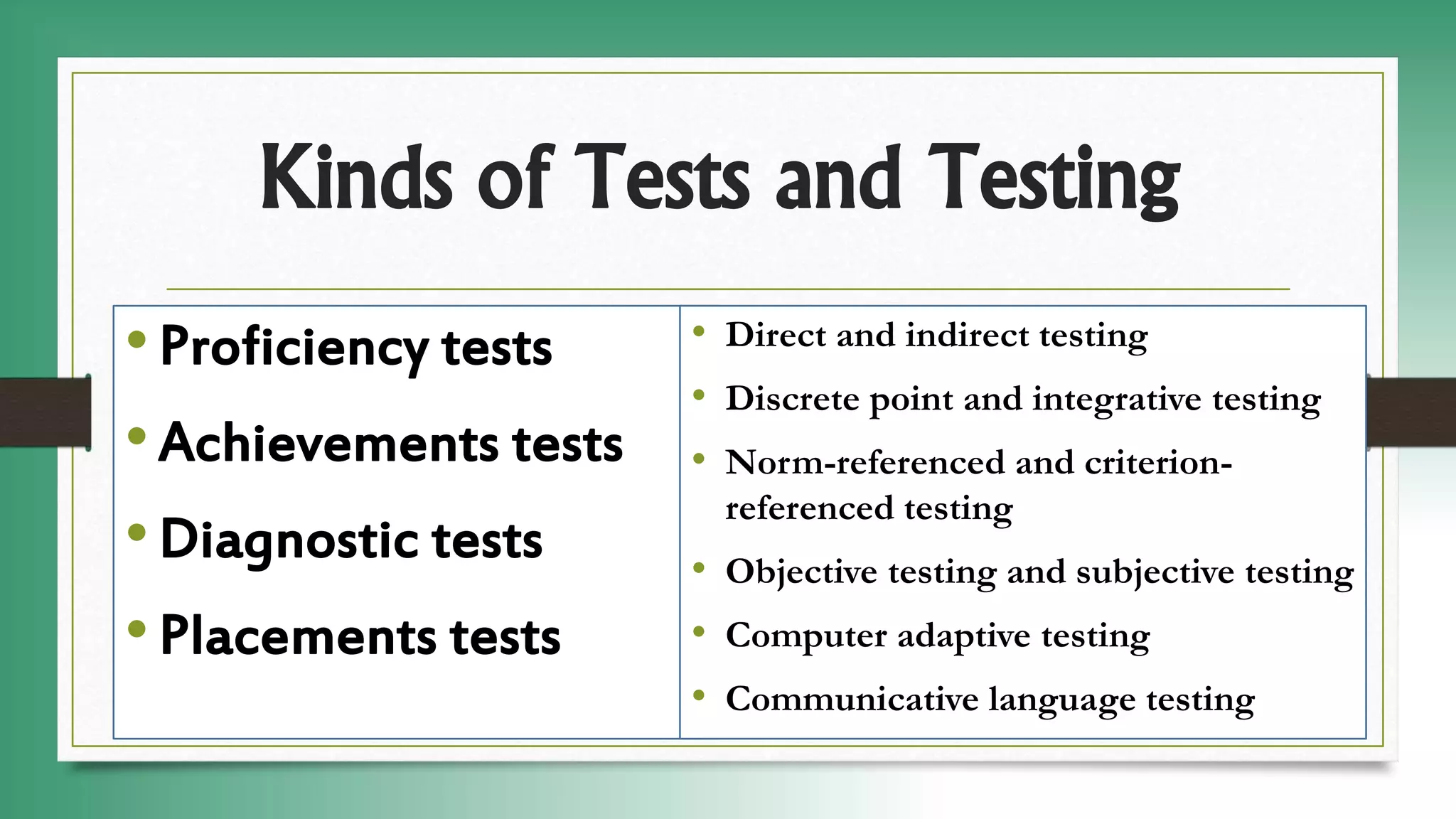

This document surveys the main types of language tests and approaches to language testing: proficiency, achievement, diagnostic, and placement tests; direct and indirect testing; discrete-point and integrative testing; norm-referenced and criterion-referenced testing; objective and subjective testing; and computer-adaptive testing. It describes the purpose and characteristics of each.