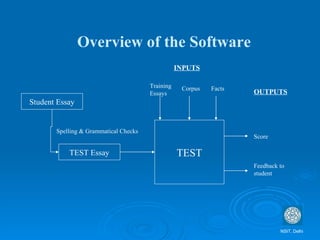

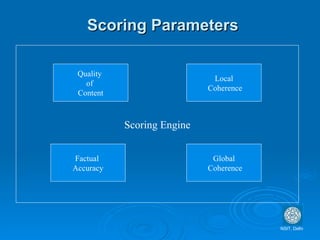

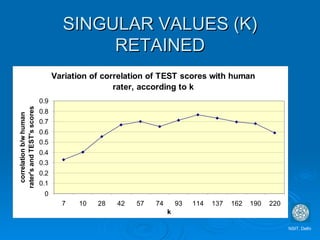

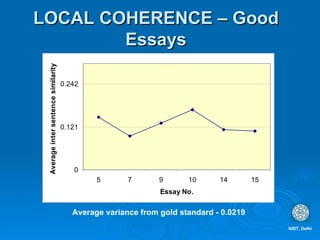

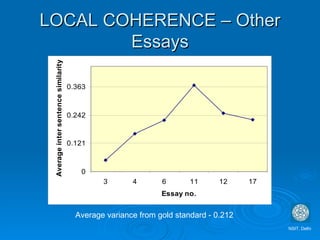

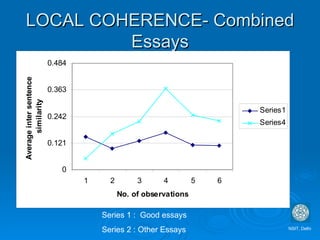

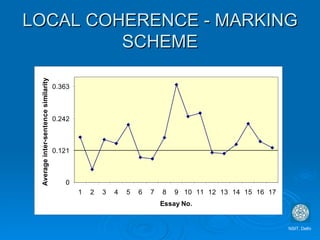

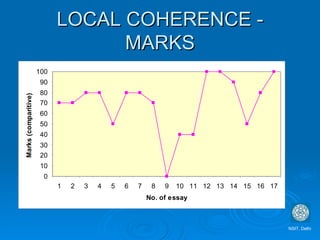

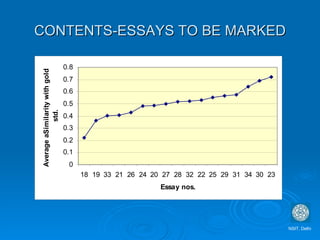

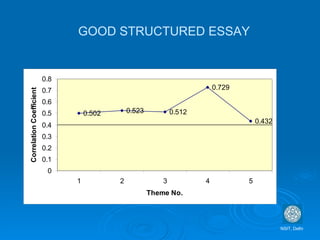

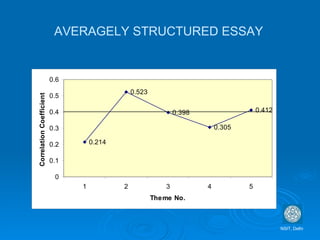

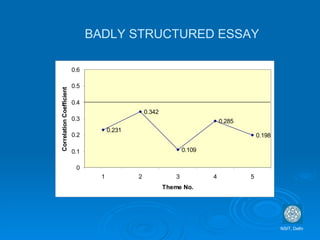

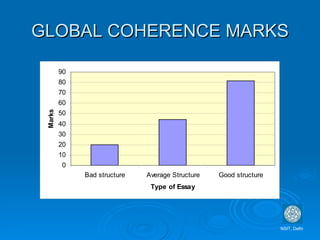

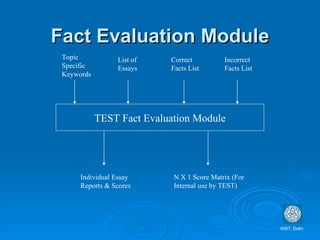

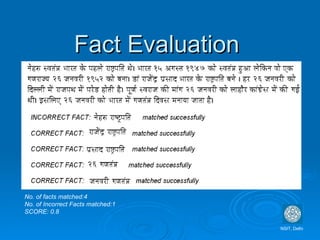

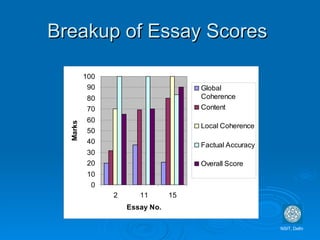

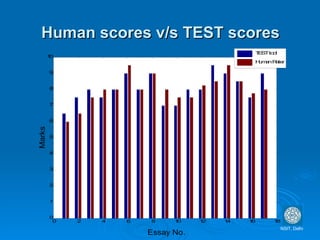

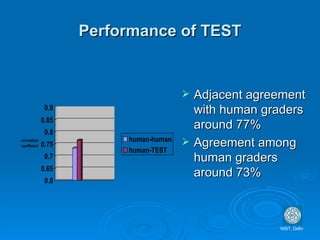

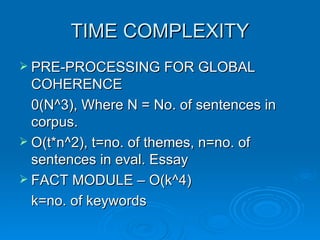

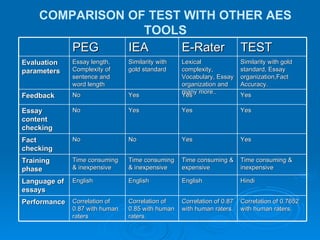

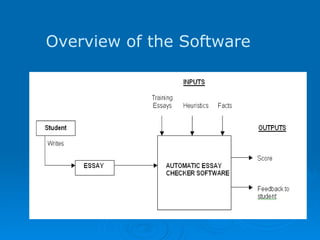

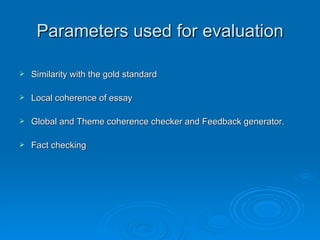

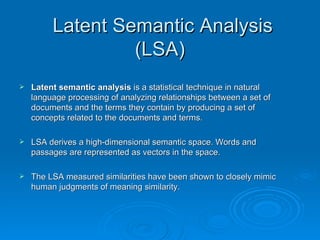

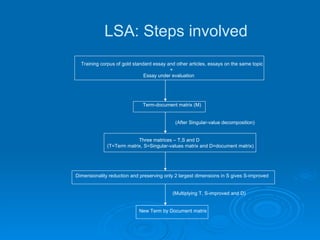

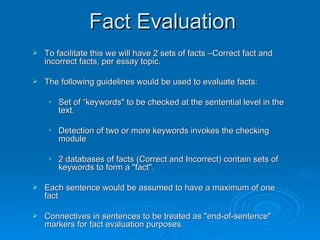

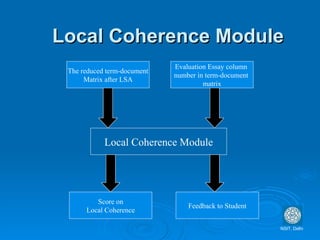

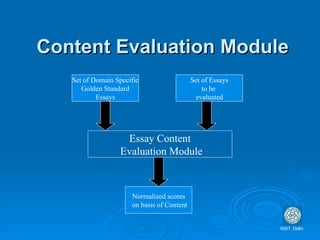

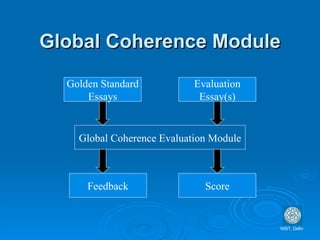

The document summarizes TEST, an automatic essay scoring tool developed as a final-year project. TEST uses latent semantic analysis (LSA) to compare each essay against a training corpus and evaluate four parameters: local coherence, global coherence, content quality, and factual accuracy. Its scores agreed with human graders 77% of the time, comparable to the 73% agreement among the human graders themselves. Planned future work includes expanding the tool's capabilities and reducing its computational complexity.
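To make the LSA comparison step concrete, here is a minimal sketch of how an essay might be compared with a training corpus in a reduced semantic space. It is not TEST's actual implementation, which the summary does not describe. The toy corpus, the function names (`fit_lsa`, `project`, `cosine`), the whitespace tokenizer, and the choice of `k = 2` are all assumptions made for illustration.

```python
import numpy as np

def build_vocab(docs):
    """Map each distinct lowercase word in the corpus to a row index."""
    words = sorted({w for d in docs for w in d.lower().split()})
    return {w: i for i, w in enumerate(words)}

def term_vector(text, vocab):
    """Raw term-count vector; words outside the vocabulary are ignored."""
    v = np.zeros(len(vocab))
    for w in text.lower().split():
        if w in vocab:
            v[vocab[w]] += 1.0
    return v

def fit_lsa(docs, k):
    """Build a term-by-document matrix and keep the top-k left singular vectors."""
    vocab = build_vocab(docs)
    A = np.column_stack([term_vector(d, vocab) for d in docs])
    U, _, _ = np.linalg.svd(A, full_matrices=False)
    return vocab, U[:, :k]

def project(text, vocab, U_k):
    """Project a text into the k-dimensional latent space (U_k^T q)."""
    return U_k.T @ term_vector(text, vocab)

def cosine(a, b):
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

# Hypothetical training corpus: two documents on each of two topics.
corpus = [
    "plants use sunlight to make food by photosynthesis",
    "photosynthesis turns sunlight water and carbon dioxide into food",
    "the stock market fell as investors sold shares",
    "investors buy shares when the stock market rises",
]
vocab, U_k = fit_lsa(corpus, k=2)

# Compare two hypothetical essays with the first (photosynthesis) document.
reference = project(corpus[0], vocab, U_k)
sim_on = cosine(project("plants make food from sunlight and water", vocab, U_k), reference)
sim_off = cosine(project("investors sold shares in the market", vocab, U_k), reference)
print(f"on-topic: {sim_on:.2f}, off-topic: {sim_off:.2f}")
```

In this sketch the on-topic essay should score much higher than the off-topic one. A real scorer would likely add term weighting, a far larger corpus, and a separate comparison for each of the four parameters; those details are not given in the summary.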