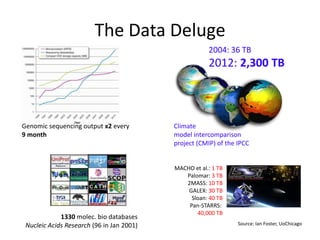

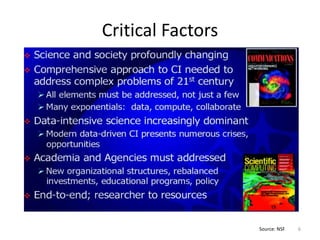

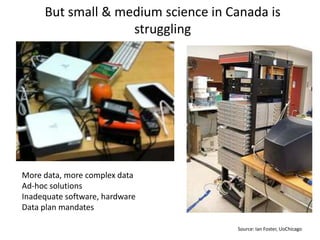

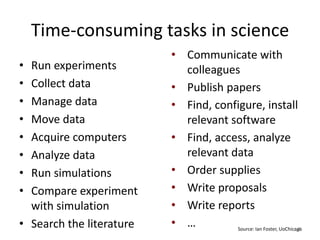

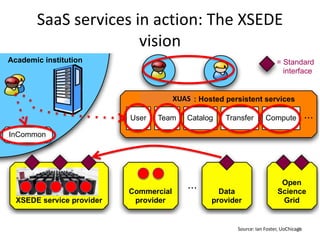

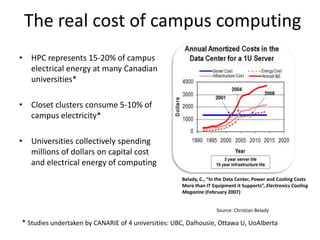

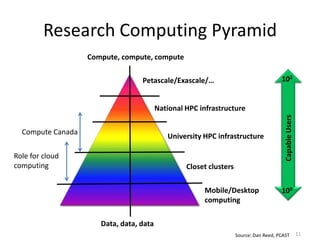

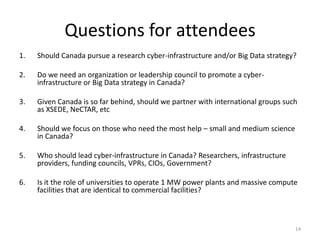

This document discusses the growing challenge of managing the large quantities of data produced by scientific research and simulations. It notes that data volumes from genomic sequencing are doubling every nine months and that various scientific projects now generate petabytes to exabytes of data. Current solutions for data management and analysis are often ad hoc and are inadequate for small- and medium-scale research. The document advocates a national research cyberinfrastructure strategy for Canada that would provide standardized services and draw on partnerships with international organizations and commercial cloud providers to help scientists work with large, complex research data. It also raises questions about who should lead this effort and what roles universities, research organizations, and government should play in developing solutions.