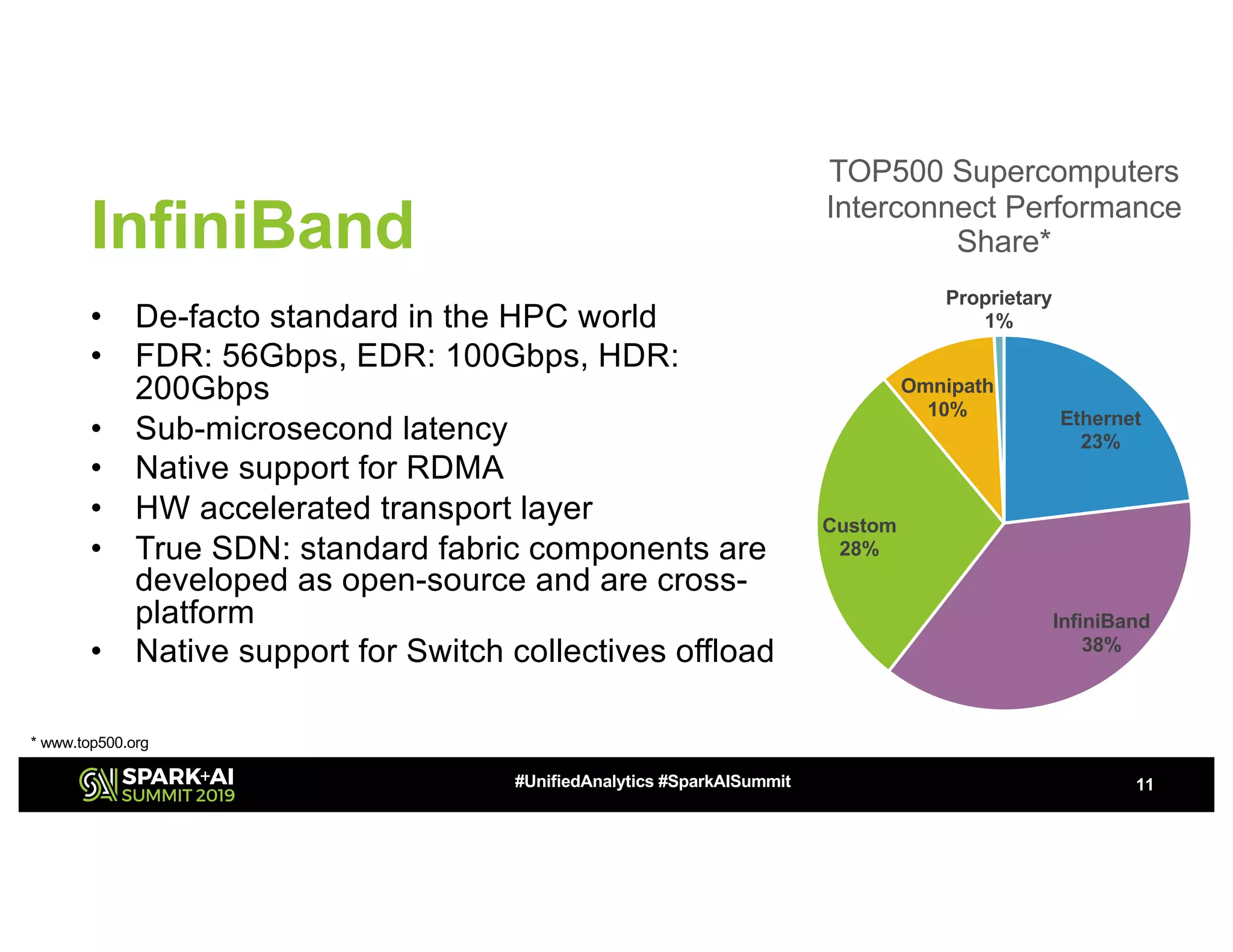

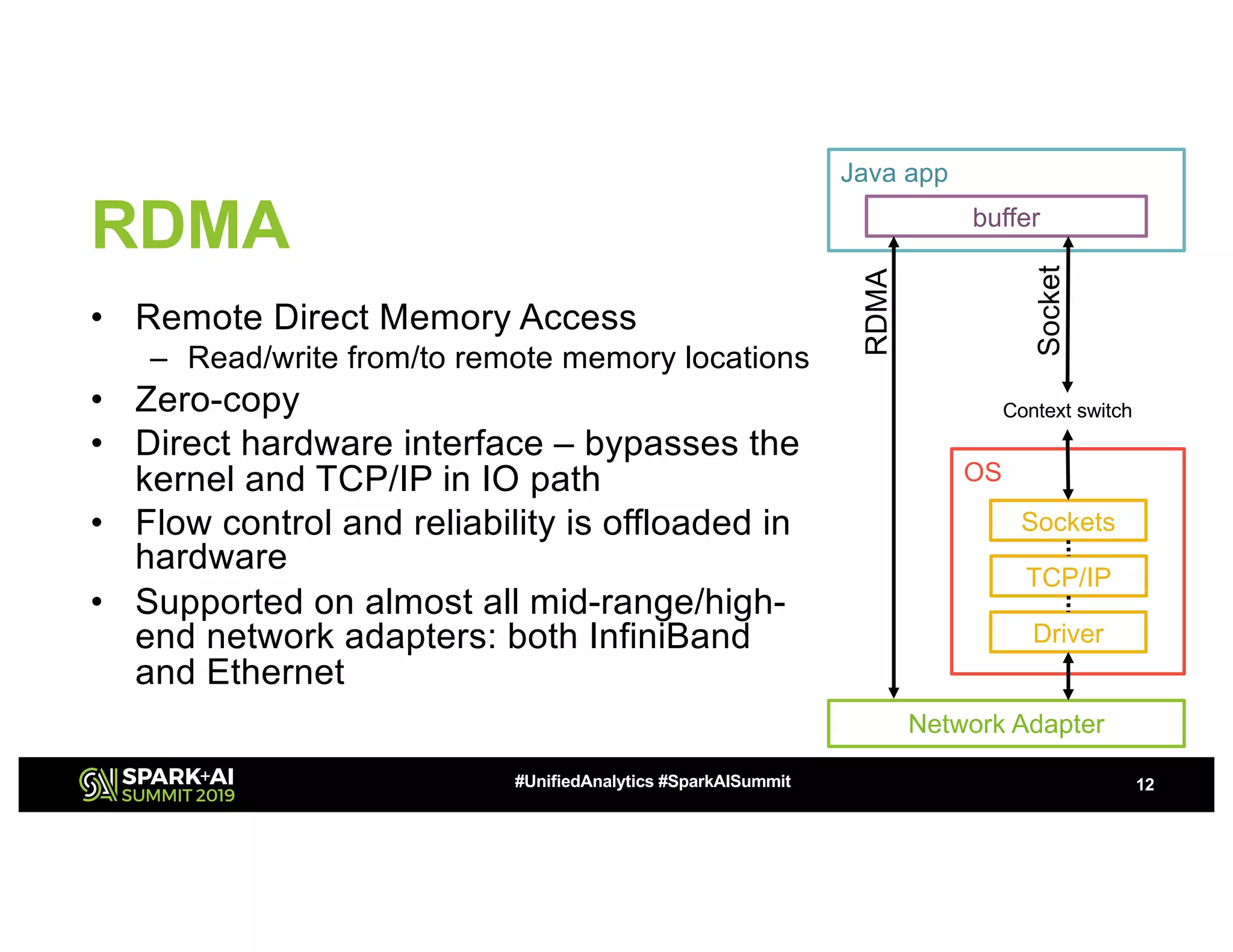

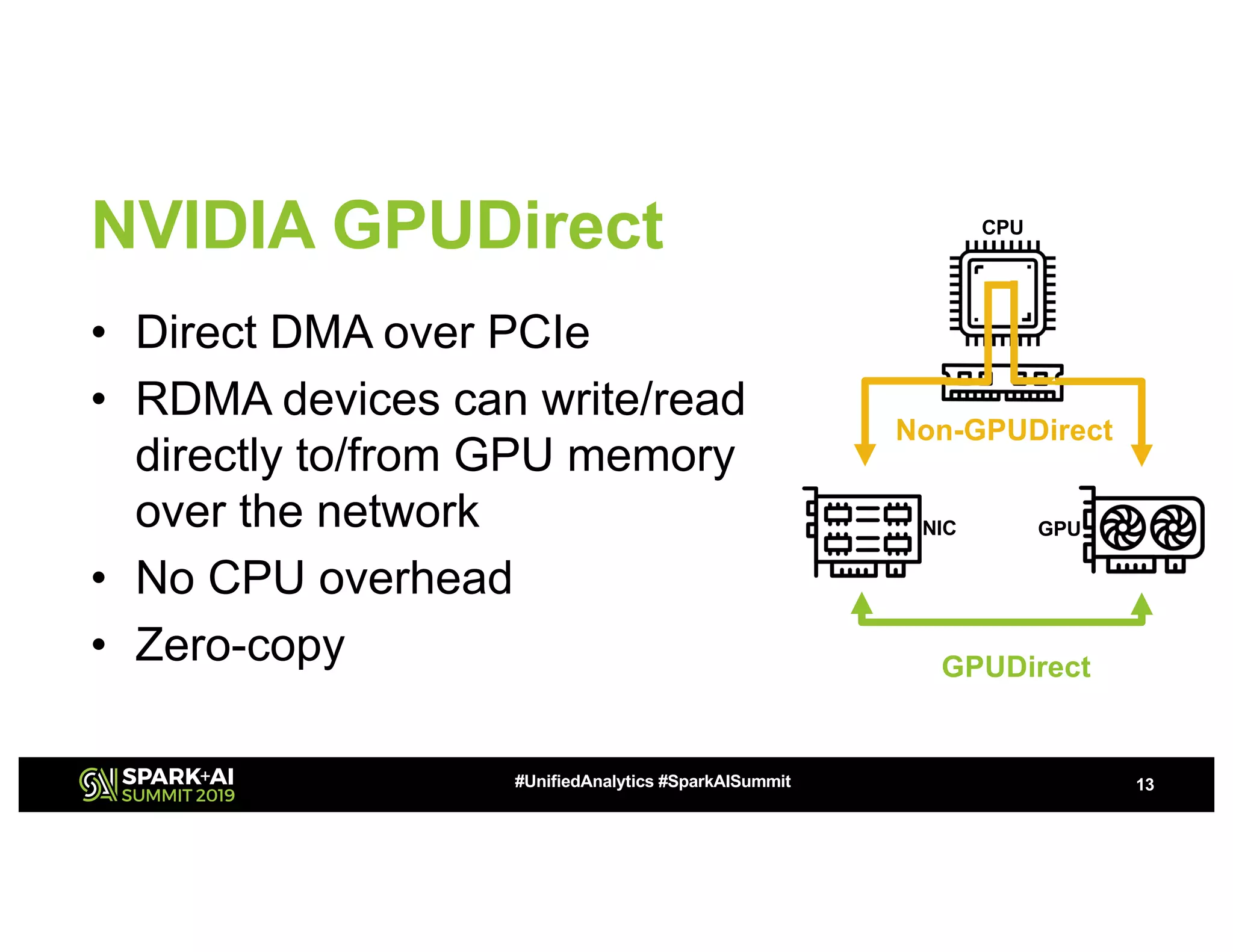

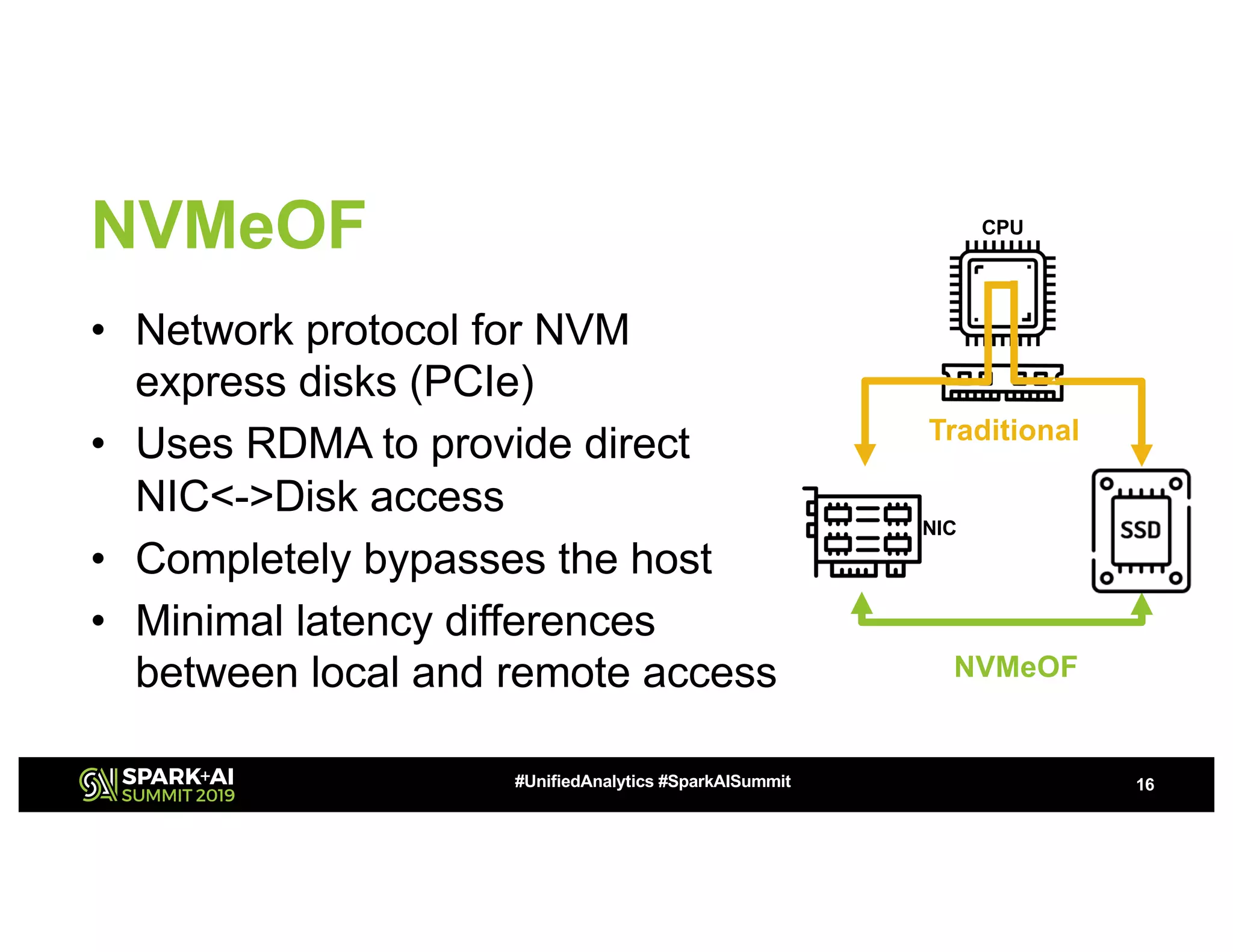

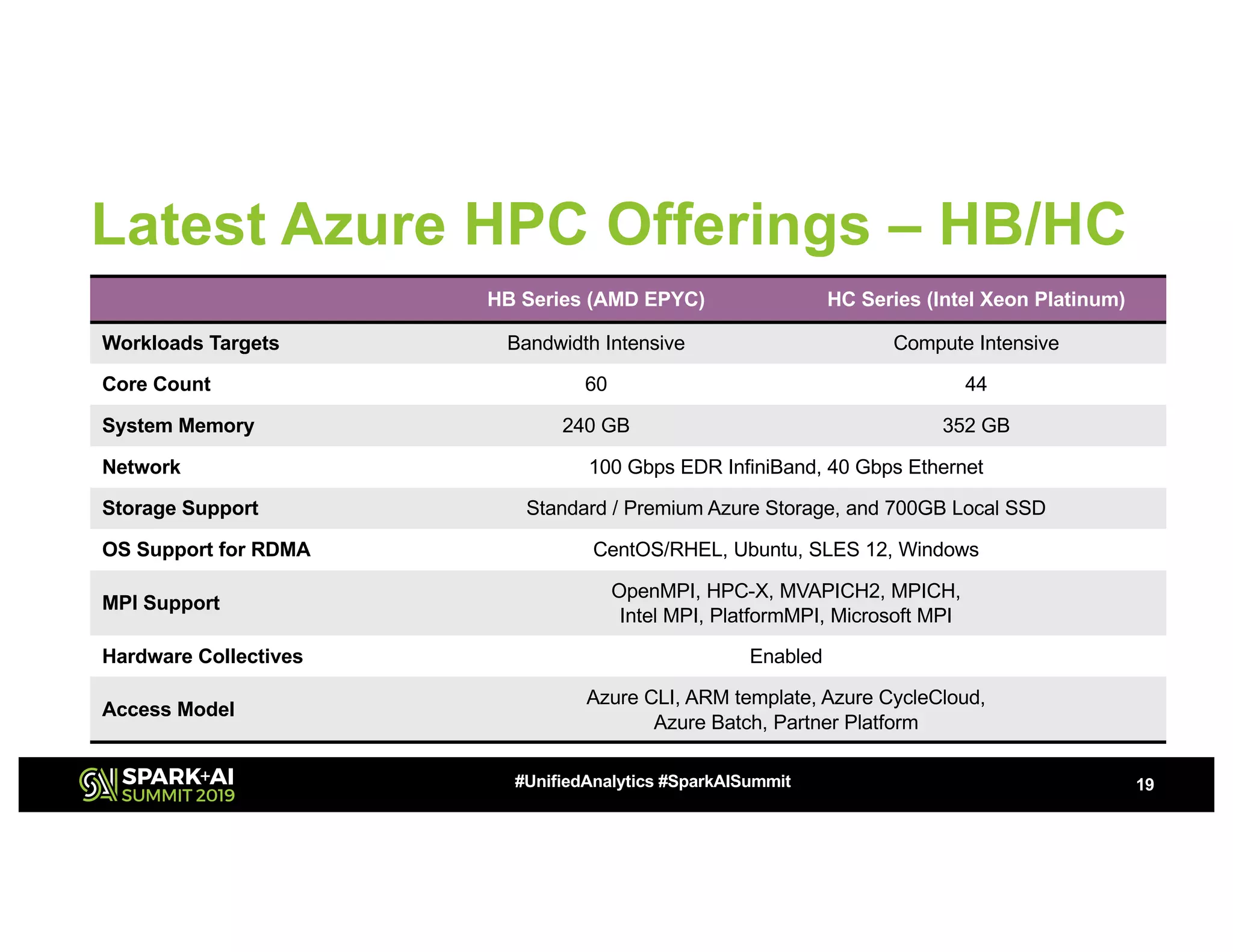

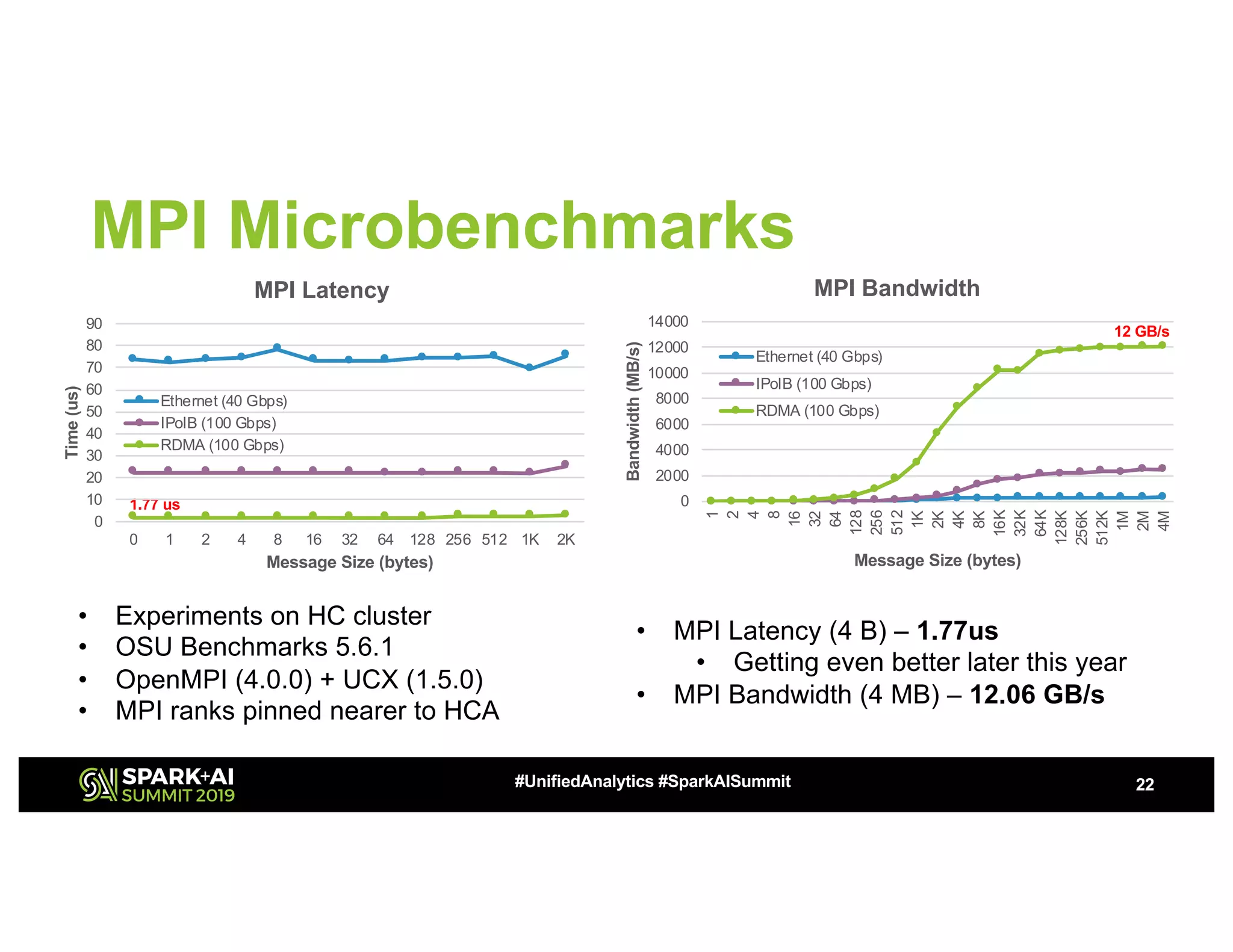

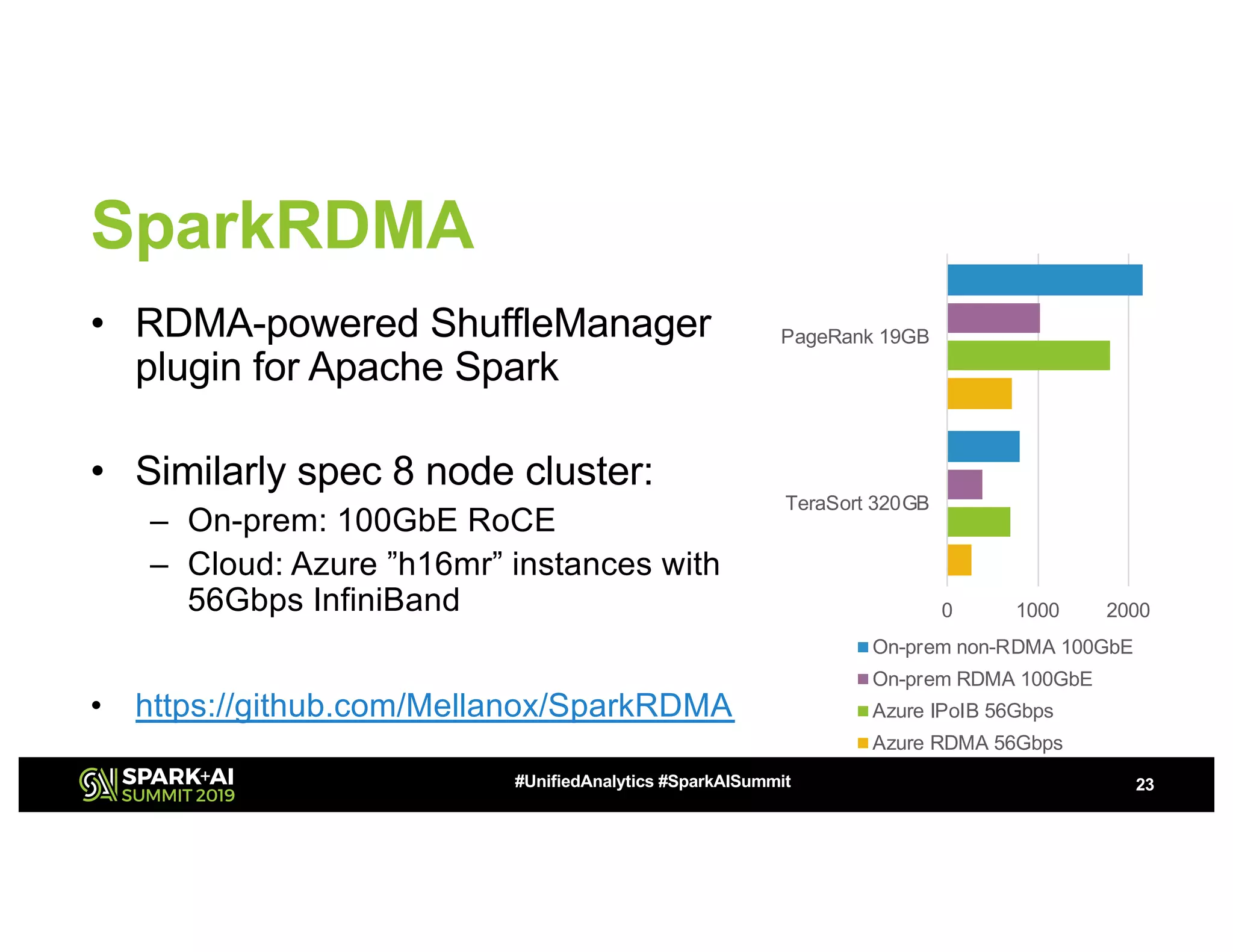

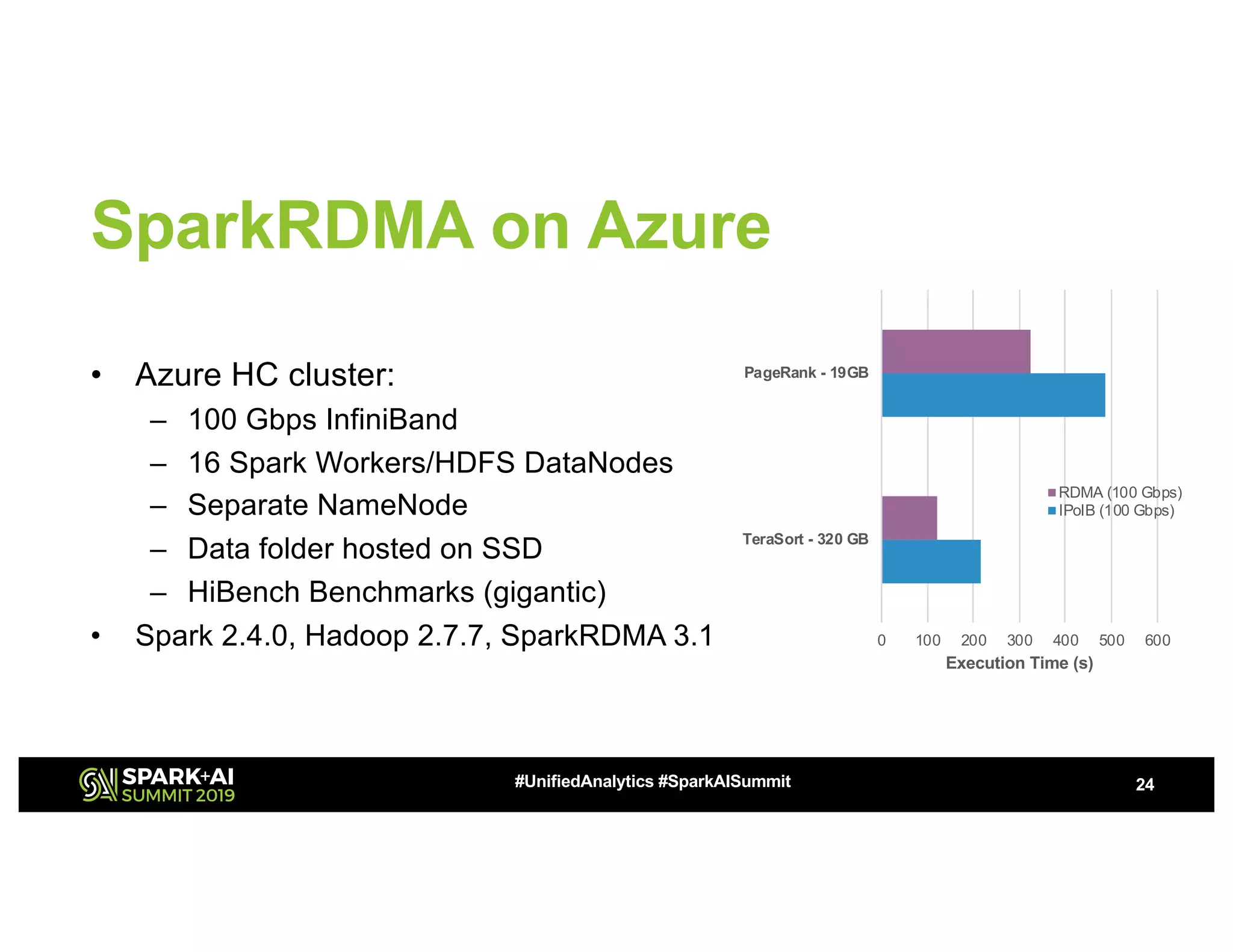

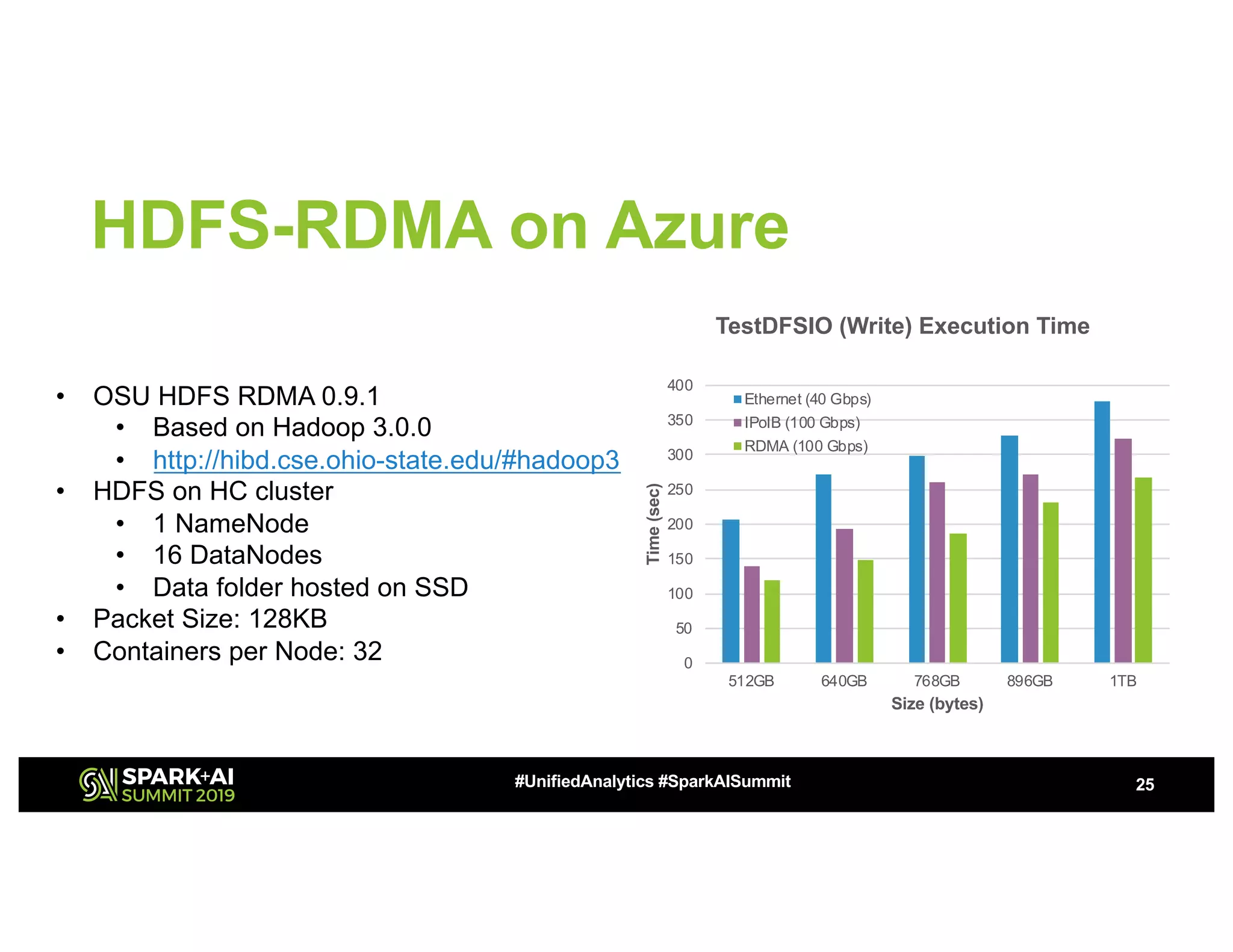

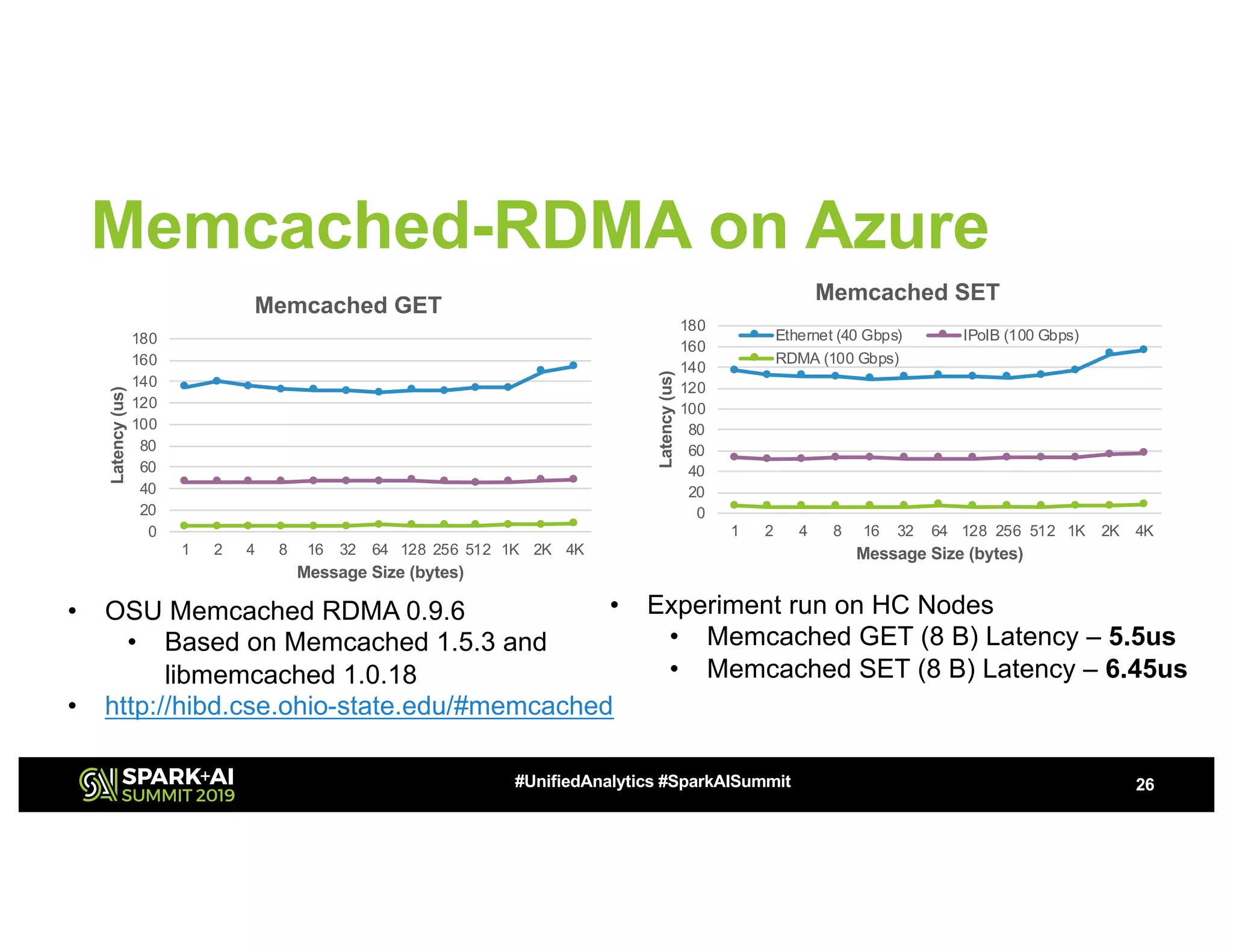

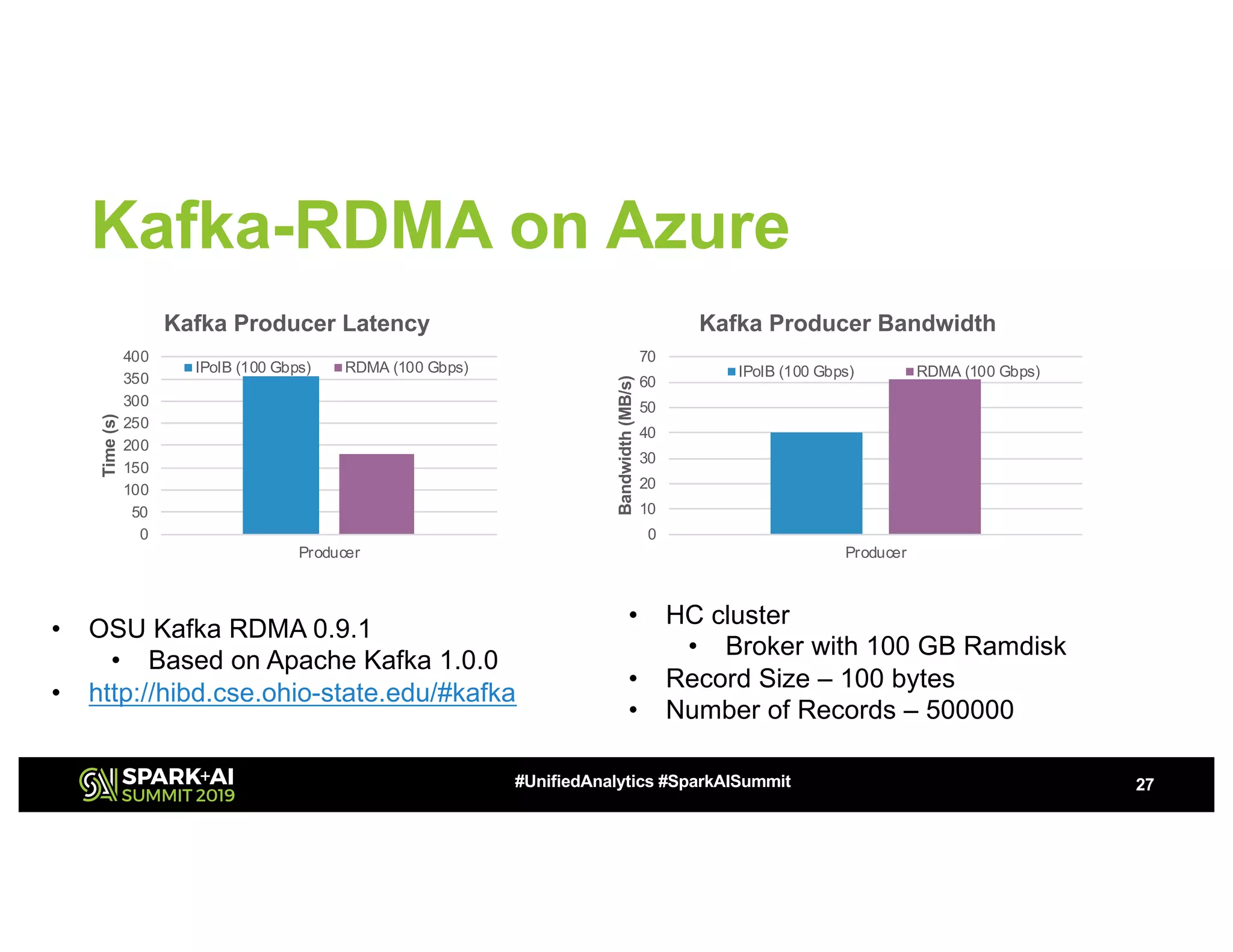

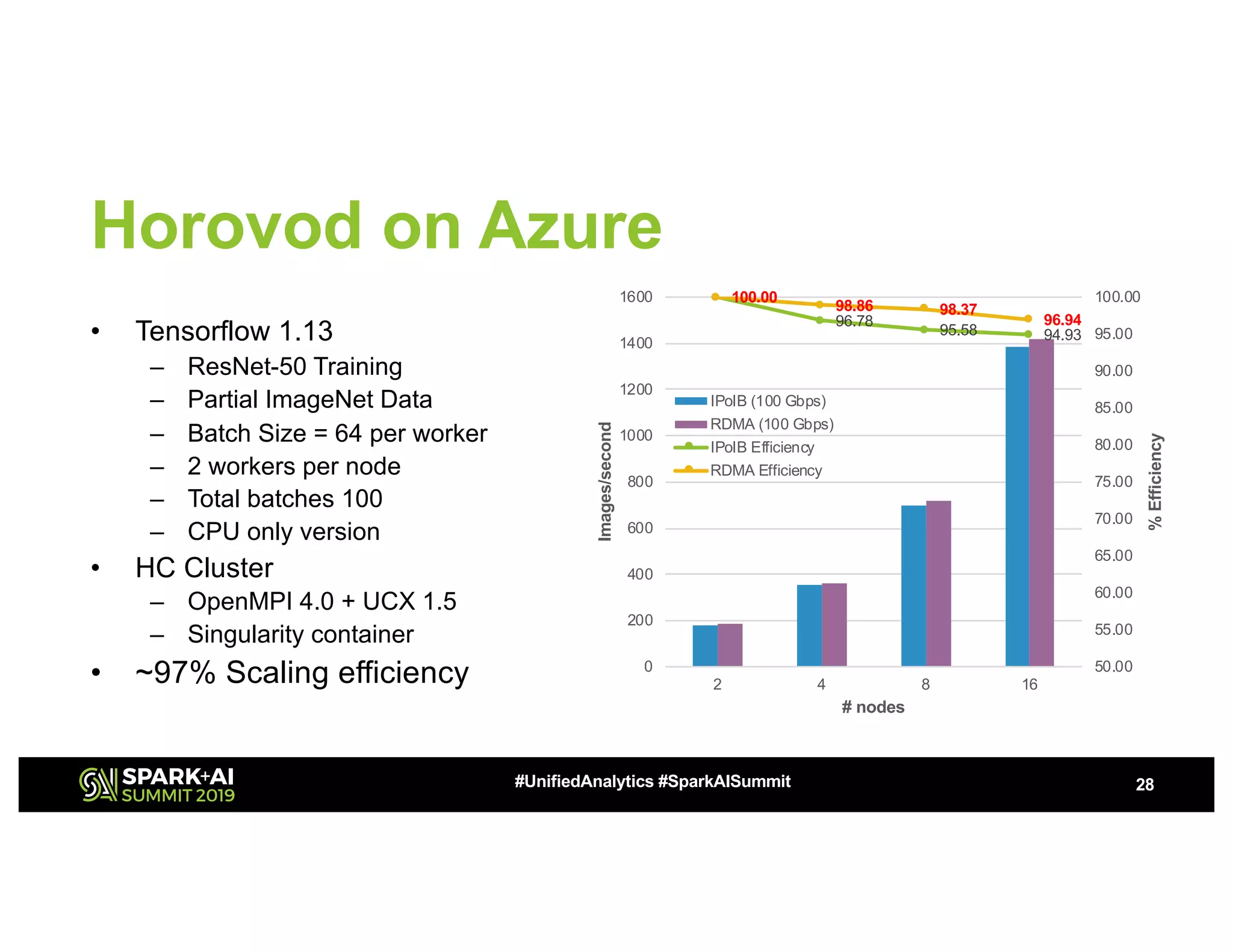

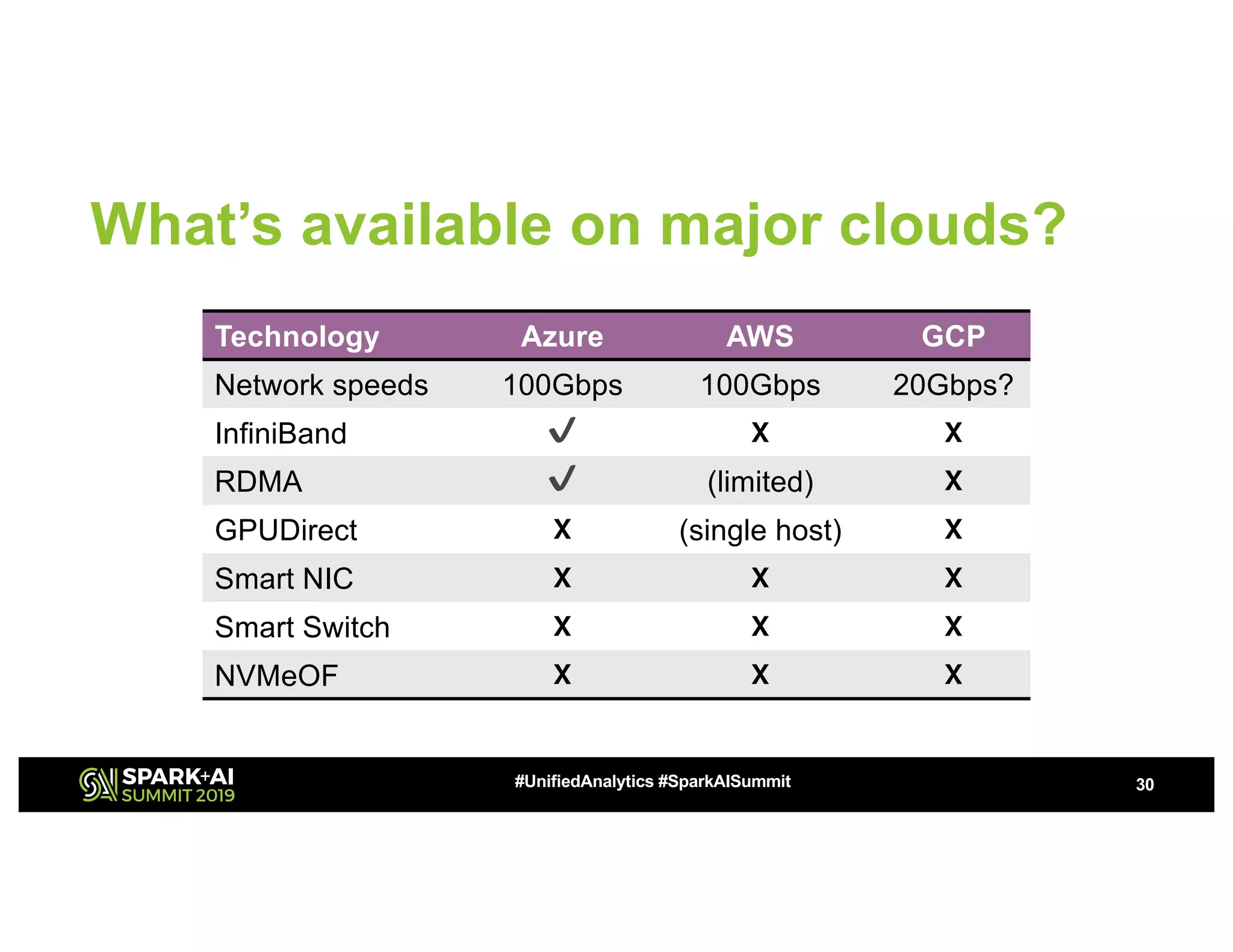

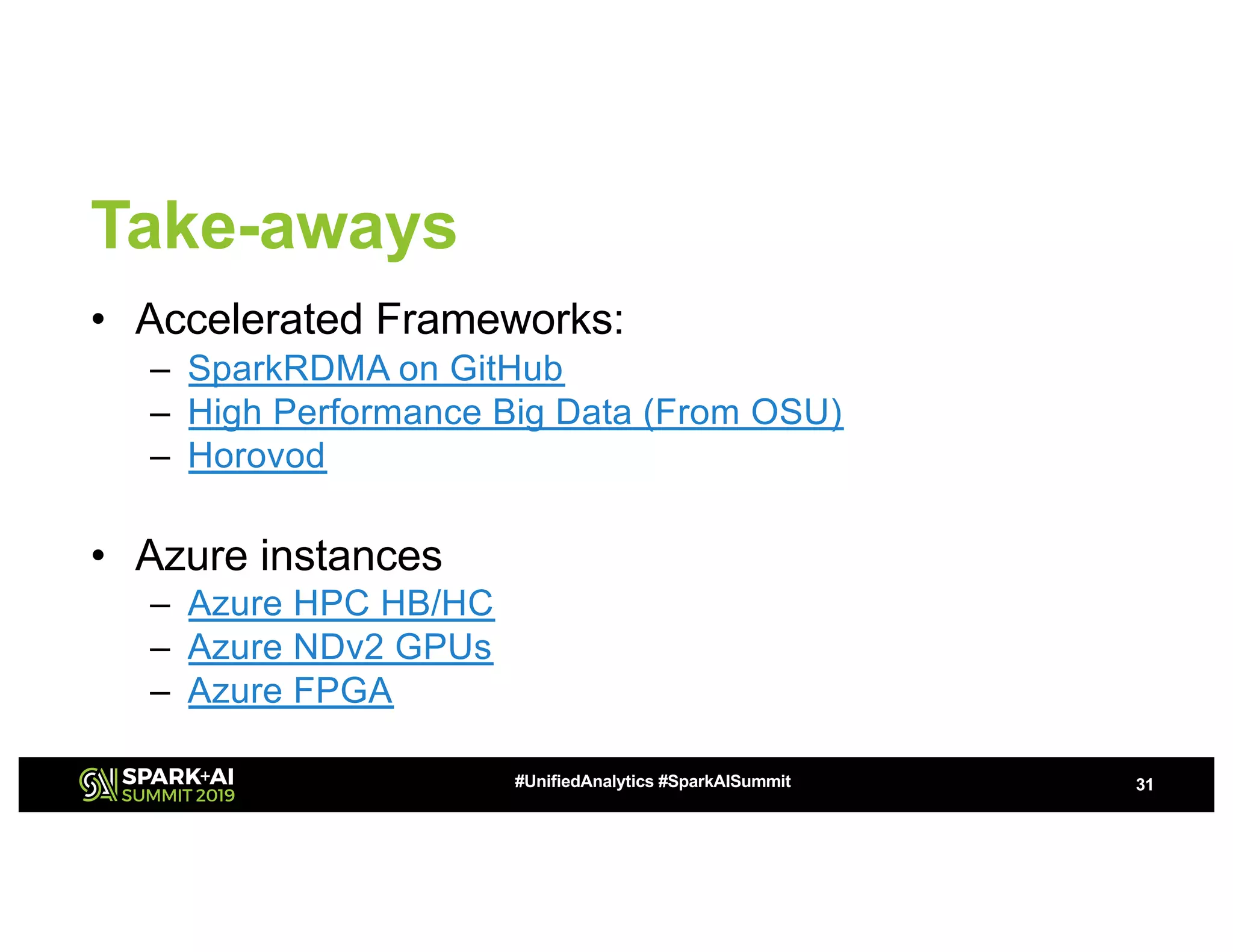

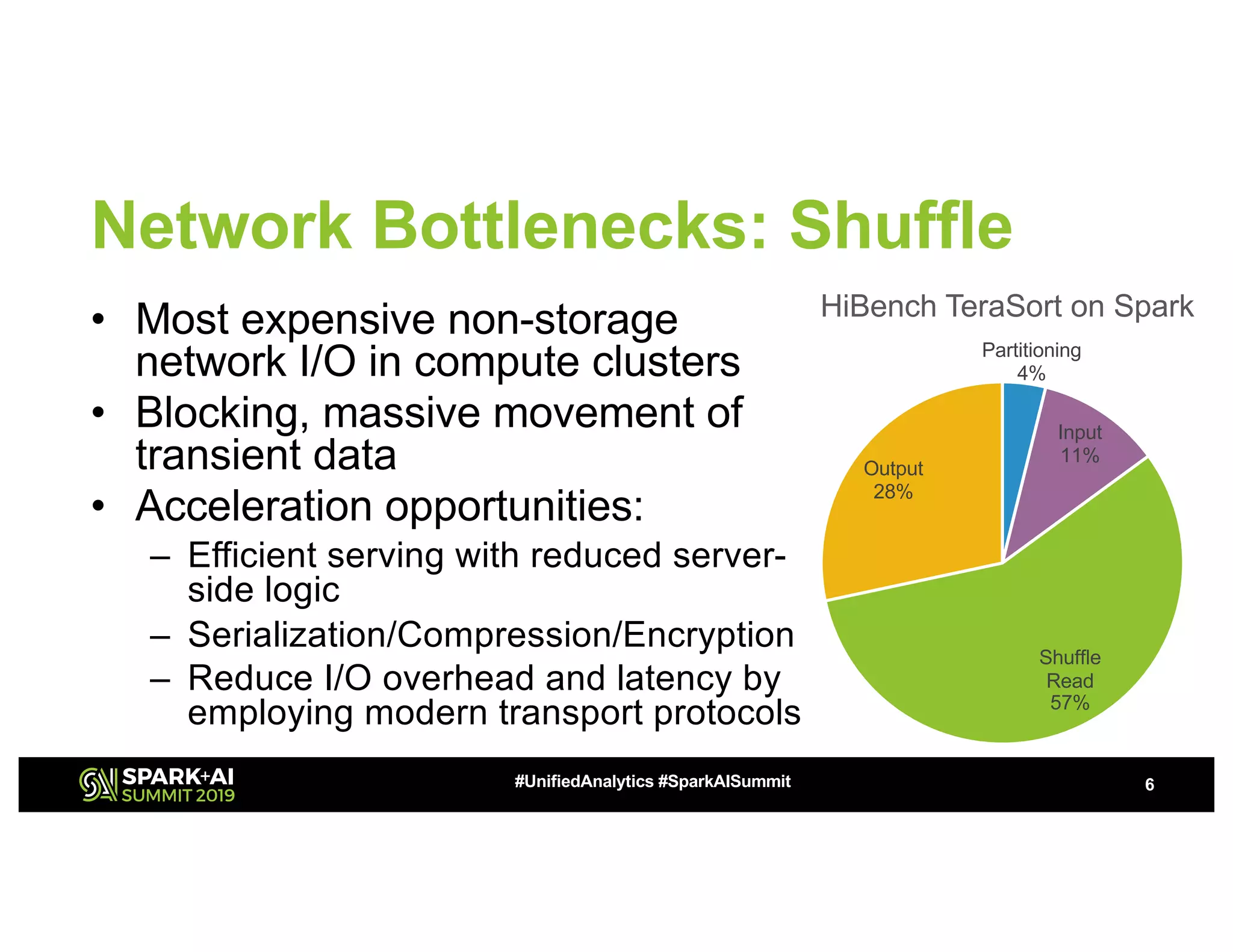

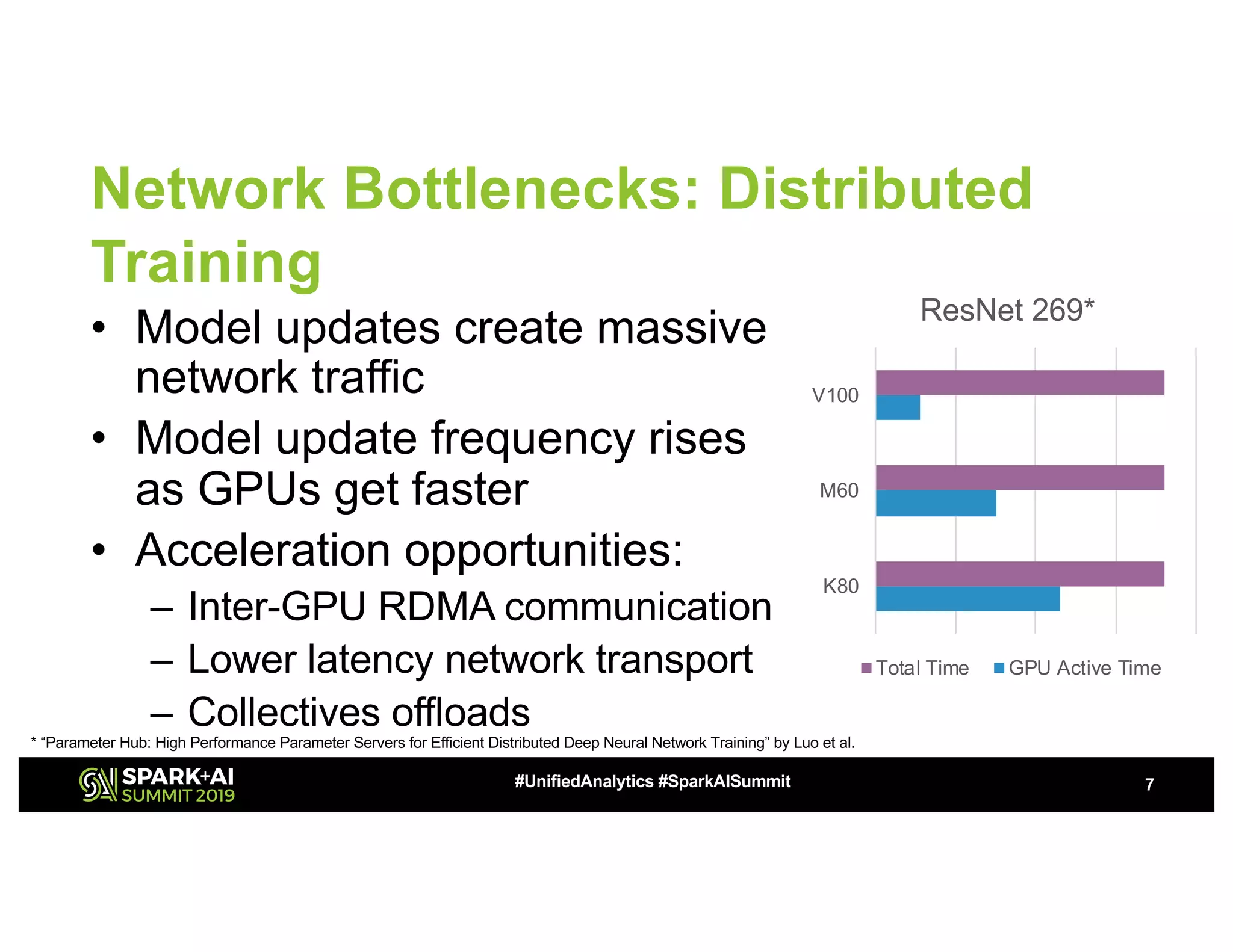

The document discusses the challenges of network bottlenecks in compute clusters and how hardware acceleration can address these issues to improve performance. It highlights technologies such as RDMA, GPUDirect, and NVMe over Fabrics, along with their potential to reduce latency and overhead. The document also presents Azure's HPC offerings and the benefits of accelerated frameworks for big data applications.

Speeds

• 1, 10, 25, 40, 100, 200 Gbps

• A faster network doesn’t necessarily mean a faster runtime.

• Many workloads consist of relatively short bursts rather than sustained throughput, so higher bandwidth may not have any effect.

![Effect of network speed on workload runtime* (Flink TeraSort, Flink PageRank, PowerGraph PageRank, Timely PageRank; 1GbE, 10GbE, 40GbE)](https://image.slidesharecdn.com/072020yuvaldeganijithinjose-190508203942/75/Tackling-Network-Bottlenecks-with-Hardware-Accelerations-Cloud-vs-On-Premise-10-2048.jpg)

\* “On The [Ir]relevance of Network Performance for Data Processing” by Trivedi et al.

#UnifiedAnalytics #SparkAISummit
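The slide’s point that extra bandwidth helps little when traffic arrives in short bursts can be illustrated with a simple cost model. The sketch below is a hypothetical back-of-the-envelope model, not the methodology of Trivedi et al.: it assumes each message pays a fixed per-message latency plus serialization time (bytes / bandwidth), and that compute and communication do not overlap. The function names and all workload numbers are made up for illustration.

```python
"""Back-of-the-envelope model: why more bandwidth may not shorten runtime.

Hypothetical model (an assumption, not taken from the slide or the cited paper):
    runtime = compute_time + messages * (latency + message_bytes / bandwidth)
"""

GBIT = 1e9  # bits per second in one Gbps


def transfer_time(message_bytes: float, bandwidth_gbps: float, latency_s: float) -> float:
    """Time to move one message: fixed latency plus serialization time on the wire."""
    return latency_s + (message_bytes * 8) / (bandwidth_gbps * GBIT)


def job_runtime(compute_s: float, messages: int, message_bytes: float,
                bandwidth_gbps: float, latency_s: float) -> float:
    """Total runtime, assuming compute and communication never overlap."""
    return compute_s + messages * transfer_time(message_bytes, bandwidth_gbps, latency_s)


if __name__ == "__main__":
    # Hypothetical workload: 100 s of compute, 100,000 short 4 KiB bursts,
    # 50 us per-message latency. None of these numbers come from the slide.
    for gbps in (1, 10, 40):
        t = job_runtime(compute_s=100.0, messages=100_000, message_bytes=4096,
                        bandwidth_gbps=gbps, latency_s=50e-6)
        print(f"{gbps:>3} Gbps -> {t:7.2f} s")
```

With these assumed numbers the job takes about 108 s at 1 Gbps and about 105 s at 40 Gbps: a 40x bandwidth increase buys roughly 3%, because compute and per-message latency dominate. Increasing `message_bytes` toward large, sustained transfers makes bandwidth matter much more.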